Istio Week 9 Summary

☸️ kind: Deploy k8s (1.32.2)

1. Create the Kind cluster (1 control plane + 2 workers)

kind create cluster --name myk8s --image kindest/node:v1.32.2 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000 # Sample Application
    hostPort: 30000
  - containerPort: 30001 # Prometheus
    hostPort: 30001
  - containerPort: 30002 # Grafana
    hostPort: 30002
  - containerPort: 30003 # Kiali
    hostPort: 30003
  - containerPort: 30004 # Tracing
    hostPort: 30004
  - containerPort: 30005 # kube-ops-view
    hostPort: 30005
- role: worker
- role: worker
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24
EOF

✅ Output

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.32.2) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Have a nice day! 👋

2. Check the cluster node status

docker ps

✅ Output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

22747018eabb kindest/node:v1.32.2 "/usr/local/bin/entr…" About a minute ago Up About a minute 0.0.0.0:30000-30005->30000-30005/tcp, 127.0.0.1:44959->6443/tcp myk8s-control-plane

42a8f93275a7 kindest/node:v1.32.2 "/usr/local/bin/entr…" About a minute ago Up About a minute myk8s-worker

0476037a89b7 kindest/node:v1.32.2 "/usr/local/bin/entr…" About a minute ago Up About a minute myk8s-worker2

3. Install essential utilities on each node

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'apt update && apt install tree psmisc lsof ipset wget bridge-utils net-tools dnsutils tcpdump ngrep iputils-ping git vim -y'; echo; done

✅ Output

...

Setting up bind9-libs:amd64 (1:9.18.33-1~deb12u2) ...

Setting up openssh-client (1:9.2p1-2+deb12u6) ...

Setting up libxext6:amd64 (2:1.3.4-1+b1) ...

Setting up dbus-daemon (1.14.10-1~deb12u1) ...

Setting up libnet1:amd64 (1.1.6+dfsg-3.2) ...

Setting up libpcap0.8:amd64 (1.10.3-1) ...

Setting up dbus (1.14.10-1~deb12u1) ...

invoke-rc.d: policy-rc.d denied execution of start.

/usr/sbin/policy-rc.d returned 101, not running 'start dbus.service'

Setting up libgdbm-compat4:amd64 (1.23-3) ...

Setting up xauth (1:1.1.2-1) ...

Setting up bind9-host (1:9.18.33-1~deb12u2) ...

Setting up libperl5.36:amd64 (5.36.0-7+deb12u2) ...

Setting up tcpdump (4.99.3-1) ...

Setting up ngrep (1.47+ds1-5+b1) ...

Setting up perl (5.36.0-7+deb12u2) ...

Setting up bind9-dnsutils (1:9.18.33-1~deb12u2) ...

Setting up dnsutils (1:9.18.33-1~deb12u2) ...

Setting up liberror-perl (0.17029-2) ...

Setting up git (1:2.39.5-0+deb12u2) ...

Processing triggers for libc-bin (2.36-9+deb12u9) ...

4. List the Docker networks (including kind)

docker network ls

✅ Output

NETWORK ID NAME DRIVER SCOPE

64b0df261790 bridge bridge local

bb4d74152d4a host host local

dbf072d0a217 kind bridge local

056dcb2c01d1 none null local

5. Inspect the kind bridge network

docker inspect kind

✅ Output

[
    {
        "Name": "kind",
        "Id": "dbf072d0a217f53e0b62f42cee01bcecc1b2f6ea216475178db001f2e38681f5",
        "Created": "2025-01-26T16:18:22.33980443+09:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv4": true,
        "EnableIPv6": true,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "172.18.0.0/16",
                    "Gateway": "172.18.0.1"
                },
                {
                    "Subnet": "fc00:f853:ccd:e793::/64",
                    "Gateway": "fc00:f853:ccd:e793::1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "0476037a89b7c29dff4584506215eb077af038d807d1b2c1463fb7e61cef8910": {
                "Name": "myk8s-worker2",
                "EndpointID": "a0bdda25e785e7d2c25d5f7bbcd62f16f929b210e314c53b42b9cee8e12e6744",
                "MacAddress": "be:c2:93:3b:fb:74",
                "IPv4Address": "172.18.0.2/16",
                "IPv6Address": "fc00:f853:ccd:e793::2/64"
            },
            "22747018eabbc4550d68aa51b861613774b827b03baa9b720d362747c0a3ea86": {
                "Name": "myk8s-control-plane",
                "EndpointID": "afca91598beaa690677283b0d489084cd3a469e19b32a8ac08b4224ff5d224be",
                "MacAddress": "06:27:aa:c2:4b:88",
                "IPv4Address": "172.18.0.3/16",
                "IPv6Address": "fc00:f853:ccd:e793::3/64"
            },
            "42a8f93275a7454296d00ea74e7f33414893d5f22ded2c33882236ebf08ead5c": {
                "Name": "myk8s-worker",
                "EndpointID": "f33db179a60ae6554c546f8b597ec2fc35499ebf3b8e559b1da17f5deab38db9",
                "MacAddress": "22:57:10:81:c9:8e",
                "IPv4Address": "172.18.0.4/16",
                "IPv6Address": "fc00:f853:ccd:e793::4/64"
            }
        },
        "Options": {
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]
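The kind bridge uses 172.18.0.0/16, while the cluster config in step 1 requested podSubnet 10.10.0.0/16 and serviceSubnet 10.200.1.0/24. These ranges should not overlap, or routing between nodes, Pods and Services would likely become ambiguous. A minimal bash sketch for checking that; `ip_to_int`, `cidr_range` and `cidr_overlap` are hypothetical helper names, and only IPv4 is handled (the IPv6 subnet fc00:f853:ccd:e793::/64 is ignored).

```shell
#!/usr/bin/env bash
# Hypothetical IPv4 CIDR helpers (not part of kind or Docker).

# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range NET/PREFIX -> "<first> <last>" addresses of the block as integers
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local mask first
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( $(ip_to_int "$ip") & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidr_overlap CIDR_A CIDR_B -> exit status 0 if the blocks share any address
cidr_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  (( a1 <= b2 && b1 <= a2 ))
}
```

For example, `cidr_overlap 10.10.0.0/16 172.18.0.0/16 || echo "no overlap"` prints `no overlap` for the values used in this lab; the same call with 10.200.1.0/24 behaves the same way.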

6. Start the test container mypc

docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity

# Result

2046c5ac8dd06893ec7f67033e1a4278481ef1e14fb8a3ec1f487db2714d8cb2

7. List all containers

docker ps

✅ Output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2046c5ac8dd0 nicolaka/netshoot "sleep infinity" 20 seconds ago Up 17 seconds mypc

22747018eabb kindest/node:v1.32.2 "/usr/local/bin/entr…" 6 minutes ago Up 6 minutes 0.0.0.0:30000-30005->30000-30005/tcp, 127.0.0.1:44959->6443/tcp myk8s-control-plane

42a8f93275a7 kindest/node:v1.32.2 "/usr/local/bin/entr…" 6 minutes ago Up 6 minutes myk8s-worker

0476037a89b7 kindest/node:v1.32.2 "/usr/local/bin/entr…" 6 minutes ago Up 6 minutes myk8s-worker2

8. Check container IPs on the kind network

docker ps -q | xargs docker inspect --format '{{.Name}} {{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}'

✅ Output

/mypc 172.18.0.100

/myk8s-control-plane 172.18.0.3

/myk8s-worker 172.18.0.4

/myk8s-worker2 172.18.0.2
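Every address above should fall inside the kind subnet 172.18.0.0/16, and the static IP given to mypc (172.18.0.100) must not collide with an address Docker assigned to a node. A sketch of a checker for these `name ip` lines; `check_ips`, `ip_in_cidr` and `ip_to_int` are hypothetical helpers, the sketch assumes IPv4 and one network per container (a container on several networks would print concatenated IPs), and it needs bash 4+ for the associative array.

```shell
#!/usr/bin/env bash
# Hypothetical checker for "name ip" lines (IPv4, one network per container).

ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NET/PREFIX -> exit status 0 if IP lies inside the block
ip_in_cidr() {
  local net=${2%/*} prefix=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# check_ips NET/PREFIX < lines -> prints NOIP/OUTSIDE/DUPLICATE findings,
# exit status 1 if any finding was printed
check_ips() {
  local cidr=$1 name ip rc=0
  declare -A seen=()
  while read -r name ip; do
    [ -z "$name" ] && continue
    if [ -z "$ip" ]; then
      echo "NOIP $name"
      rc=1
      continue
    fi
    if ! ip_in_cidr "$ip" "$cidr"; then
      echo "OUTSIDE $name $ip"
      rc=1
    fi
    if [ -n "${seen[$ip]:-}" ]; then
      echo "DUPLICATE $name $ip (also ${seen[$ip]})"
      rc=1
    fi
    seen[$ip]=$name
  done
  return "$rc"
}
```

Usage: `docker ps -q | xargs docker inspect --format '{{.Name}} {{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' | check_ips 172.18.0.0/16` prints nothing and exits 0 for the four containers listed above.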

🧩 Deploy MetalLB

1. Apply the MetalLB manifest

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.9/config/manifests/metallb-native.yaml

✅ Output

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

configmap/metallb-excludel2 created

secret/metallb-webhook-cert created

service/metallb-webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

2. List the MetalLB CRDs

kubectl get crd

✅ Output

NAME CREATED AT

bfdprofiles.metallb.io 2025-06-07T07:18:05Z

bgpadvertisements.metallb.io 2025-06-07T07:18:05Z

bgppeers.metallb.io 2025-06-07T07:18:05Z

communities.metallb.io 2025-06-07T07:18:05Z

ipaddresspools.metallb.io 2025-06-07T07:18:05Z

l2advertisements.metallb.io 2025-06-07T07:18:05Z

servicel2statuses.metallb.io 2025-06-07T07:18:05Z

3. Check the MetalLB Pod status

kubectl get pod -n metallb-system

✅ Output

NAME READY STATUS RESTARTS AGE

controller-bb5f47665-29lwc 1/1 Running 0 53s

speaker-f7qvl 1/1 Running 0 53s

speaker-hcfq8 1/1 Running 0 53s

speaker-lr429 1/1 Running 0 53s
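All four Pods are 1/1 Running: one controller replica plus one speaker Pod per node. It is worth confirming this before the next step, because the IPAddressPool/L2Advertisement apply goes through the MetalLB validating webhook served by the controller and will likely be rejected while that Pod is not ready. A sketch that scans `kubectl get pod` output; `not_ready_pods` is a hypothetical name, and the default columns (NAME READY STATUS RESTARTS AGE, with a header row) are assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pod` table output on stdin and prints
# the name of every Pod that is not fully ready or not in the Running phase.
# Assumes the default columns NAME READY STATUS RESTARTS AGE with a header row.
# Exit status is 1 if any such Pod is found.

not_ready_pods() {
  awk 'NR > 1 && NF > 0 {
    split($2, r, "/")
    if (r[1] != r[2] || $3 != "Running") { print $1; bad = 1 }
  }
  END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get pod -n metallb-system | not_ready_pods && echo "MetalLB ready"`. Pods of completed Jobs would also be reported, since their STATUS is not Running. If you only need to block until readiness, `kubectl wait -n metallb-system --for=condition=ready pod --all --timeout=90s` does the same job without any parsing.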

4. Apply the IPAddressPool and L2Advertisement resources

cat << EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
  - 172.18.255.201-172.18.255.220
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
  - default
EOF

✅ Output

ipaddresspool.metallb.io/default created

l2advertisement.metallb.io/default created
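The pool 172.18.255.201-172.18.255.220 sits inside the kind bridge subnet 172.18.0.0/16. In L2 mode the MetalLB speakers answer ARP for these addresses on that bridge, so hosts attached to it (such as mypc) can reach them, and taking them from the top of the subnet keeps them clear of the low addresses Docker hands to containers in practice. A sketch of a range check; `pool_check` is a hypothetical helper, IPv4 only, and it handles only the start-end form (MetalLB also accepts CIDR entries under `addresses`).

```shell
#!/usr/bin/env bash
# Hypothetical sanity check for a MetalLB "start-end" address range (IPv4 only).

ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# pool_check START-END NET/PREFIX -> prints the number of addresses in the range;
# returns 1 without output if the range is reversed or not fully inside the block
pool_check() {
  local start=${1%-*} end=${1#*-}
  local net=${2%/*} prefix=${2#*/}
  local s e n mask
  s=$(ip_to_int "$start")
  e=$(ip_to_int "$end")
  n=$(ip_to_int "$net")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( s <= e )) || return 1
  (( (s & mask) == (n & mask) && (e & mask) == (n & mask) )) || return 1
  echo $(( e - s + 1 ))
}
```

For this lab, `pool_check 172.18.255.201-172.18.255.220 172.18.0.0/16` prints `20`, i.e. up to 20 LoadBalancer Services can receive an external IP from this pool.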

5. Verify the IPAddressPool and L2Advertisement

kubectl get IPAddressPool,L2Advertisement -A

✅ Output

NAMESPACE NAME AUTO ASSIGN AVOID BUGGY IPS ADDRESSES

metallb-system ipaddresspool.metallb.io/default true false ["172.18.255.201-172.18.255.220"]

NAMESPACE NAME IPADDRESSPOOLS IPADDRESSPOOL SELECTORS INTERFACES

metallb-system l2advertisement.metallb.io/default ["default"]

🚀 Install Istio 1.26.0: Ambient profile

1. Enter myk8s-control-plane and install istioctl

docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/# export ISTIOV=1.26.0

echo 'export ISTIOV=1.26.0' >> /root/.bashrc

curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV sh -

cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl

istioctl version --remote=false

✅ Output

Downloading istio-1.26.0 from https://github.com/istio/istio/releases/download/1.26.0/istio-1.26.0-linux-amd64.tar.gz ...

Istio 1.26.0 download complete!

The Istio release archive has been downloaded to the istio-1.26.0 directory.

To configure the istioctl client tool for your workstation,

add the /istio-1.26.0/bin directory to your environment path variable with:

export PATH="$PATH:/istio-1.26.0/bin"

Begin the Istio pre-installation check by running:

istioctl x precheck

Try Istio in ambient mode

https://istio.io/latest/docs/ambient/getting-started/

Try Istio in sidecar mode

https://istio.io/latest/docs/setup/getting-started/

Install guides for ambient mode

https://istio.io/latest/docs/ambient/install/

Install guides for sidecar mode

https://istio.io/latest/docs/setup/install/

Need more information? Visit https://istio.io/latest/docs/

client version: 1.26.0
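The download script only unpacks istioctl into istio-1.26.0/bin; copying it to /usr/local/bin is what puts it on PATH. A sketch that confirms the binary on PATH matches `$ISTIOV`, assuming the `client version: X` line format shown above; `check_istioctl_version` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compares the "client version:" line printed by
# `istioctl version --remote=false` (read from stdin) with an expected version.

check_istioctl_version() {
  local expected=$1 actual
  actual=$(awk -F': *' '/^client version:/ { print $2; exit }')
  if [ "$actual" = "$expected" ]; then
    echo "ok $actual"
  else
    echo "mismatch: expected $expected, got ${actual:-none}" >&2
    return 1
  fi
}
```

Inside the control-plane shell, `istioctl version --remote=false | check_istioctl_version "$ISTIOV"` should print `ok 1.26.0` for this setup.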

2. Install Istio with the ambient profile

root@myk8s-control-plane:/# istioctl install --set profile=ambient --set meshConfig.accessLogFile=/dev/stdout --skip-confirmation

✅ Output

        |\
        | \
        |  \
        |   \
      /||    \
     / ||     \
    /  ||      \
   /   ||       \
  /    ||        \
 /     ||         \
/______||__________\
____________________
  \__       _____/
     \_____/

✔ Istio core installed ⛵️

✔ Istiod installed 🧠

✔ CNI installed 🪢

✔ Ztunnel installed 🔒

✔ Installation complete

The ambient profile has been installed successfully, enjoy Istio without sidecars!

3. Install the Kubernetes Gateway API CRDs

root@myk8s-control-plane:/# kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \

kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.3.0/standard-install.yaml

✅ Output

customresourcedefinition.apiextensions.k8s.io/gatewayclasses.gateway.networking.k8s.io created

customresourcedefinition.apiextensions.k8s.io/gateways.gateway.networking.k8s.io created

customresourcedefinition.apiextensions.k8s.io/grpcroutes.gateway.networking.k8s.io created

customresourcedefinition.apiextensions.k8s.io/httproutes.gateway.networking.k8s.io created

customresourcedefinition.apiextensions.k8s.io/referencegrants.gateway.networking.k8s.io created
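The `kubectl get crd ... || kubectl apply ...` line installs the Gateway API CRDs only when `gateways.gateway.networking.k8s.io` is missing, so re-running the step is harmless. The same check-then-install idiom as a reusable function; `ensure` is a hypothetical name, and because it `eval`s its arguments it should only ever be given trusted strings.

```shell
#!/usr/bin/env bash
# Hypothetical generic form of the "check || install" idiom:
# run CHECK silently; only if it fails, run INSTALL.
# Both arguments are eval'ed, so pass trusted strings only.

ensure() {
  local check=$1 install=$2
  if eval "$check" >/dev/null 2>&1; then
    echo "present: skipping install"
  else
    eval "$install"
  fi
}
```

Usage: `ensure 'kubectl get crd gateways.gateway.networking.k8s.io' 'kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.3.0/standard-install.yaml'`. Note the trade-off: because only one CRD is checked, an older Gateway API release that is already installed would be left as-is rather than upgraded to v1.3.0.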

4. Install the add-ons (Grafana, Jaeger, Kiali, Loki, Prometheus)

root@myk8s-control-plane:/# kubectl apply -f istio-$ISTIOV/samples/addons

✅ Output

serviceaccount/grafana created

configmap/grafana created

service/grafana created

deployment.apps/grafana created

configmap/istio-grafana-dashboards created

configmap/istio-services-grafana-dashboards created

deployment.apps/jaeger created

service/tracing created

service/zipkin created

service/jaeger-collector created

serviceaccount/kiali created

configmap/kiali created

clusterrole.rbac.authorization.k8s.io/kiali created

clusterrolebinding.rbac.authorization.k8s.io/kiali created

service/kiali created

deployment.apps/kiali created

serviceaccount/loki created

configmap/loki created

configmap/loki-runtime created

clusterrole.rbac.authorization.k8s.io/loki-clusterrole created

clusterrolebinding.rbac.authorization.k8s.io/loki-clusterrolebinding created

service/loki-memberlist created

service/loki-headless created

service/loki created

statefulset.apps/loki created

serviceaccount/prometheus created

configmap/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

deployment.apps/prometheus created

5. Exit the control plane shell

root@myk8s-control-plane:/# exit

exit

6. Verify the Istio installation (Gateway CRDs)

kubectl get crd | grep gateways

✅ Output

gateways.gateway.networking.k8s.io 2025-06-07T07:26:54Z

gateways.networking.istio.io 2025-06-07T07:25:24Z

7. Check the Gateway API resources

kubectl api-resources | grep Gateway

✅ Output

gatewayclasses gc gateway.networking.k8s.io/v1 false GatewayClass

gateways gtw gateway.networking.k8s.io/v1 true Gateway

gateways gw networking.istio.io/v1 true Gateway
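Two kinds named Gateway now exist side by side: the Kubernetes Gateway API one (gateway.networking.k8s.io, short name gtw) and Istio's own (networking.istio.io, short name gw). A sketch that lists every kind served by more than one API group from `kubectl api-resources` output; `ambiguous_kinds` is hypothetical and relies on KIND being the last column and APIVERSION the third from last, which holds whether or not the SHORTNAMES column is empty.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl api-resources` lines (header optional)
# and prints "Kind: group1 group2 ..." for every kind served by 2+ API groups.
# KIND = last field, APIVERSION = third field from the end.

ambiguous_kinds() {
  awk '$NF != "KIND" && NF >= 3 {
    kind = $NF
    grp = $(NF - 2)
    sub(/\/.*/, "", grp)
    if (!((kind, grp) in seen)) {
      seen[kind, grp] = 1
      count[kind]++
      groups[kind] = groups[kind] " " grp
    }
  }
  END { for (k in count) if (count[k] > 1) print k ":" groups[k] }'
}
```

Usage: `kubectl api-resources | ambiguous_kinds` lists Gateway here, along with any other duplicated kinds (for example Event, which is served by both the core group and events.k8s.io). When a kind is ambiguous, use the fully qualified resource name, e.g. `kubectl get gateways.gateway.networking.k8s.io -A` versus `kubectl get gateways.networking.istio.io -A`.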

8. Inspect the Istio ConfigMap

kubectl describe cm -n istio-system istio

✅ Output

Name:         istio
Namespace:    istio-system
Labels:       app.kubernetes.io/instance=istio
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=istiod
              app.kubernetes.io/part-of=istio
              app.kubernetes.io/version=1.26.0
              helm.sh/chart=istiod-1.26.0
              install.operator.istio.io/owning-resource=unknown
              install.operator.istio.io/owning-resource-namespace=istio-system
              istio.io/rev=default
              operator.istio.io/component=Pilot
              operator.istio.io/managed=Reconcile
              operator.istio.io/version=1.26.0
              release=istio
Annotations:  <none>

Data
====
mesh:
----
accessLogFile: /dev/stdout
defaultConfig:
  discoveryAddress: istiod.istio-system.svc:15012
  image:
    imageType: distroless
  proxyMetadata:
    ISTIO_META_ENABLE_HBONE: "true"
defaultProviders:
  metrics:
  - prometheus
enablePrometheusMerge: true
rootNamespace: istio-system
trustDomain: cluster.local

meshNetworks:
----
networks: {}

BinaryData
====

Events:  <none>

9. Check ztunnel status

docker exec -it myk8s-control-plane istioctl proxy-status

✅ Output

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

ztunnel-4bls2.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-d7q7f 1.26.0

ztunnel-kczj2.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-d7q7f 1.26.0

ztunnel-wr6pp.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-d7q7f 1.26.0
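All three ztunnel Pods (one per node) are connected to the same istiod and report version 1.26.0. The CDS/LDS/EDS/RDS/ECDS columns read IGNORED, presumably because ztunnel is not an Envoy proxy and does not subscribe to those Envoy xDS types. A sketch that flags version drift after an upgrade; `proxy_version_drift` is a hypothetical helper and assumes VERSION is the last column with a header row present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `istioctl proxy-status` output (with header) and
# prints "name version" for each proxy whose VERSION (last field) differs
# from the expected one. Exit 1 on any mismatch or if no proxy rows are seen.

proxy_version_drift() {
  awk -v want="$1" 'NR > 1 && NF > 0 {
    n++
    if ($NF != want) { print $1 " " $NF; bad = 1 }
  }
  END { exit ((bad || n == 0) ? 1 : 0) }'
}
```

Usage: `docker exec myk8s-control-plane istioctl proxy-status | proxy_version_drift 1.26.0`. The `-it` flags from the original command are dropped on purpose: with `-t` Docker allocates a TTY, lines end in a carriage return, and the last field would no longer compare equal.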

10. Check ztunnel coverage per workload

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

✅ Output

NAMESPACE POD NAME ADDRESS NODE WAYPOINT PROTOCOL

default kubernetes 172.18.0.3 None TCP

istio-system grafana-65bfb5f855-jfmdl 10.10.2.4 myk8s-worker2 None TCP

istio-system istio-cni-node-7kst6 10.10.1.4 myk8s-worker None TCP

istio-system istio-cni-node-dpqsv 10.10.2.2 myk8s-worker2 None TCP

istio-system istio-cni-node-rfx6w 10.10.0.5 myk8s-control-plane None TCP

istio-system istiod-86b6b7ff7-d7q7f 10.10.1.3 myk8s-worker None TCP

istio-system jaeger-868fbc75d7-4lq87 10.10.2.5 myk8s-worker2 None TCP

istio-system kiali-6d774d8bb8-zkx5r 10.10.2.6 myk8s-worker2 None TCP

istio-system loki-0 10.10.2.9 myk8s-worker2 None TCP

istio-system prometheus-689cc795d4-vrlxd 10.10.2.7 myk8s-worker2 None TCP

istio-system ztunnel-4bls2 10.10.0.6 myk8s-control-plane None TCP

istio-system ztunnel-kczj2 10.10.2.3 myk8s-worker2 None TCP

istio-system ztunnel-wr6pp 10.10.1.5 myk8s-worker None TCP

kube-system coredns-668d6bf9bc-k6lf9 10.10.0.2 myk8s-control-plane None TCP

kube-system coredns-668d6bf9bc-xbtkx 10.10.0.3 myk8s-control-plane None TCP

kube-system etcd-myk8s-control-plane 172.18.0.3 myk8s-control-plane None TCP

kube-system kindnet-g9qmc 172.18.0.2 myk8s-worker2 None TCP

kube-system kindnet-lc2q2 172.18.0.3 myk8s-control-plane None TCP

kube-system kindnet-njcw4 172.18.0.4 myk8s-worker None TCP

kube-system kube-apiserver-myk8s-control-plane 172.18.0.3 myk8s-control-plane None TCP

kube-system kube-controller-manager-myk8s-control-plane 172.18.0.3 myk8s-control-plane None TCP

kube-system kube-proxy-h2qb5 172.18.0.2 myk8s-worker2 None TCP

kube-system kube-proxy-jmfg8 172.18.0.4 myk8s-worker None TCP

kube-system kube-proxy-nswxj 172.18.0.3 myk8s-control-plane None TCP

kube-system kube-scheduler-myk8s-control-plane 172.18.0.3 myk8s-control-plane None TCP

local-path-storage local-path-provisioner-7dc846544d-vzdcv 10.10.0.4 myk8s-control-plane None TCP

metallb-system controller-bb5f47665-29lwc 10.10.1.2 myk8s-worker None TCP

metallb-system speaker-f7qvl 172.18.0.2 myk8s-worker2 None TCP

metallb-system speaker-hcfq8 172.18.0.4 myk8s-worker None TCP

metallb-system speaker-lr429 172.18.0.3 myk8s-control-plane None TCP
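Every workload shows WAYPOINT None and PROTOCOL TCP: ztunnel knows about them, but none is enrolled in the ambient mesh yet. Workloads in a namespace labeled `istio.io/dataplane-mode=ambient` (e.g. `kubectl label namespace default istio.io/dataplane-mode=ambient`) would be listed with HBONE instead. A sketch that tallies the protocol per namespace; `protocol_summary` is hypothetical and relies on PROTOCOL being the last column, because rows with an empty NODE (such as default/kubernetes) have fewer fields.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `istioctl ztunnel-config workload` output (with
# header) and prints "namespace protocol count", sorted by namespace.
# PROTOCOL is taken from the last field since the NODE column may be empty.

protocol_summary() {
  awk 'NR > 1 && NF > 0 { c[$1 " " $NF]++ }
  END { for (k in c) print k, c[k] }' | sort
}
```

Usage: `docker exec myk8s-control-plane istioctl ztunnel-config workload | protocol_summary` (again without `-it`). After enrolling a namespace, its line should switch from TCP to HBONE.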

11. Check ztunnel coverage per service

docker exec -it myk8s-control-plane istioctl ztunnel-config service

✅ Output

NAMESPACE SERVICE NAME SERVICE VIP WAYPOINT ENDPOINTS

default kubernetes 10.200.1.1 None 1/1

istio-system grafana 10.200.1.136 None 1/1

istio-system istiod 10.200.1.163 None 1/1

istio-system jaeger-collector 10.200.1.144 None 1/1

istio-system kiali 10.200.1.133 None 1/1

istio-system loki 10.200.1.200 None 1/1

istio-system loki-headless None 1/1

istio-system loki-memberlist None 1/1

istio-system prometheus 10.200.1.41 None 1/1

istio-system tracing 10.200.1.229 None 1/1

istio-system zipkin 10.200.1.117 None 1/1

kube-system kube-dns 10.200.1.10 None 2/2

metallb-system metallb-webhook-service 10.200.1.89 None 1/1
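Headless services (loki-headless, loki-memberlist) have no SERVICE VIP, which shifts their columns left, so a check that reads only the last column (ENDPOINTS) is more robust than one based on fixed positions. A sketch that reports services whose endpoint ratio is not full; `unhealthy_services` is hypothetical, and it assumes ENDPOINTS reads as healthy/total.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `istioctl ztunnel-config service` output (with
# header) and prints "namespace/service ratio" when the ENDPOINTS ratio in the
# last field (assumed healthy/total) is below 100%. Exit 1 if any are found.

unhealthy_services() {
  awk 'NR > 1 && NF > 0 {
    split($NF, e, "/")
    if (e[1] + 0 < e[2] + 0) { print $1 "/" $2 " " $NF; bad = 1 }
  }
  END { exit (bad ? 1 : 0) }'
}
```

Usage: `docker exec myk8s-control-plane istioctl ztunnel-config service | unhealthy_services` prints nothing and exits 0 for the output above, since every ratio is n/n.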

12. Check the iptables rules on each node

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'iptables-save'; echo; done

✅ Output

node : myk8s-control-plane

# Generated by iptables-save v1.8.9 (nf_tables) on Sat Jun 7 07:33:35 2025

*mangle

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

:KUBE-IPTABLES-HINT - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-PROXY-CANARY - [0:0]

COMMIT

# Completed on Sat Jun 7 07:33:35 2025

# Generated by iptables-save v1.8.9 (nf_tables) on Sat Jun 7 07:33:35 2025

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:KUBE-EXTERNAL-SERVICES - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-PROXY-FIREWALL - [0:0]

:KUBE-SERVICES - [0:0]

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

COMMIT

# Completed on Sat Jun 7 07:33:35 2025

# Generated by iptables-save v1.8.9 (nf_tables) on Sat Jun 7 07:33:35 2025

*nat

:PREROUTING ACCEPT [483:51481]

:INPUT ACCEPT [216:13458]

:OUTPUT ACCEPT [9640:578471]

:POSTROUTING ACCEPT [9749:590198]

:DOCKER_OUTPUT - [0:0]

:DOCKER_POSTROUTING - [0:0]

:ISTIO_POSTRT - [0:0]

:KIND-MASQ-AGENT - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-SEP-2VZBTCTWVROYNW5S - [0:0]

:KUBE-SEP-2XZJVPRY2PQVE3B3 - [0:0]

:KUBE-SEP-56NKIH2AGMF3225M - [0:0]

:KUBE-SEP-57OB7LMKUA4REB6U - [0:0]

:KUBE-SEP-6GODNNVFRWQ66GUT - [0:0]

:KUBE-SEP-AGD4XG6YTTFBC5MP - [0:0]

:KUBE-SEP-BK4SURRAVDQBOPR4 - [0:0]

:KUBE-SEP-BTHPIFJSQR2I7YRG - [0:0]

:KUBE-SEP-EOOOBC4NP4L4Q2B3 - [0:0]

:KUBE-SEP-HYFTSL3KNTBQVM56 - [0:0]

:KUBE-SEP-IHVFB7RO5ADTY6F5 - [0:0]

:KUBE-SEP-IPT6REQVTVHSQECW - [0:0]

:KUBE-SEP-KFUQ2ADNBCFWH5LK - [0:0]

:KUBE-SEP-O7QD6W2Z5ZWUKA37 - [0:0]

:KUBE-SEP-OUCY3KVQXMID5GFB - [0:0]

:KUBE-SEP-QEFWPNF3O63RIU3Y - [0:0]

:KUBE-SEP-QKX4QX54UKWK6JIY - [0:0]

:KUBE-SEP-RT3F6VLY3P67FIV3 - [0:0]

:KUBE-SEP-SDODBYH4DETTTORW - [0:0]

:KUBE-SEP-TMGNMEREWWYSM5SB - [0:0]

:KUBE-SEP-VIIXX4VJ3A6FHLRW - [0:0]

:KUBE-SEP-VQYPEMZ5ZXGF6COE - [0:0]

:KUBE-SEP-X33LYSV5PIJ3PHXQ - [0:0]

:KUBE-SEP-XVHB3NIW2NQLTFP3 - [0:0]

:KUBE-SEP-XWEOB3JN6VI62DQQ - [0:0]

:KUBE-SEP-ZEA5VGCBA2QNA7AK - [0:0]

:KUBE-SERVICES - [0:0]

:KUBE-SVC-A4N66M5KWTPIOJ3M - [0:0]

:KUBE-SVC-CG3LQLBYYHBKATGN - [0:0]

:KUBE-SVC-ERIFXISQEP7F7OF4 - [0:0]

:KUBE-SVC-GZ25SP4UFGF7SAVL - [0:0]

:KUBE-SVC-H7T7T7XMCTV7IA7W - [0:0]

:KUBE-SVC-HHG7U5KRFPWQTOCU - [0:0]

:KUBE-SVC-JD5MR3NA4I4DYORP - [0:0]

:KUBE-SVC-KQQXNCC3T6BSGKIX - [0:0]

:KUBE-SVC-LTFVHWNLEPNOR2EL - [0:0]

:KUBE-SVC-NPX46M4PTMTKRN6Y - [0:0]

:KUBE-SVC-NVNLZVDQSGQUD3NM - [0:0]

:KUBE-SVC-PGVURPSX4NOENZDL - [0:0]

:KUBE-SVC-QI3XYPITNLHPCCQT - [0:0]

:KUBE-SVC-RJIBBGIJBATPMI6Z - [0:0]

:KUBE-SVC-SEST5XGLUQ5J34LB - [0:0]

:KUBE-SVC-TCOU7JCQXEZGVUNU - [0:0]

:KUBE-SVC-TRDPJ2HAZ5FCHNBZ - [0:0]

:KUBE-SVC-VL7ZZ6D5B63AFR4Y - [0:0]

:KUBE-SVC-WHNIZNLB5XFXIX2C - [0:0]

:KUBE-SVC-X2LFBJAZKZE3QCOK - [0:0]

:KUBE-SVC-XHUBMW47Y5G3ICIS - [0:0]

:KUBE-SVC-XJMB3L73YF5UUKWH - [0:0]

:KUBE-SVC-Y3OVZYCKHGYTKGDA - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -d 172.18.0.1/32 -j DOCKER_OUTPUT

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -d 172.18.0.1/32 -j DOCKER_OUTPUT

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -d 172.18.0.1/32 -j DOCKER_POSTROUTING

-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT

-A POSTROUTING -j ISTIO_POSTRT

-A DOCKER_OUTPUT -d 172.18.0.1/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:46183

-A DOCKER_OUTPUT -d 172.18.0.1/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:40395

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 46183 -j SNAT --to-source 172.18.0.1:53

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 40395 -j SNAT --to-source 172.18.0.1:53

-A ISTIO_POSTRT -p tcp -m owner --socket-exists -m set --match-set istio-inpod-probes-v4 dst -j SNAT --to-source 169.254.7.127

-A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN

-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-2VZBTCTWVROYNW5S -s 10.10.2.9/32 -m comment --comment "istio-system/loki:http-metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-2VZBTCTWVROYNW5S -p tcp -m comment --comment "istio-system/loki:http-metrics" -m tcp -j DNAT --to-destination 10.10.2.9:3100

-A KUBE-SEP-2XZJVPRY2PQVE3B3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-2XZJVPRY2PQVE3B3 -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.2:53

-A KUBE-SEP-56NKIH2AGMF3225M -s 10.10.2.7/32 -m comment --comment "istio-system/prometheus:http" -j KUBE-MARK-MASQ

-A KUBE-SEP-56NKIH2AGMF3225M -p tcp -m comment --comment "istio-system/prometheus:http" -m tcp -j DNAT --to-destination 10.10.2.7:9090

-A KUBE-SEP-57OB7LMKUA4REB6U -s 10.10.2.5/32 -m comment --comment "istio-system/jaeger-collector:grpc-otel" -j KUBE-MARK-MASQ

-A KUBE-SEP-57OB7LMKUA4REB6U -p tcp -m comment --comment "istio-system/jaeger-collector:grpc-otel" -m tcp -j DNAT --to-destination 10.10.2.5:4317

-A KUBE-SEP-6GODNNVFRWQ66GUT -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-6GODNNVFRWQ66GUT -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.3:9153

-A KUBE-SEP-AGD4XG6YTTFBC5MP -s 10.10.2.5/32 -m comment --comment "istio-system/zipkin:http-query" -j KUBE-MARK-MASQ

-A KUBE-SEP-AGD4XG6YTTFBC5MP -p tcp -m comment --comment "istio-system/zipkin:http-query" -m tcp -j DNAT --to-destination 10.10.2.5:9411

-A KUBE-SEP-BK4SURRAVDQBOPR4 -s 10.10.1.2/32 -m comment --comment "metallb-system/metallb-webhook-service" -j KUBE-MARK-MASQ

-A KUBE-SEP-BK4SURRAVDQBOPR4 -p tcp -m comment --comment "metallb-system/metallb-webhook-service" -m tcp -j DNAT --to-destination 10.10.1.2:9443

-A KUBE-SEP-BTHPIFJSQR2I7YRG -s 10.10.2.4/32 -m comment --comment "istio-system/grafana:service" -j KUBE-MARK-MASQ

-A KUBE-SEP-BTHPIFJSQR2I7YRG -p tcp -m comment --comment "istio-system/grafana:service" -m tcp -j DNAT --to-destination 10.10.2.4:3000

-A KUBE-SEP-EOOOBC4NP4L4Q2B3 -s 10.10.2.5/32 -m comment --comment "istio-system/jaeger-collector:http-otel" -j KUBE-MARK-MASQ

-A KUBE-SEP-EOOOBC4NP4L4Q2B3 -p tcp -m comment --comment "istio-system/jaeger-collector:http-otel" -m tcp -j DNAT --to-destination 10.10.2.5:4318

-A KUBE-SEP-HYFTSL3KNTBQVM56 -s 10.10.2.9/32 -m comment --comment "istio-system/loki:grpc" -j KUBE-MARK-MASQ

-A KUBE-SEP-HYFTSL3KNTBQVM56 -p tcp -m comment --comment "istio-system/loki:grpc" -m tcp -j DNAT --to-destination 10.10.2.9:9095

-A KUBE-SEP-IHVFB7RO5ADTY6F5 -s 10.10.2.6/32 -m comment --comment "istio-system/kiali:http-metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-IHVFB7RO5ADTY6F5 -p tcp -m comment --comment "istio-system/kiali:http-metrics" -m tcp -j DNAT --to-destination 10.10.2.6:9090

-A KUBE-SEP-IPT6REQVTVHSQECW -s 10.10.2.5/32 -m comment --comment "istio-system/tracing:grpc-query" -j KUBE-MARK-MASQ

-A KUBE-SEP-IPT6REQVTVHSQECW -p tcp -m comment --comment "istio-system/tracing:grpc-query" -m tcp -j DNAT --to-destination 10.10.2.5:16685

-A KUBE-SEP-KFUQ2ADNBCFWH5LK -s 10.10.2.5/32 -m comment --comment "istio-system/jaeger-collector:http-zipkin" -j KUBE-MARK-MASQ

-A KUBE-SEP-KFUQ2ADNBCFWH5LK -p tcp -m comment --comment "istio-system/jaeger-collector:http-zipkin" -m tcp -j DNAT --to-destination 10.10.2.5:9411

-A KUBE-SEP-O7QD6W2Z5ZWUKA37 -s 10.10.2.6/32 -m comment --comment "istio-system/kiali:http" -j KUBE-MARK-MASQ

-A KUBE-SEP-O7QD6W2Z5ZWUKA37 -p tcp -m comment --comment "istio-system/kiali:http" -m tcp -j DNAT --to-destination 10.10.2.6:20001

-A KUBE-SEP-OUCY3KVQXMID5GFB -s 10.10.1.3/32 -m comment --comment "istio-system/istiod:grpc-xds" -j KUBE-MARK-MASQ

-A KUBE-SEP-OUCY3KVQXMID5GFB -p tcp -m comment --comment "istio-system/istiod:grpc-xds" -m tcp -j DNAT --to-destination 10.10.1.3:15010

-A KUBE-SEP-QEFWPNF3O63RIU3Y -s 10.10.2.5/32 -m comment --comment "istio-system/jaeger-collector:jaeger-collector-http" -j KUBE-MARK-MASQ

-A KUBE-SEP-QEFWPNF3O63RIU3Y -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-http" -m tcp -j DNAT --to-destination 10.10.2.5:14268

-A KUBE-SEP-QKX4QX54UKWK6JIY -s 172.18.0.3/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-QKX4QX54UKWK6JIY -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.3:6443

-A KUBE-SEP-RT3F6VLY3P67FIV3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-RT3F6VLY3P67FIV3 -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.2:9153

-A KUBE-SEP-SDODBYH4DETTTORW -s 10.10.2.5/32 -m comment --comment "istio-system/tracing:http-query" -j KUBE-MARK-MASQ

-A KUBE-SEP-SDODBYH4DETTTORW -p tcp -m comment --comment "istio-system/tracing:http-query" -m tcp -j DNAT --to-destination 10.10.2.5:16686

-A KUBE-SEP-TMGNMEREWWYSM5SB -s 10.10.1.3/32 -m comment --comment "istio-system/istiod:https-dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-TMGNMEREWWYSM5SB -p tcp -m comment --comment "istio-system/istiod:https-dns" -m tcp -j DNAT --to-destination 10.10.1.3:15012

-A KUBE-SEP-VIIXX4VJ3A6FHLRW -s 10.10.1.3/32 -m comment --comment "istio-system/istiod:https-webhook" -j KUBE-MARK-MASQ

-A KUBE-SEP-VIIXX4VJ3A6FHLRW -p tcp -m comment --comment "istio-system/istiod:https-webhook" -m tcp -j DNAT --to-destination 10.10.1.3:15017

-A KUBE-SEP-VQYPEMZ5ZXGF6COE -s 10.10.2.5/32 -m comment --comment "istio-system/jaeger-collector:jaeger-collector-grpc" -j KUBE-MARK-MASQ

-A KUBE-SEP-VQYPEMZ5ZXGF6COE -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-grpc" -m tcp -j DNAT --to-destination 10.10.2.5:14250

-A KUBE-SEP-X33LYSV5PIJ3PHXQ -s 10.10.1.3/32 -m comment --comment "istio-system/istiod:http-monitoring" -j KUBE-MARK-MASQ

-A KUBE-SEP-X33LYSV5PIJ3PHXQ -p tcp -m comment --comment "istio-system/istiod:http-monitoring" -m tcp -j DNAT --to-destination 10.10.1.3:15014

-A KUBE-SEP-XVHB3NIW2NQLTFP3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-XVHB3NIW2NQLTFP3 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.2:53

-A KUBE-SEP-XWEOB3JN6VI62DQQ -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-XWEOB3JN6VI62DQQ -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SERVICES -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:http-monitoring cluster IP" -m tcp --dport 15014 -j KUBE-SVC-XHUBMW47Y5G3ICIS

-A KUBE-SERVICES -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-grpc cluster IP" -m tcp --dport 14250 -j KUBE-SVC-VL7ZZ6D5B63AFR4Y

-A KUBE-SERVICES -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:http-zipkin cluster IP" -m tcp --dport 9411 -j KUBE-SVC-HHG7U5KRFPWQTOCU

-A KUBE-SERVICES -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http-metrics cluster IP" -m tcp --dport 9090 -j KUBE-SVC-KQQXNCC3T6BSGKIX

-A KUBE-SERVICES -d 10.200.1.200/32 -p tcp -m comment --comment "istio-system/loki:http-metrics cluster IP" -m tcp --dport 3100 -j KUBE-SVC-QI3XYPITNLHPCCQT

-A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:https-webhook cluster IP" -m tcp --dport 443 -j KUBE-SVC-WHNIZNLB5XFXIX2C

-A KUBE-SERVICES -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:https-dns cluster IP" -m tcp --dport 15012 -j KUBE-SVC-CG3LQLBYYHBKATGN

-A KUBE-SERVICES -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-http cluster IP" -m tcp --dport 14268 -j KUBE-SVC-SEST5XGLUQ5J34LB

-A KUBE-SERVICES -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http cluster IP" -m tcp --dport 20001 -j KUBE-SVC-TRDPJ2HAZ5FCHNBZ

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:grpc-xds cluster IP" -m tcp --dport 15010 -j KUBE-SVC-NVNLZVDQSGQUD3NM

-A KUBE-SERVICES -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:grpc-otel cluster IP" -m tcp --dport 4317 -j KUBE-SVC-PGVURPSX4NOENZDL

-A KUBE-SERVICES -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:http-otel cluster IP" -m tcp --dport 4318 -j KUBE-SVC-X2LFBJAZKZE3QCOK

-A KUBE-SERVICES -d 10.200.1.200/32 -p tcp -m comment --comment "istio-system/loki:grpc cluster IP" -m tcp --dport 9095 -j KUBE-SVC-XJMB3L73YF5UUKWH

-A KUBE-SERVICES -d 10.200.1.41/32 -p tcp -m comment --comment "istio-system/prometheus:http cluster IP" -m tcp --dport 9090 -j KUBE-SVC-RJIBBGIJBATPMI6Z

-A KUBE-SERVICES -d 10.200.1.229/32 -p tcp -m comment --comment "istio-system/tracing:http-query cluster IP" -m tcp --dport 80 -j KUBE-SVC-A4N66M5KWTPIOJ3M

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -d 10.200.1.136/32 -p tcp -m comment --comment "istio-system/grafana:service cluster IP" -m tcp --dport 3000 -j KUBE-SVC-Y3OVZYCKHGYTKGDA

-A KUBE-SERVICES -d 10.200.1.229/32 -p tcp -m comment --comment "istio-system/tracing:grpc-query cluster IP" -m tcp --dport 16685 -j KUBE-SVC-H7T7T7XMCTV7IA7W

-A KUBE-SERVICES -d 10.200.1.117/32 -p tcp -m comment --comment "istio-system/zipkin:http-query cluster IP" -m tcp --dport 9411 -j KUBE-SVC-LTFVHWNLEPNOR2EL

-A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -d 10.200.1.89/32 -p tcp -m comment --comment "metallb-system/metallb-webhook-service cluster IP" -m tcp --dport 443 -j KUBE-SVC-GZ25SP4UFGF7SAVL

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-A4N66M5KWTPIOJ3M ! -s 10.10.0.0/16 -d 10.200.1.229/32 -p tcp -m comment --comment "istio-system/tracing:http-query cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SVC-A4N66M5KWTPIOJ3M -m comment --comment "istio-system/tracing:http-query -> 10.10.2.5:16686" -j KUBE-SEP-SDODBYH4DETTTORW

-A KUBE-SVC-CG3LQLBYYHBKATGN ! -s 10.10.0.0/16 -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:https-dns cluster IP" -m tcp --dport 15012 -j KUBE-MARK-MASQ

-A KUBE-SVC-CG3LQLBYYHBKATGN -m comment --comment "istio-system/istiod:https-dns -> 10.10.1.3:15012" -j KUBE-SEP-TMGNMEREWWYSM5SB

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XVHB3NIW2NQLTFP3

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.3:53" -j KUBE-SEP-ZEA5VGCBA2QNA7AK

-A KUBE-SVC-GZ25SP4UFGF7SAVL ! -s 10.10.0.0/16 -d 10.200.1.89/32 -p tcp -m comment --comment "metallb-system/metallb-webhook-service cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-GZ25SP4UFGF7SAVL -m comment --comment "metallb-system/metallb-webhook-service -> 10.10.1.2:9443" -j KUBE-SEP-BK4SURRAVDQBOPR4

-A KUBE-SVC-H7T7T7XMCTV7IA7W ! -s 10.10.0.0/16 -d 10.200.1.229/32 -p tcp -m comment --comment "istio-system/tracing:grpc-query cluster IP" -m tcp --dport 16685 -j KUBE-MARK-MASQ

-A KUBE-SVC-H7T7T7XMCTV7IA7W -m comment --comment "istio-system/tracing:grpc-query -> 10.10.2.5:16685" -j KUBE-SEP-IPT6REQVTVHSQECW

-A KUBE-SVC-HHG7U5KRFPWQTOCU ! -s 10.10.0.0/16 -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:http-zipkin cluster IP" -m tcp --dport 9411 -j KUBE-MARK-MASQ

-A KUBE-SVC-HHG7U5KRFPWQTOCU -m comment --comment "istio-system/jaeger-collector:http-zipkin -> 10.10.2.5:9411" -j KUBE-SEP-KFUQ2ADNBCFWH5LK

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.2:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-RT3F6VLY3P67FIV3

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.3:9153" -j KUBE-SEP-6GODNNVFRWQ66GUT

-A KUBE-SVC-KQQXNCC3T6BSGKIX ! -s 10.10.0.0/16 -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http-metrics cluster IP" -m tcp --dport 9090 -j KUBE-MARK-MASQ

-A KUBE-SVC-KQQXNCC3T6BSGKIX -m comment --comment "istio-system/kiali:http-metrics -> 10.10.2.6:9090" -j KUBE-SEP-IHVFB7RO5ADTY6F5

-A KUBE-SVC-LTFVHWNLEPNOR2EL ! -s 10.10.0.0/16 -d 10.200.1.117/32 -p tcp -m comment --comment "istio-system/zipkin:http-query cluster IP" -m tcp --dport 9411 -j KUBE-MARK-MASQ

-A KUBE-SVC-LTFVHWNLEPNOR2EL -m comment --comment "istio-system/zipkin:http-query -> 10.10.2.5:9411" -j KUBE-SEP-AGD4XG6YTTFBC5MP

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.3:6443" -j KUBE-SEP-QKX4QX54UKWK6JIY

-A KUBE-SVC-NVNLZVDQSGQUD3NM ! -s 10.10.0.0/16 -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:grpc-xds cluster IP" -m tcp --dport 15010 -j KUBE-MARK-MASQ

-A KUBE-SVC-NVNLZVDQSGQUD3NM -m comment --comment "istio-system/istiod:grpc-xds -> 10.10.1.3:15010" -j KUBE-SEP-OUCY3KVQXMID5GFB

-A KUBE-SVC-PGVURPSX4NOENZDL ! -s 10.10.0.0/16 -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:grpc-otel cluster IP" -m tcp --dport 4317 -j KUBE-MARK-MASQ

-A KUBE-SVC-PGVURPSX4NOENZDL -m comment --comment "istio-system/jaeger-collector:grpc-otel -> 10.10.2.5:4317" -j KUBE-SEP-57OB7LMKUA4REB6U

-A KUBE-SVC-QI3XYPITNLHPCCQT ! -s 10.10.0.0/16 -d 10.200.1.200/32 -p tcp -m comment --comment "istio-system/loki:http-metrics cluster IP" -m tcp --dport 3100 -j KUBE-MARK-MASQ

-A KUBE-SVC-QI3XYPITNLHPCCQT -m comment --comment "istio-system/loki:http-metrics -> 10.10.2.9:3100" -j KUBE-SEP-2VZBTCTWVROYNW5S

-A KUBE-SVC-RJIBBGIJBATPMI6Z ! -s 10.10.0.0/16 -d 10.200.1.41/32 -p tcp -m comment --comment "istio-system/prometheus:http cluster IP" -m tcp --dport 9090 -j KUBE-MARK-MASQ

-A KUBE-SVC-RJIBBGIJBATPMI6Z -m comment --comment "istio-system/prometheus:http -> 10.10.2.7:9090" -j KUBE-SEP-56NKIH2AGMF3225M

-A KUBE-SVC-SEST5XGLUQ5J34LB ! -s 10.10.0.0/16 -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-http cluster IP" -m tcp --dport 14268 -j KUBE-MARK-MASQ

-A KUBE-SVC-SEST5XGLUQ5J34LB -m comment --comment "istio-system/jaeger-collector:jaeger-collector-http -> 10.10.2.5:14268" -j KUBE-SEP-QEFWPNF3O63RIU3Y

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-2XZJVPRY2PQVE3B3

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.3:53" -j KUBE-SEP-XWEOB3JN6VI62DQQ

-A KUBE-SVC-TRDPJ2HAZ5FCHNBZ ! -s 10.10.0.0/16 -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http cluster IP" -m tcp --dport 20001 -j KUBE-MARK-MASQ

-A KUBE-SVC-TRDPJ2HAZ5FCHNBZ -m comment --comment "istio-system/kiali:http -> 10.10.2.6:20001" -j KUBE-SEP-O7QD6W2Z5ZWUKA37

-A KUBE-SVC-VL7ZZ6D5B63AFR4Y ! -s 10.10.0.0/16 -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:jaeger-collector-grpc cluster IP" -m tcp --dport 14250 -j KUBE-MARK-MASQ

-A KUBE-SVC-VL7ZZ6D5B63AFR4Y -m comment --comment "istio-system/jaeger-collector:jaeger-collector-grpc -> 10.10.2.5:14250" -j KUBE-SEP-VQYPEMZ5ZXGF6COE

-A KUBE-SVC-WHNIZNLB5XFXIX2C ! -s 10.10.0.0/16 -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:https-webhook cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-WHNIZNLB5XFXIX2C -m comment --comment "istio-system/istiod:https-webhook -> 10.10.1.3:15017" -j KUBE-SEP-VIIXX4VJ3A6FHLRW

-A KUBE-SVC-X2LFBJAZKZE3QCOK ! -s 10.10.0.0/16 -d 10.200.1.144/32 -p tcp -m comment --comment "istio-system/jaeger-collector:http-otel cluster IP" -m tcp --dport 4318 -j KUBE-MARK-MASQ

-A KUBE-SVC-X2LFBJAZKZE3QCOK -m comment --comment "istio-system/jaeger-collector:http-otel -> 10.10.2.5:4318" -j KUBE-SEP-EOOOBC4NP4L4Q2B3

-A KUBE-SVC-XHUBMW47Y5G3ICIS ! -s 10.10.0.0/16 -d 10.200.1.163/32 -p tcp -m comment --comment "istio-system/istiod:http-monitoring cluster IP" -m tcp --dport 15014 -j KUBE-MARK-MASQ

-A KUBE-SVC-XHUBMW47Y5G3ICIS -m comment --comment "istio-system/istiod:http-monitoring -> 10.10.1.3:15014" -j KUBE-SEP-X33LYSV5PIJ3PHXQ

-A KUBE-SVC-XJMB3L73YF5UUKWH ! -s 10.10.0.0/16 -d 10.200.1.200/32 -p tcp -m comment --comment "istio-system/loki:grpc cluster IP" -m tcp --dport 9095 -j KUBE-MARK-MASQ

-A KUBE-SVC-XJMB3L73YF5UUKWH -m comment --comment "istio-system/loki:grpc -> 10.10.2.9:9095" -j KUBE-SEP-HYFTSL3KNTBQVM56

-A KUBE-SVC-Y3OVZYCKHGYTKGDA ! -s 10.10.0.0/16 -d 10.200.1.136/32 -p tcp -m comment --comment "istio-system/grafana:service cluster IP" -m tcp --dport 3000 -j KUBE-MARK-MASQ

-A KUBE-SVC-Y3OVZYCKHGYTKGDA -m comment --comment "istio-system/grafana:service -> 10.10.2.4:3000" -j KUBE-SEP-BTHPIFJSQR2I7YRG

COMMIT

# Completed on Sat Jun 7 07:33:35 2025

# Warning: iptables-legacy tables present, use iptables-legacy-save to see them

node : myk8s-worker

...

node : myk8s-worker2

...
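The dump above is the kube-proxy NAT table. A `KUBE-SERVICES` rule matches a ClusterIP:port and jumps to a `KUBE-SVC-*` chain. That chain picks a `KUBE-SEP-*` endpoint chain, which finally DNATs to the Pod IP:port. For a Service with two endpoints (kube-dns), the first jump carries `--probability 0.5` and the last one is unconditional, so traffic splits evenly. The following is a minimal sketch of a hypothetical helper, `trace_svc` (my own, not part of kube-proxy), that follows this path through `iptables-save` text. It assumes the comment format shown above (`"<ns>/<svc>:<port> cluster IP"`). The sample rules are copied from the output above.

```shell
#!/usr/bin/env bash
# trace_svc (hypothetical helper): follow KUBE-SERVICES -> KUBE-SVC-* -> KUBE-SEP-*
# for one Service port in iptables-save text read from stdin.
# $1 is the kube-proxy comment prefix, e.g. "istio-system/kiali:http".
trace_svc() {
  local svc="$1" rules svc_chain sep
  rules=$(cat)

  # 1) The KUBE-SERVICES rule commented "<svc> cluster IP" jumps to the service chain.
  svc_chain=$(printf '%s\n' "$rules" | grep -F -- '-A KUBE-SERVICES ' \
    | grep -F -- "\"$svc cluster IP\"" \
    | sed -n 's/.*-j \(KUBE-SVC-[A-Z0-9]*\).*/\1/p' | head -n 1)
  if [ -z "$svc_chain" ]; then
    echo "no KUBE-SERVICES rule for $svc" >&2
    return 1
  fi
  echo "service chain: $svc_chain"

  # 2) Each service-chain rule that jumps to KUBE-SEP-* is one endpoint
  #    (the KUBE-MARK-MASQ rule is skipped because it has no KUBE-SEP target).
  # 3) The endpoint chain's DNAT rule holds the Pod IP:port.
  for sep in $(printf '%s\n' "$rules" | grep -F -- "-A $svc_chain " \
      | sed -n 's/.*-j \(KUBE-SEP-[A-Z0-9]*\).*/\1/p'); do
    printf '%s\n' "$rules" | grep -F -- "-A $sep " \
      | sed -n "s/.*--to-destination \([0-9.:]*\).*/endpoint: $sep -> \1/p"
  done
}

# Sample rules copied from the iptables-save output above.
SAMPLE_RULES=$(cat <<'EOF'
-A KUBE-SEP-O7QD6W2Z5ZWUKA37 -p tcp -m comment --comment "istio-system/kiali:http" -m tcp -j DNAT --to-destination 10.10.2.6:20001
-A KUBE-SEP-XVHB3NIW2NQLTFP3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ
-A KUBE-SEP-XVHB3NIW2NQLTFP3 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.2:53
-A KUBE-SEP-ZEA5VGCBA2QNA7AK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.3:53
-A KUBE-SERVICES -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http-metrics cluster IP" -m tcp --dport 9090 -j KUBE-SVC-KQQXNCC3T6BSGKIX
-A KUBE-SERVICES -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http cluster IP" -m tcp --dport 20001 -j KUBE-SVC-TRDPJ2HAZ5FCHNBZ
-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4
-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XVHB3NIW2NQLTFP3
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.3:53" -j KUBE-SEP-ZEA5VGCBA2QNA7AK
-A KUBE-SVC-TRDPJ2HAZ5FCHNBZ ! -s 10.10.0.0/16 -d 10.200.1.133/32 -p tcp -m comment --comment "istio-system/kiali:http cluster IP" -m tcp --dport 20001 -j KUBE-MARK-MASQ
-A KUBE-SVC-TRDPJ2HAZ5FCHNBZ -m comment --comment "istio-system/kiali:http -> 10.10.2.6:20001" -j KUBE-SEP-O7QD6W2Z5ZWUKA37
EOF
)

# On a live node, feed the real table instead:
#   docker exec myk8s-control-plane iptables-save -t nat | trace_svc istio-system/kiali:http
printf '%s\n' "$SAMPLE_RULES" | trace_svc "istio-system/kiali:http"
printf '%s\n' "$SAMPLE_RULES" | trace_svc "kube-system/kube-dns:dns-tcp"
```

Matching on the quoted comment keeps `kiali:http` from also picking up `kiali:http-metrics`, which shares the same ClusterIP.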

13. Switching the Istio observability tools to NodePort

prometheus(30001), grafana(30002), kiali(30003), tracing(30004)

kubectl patch svc -n istio-system prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}'

kubectl patch svc -n istio-system grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}'

kubectl patch svc -n istio-system kiali -p '{"spec": {"type": "NodePort", "ports": [{"port": 20001, "targetPort": 20001, "nodePort": 30003}]}}'

kubectl patch svc -n istio-system tracing -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 16686, "nodePort": 30004}]}}'

✅ Output

service/prometheus patched

service/grafana patched

service/kiali patched

service/tracing patched

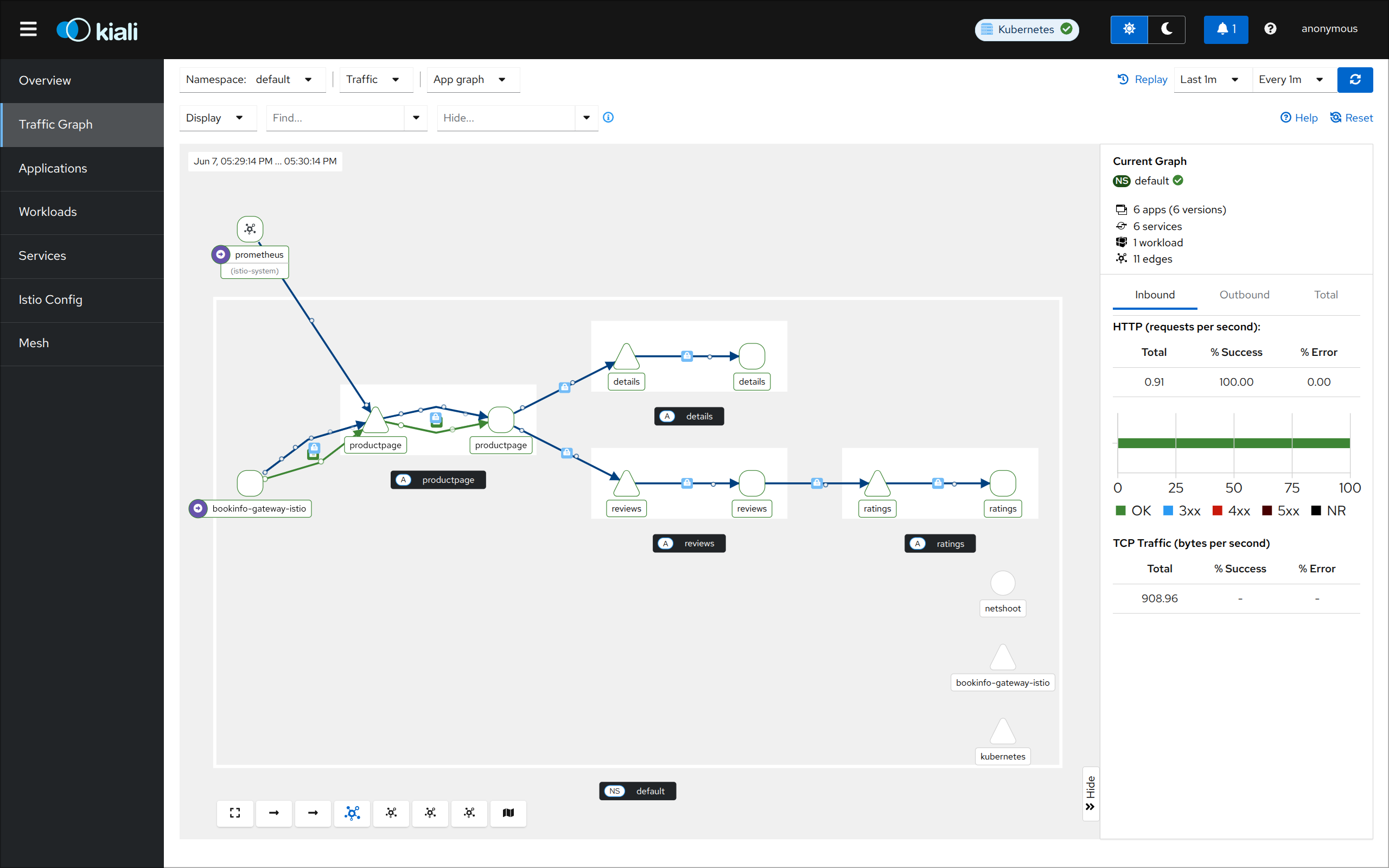

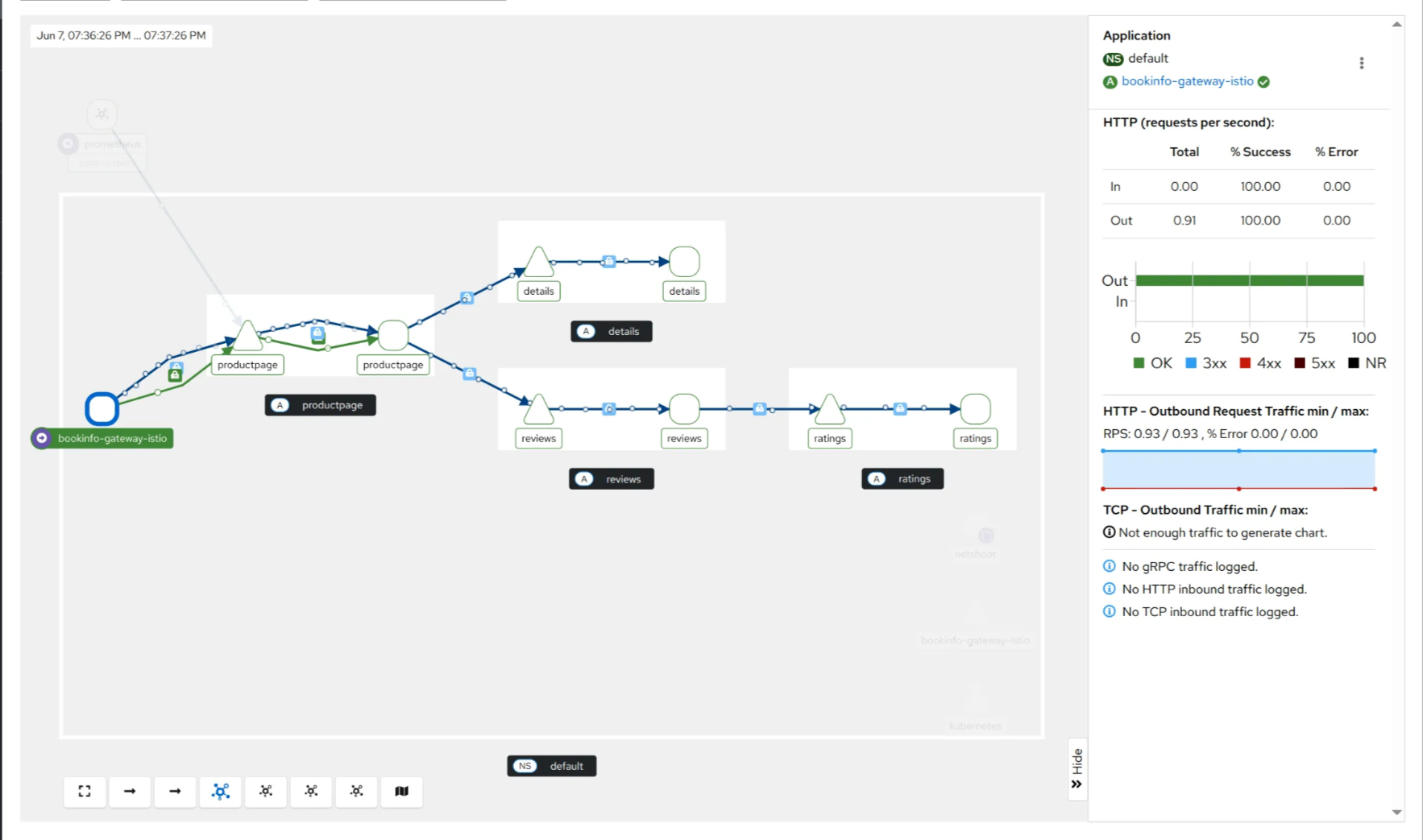

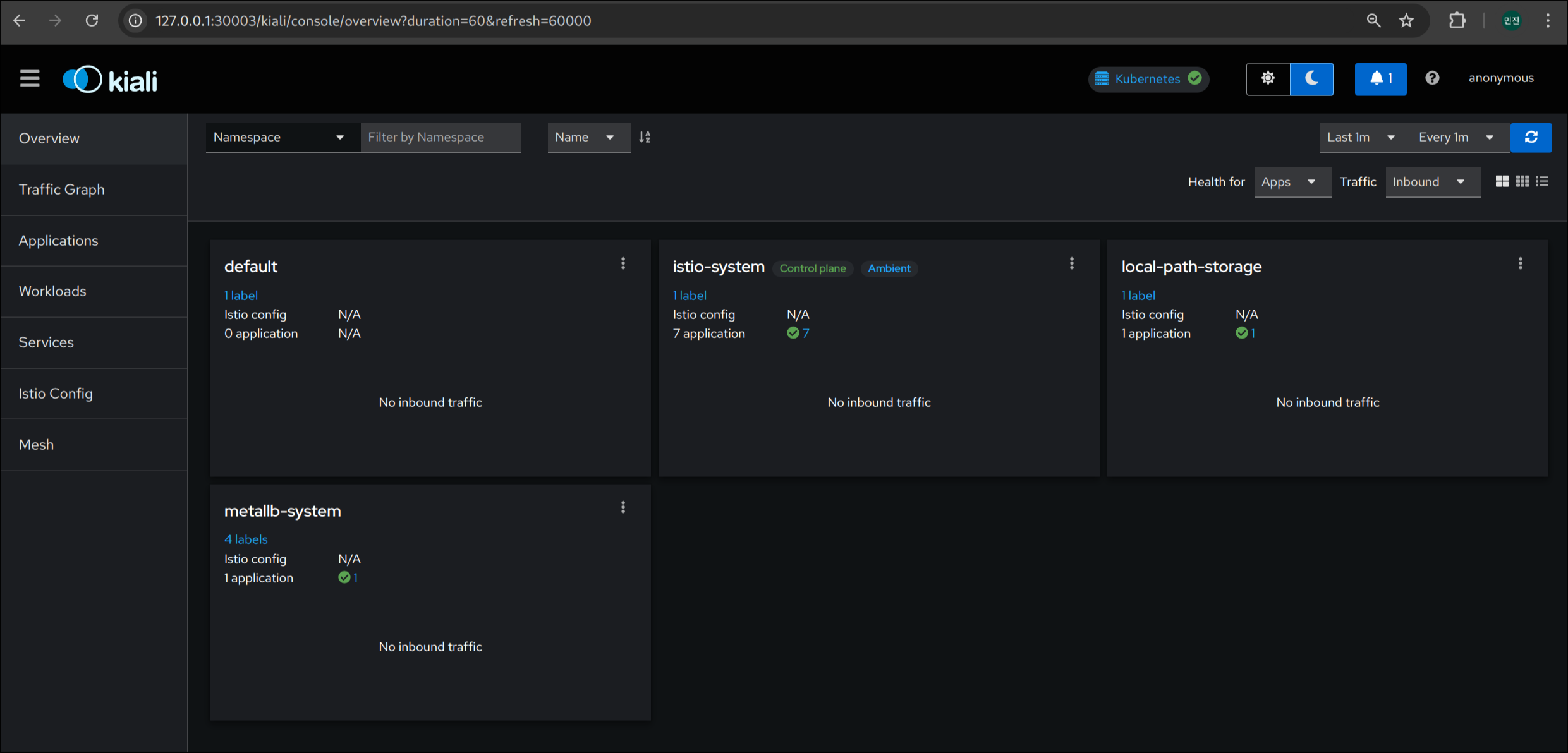

Kiali access: http://127.0.0.1:30003
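The four patches above follow one pattern, so they can be driven from a small table. This is a minimal sketch with a hypothetical helper, `patch_nodeports`. It prints the commands by default (`DRY_RUN=1`) and only calls `kubectl patch` when `DRY_RUN=0`. `kubectl patch` applies a strategic merge patch by default, and Service `ports` entries are merged on their `port` key. As a result, only the listed port is updated, and other ports such as kiali's `http-metrics` (9090) stay in place.

```shell
#!/usr/bin/env bash
# patch_nodeports (hypothetical helper): read "name port targetPort nodePort"
# rows from stdin and switch each Service to type NodePort.
# DRY_RUN=1 (default) only prints the kubectl commands; DRY_RUN=0 runs them.
patch_nodeports() {
  local ns="${NS:-istio-system}" name port target node patch
  while read -r name port target node; do
    case "$name" in ''|'#'*) continue ;; esac   # skip blank and comment rows
    patch=$(printf '{"spec":{"type":"NodePort","ports":[{"port":%s,"targetPort":%s,"nodePort":%s}]}}' \
      "$port" "$target" "$node")
    if [ "${DRY_RUN:-1}" = "1" ]; then
      printf 'kubectl patch svc -n %s %s -p %s\n' "$ns" "$name" "'$patch'"
    else
      kubectl patch svc -n "$ns" "$name" -p "$patch"
    fi
  done
}

# Same values as the commands above: name, port, targetPort, nodePort.
patch_nodeports <<'EOF'
prometheus 9090  9090  30001
grafana    3000  3000  30002
kiali      20001 20001 30003
tracing    80    16686 30004
EOF
```

After a real run (`DRY_RUN=0`), `kubectl get svc -n istio-system prometheus grafana kiali tracing` should list `TYPE` as `NodePort` with the 30001 to 30004 ports.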

🧩 Checking Istio CNI status and logs

1. Check the Istio CNI DaemonSet status

kubectl get ds -n istio-system

✅ Output

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

istio-cni-node 3 3 3 3 3 kubernetes.io/os=linux 16m

ztunnel 3 3 3 3 3 kubernetes.io/os=linux 16m
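Both DaemonSets report 3 desired, 3 ready, and 3 available, one per node. `kubectl rollout status ds/istio-cni-node -n istio-system` is the built-in way to wait for that state. For a quick scripted check of the table itself, here is a minimal sketch with a hypothetical `check_ds_ready` helper. It assumes the default `kubectl get ds` column order shown above.

```shell
#!/usr/bin/env bash
# check_ds_ready (hypothetical helper): read `kubectl get ds` output on stdin,
# compare READY ($4) and AVAILABLE ($6) with DESIRED ($2), exit non-zero on mismatch.
check_ds_ready() {
  awk 'NR > 1 && NF >= 6 {
         ok = ($4 == $2 && $6 == $2)
         printf "%s: ready %s/%s, available %s/%s -> %s\n", $1, $4, $2, $6, $2, (ok ? "OK" : "NOT READY")
         if (!ok) bad = 1
       }
       END { exit bad }'
}

# Sample copied from the output above; live usage: kubectl get ds -n istio-system | check_ds_ready
SAMPLE_DS=$(cat <<'EOF'
NAME             DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
istio-cni-node   3         3         3       3            3           kubernetes.io/os=linux   16m
ztunnel          3         3         3       3            3           kubernetes.io/os=linux   16m
EOF
)
printf '%s\n' "$SAMPLE_DS" | check_ds_ready
```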

2. List the istio-cni-node Pods

kubectl get pod -n istio-system -l k8s-app=istio-cni-node -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

istio-cni-node-7kst6 1/1 Running 0 17m 10.10.1.4 myk8s-worker <none> <none>

istio-cni-node-dpqsv 1/1 Running 0 17m 10.10.2.2 myk8s-worker2 <none> <none>

istio-cni-node-rfx6w 1/1 Running 0 17m 10.10.0.5 myk8s-control-plane <none> <none>
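A DaemonSet should place exactly one Pod on every eligible node. The control plane is included here because the Pod tolerates `:NoSchedule op=Exists` (see the describe output below). This is a minimal sketch with a hypothetical `pods_per_node` helper that counts Pods per `NODE` column (7th field of `-o wide` output) and flags any node whose count is not 1.

```shell
#!/usr/bin/env bash
# pods_per_node (hypothetical helper): count Pods per NODE column in
# `kubectl get pod -o wide` output read from stdin; flag counts other than 1.
pods_per_node() {
  awk 'NR > 1 && NF >= 7 { count[$7]++ }
       END {
         for (n in count)
           printf "%s %d%s\n", n, count[n], (count[n] == 1 ? "" : "  <- expected 1")
       }' | LC_ALL=C sort
}

# Sample copied from the output above; live usage:
#   kubectl get pod -n istio-system -l k8s-app=istio-cni-node -owide | pods_per_node
SAMPLE_PODS=$(cat <<'EOF'
NAME                   READY   STATUS    RESTARTS   AGE   IP          NODE                  NOMINATED NODE   READINESS GATES
istio-cni-node-7kst6   1/1     Running   0          17m   10.10.1.4   myk8s-worker          <none>           <none>
istio-cni-node-dpqsv   1/1     Running   0          17m   10.10.2.2   myk8s-worker2         <none>           <none>
istio-cni-node-rfx6w   1/1     Running   0          17m   10.10.0.5   myk8s-control-plane   <none>           <none>
EOF
)
printf '%s\n' "$SAMPLE_PODS" | pods_per_node
```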

3. Inspect the istio-cni-node Pod details

kubectl describe pod -n istio-system -l k8s-app=istio-cni-node

✅ Output

Name: istio-cni-node-7kst6

Namespace: istio-system

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: istio-cni

Node: myk8s-worker/172.18.0.4

Start Time: Sat, 07 Jun 2025 16:25:35 +0900

Labels: app.kubernetes.io/instance=istio

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=istio-cni

app.kubernetes.io/part-of=istio

app.kubernetes.io/version=1.26.0

controller-revision-hash=69565b7cc7

helm.sh/chart=cni-1.26.0

istio.io/dataplane-mode=none

k8s-app=istio-cni-node

pod-template-generation=1

sidecar.istio.io/inject=false

Annotations: container.apparmor.security.beta.kubernetes.io/install-cni: unconfined

prometheus.io/path: /metrics

prometheus.io/port: 15014

prometheus.io/scrape: true

sidecar.istio.io/inject: false

Status: Running

IP: 10.10.1.4

IPs:

IP: 10.10.1.4

Controlled By: DaemonSet/istio-cni-node

Containers:

install-cni:

Container ID: containerd://78f0e92ac48223148fe634580d14e30d3c3c62bb5cd5ab39c4decff81f1b76b5

Image: docker.io/istio/install-cni:1.26.0-distroless

Image ID: docker.io/istio/install-cni@sha256:e69cea606f6fe75907602349081f78ddb0a94417199f9022f7323510abef65cb

Port: 15014/TCP

Host Port: 0/TCP

Command:

install-cni

Args:

--log_output_level=info

State: Running

Started: Sat, 07 Jun 2025 16:25:46 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 100Mi

Readiness: http-get http://:8000/readyz delay=0s timeout=1s period=10s #success=1 #failure=3

Environment Variables from:

istio-cni-config ConfigMap Optional: false

Environment:

REPAIR_NODE_NAME: (v1:spec.nodeName)

REPAIR_RUN_AS_DAEMON: true

REPAIR_SIDECAR_ANNOTATION: sidecar.istio.io/status

ALLOW_SWITCH_TO_HOST_NS: true

NODE_NAME: (v1:spec.nodeName)

GOMEMLIMIT: node allocatable (limits.memory)

GOMAXPROCS: node allocatable (limits.cpu)

POD_NAME: istio-cni-node-7kst6 (v1:metadata.name)

POD_NAMESPACE: istio-system (v1:metadata.namespace)

Mounts:

/host/etc/cni/net.d from cni-net-dir (rw)

/host/opt/cni/bin from cni-bin-dir (rw)

/host/proc from cni-host-procfs (ro)

/host/var/run/netns from cni-netns-dir (rw)

/var/run/istio-cni from cni-socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6gwk9 (ro)

/var/run/ztunnel from cni-ztunnel-sock-dir (rw)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

cni-bin-dir:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni-host-procfs:

Type: HostPath (bare host directory volume)

Path: /proc

HostPathType: Directory

cni-ztunnel-sock-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/ztunnel

HostPathType: DirectoryOrCreate

cni-net-dir:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

cni-socket-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/istio-cni

HostPathType:

cni-netns-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/netns

HostPathType: DirectoryOrCreate

kube-api-access-6gwk9:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: :NoSchedule op=Exists

:NoExecute op=Exists

CriticalAddonsOnly op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 17m default-scheduler Successfully assigned istio-system/istio-cni-node-7kst6 to myk8s-worker

Normal Pulling 17m kubelet Pulling image "docker.io/istio/install-cni:1.26.0-distroless"

Normal Pulled 17m kubelet Successfully pulled image "docker.io/istio/install-cni:1.26.0-distroless" in 10.868s (10.868s including waiting). Image size: 47376201 bytes.

Normal Created 17m kubelet Created container: install-cni

Normal Started 17m kubelet Started container install-cni

Warning Unhealthy 17m kubelet Readiness probe failed: HTTP probe failed with statuscode: 503

Name: istio-cni-node-dpqsv

Namespace: istio-system

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: istio-cni

Node: myk8s-worker2/172.18.0.2

Start Time: Sat, 07 Jun 2025 16:25:35 +0900

Labels: app.kubernetes.io/instance=istio

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=istio-cni

app.kubernetes.io/part-of=istio

app.kubernetes.io/version=1.26.0

controller-revision-hash=69565b7cc7

helm.sh/chart=cni-1.26.0

istio.io/dataplane-mode=none

k8s-app=istio-cni-node

pod-template-generation=1

sidecar.istio.io/inject=false

Annotations: container.apparmor.security.beta.kubernetes.io/install-cni: unconfined

prometheus.io/path: /metrics

prometheus.io/port: 15014

prometheus.io/scrape: true

sidecar.istio.io/inject: false

Status: Running

IP: 10.10.2.2

IPs:

IP: 10.10.2.2

Controlled By: DaemonSet/istio-cni-node

Containers:

install-cni:

Container ID: containerd://daad034515ef510652efe8e1cba35398f63be4091040d6e7d446538bfd36bf0c

Image: docker.io/istio/install-cni:1.26.0-distroless

Image ID: docker.io/istio/install-cni@sha256:e69cea606f6fe75907602349081f78ddb0a94417199f9022f7323510abef65cb

Port: 15014/TCP

Host Port: 0/TCP

Command:

install-cni

Args:

--log_output_level=info

State: Running

Started: Sat, 07 Jun 2025 16:25:46 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 100Mi

Readiness: http-get http://:8000/readyz delay=0s timeout=1s period=10s #success=1 #failure=3

Environment Variables from:

istio-cni-config ConfigMap Optional: false

Environment:

REPAIR_NODE_NAME: (v1:spec.nodeName)

REPAIR_RUN_AS_DAEMON: true

REPAIR_SIDECAR_ANNOTATION: sidecar.istio.io/status

ALLOW_SWITCH_TO_HOST_NS: true

NODE_NAME: (v1:spec.nodeName)

GOMEMLIMIT: node allocatable (limits.memory)

GOMAXPROCS: node allocatable (limits.cpu)

POD_NAME: istio-cni-node-dpqsv (v1:metadata.name)

POD_NAMESPACE: istio-system (v1:metadata.namespace)

Mounts:

/host/etc/cni/net.d from cni-net-dir (rw)

/host/opt/cni/bin from cni-bin-dir (rw)

/host/proc from cni-host-procfs (ro)

/host/var/run/netns from cni-netns-dir (rw)

/var/run/istio-cni from cni-socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-7nv4k (ro)

/var/run/ztunnel from cni-ztunnel-sock-dir (rw)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

cni-bin-dir:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni-host-procfs:

Type: HostPath (bare host directory volume)

Path: /proc

HostPathType: Directory

cni-ztunnel-sock-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/ztunnel

HostPathType: DirectoryOrCreate

cni-net-dir:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

cni-socket-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/istio-cni

HostPathType:

cni-netns-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/netns

HostPathType: DirectoryOrCreate

kube-api-access-7nv4k:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: :NoSchedule op=Exists

:NoExecute op=Exists

CriticalAddonsOnly op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 17m default-scheduler Successfully assigned istio-system/istio-cni-node-dpqsv to myk8s-worker2

Normal Pulling 17m kubelet Pulling image "docker.io/istio/install-cni:1.26.0-distroless"

Normal Pulled 17m kubelet Successfully pulled image "docker.io/istio/install-cni:1.26.0-distroless" in 10.13s (10.13s including waiting). Image size: 47376201 bytes.

Normal Created 17m kubelet Created container: install-cni

Normal Started 17m kubelet Started container install-cni

Name: istio-cni-node-rfx6w

Namespace: istio-system

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: istio-cni

Node: myk8s-control-plane/172.18.0.3

Start Time: Sat, 07 Jun 2025 16:25:35 +0900

Labels: app.kubernetes.io/instance=istio

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=istio-cni

app.kubernetes.io/part-of=istio

app.kubernetes.io/version=1.26.0

controller-revision-hash=69565b7cc7

helm.sh/chart=cni-1.26.0

istio.io/dataplane-mode=none

k8s-app=istio-cni-node

pod-template-generation=1

sidecar.istio.io/inject=false

Annotations: container.apparmor.security.beta.kubernetes.io/install-cni: unconfined

prometheus.io/path: /metrics

prometheus.io/port: 15014

prometheus.io/scrape: true

sidecar.istio.io/inject: false

Status: Running

IP: 10.10.0.5

IPs:

IP: 10.10.0.5

Controlled By: DaemonSet/istio-cni-node

Containers:

install-cni:

Container ID: containerd://a329e0aab8b5fc24704bc72db46fa5a7184445aefe51b303e164b5cc5db5d406

Image: docker.io/istio/install-cni:1.26.0-distroless

Image ID: docker.io/istio/install-cni@sha256:e69cea606f6fe75907602349081f78ddb0a94417199f9022f7323510abef65cb

Port: 15014/TCP

Host Port: 0/TCP

Command:

install-cni

Args:

--log_output_level=info

State: Running

Started: Sat, 07 Jun 2025 16:25:45 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 100Mi

Readiness: http-get http://:8000/readyz delay=0s timeout=1s period=10s #success=1 #failure=3

Environment Variables from:

istio-cni-config ConfigMap Optional: false

Environment:

REPAIR_NODE_NAME: (v1:spec.nodeName)

REPAIR_RUN_AS_DAEMON: true

REPAIR_SIDECAR_ANNOTATION: sidecar.istio.io/status

ALLOW_SWITCH_TO_HOST_NS: true

NODE_NAME: (v1:spec.nodeName)

GOMEMLIMIT: node allocatable (limits.memory)

GOMAXPROCS: node allocatable (limits.cpu)

POD_NAME: istio-cni-node-rfx6w (v1:metadata.name)

POD_NAMESPACE: istio-system (v1:metadata.namespace)

Mounts:

/host/etc/cni/net.d from cni-net-dir (rw)

/host/opt/cni/bin from cni-bin-dir (rw)

/host/proc from cni-host-procfs (ro)

/host/var/run/netns from cni-netns-dir (rw)

/var/run/istio-cni from cni-socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-rfpf5 (ro)

/var/run/ztunnel from cni-ztunnel-sock-dir (rw)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

cni-bin-dir:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni-host-procfs:

Type: HostPath (bare host directory volume)

Path: /proc

HostPathType: Directory

cni-ztunnel-sock-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/ztunnel

HostPathType: DirectoryOrCreate

cni-net-dir:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

cni-socket-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/istio-cni

HostPathType:

cni-netns-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/netns

HostPathType: DirectoryOrCreate

kube-api-access-rfpf5:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: :NoSchedule op=Exists

:NoExecute op=Exists

CriticalAddonsOnly op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 17m default-scheduler Successfully assigned istio-system/istio-cni-node-rfx6w to myk8s-control-plane

Normal Pulling 17m kubelet Pulling image "docker.io/istio/install-cni:1.26.0-distroless"

Normal Pulled 17m kubelet Successfully pulled image "docker.io/istio/install-cni:1.26.0-distroless" in 9.179s (9.18s including waiting). Image size: 47376201 bytes.

Normal Created 17m kubelet Created container: install-cni

Normal Started 17m kubelet Started container install-cni
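The `Volumes:` sections show that `istio-cni-node` works mostly through HostPath mounts, and these are the same node directories inspected in the next steps (`/opt/cni/bin`, `/etc/cni/net.d`, `/var/run/istio-cni`, `/var/run/netns`). This is a minimal sketch with a hypothetical `hostpath_volumes` helper that pulls each HostPath volume name and path out of `kubectl describe pod` text. It relies on the describe layout (a `name:` line followed by `Type:` and `Path:`), which is a human-readable format rather than a stable API. For scripts, reading `.spec.volumes[*].hostPath.path` with `-o jsonpath` is more robust.

```shell
#!/usr/bin/env bash
# hostpath_volumes (hypothetical helper): print "<volume> -> <host path>" for
# every HostPath volume in `kubectl describe pod` output read from stdin.
hostpath_volumes() {
  awk '
    # A line holding only "<name>:" starts a new block (volume name, section, ...).
    /^[ \t]*[A-Za-z0-9.-]+:[ \t]*$/ { name = $1; sub(/:$/, "", name); hp = 0; next }
    # Remember that the current block is a HostPath volume ...
    $1 == "Type:" && $2 == "HostPath" { hp = 1; next }
    # ... and print its Path line.
    $1 == "Path:" && hp { printf "%s -> %s\n", name, $2; hp = 0 }
  '
}

# Volumes section of istio-cni-node-7kst6 from the output above, indented as kubectl prints it.
SAMPLE_DESCRIBE=$(cat <<'EOF'
Volumes:
  cni-bin-dir:
    Type:          HostPath (bare host directory volume)
    Path:          /opt/cni/bin
    HostPathType:
  cni-host-procfs:
    Type:          HostPath (bare host directory volume)
    Path:          /proc
    HostPathType:  Directory
  cni-ztunnel-sock-dir:
    Type:          HostPath (bare host directory volume)
    Path:          /var/run/ztunnel
    HostPathType:  DirectoryOrCreate
  cni-net-dir:
    Type:          HostPath (bare host directory volume)
    Path:          /etc/cni/net.d
    HostPathType:
  cni-socket-dir:
    Type:          HostPath (bare host directory volume)
    Path:          /var/run/istio-cni
    HostPathType:
  cni-netns-dir:
    Type:          HostPath (bare host directory volume)
    Path:          /var/run/netns
    HostPathType:  DirectoryOrCreate
  kube-api-access-6gwk9:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
EOF
)

# Live usage: kubectl describe pod -n istio-system istio-cni-node-7kst6 | hostpath_volumes
printf '%s\n' "$SAMPLE_DESCRIBE" | hostpath_volumes
```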

4. Check the CNI binary directory (/opt/cni/bin)

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /opt/cni/bin'; echo; done

✅ Output

node : myk8s-control-plane

total 70820

-rwxr-xr-x 1 root root 4059492 Mar 5 12:52 host-local

-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni

-rwxr-xr-x 1 root root 4114956 Mar 5 12:52 loopback

-rwxr-xr-x 1 root root 4713142 Mar 5 12:52 portmap

-rwxr-xr-x 1 root root 5080390 Mar 5 12:52 ptp

node : myk8s-worker

total 70820

-rwxr-xr-x 1 root root 4059492 Mar 5 12:52 host-local

-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni

-rwxr-xr-x 1 root root 4114956 Mar 5 12:52 loopback

-rwxr-xr-x 1 root root 4713142 Mar 5 12:52 portmap

-rwxr-xr-x 1 root root 5080390 Mar 5 12:52 ptp

node : myk8s-worker2

total 70820

-rwxr-xr-x 1 root root 4059492 Mar 5 12:52 host-local

-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni

-rwxr-xr-x 1 root root 4114956 Mar 5 12:52 loopback

-rwxr-xr-x 1 root root 4713142 Mar 5 12:52 portmap

-rwxr-xr-x 1 root root 5080390 Mar 5 12:52 ptp
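All three nodes now carry the `istio-cni` plugin binary next to kindnet's plugins (`host-local`, `loopback`, `portmap`, `ptp`). The kindnet binaries keep the node image date (Mar 5). `istio-cni` is stamped Jun 7 07:25, which lines up with the `install-cni` container start (16:25 +0900, i.e. 07:25 UTC) in the describe output above. This is a minimal sketch with a hypothetical `check_istio_cni_binary` helper that reads the loop's output and reports any node where the binary is missing.

```shell
#!/usr/bin/env bash
# check_istio_cni_binary (hypothetical helper): parse the "node : <name>" + `ls -l`
# output of the loop above and report per node whether an istio-cni file exists.
check_istio_cni_binary() {
  awk '
    $1 == "node" && $2 == ":" { node = $3; found[node] = 0; order[++n] = node; next }
    node != "" && $NF == "istio-cni" { found[node] = 1 }
    END {
      for (i = 1; i <= n; i++) {
        printf "%s: %s\n", order[i], (found[order[i]] ? "istio-cni present" : "istio-cni MISSING")
        if (!found[order[i]]) bad = 1
      }
      exit bad
    }'
}

# Sample copied from the output above.
SAMPLE_BIN=$(cat <<'EOF'
node : myk8s-control-plane
total 70820
-rwxr-xr-x 1 root root 4059492 Mar 5 12:52 host-local
-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni
-rwxr-xr-x 1 root root 4114956 Mar 5 12:52 loopback
-rwxr-xr-x 1 root root 4713142 Mar 5 12:52 portmap
-rwxr-xr-x 1 root root 5080390 Mar 5 12:52 ptp
node : myk8s-worker
total 70820
-rwxr-xr-x 1 root root 4059492 Mar 5 12:52 host-local
-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni
-rwxr-xr-x 1 root root 4114956 Mar 5 12:52 loopback
node : myk8s-worker2
total 70820
-rwxr-xr-x 1 root root 54538424 Jun 7 07:25 istio-cni
-rwxr-xr-x 1 root root 5080390 Mar 5 12:52 ptp
EOF
)
printf '%s\n' "$SAMPLE_BIN" | check_istio_cni_binary
```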

5. Check the CNI config directory (/etc/cni/net.d)

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /etc/cni/net.d'; echo; done

✅ Output

node : myk8s-control-plane

total 4

-rw-r--r-- 1 root root 861 Jun 7 07:25 10-kindnet.conflist

node : myk8s-worker

total 4

-rw-r--r-- 1 root root 861 Jun 7 07:25 10-kindnet.conflist

node : myk8s-worker2

total 4

-rw-r--r-- 1 root root 861 Jun 7 07:25 10-kindnet.conflist
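Only `10-kindnet.conflist` exists, with no separate Istio config file. In chained mode (the Istio CNI default, as far as I know), istio-cni appends its own plugin entry to the existing primary conflist instead of writing a new file. The 07:25 modification time matches the `istio-cni` binary, while the earliest netns entries on the control plane (step 7) date from 06:54. This suggests, but does not prove, that the file was rewritten at install time. This is a minimal sketch with a hypothetical `has_istio_cni_plugin` helper that checks a conflist for a plugin whose `type` is `istio-cni`. A CNI plugin's `type` must match its binary name, which is `istio-cni` in `/opt/cni/bin`. The JSON in the demo is a trimmed, hypothetical conflist for illustration, not the file from this cluster.

```shell
#!/usr/bin/env bash
# has_istio_cni_plugin (hypothetical helper): succeed if the conflist file in $1
# contains a plugin entry with "type": "istio-cni" (grep-based; jq would be stricter).
has_istio_cni_plugin() {
  if grep -Eq '"type"[[:space:]]*:[[:space:]]*"istio-cni"' "$1"; then
    echo "istio-cni is chained in $1"
  else
    echo "istio-cni not found in $1"
    return 1
  fi
}

# Hypothetical, trimmed conflist (NOT copied from the cluster; fields omitted).
demo=$(mktemp)
cat > "$demo" <<'EOF'
{
  "cniVersion": "0.3.1",
  "name": "kindnet",
  "plugins": [
    { "type": "ptp" },
    { "type": "portmap", "capabilities": { "portMappings": true } },
    { "type": "istio-cni" }
  ]
}
EOF
has_istio_cni_plugin "$demo"
rm -f "$demo"

# On the cluster, copy the real file out first:
#   docker exec myk8s-worker cat /etc/cni/net.d/10-kindnet.conflist > /tmp/worker.conflist
#   has_istio_cni_plugin /tmp/worker.conflist
```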

6. Check the Istio CNI runtime socket directory (/var/run/istio-cni)

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /var/run/istio-cni'; echo; done

✅ Output

node : myk8s-control-plane

total 8

-rw------- 1 root root 2990 Jun 7 07:25 istio-cni-kubeconfig

-rw------- 1 root root 171 Jun 7 07:25 istio-cni.log

srw-rw-rw- 1 root root 0 Jun 7 07:25 log.sock

srw-rw-rw- 1 root root 0 Jun 7 07:25 pluginevent.sock

node : myk8s-worker

total 8

-rw------- 1 root root 2981 Jun 7 07:25 istio-cni-kubeconfig

-rw------- 1 root root 171 Jun 7 07:25 istio-cni.log

srw-rw-rw- 1 root root 0 Jun 7 07:25 log.sock

srw-rw-rw- 1 root root 0 Jun 7 07:25 pluginevent.sock

node : myk8s-worker2

total 8

-rw------- 1 root root 2982 Jun 7 07:25 istio-cni-kubeconfig

-rw------- 1 root root 1335 Jun 7 07:27 istio-cni.log

srw-rw-rw- 1 root root 0 Jun 7 07:25 log.sock

srw-rw-rw- 1 root root 0 Jun 7 07:25 pluginevent.sock
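All three nodes hold the same four entries:

- istio-cni-kubeconfig, the kubeconfig the plugin uses to reach the API server
- istio-cni.log, the plugin log
- log.sock and pluginevent.sock, two UNIX domain sockets (the leading s in srw-rw-rw-)

Judging by their names, the sockets carry log lines and pod events from the CNI plugin binary to the istio-cni node agent. Treat that as an inference, not something this listing shows. worker2's log is noticeably larger (1335 bytes, last written 07:27), which coincides with the pod namespaces created on worker2 at 07:27. Below is a small check for these entries; check_istio_cni_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_istio_cni_dir DIR: verify the runtime entries listed above exist with the expected type.
# Hypothetical helper; prints one line per missing entry and returns 1 if anything is missing.
check_istio_cni_dir() {
  local dir=$1 rc=0 f
  for f in istio-cni-kubeconfig istio-cni.log; do
    [ -f "$dir/$f" ] || { echo "missing file: $dir/$f"; rc=1; }
  done
  for f in log.sock pluginevent.sock; do
    [ -S "$dir/$f" ] || { echo "missing socket: $dir/$f"; rc=1; }
  done
  return $rc
}
```

To run it inside a node, ship the function definition along: docker exec myk8s-worker2 bash -c "$(declare -f check_istio_cni_dir); check_istio_cni_dir /var/run/istio-cni"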

7. Check the network namespace files (/var/run/netns)


for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /var/run/netns'; echo; done

✅ Output


node : myk8s-control-plane

total 0

-r--r--r-- 1 root root 0 Jun 7 06:54 cni-1ad352d6-873b-0f5d-9c82-54b44e853fd7

-r--r--r-- 1 root root 0 Jun 7 06:54 cni-861f44e6-937b-4d15-8df6-757b9759cc74

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-8f0e1fff-31a4-bc8f-82d9-86ba81886dfd

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-d348a25f-f731-b77c-dbaa-27b8d1dfa25a

-r--r--r-- 1 root root 0 Jun 7 06:54 cni-de7ad285-b86f-3164-14f2-a220466f8aa1

node : myk8s-worker

total 0

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-25f25e6c-522e-f81f-5ebe-7053a7add3f0

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-4afc4244-9e76-c6c2-e7de-2fe41a280b65

-r--r--r-- 1 root root 0 Jun 7 07:18 cni-a3b2c636-67d4-0b64-f34c-4dadb40c753a

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-d6cc6548-5a67-08f7-df08-5b85d4c75cbc

node : myk8s-worker2

total 0

-r--r--r-- 1 root root 0 Jun 7 07:27 cni-1f8eace2-6620-216e-bb4d-c4b86ee5c6c4

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-277efe1a-9bbe-2abf-9a8d-74328563969c

-r--r--r-- 1 root root 0 Jun 7 07:27 cni-3771908e-b0ff-603f-2996-7a7adf7075c7

-r--r--r-- 1 root root 0 Jun 7 07:27 cni-ac03b3ba-9368-6cf8-7cd4-d352d7efc40f

-r--r--r-- 1 root root 0 Jun 7 07:27 cni-ad228616-57c2-1fc6-e9cc-a850f148c51b

-r--r--r-- 1 root root 0 Jun 7 07:27 cni-f3dd8ca3-3f82-195a-d587-4b42ab11d2f8

-r--r--r-- 1 root root 0 Jun 7 07:25 cni-ff483962-b91b-3571-0f8b-66d75d099e53
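Each entry under /var/run/netns is a zero-byte, read-only bind mount that keeps one pod sandbox's network namespace alive. With containerd, which kind uses, these entries are named cni-<UUID>. Counting them therefore gives the number of pod sandboxes per node:

- myk8s-control-plane: 5
- myk8s-worker: 4
- myk8s-worker2: 7, five of which were created at 07:27

The awk sketch below produces that summary from the loop's output; summarize_netns is a hypothetical name.

```shell
#!/usr/bin/env bash
# summarize_netns: read the "node : <name>" + `ls -l /var/run/netns` loop output on stdin
# and print "<node> <count of cni-* entries>" per node, in input order.
# Hypothetical helper; blank lines and "total N" lines are ignored.
summarize_netns() {
  awk '
    /^node : / { node = $3; order[++n] = node; count[node] = 0; next }
    $NF ~ /^cni-/ { count[node]++ }
    END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
  '
}
```

Run the loop without -it when piping, because a TTY adds carriage returns to every line: for node in control-plane worker worker2; do echo "node : myk8s-$node"; docker exec myk8s-$node ls -l /var/run/netns; done | summarize_netns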

8. Inspect network namespaces in detail (lsns -t net)


for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'lsns -t net'; echo; done
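In lsns output, every pod sandbox appears as a /pause process whose NSFS column is the matching /run/netns/cni-<UUID> file from step 7. The node's own namespace (PID 1, /sbin/init) has an empty NSFS. Pairing the two gives a PID that nsenter can use to enter a specific pod's network namespace. Below is a minimal awk sketch. It assumes lsns's default column order shown here (PID in the 4th column), and pod_netns_pids is a hypothetical name.

```shell
#!/usr/bin/env bash
# pod_netns_pids: read `lsns -t net` output on stdin and print "PID NSFS" for every
# namespace bind-mounted under /run/netns (pod sandboxes); the host namespace is skipped.
# Hypothetical helper; assumes the default lsns columns, where PID is field 4.
pod_netns_pids() {
  awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^\/run\/netns\//) { print $4, $i; break } }'
}
```

For example, run docker exec myk8s-worker lsns -t net | pod_netns_pids. Then, for one of the printed PIDs, run docker exec myk8s-worker nsenter -t <PID> -n ifconfig; net-tools was installed in step 3.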

✅ Output


node : myk8s-control-plane

NS TYPE NPROCS PID USER NETNSID NSFS COMMAND

4026533248 net 32 1 root unassigned /sbin/init

4026533441 net 2 1451 65535 1 /run/netns/cni-861f44e6-937b-4d15-8df6-757b9759cc74 /pause

4026533500 net 2 1461 65535 2 /run/netns/cni-de7ad285-b86f-3164-14f2-a220466f8aa1 /pause

4026533559 net 2 1467 65535 3 /run/netns/cni-1ad352d6-873b-0f5d-9c82-54b44e853fd7 /pause

4026533863 net 2 3526 65535 4 /run/netns/cni-8f0e1fff-31a4-bc8f-82d9-86ba81886dfd /pause

4026534128 net 2 3711 65535 5 /run/netns/cni-d348a25f-f731-b77c-dbaa-27b8d1dfa25a /pause

node : myk8s-worker

NS TYPE NPROCS PID USER NETNSID NSFS COMMAND

4026533179 net 2 2049 nobody 1 /run/netns/cni-a3b2c636-67d4-0b64-f34c-4dadb40c753a /pause

4026533313 net 19 1 root unassigned /sbin/init