Istio Week 6 Summary

☸️ Deploy k8s (1.23.17): NodePort (30000 HTTP, 30005 HTTPS)

1. Download the source code

git clone https://github.com/AcornPublishing/istio-in-action

cd istio-in-action/book-source-code-master

pwd # prints your own working directory path

# Result

/home/devshin/workspace/istio/istio-in-action/book-source-code-master

2. Create the Kind cluster

kind create cluster --name myk8s --image kindest/node:v1.23.17 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000 # Sample Application (istio-ingressgateway) HTTP
    hostPort: 30000
  - containerPort: 30001 # Prometheus
    hostPort: 30001
  - containerPort: 30002 # Grafana
    hostPort: 30002
  - containerPort: 30003 # Kiali
    hostPort: 30003
  - containerPort: 30004 # Tracing
    hostPort: 30004
  - containerPort: 30005 # Sample Application (istio-ingressgateway) HTTPS
    hostPort: 30005
  - containerPort: 30006 # TCP Route
    hostPort: 30006
  - containerPort: 30007 # kube-ops-view
    hostPort: 30007
  extraMounts: # this section is optional
  - hostPath: /home/devshin/workspace/istio/istio-in-action/book-source-code-master # set this to your own pwd path
    containerPath: /istiobook
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.0.0/22
EOF

✅ Output

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.23.17) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

3. Verify that the cluster was created

docker ps

✅ Output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2dc54e55d2af kindest/node:v1.23.17 "/usr/local/bin/entr…" About a minute ago Up About a minute 0.0.0.0:30000-30007->30000-30007/tcp, 127.0.0.1:40987->6443/tcp myk8s-control-plane

4. Install basic tools on the node

docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bridge-utils net-tools dnsutils tcpdump ngrep iputils-ping git vim -y'

✅ Output

...

Setting up bind9-libs:amd64 (1:9.18.33-1~deb12u2) ...

Setting up openssh-client (1:9.2p1-2+deb12u5) ...

Setting up libxext6:amd64 (2:1.3.4-1+b1) ...

Setting up dbus-daemon (1.14.10-1~deb12u1) ...

Setting up libnet1:amd64 (1.1.6+dfsg-3.2) ...

Setting up libpcap0.8:amd64 (1.10.3-1) ...

Setting up dbus (1.14.10-1~deb12u1) ...

invoke-rc.d: policy-rc.d denied execution of start.

/usr/sbin/policy-rc.d returned 101, not running 'start dbus.service'

Setting up libgdbm-compat4:amd64 (1.23-3) ...

Setting up xauth (1:1.1.2-1) ...

Setting up bind9-host (1:9.18.33-1~deb12u2) ...

Setting up libperl5.36:amd64 (5.36.0-7+deb12u2) ...

Setting up tcpdump (4.99.3-1) ...

Setting up ngrep (1.47+ds1-5+b1) ...

Setting up perl (5.36.0-7+deb12u2) ...

Setting up bind9-dnsutils (1:9.18.33-1~deb12u2) ...

Setting up dnsutils (1:9.18.33-1~deb12u2) ...

Setting up liberror-perl (0.17029-2) ...

Setting up git (1:2.39.5-0+deb12u2) ...

Processing triggers for libc-bin (2.36-9+deb12u4) ...

🛡️ Install Istio 1.17.8

1. Enter myk8s-control-plane

docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/#

2. (Optional) Verify the mounted code files

root@myk8s-control-plane:/# tree /istiobook/ -L 1

✅ Output

/istiobook/

|-- 2025-04-27-190930_1_roundrobin.json

|-- 2025-04-27-191213_2_roundrobin.json

|-- 2025-04-27-191803_3_random.json

|-- 2025-04-27-220131_4_random.json

|-- 2025-04-27-221302_5_least_conn.json

|-- README.md

|-- appendices

|-- bin

|-- ch10

|-- ch11

|-- ch12

|-- ch13

|-- ch14

|-- ch2

|-- ch3

|-- ch4

|-- ch5

|-- ch6

|-- ch7

|-- ch8

|-- ch9

|-- forum-2.json

|-- prom-values-2.yaml

|-- services

`-- webapp-routes.json

17 directories, 9 files

3. Install istioctl

root@myk8s-control-plane:/# export ISTIOV=1.17.8

echo 'export ISTIOV=1.17.8' >> /root/.bashrc

curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV sh -

cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl

istioctl version --remote=false

✅ Output

Downloading istio-1.17.8 from https://github.com/istio/istio/releases/download/1.17.8/istio-1.17.8-linux-amd64.tar.gz ...

Istio 1.17.8 download complete!

The Istio release archive has been downloaded to the istio-1.17.8 directory.

To configure the istioctl client tool for your workstation,

add the /istio-1.17.8/bin directory to your environment path variable with:

export PATH="$PATH:/istio-1.17.8/bin"

Begin the Istio pre-installation check by running:

istioctl x precheck

Try Istio in ambient mode

https://istio.io/latest/docs/ambient/getting-started/

Try Istio in sidecar mode

https://istio.io/latest/docs/setup/getting-started/

Install guides for ambient mode

https://istio.io/latest/docs/ambient/install/

Install guides for sidecar mode

https://istio.io/latest/docs/setup/install/

Need more information? Visit https://istio.io/latest/docs/

1.17.8

4. Deploy the Istio control plane with the demo profile

With this profile, the debugging level is raised.

root@myk8s-control-plane:/# istioctl install --set profile=demo --set values.global.proxy.privileged=true -y

✅ Output

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Egress gateways installed

✔ Installation complete Making this installation the default for injection and validation.

Thank you for installing Istio 1.17. Please take a few minutes to tell us about your install/upgrade experience! https://forms.gle/hMHGiwZHPU7UQRWe9

5. Install the add-on tools

kubectl apply -f istio-$ISTIOV/samples/addons

✅ Output

serviceaccount/grafana created

configmap/grafana created

service/grafana created

deployment.apps/grafana created

configmap/istio-grafana-dashboards created

configmap/istio-services-grafana-dashboards created

deployment.apps/jaeger created

service/tracing created

service/zipkin created

service/jaeger-collector created

serviceaccount/kiali created

configmap/kiali created

clusterrole.rbac.authorization.k8s.io/kiali-viewer created

clusterrole.rbac.authorization.k8s.io/kiali created

clusterrolebinding.rbac.authorization.k8s.io/kiali created

role.rbac.authorization.k8s.io/kiali-controlplane created

rolebinding.rbac.authorization.k8s.io/kiali-controlplane created

service/kiali created

deployment.apps/kiali created

serviceaccount/prometheus created

configmap/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

deployment.apps/prometheus created

6. Exit the control-plane container

root@myk8s-control-plane:/# exit

exit

7. Check the installed resources

(1) List all resources in the Istio system namespace

kubectl get all,svc,ep,sa,cm,secret,pdb -n istio-system

✅ Output

NAME READY STATUS RESTARTS AGE

pod/grafana-b854c6c8-p55xh 1/1 Running 0 64s

pod/istio-egressgateway-85df6b84b7-md4m2 1/1 Running 0 2m3s

pod/istio-ingressgateway-6bb8fb6549-2mlk2 1/1 Running 0 2m3s

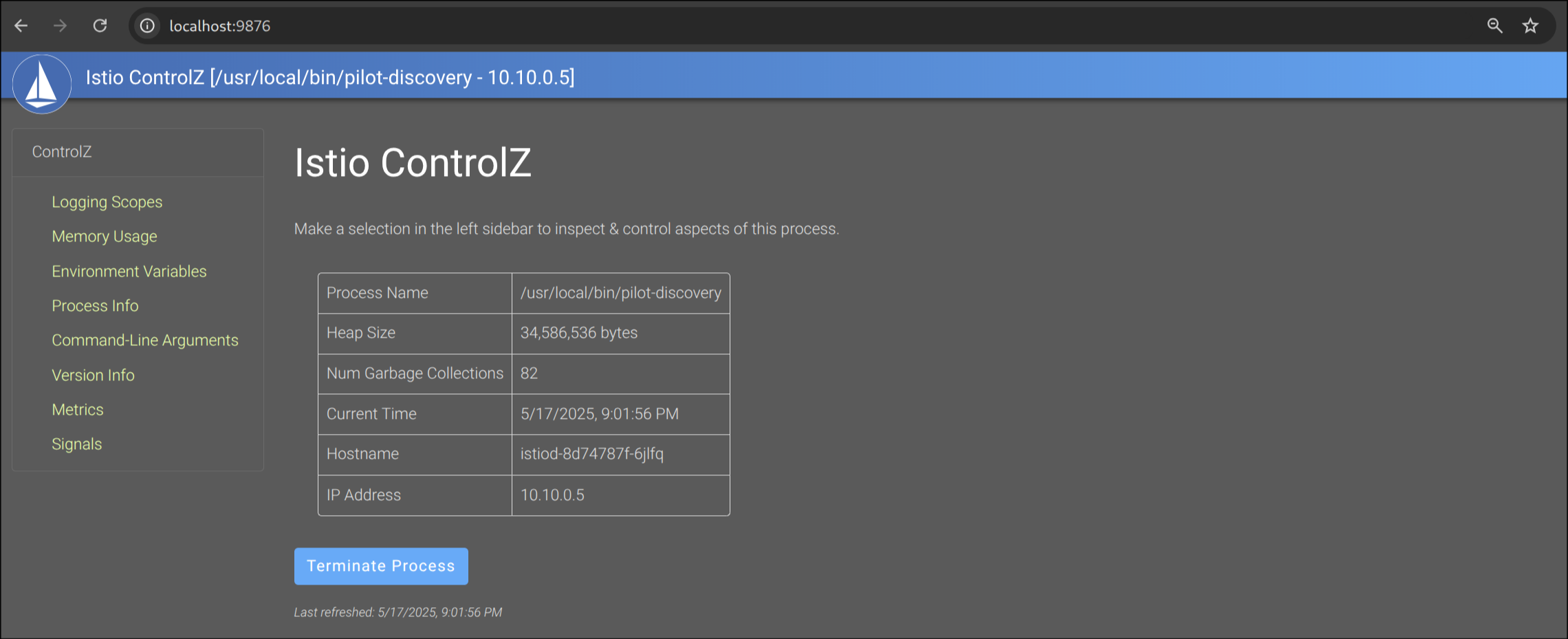

pod/istiod-8d74787f-6jlfq 1/1 Running 0 2m15s

pod/jaeger-5556cd8fcf-gfjtt 1/1 Running 0 64s

pod/kiali-648847c8c4-dsq49 1/1 Running 0 64s

pod/prometheus-7b8b9dd44c-lppsf 2/2 Running 0 64s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana ClusterIP 10.200.1.188 <none> 3000/TCP 64s

service/istio-egressgateway ClusterIP 10.200.3.180 <none> 80/TCP,443/TCP 2m3s

service/istio-ingressgateway LoadBalancer 10.200.0.6 <pending> 15021:31287/TCP,80:32484/TCP,443:32372/TCP,31400:30235/TCP,15443:31757/TCP 2m3s

service/istiod ClusterIP 10.200.2.22 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 2m15s

service/jaeger-collector ClusterIP 10.200.1.90 <none> 14268/TCP,14250/TCP,9411/TCP 64s

service/kiali ClusterIP 10.200.1.108 <none> 20001/TCP,9090/TCP 64s

service/prometheus ClusterIP 10.200.0.173 <none> 9090/TCP 64s

service/tracing ClusterIP 10.200.3.237 <none> 80/TCP,16685/TCP 64s

service/zipkin ClusterIP 10.200.3.199 <none> 9411/TCP 64s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana 1/1 1 1 64s

deployment.apps/istio-egressgateway 1/1 1 1 2m3s

deployment.apps/istio-ingressgateway 1/1 1 1 2m3s

deployment.apps/istiod 1/1 1 1 2m15s

deployment.apps/jaeger 1/1 1 1 64s

deployment.apps/kiali 1/1 1 1 64s

deployment.apps/prometheus 1/1 1 1 64s

NAME DESIRED CURRENT READY AGE

replicaset.apps/grafana-b854c6c8 1 1 1 64s

replicaset.apps/istio-egressgateway-85df6b84b7 1 1 1 2m3s

replicaset.apps/istio-ingressgateway-6bb8fb6549 1 1 1 2m3s

replicaset.apps/istiod-8d74787f 1 1 1 2m15s

replicaset.apps/jaeger-5556cd8fcf 1 1 1 64s

replicaset.apps/kiali-648847c8c4 1 1 1 64s

replicaset.apps/prometheus-7b8b9dd44c 1 1 1 64s

NAME ENDPOINTS AGE

endpoints/grafana 10.10.0.9:3000 64s

endpoints/istio-egressgateway 10.10.0.7:8080,10.10.0.7:8443 2m3s

endpoints/istio-ingressgateway 10.10.0.6:15443,10.10.0.6:15021,10.10.0.6:31400 + 2 more... 2m3s

endpoints/istiod 10.10.0.5:15012,10.10.0.5:15010,10.10.0.5:15017 + 1 more... 2m15s

endpoints/jaeger-collector 10.10.0.8:9411,10.10.0.8:14250,10.10.0.8:14268 64s

endpoints/kiali 10.10.0.10:9090,10.10.0.10:20001 64s

endpoints/prometheus 10.10.0.11:9090 64s

endpoints/tracing 10.10.0.8:16685,10.10.0.8:16686 64s

endpoints/zipkin 10.10.0.8:9411 64s

NAME SECRETS AGE

serviceaccount/default 1 2m17s

serviceaccount/grafana 1 64s

serviceaccount/istio-egressgateway-service-account 1 2m3s

serviceaccount/istio-ingressgateway-service-account 1 2m3s

serviceaccount/istio-reader-service-account 1 2m16s

serviceaccount/istiod 1 2m16s

serviceaccount/istiod-service-account 1 2m16s

serviceaccount/kiali 1 64s

serviceaccount/prometheus 1 64s

NAME DATA AGE

configmap/grafana 4 64s

configmap/istio 2 2m15s

configmap/istio-ca-root-cert 1 2m5s

configmap/istio-gateway-deployment-leader 0 2m5s

configmap/istio-gateway-status-leader 0 2m5s

configmap/istio-grafana-dashboards 2 64s

configmap/istio-leader 0 2m5s

configmap/istio-namespace-controller-election 0 2m5s

configmap/istio-services-grafana-dashboards 4 64s

configmap/istio-sidecar-injector 2 2m15s

configmap/kiali 1 64s

configmap/kube-root-ca.crt 1 2m17s

configmap/prometheus 5 64s

NAME TYPE DATA AGE

secret/default-token-ssdgn kubernetes.io/service-account-token 3 2m17s

secret/grafana-token-4st5q kubernetes.io/service-account-token 3 64s

secret/istio-ca-secret istio.io/ca-root 5 2m5s

secret/istio-egressgateway-service-account-token-6g9gg kubernetes.io/service-account-token 3 2m3s

secret/istio-ingressgateway-service-account-token-9nxhn kubernetes.io/service-account-token 3 2m3s

secret/istio-reader-service-account-token-b5s9x kubernetes.io/service-account-token 3 2m16s

secret/istiod-service-account-token-gsss5 kubernetes.io/service-account-token 3 2m16s

secret/istiod-token-vp4bk kubernetes.io/service-account-token 3 2m16s

secret/kiali-token-fks7p kubernetes.io/service-account-token 3 64s

secret/prometheus-token-54xwl kubernetes.io/service-account-token 3 64s

NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

poddisruptionbudget.policy/istio-egressgateway 1 N/A 0 2m3s

poddisruptionbudget.policy/istio-ingressgateway 1 N/A 0 2m3s

poddisruptionbudget.policy/istiod 1 N/A 0 2m15s

(2) List the Istio-related CRDs

kubectl get crd | grep istio.io | sort

✅ Output

authorizationpolicies.security.istio.io 2025-05-17T03:26:25Z

destinationrules.networking.istio.io 2025-05-17T03:26:25Z

envoyfilters.networking.istio.io 2025-05-17T03:26:25Z

gateways.networking.istio.io 2025-05-17T03:26:25Z

istiooperators.install.istio.io 2025-05-17T03:26:25Z

peerauthentications.security.istio.io 2025-05-17T03:26:25Z

proxyconfigs.networking.istio.io 2025-05-17T03:26:25Z

requestauthentications.security.istio.io 2025-05-17T03:26:25Z

serviceentries.networking.istio.io 2025-05-17T03:26:25Z

sidecars.networking.istio.io 2025-05-17T03:26:25Z

telemetries.telemetry.istio.io 2025-05-17T03:26:25Z

virtualservices.networking.istio.io 2025-05-17T03:26:25Z

wasmplugins.extensions.istio.io 2025-05-17T03:26:25Z

workloadentries.networking.istio.io 2025-05-17T03:26:25Z

workloadgroups.networking.istio.io 2025-05-17T03:26:25Z

8. Set up the namespace for the exercises

kubectl create ns istioinaction

kubectl label namespace istioinaction istio-injection=enabled

kubectl get ns --show-labels

✅ Output

namespace/istioinaction created

namespace/istioinaction labeled

NAME STATUS AGE LABELS

default Active 10m kubernetes.io/metadata.name=default

istio-system Active 4m11s kubernetes.io/metadata.name=istio-system

istioinaction Active 1s istio-injection=enabled,kubernetes.io/metadata.name=istioinaction

kube-node-lease Active 10m kubernetes.io/metadata.name=kube-node-lease

kube-public Active 10m kubernetes.io/metadata.name=kube-public

kube-system Active 10m kubernetes.io/metadata.name=kube-system

local-path-storage Active 10m kubernetes.io/metadata.name=local-path-storage

9. Change the istio-ingressgateway NodePort and traffic policy

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 8080, "nodePort": 30000}]}}'

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 443, "targetPort": 8443, "nodePort": 30005}]}}'

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec":{"externalTrafficPolicy": "Local"}}'

kubectl describe svc -n istio-system istio-ingressgateway

✅ Output

service/istio-ingressgateway patched

service/istio-ingressgateway patched

service/istio-ingressgateway patched

Name: istio-ingressgateway

Namespace: istio-system

Labels: app=istio-ingressgateway

install.operator.istio.io/owning-resource=unknown

install.operator.istio.io/owning-resource-namespace=istio-system

istio=ingressgateway

istio.io/rev=default

operator.istio.io/component=IngressGateways

operator.istio.io/managed=Reconcile

operator.istio.io/version=1.17.8

release=istio

Annotations: <none>

Selector: app=istio-ingressgateway,istio=ingressgateway

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.200.0.6

IPs: 10.200.0.6

Port: status-port 15021/TCP

TargetPort: 15021/TCP

NodePort: status-port 31287/TCP

Endpoints: 10.10.0.6:15021

Port: http2 80/TCP

TargetPort: 8080/TCP

NodePort: http2 30000/TCP

Endpoints: 10.10.0.6:8080

Port: https 443/TCP

TargetPort: 8443/TCP

NodePort: https 30005/TCP

Endpoints: 10.10.0.6:8443

Port: tcp 31400/TCP

TargetPort: 31400/TCP

NodePort: tcp 30235/TCP

Endpoints: 10.10.0.6:31400

Port: tls 15443/TCP

TargetPort: 15443/TCP

NodePort: tls 31757/TCP

Endpoints: 10.10.0.6:15443

Session Affinity: None

External Traffic Policy: Local

Internal Traffic Policy: Cluster

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Type 0s service-controller LoadBalancer -> NodePort

10. Istio observability tools: patch the NodePort service ports in bulk

kubectl patch svc -n istio-system prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}'

kubectl patch svc -n istio-system grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}'

kubectl patch svc -n istio-system kiali -p '{"spec": {"type": "NodePort", "ports": [{"port": 20001, "targetPort": 20001, "nodePort": 30003}]}}'

kubectl patch svc -n istio-system tracing -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 16686, "nodePort": 30004}]}}'

✅ Output

service/prometheus patched

service/grafana patched

service/kiali patched

service/tracing patched
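The four patch commands above share one JSON template and differ only in the three port numbers. As a minimal sketch, assuming a hypothetical helper named nodeport_patch (it is not part of kubectl or Istio), the patch body could be generated like this:

```shell
#!/bin/sh
# Hypothetical helper (not part of kubectl or Istio): prints the JSON patch body
# used in the commands above for a service port, a target port, and a NodePort.
nodeport_patch() {
  printf '{"spec": {"type": "NodePort", "ports": [{"port": %s, "targetPort": %s, "nodePort": %s}]}}\n' "$1" "$2" "$3"
}

# Example: the patch body for Prometheus (port 9090, exposed on NodePort 30001).
nodeport_patch 9090 9090 30001
```

It could then be passed to the same command as before, for example: kubectl patch svc -n istio-system prometheus -p "$(nodeport_patch 9090 9090 30001)"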

❌ The most common mistake: a misconfigured data plane

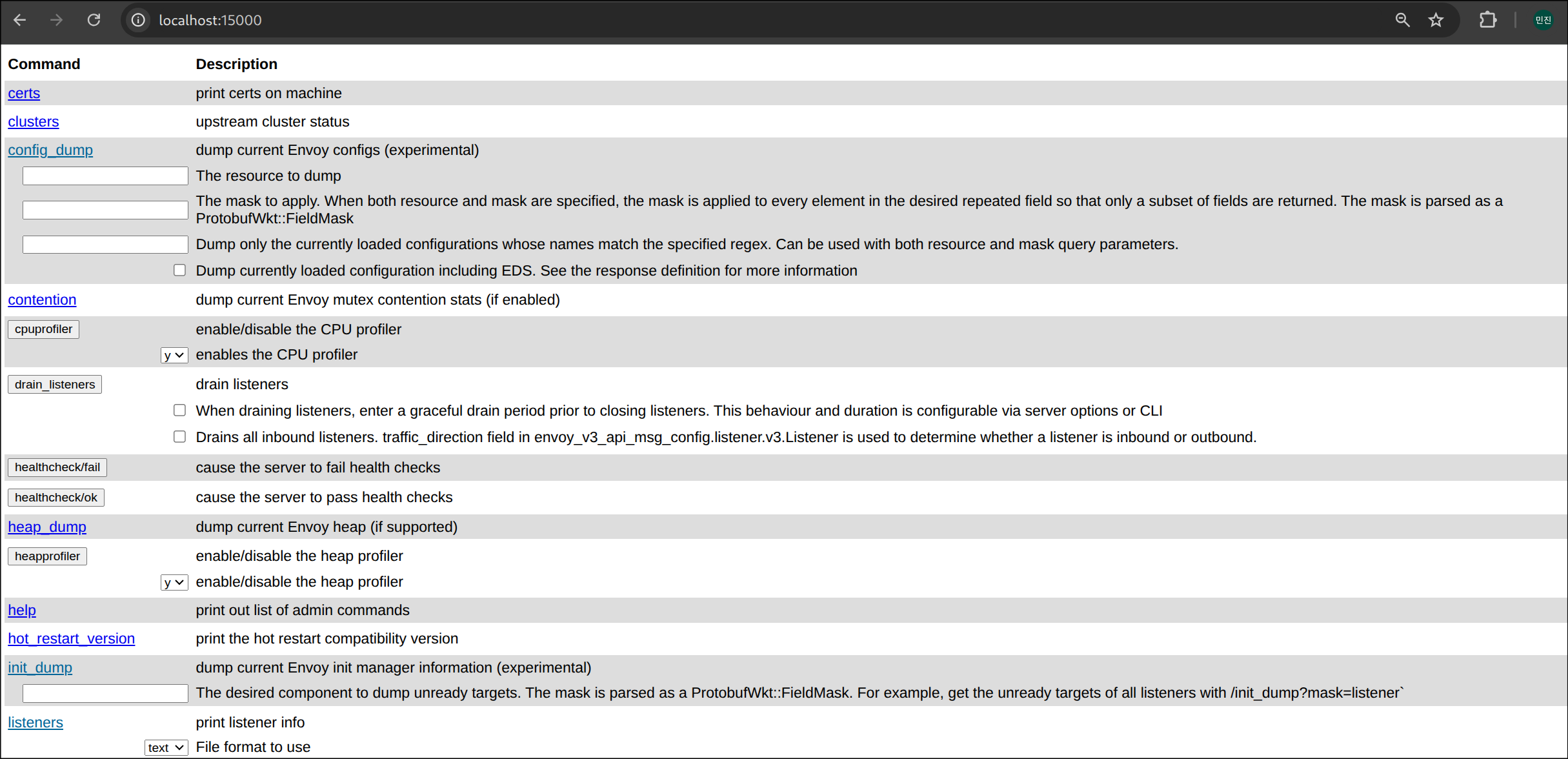

1. Deploy the sample application

kubectl apply -f services/catalog/kubernetes/catalog.yaml -n istioinaction # deploy catalog v1

kubectl apply -f ch10/catalog-deployment-v2.yaml -n istioinaction # deploy catalog v2

kubectl apply -f ch10/catalog-gateway.yaml -n istioinaction # deploy catalog-gateway

kubectl apply -f ch10/catalog-virtualservice-subsets-v1-v2.yaml -n istioinaction

✅ Output

serviceaccount/catalog created

service/catalog created

deployment.apps/catalog created

deployment.apps/catalog-v2 created

gateway.networking.istio.io/catalog-gateway created

virtualservice.networking.istio.io/catalog-v1-v2 created

2. Review the Gateway resource configuration

cat ch10/catalog-gateway.yaml

✅ Output

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: catalog-gateway
  namespace: istioinaction
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - "catalog.istioinaction.io"
    port:
      number: 80
      name: http
      protocol: HTTP

3. Review the VirtualService resource configuration

cat ch10/catalog-virtualservice-subsets-v1-v2.yaml

✅ Output

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: catalog-v1-v2
  namespace: istioinaction
spec:
  hosts:
  - "catalog.istioinaction.io"
  gateways:
  - "catalog-gateway"
  http:
  - route:
    - destination:
        host: catalog.istioinaction.svc.cluster.local
        subset: version-v1
        port:
          number: 80
      weight: 20
    - destination:
        host: catalog.istioinaction.svc.cluster.local
        subset: version-v2
        port:
          number: 80
      weight: 80

4. Check the Deployment and Service status

kubectl get deploy,svc -n istioinaction

✅ Output

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/catalog 1/1 1 1 116s

deployment.apps/catalog-v2 2/2 2 2 116s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/catalog ClusterIP 10.200.3.2 <none> 80/TCP 116s

5. Check the Gateway and VirtualService status

kubectl get gw,vs -n istioinaction

✅ Output

NAME AGE

gateway.networking.istio.io/catalog-gateway 2m47s

NAME GATEWAYS HOSTS AGE

virtualservice.networking.istio.io/catalog-v1-v2 ["catalog-gateway"] ["catalog.istioinaction.io"] 2m47s

6. Check the ingress gateway logs

NC (NoClusterFound): upstream cluster not found

kubectl logs -n istio-system -l app=istio-ingressgateway -f

✅ Output

2025-05-17T03:26:46.438741Z info cache Root cert has changed, start rotating root cert

2025-05-17T03:26:46.438764Z info ads XDS: Incremental Pushing:0 ConnectedEndpoints:2 Version:

2025-05-17T03:26:46.438815Z info cache returned workload certificate from cache ttl=23h59m59.561192303s

2025-05-17T03:26:46.438818Z info cache returned workload trust anchor from cache ttl=23h59m59.561184436s

2025-05-17T03:26:46.438914Z info cache returned workload trust anchor from cache ttl=23h59m59.561087612s

2025-05-17T03:26:46.438969Z info ads SDS: PUSH request for node:istio-ingressgateway-6bb8fb6549-2mlk2.istio-system resources:1 size:4.0kB resource:default

2025-05-17T03:26:46.439063Z info ads SDS: PUSH request for node:istio-ingressgateway-6bb8fb6549-2mlk2.istio-system resources:1 size:1.1kB resource:ROOTCA

2025-05-17T03:26:46.439117Z info cache returned workload trust anchor from cache ttl=23h59m59.560887326s

2025-05-17T03:26:47.984580Z info Readiness succeeded in 1.922836442s

2025-05-17T03:26:47.984873Z info Envoy proxy is ready

7. Call the application endpoint repeatedly

for i in {1..100}; do curl http://catalog.istioinaction.io:30000/items -w "\nStatus Code %{http_code}\n"; sleep .5; done

✅ Output

Status Code 503

Status Code 503

Status Code 503

...

Ingress gateway access log:

[2025-05-17T03:40:27.455Z] "GET /items HTTP/1.1" 503 NC cluster_not_found - "-" 0 0 0 - "172.18.0.1" "curl/8.13.0" "4cf735c9-d09b-9769-9bf0-83fefa1b6931" "catalog.istioinaction.io:30000" "-" - - 10.10.0.6:8080 172.18.0.1:40804 - -

[2025-05-17T03:40:27.970Z] "GET /items HTTP/1.1" 503 NC cluster_not_found - "-" 0 0 0 - "172.18.0.1" "curl/8.13.0" "4f9c451d-9cd4-9508-bb67-2773fc37adbd" "catalog.istioinaction.io:30000" "-" - - 10.10.0.6:8080 172.18.0.1:40808 - -

[2025-05-17T03:40:28.490Z] "GET /items HTTP/1.1" 503 NC cluster_not_found - "-" 0 0 0 - "172.18.0.1" "curl/8.13.0" "53485abd-ca82-9138-89ba-24edb3a24420" "catalog.istioinaction.io:30000" "-" - - 10.10.0.6:8080 172.18.0.1:40814 - -

...
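When the log is long, it can help to count requests by response code, response flag, and response code details instead of reading line by line. The following is a minimal sketch, assuming the text access-log layout shown above, where the quoted request line occupies whitespace-separated fields 2 to 4. The function name summarize_access_log is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: reads Envoy access-log lines on stdin and prints
# "<response code> <response flags> <response code details> <count>".
# Assumes the log layout shown above; a custom log format would need other field numbers.
summarize_access_log() {
  awk '$5 ~ /^[0-9][0-9][0-9]$/ { count[$5 " " $6 " " $7]++ }
       END { for (k in count) print k, count[k] }' | sort
}

# Example with two shortened lines taken from the log above.
summarize_access_log <<'LOG'
[2025-05-17T03:40:27.455Z] "GET /items HTTP/1.1" 503 NC cluster_not_found - "-" 0 0 0
[2025-05-17T03:40:27.970Z] "GET /items HTTP/1.1" 503 NC cluster_not_found - "-" 0 0 0
LOG
# prints: 503 NC cluster_not_found 2
```

Against the live cluster, the same function could be fed from kubectl logs -n istio-system -l app=istio-ingressgateway.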

🔍 Checking whether the data plane is up to date

1. Check the data plane synchronization status

docker exec -it myk8s-control-plane istioctl proxy-status

✅ Output

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

catalog-6cf4b97d-7d6gb.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-8d74787f-6jlfq 1.17.8

catalog-v2-56c97f6db-8wnq6.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-8d74787f-6jlfq 1.17.8

catalog-v2-56c97f6db-fl74q.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-8d74787f-6jlfq 1.17.8

istio-egressgateway-85df6b84b7-md4m2.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT NOT SENT istiod-8d74787f-6jlfq 1.17.8

istio-ingressgateway-6bb8fb6549-2mlk2.istio-system Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-8d74787f-6jlfq 1.17.8

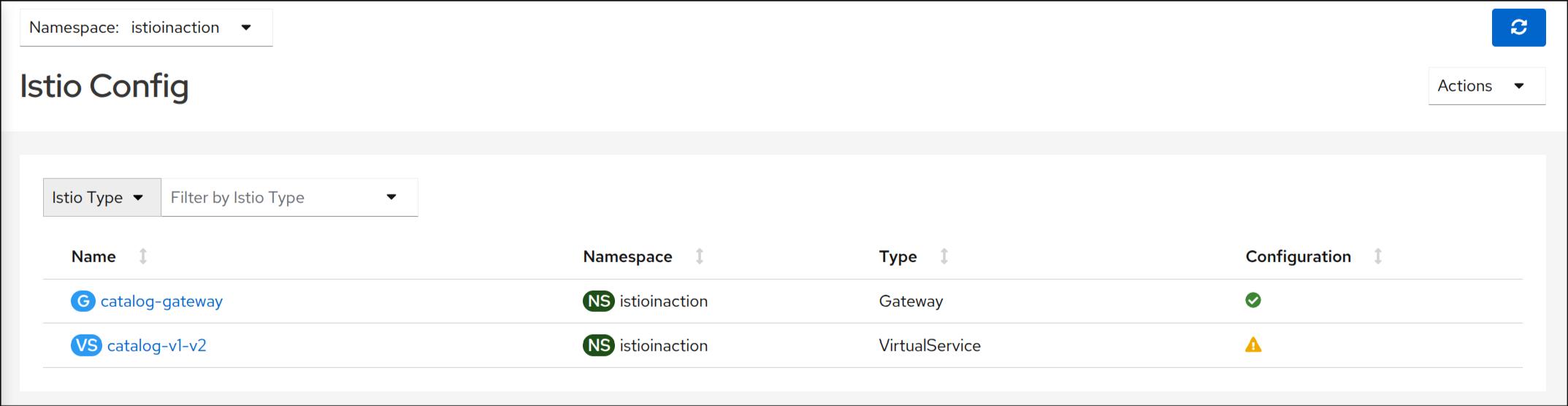

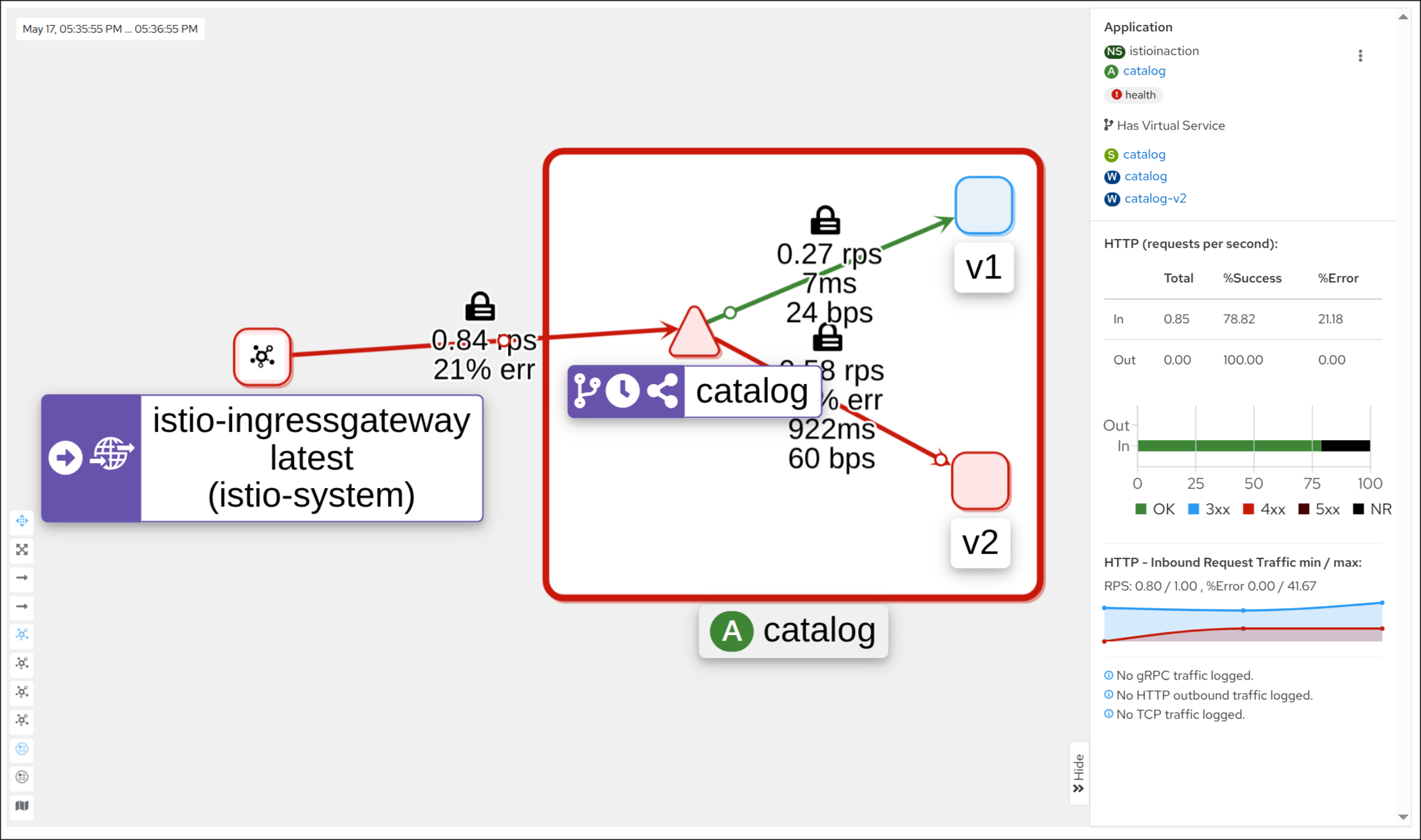
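In the output above every proxy is SYNCED (or NOT SENT), so the data plane has received the latest configuration and the 503 errors have another cause. With many workloads, scanning the table by eye gets tedious. A minimal sketch, assuming the proxy-status table layout shown above and using a made-up function name, that prints only the proxies with a STALE column:

```shell
#!/bin/sh
# Hypothetical helper: reads `istioctl proxy-status` output on stdin and prints
# the name (first column) of every proxy whose row contains STALE.
# Assumption: the header is on line 1 and no workload name contains "STALE".
find_stale_proxies() {
  awk 'NR > 1 && /STALE/ { print $1 }'
}

# Example with a made-up table: the second proxy has a stale LDS.
find_stale_proxies <<'TABLE'
NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION
a.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-x 1.17.8
b.istioinaction Kubernetes SYNCED STALE SYNCED SYNCED NOT SENT istiod-x 1.17.8
TABLE
# prints: b.istioinaction
```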

2. Identify the missing configuration: validate subsets with Kiali

Subset not found - https://v1-41.kiali.io/docs/features/validations/#kia1107---subset-not-found

⚠️ Finding misconfigurations with istioctl

1. Analyze the namespace configuration

docker exec -it myk8s-control-plane istioctl analyze -n istioinaction

✅ Output

Error [IST0101] (VirtualService istioinaction/catalog-v1-v2) Referenced host+subset in destinationrule not found: "catalog.istioinaction.svc.cluster.local+version-v1"

Error [IST0101] (VirtualService istioinaction/catalog-v1-v2) Referenced host+subset in destinationrule not found: "catalog.istioinaction.svc.cluster.local+version-v2"

Error: Analyzers found issues when analyzing namespace: istioinaction.

See https://istio.io/v1.17/docs/reference/config/analysis for more information about causes and resolutions.

https://istio.io/v1.17/docs/reference/config/analysis/

2. Check the exit code of the analyze command

echo $? # (note) 0 means success

✅ Output

79
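Because the analyze command exits non-zero when it finds issues, the exit code can act as a gate in a script or CI job. The following is a minimal sketch with a made-up wrapper named gate. It only relies on the shell's exit-status handling and makes no assumption about what a particular non-zero code means.

```shell
#!/bin/sh
# Hypothetical wrapper: runs the given command, prints PASS or FAIL with the
# exit code, and returns that same exit code to the caller.
gate() {
  if "$@"; then
    echo "PASS"
  else
    rc=$?   # in the else branch, $? still holds the exit status of the command
    echo "FAIL (exit code $rc)"
    return "$rc"
  fi
}

# Examples with stand-in commands instead of istioctl.
gate true                       # prints: PASS
gate sh -c 'exit 79' || true    # prints: FAIL (exit code 79)
```

In this lab the gated command could be docker exec myk8s-control-plane istioctl analyze -n istioinaction (without -it, since a CI job usually has no TTY).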

3. Store the catalog pod name in a variable

kubectl get pod -n istioinaction -l app=catalog -o jsonpath='{.items[0].metadata.name}'

CATALOG_POD1=$(kubectl get pod -n istioinaction -l app=catalog -o jsonpath='{.items[0].metadata.name}')

✅ Output

catalog-6cf4b97d-7d6gb

4. Inspect the Istio resources that apply to a specific pod

docker exec -it myk8s-control-plane istioctl x des pod -n istioinaction $CATALOG_POD1

✅ Output

Pod: catalog-6cf4b97d-7d6gb

Pod Revision: default

Pod Ports: 3000 (catalog), 15090 (istio-proxy)

--------------------

Service: catalog

Port: http 80/HTTP targets pod port 3000

--------------------

Effective PeerAuthentication:

Workload mTLS mode: PERMISSIVE

Exposed on Ingress Gateway http://172.18.0.2

VirtualService: catalog-v1-v2

WARNING: No destinations match pod subsets (checked 1 HTTP routes)

Warning: Route to subset version-v1 but NO DESTINATION RULE defining subsets!

Warning: Route to subset version-v2 but NO DESTINATION RULE defining subsets!

5. Review the DestinationRule configuration

cat ch10/catalog-destinationrule-v1-v2.yaml

✅ Output

---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: catalog
  namespace: istioinaction
spec:
  host: catalog.istioinaction.svc.cluster.local
  subsets:
  - name: version-v1
    labels:
      version: v1
  - name: version-v2
    labels:
      version: v2
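The root cause in this section is that the VirtualService references subsets that no DestinationRule defines. The check that istioctl analyze and Kiali perform can be approximated by comparing two lists of names. This is only a sketch of the idea, with a made-up function name, and not how either tool is implemented:

```shell
#!/bin/sh
# Hypothetical helper: prints each subset in list $1 (referenced by routes)
# that does not appear in list $2 (defined by a DestinationRule).
# Both arguments are space-separated subset names.
missing_subsets() {
  for subset in $1; do
    case " $2 " in
      *" $subset "*) ;;       # defined: nothing to report
      *) echo "$subset" ;;    # referenced but not defined
    esac
  done
}

# Example: the routes reference v1 and v2, but only v1 is defined.
missing_subsets "version-v1 version-v2" "version-v1"
# prints: version-v2
```

The two lists could come from kubectl, for instance with something like kubectl get virtualservice catalog-v1-v2 -n istioinaction -o jsonpath='{.spec.http[*].route[*].destination.subset}' and kubectl get destinationrule catalog -n istioinaction -o jsonpath='{.spec.subsets[*].name}'.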

6. Apply the DestinationRule and re-verify the configuration

kubectl apply -f ch10/catalog-destinationrule-v1-v2.yaml

docker exec -it myk8s-control-plane istioctl x des pod -n istioinaction $CATALOG_POD1

✅ Output

destinationrule.networking.istio.io/catalog created

Pod: catalog-6cf4b97d-7d6gb

Pod Revision: default

Pod Ports: 3000 (catalog), 15090 (istio-proxy)

--------------------

Service: catalog

Port: http 80/HTTP targets pod port 3000

DestinationRule: catalog for "catalog.istioinaction.svc.cluster.local"

Matching subsets: version-v1 # subsets that match this pod

(Non-matching subsets version-v2) # subsets that do not match this pod

No Traffic Policy

--------------------

Effective PeerAuthentication:

Workload mTLS mode: PERMISSIVE

Exposed on Ingress Gateway http://172.18.0.2

VirtualService: catalog-v1-v2 # the VirtualService that routes traffic to this pod

Weight 20%

7. Restore the resources after the test

kubectl delete -f ch10/catalog-destinationrule-v1-v2.yaml

# Result

destinationrule.networking.istio.io "catalog" deleted

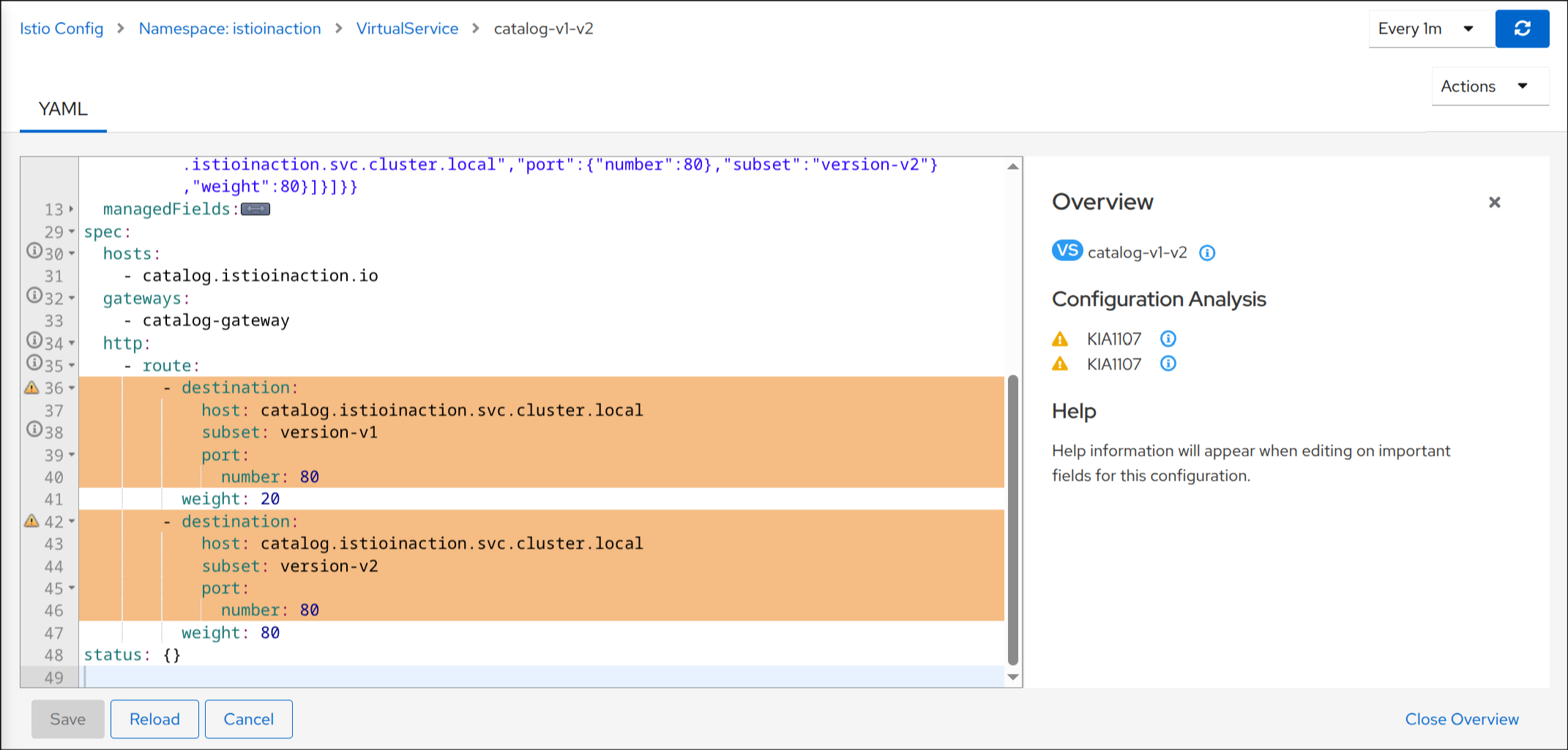

🧰 Envoy administration (admin) interface

1. Port-forward the Envoy admin interface

kubectl port-forward deploy/catalog -n istioinaction 15000:15000

# Result

Forwarding from 127.0.0.1:15000 -> 15000

Forwarding from [::1]:15000 -> 15000

2. Check the size of the full Envoy configuration dump

curl -s localhost:15000/config_dump | wc -l

✅ Output

13952

🧭 Querying the proxy configuration with istioctl

1. Check the Envoy listener configuration of the ingressgateway

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway -n istio-system

✅ Output

ADDRESS PORT MATCH DESTINATION

0.0.0.0 8080 ALL Route: http.8080 # requests on port 8080 are routed according to the route http.8080

0.0.0.0 15021 ALL Inline Route: /healthz/ready*

0.0.0.0 15090 ALL Inline Route: /stats/prometheus*

## A listener is configured on port 8080.

## Traffic arriving on that listener is routed according to the route named http.8080.

2. Check the port mapping of the ingressgateway service

kubectl get svc -n istio-system istio-ingressgateway -o yaml | grep "ports:" -A10

✅ Output

  ports:
  - name: status-port
    nodePort: 31287
    port: 15021
    protocol: TCP
    targetPort: 15021
  - name: http2
    nodePort: 30000
    port: 80
    protocol: TCP
    targetPort: 8080

📍 Querying the Envoy route configuration

1. Check the http.8080 route configuration

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway -n istio-system --name http.8080

✅ Output

NAME DOMAINS MATCH VIRTUAL SERVICE

http.8080 catalog.istioinaction.io /* catalog-v1-v2.istioinaction

2. Check the routing details (JSON)

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway -n istio-system --name http.8080 -o json

✅ Output

[
  {
    "name": "http.8080",
    "virtualHosts": [
      {
        "name": "catalog.istioinaction.io:80",
        "domains": [
          "catalog.istioinaction.io"
        ],
        "routes": [
          {
            "match": {
              "prefix": "/" # the routing rule that a request must match
            },
            "route": {
              "weightedClusters": {
                "clusters": [ # clusters that receive the traffic when the rule matches
                  {
                    "name": "outbound|80|version-v1|catalog.istioinaction.svc.cluster.local",
                    "weight": 20
                  },
                  {
                    "name": "outbound|80|version-v2|catalog.istioinaction.svc.cluster.local",
                    "weight": 80
                  }
                ],
                "totalWeight": 100
              },
              "timeout": "0s",
              "retryPolicy": {
                "retryOn": "connect-failure,refused-stream,unavailable,cancelled,retriable-status-codes",
                "numRetries": 2,
                "retryHostPredicate": [
                  {
                    "name": "envoy.retry_host_predicates.previous_hosts",
                    "typedConfig": {
                      "@type": "type.googleapis.com/envoy.extensions.retry.host.previous_hosts.v3.PreviousHostsPredicate"
                    }
                  }
                ],
                "hostSelectionRetryMaxAttempts": "5",
                "retriableStatusCodes": [
                  503
                ]
              },
              "maxGrpcTimeout": "0s"
            },
            "metadata": {
              "filterMetadata": {
                "istio": {
                  "config": "/apis/networking.istio.io/v1alpha3/namespaces/istioinaction/virtual-service/catalog-v1-v2"
                }
              }
            },
            "decorator": {
              "operation": "catalog-v1-v2:80/*"
            }
          }
        ],
        "includeRequestAttemptCount": true
      }
    ],
    "validateClusters": false,
    "ignorePortInHostMatching": true
  }
]
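The cluster names in the route follow the pattern direction|port|subset|FQDN, which is why the next step filters clusters by FQDN, port, and subset. A minimal sketch, using a made-up function name, that splits such a name into its parts:

```shell
#!/bin/sh
# Hypothetical helper: splits an Envoy cluster name of the form
# <direction>|<port>|<subset>|<fqdn> and prints its parts.
# An empty subset (as in "outbound|80||host") is shown as "-".
parse_cluster_name() {
  printf '%s\n' "$1" | awk -F'|' '{
    printf "direction=%s port=%s subset=%s fqdn=%s\n", $1, $2, ($3 == "" ? "-" : $3), $4
  }'
}

parse_cluster_name 'outbound|80|version-v1|catalog.istioinaction.svc.cluster.local'
# prints: direction=outbound port=80 subset=version-v1 fqdn=catalog.istioinaction.svc.cluster.local
```

The route is valid only if Envoy also has a cluster with exactly that name. With no DestinationRule defining version-v1 and version-v2, those clusters do not exist, which matches the 503 NC responses seen earlier.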

🧬 Querying the Envoy cluster configuration

1. Query the base cluster configuration (without a subset)

docker exec -it myk8s-control-plane istioctl proxy-config clusters deploy/istio-ingressgateway -n istio-system \

--fqdn catalog.istioinaction.svc.cluster.local --port 80

✅ Output

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

catalog.istioinaction.svc.cluster.local 80 - outbound EDS

2. Query the cluster for a specific subset

docker exec -it myk8s-control-plane istioctl proxy-config clusters deploy/istio-ingressgateway -n istio-system \

--fqdn catalog.istioinaction.svc.cluster.local --port 80 --subset version-v1

✅ Output

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

The result is empty: no DestinationRule that defines the subsets exists yet.

3. Review the DestinationRule definition (the YAML that will be applied)

docker exec -it myk8s-control-plane cat /istiobook/ch10/catalog-destinationrule-v1-v2.yaml

✅ Output

---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: catalog
  namespace: istioinaction
spec:
  host: catalog.istioinaction.svc.cluster.local
  subsets:
  - name: version-v1
    labels:
      version: v1
  - name: version-v2
    labels:
      version: v2

4. Analyze the configuration file in advance to check for errors

The istioctl analyze <yaml> command validates a configuration before it is applied.

docker exec -it myk8s-control-plane istioctl analyze /istiobook/ch10/catalog-destinationrule-v1-v2.yaml -n istioinaction

✅ Output

✔ No validation issues found when analyzing /istiobook/ch10/catalog-destinationrule-v1-v2.yaml.

5. Apply the DestinationRule

kubectl apply -f ch10/catalog-destinationrule-v1-v2.yaml

# Result

destinationrule.networking.istio.io/catalog created

6. Query the clusters again to confirm the subsets are reflected

docker exec -it myk8s-control-plane istioctl proxy-config clusters deploy/istio-ingressgateway -n istio-system \

--fqdn catalog.istioinaction.svc.cluster.local --port 80

✅ Output

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

catalog.istioinaction.svc.cluster.local 80 - outbound EDS catalog.istioinaction

catalog.istioinaction.svc.cluster.local 80 version-v1 outbound EDS catalog.istioinaction

catalog.istioinaction.svc.cluster.local 80 version-v2 outbound EDS catalog.istioinaction

7. Re-check the routing state from the pod's perspective

The istioctl x describe pod command checks how the VirtualService and DestinationRule are linked.

CATALOG_POD1=$(kubectl get pod -n istioinaction -l app=catalog -o jsonpath='{.items[0].metadata.name}')

docker exec -it myk8s-control-plane istioctl x des pod -n istioinaction $CATALOG_POD1

✅ Output

Pod: catalog-6cf4b97d-7d6gb

Pod Revision: default

Pod Ports: 3000 (catalog), 15090 (istio-proxy)

--------------------

Service: catalog

Port: http 80/HTTP targets pod port 3000

DestinationRule: catalog for "catalog.istioinaction.svc.cluster.local"

Matching subsets: version-v1

(Non-matching subsets version-v2)

No Traffic Policy

--------------------

Effective PeerAuthentication:

Workload mTLS mode: PERMISSIVE

Exposed on Ingress Gateway http://172.18.0.2

VirtualService: catalog-v1-v2

Weight 20%

8. Re-analyze the whole namespace

docker exec -it myk8s-control-plane istioctl analyze -n istioinaction

✅ Output

✔ No validation issues found when analyzing namespace: istioinaction.

9. Call the application to verify that the problem is resolved

curl http://catalog.istioinaction.io:30000/items

✅ Output

[
  {
    "id": 1,
    "color": "amber",
    "department": "Eyewear",
    "name": "Elinor Glasses",
    "price": "282.00"
  },
  {
    "id": 2,
    "color": "cyan",
    "department": "Clothing",
    "name": "Atlas Shirt",
    "price": "127.00"
  },
  {
    "id": 3,
    "color": "teal",
    "department": "Clothing",
    "name": "Small Metal Shoes",
    "price": "232.00"
  },
  {
    "id": 4,
    "color": "red",
    "department": "Watches",
    "name": "Red Dragon Watch",
    "price": "232.00"
  }
]

⚙️ How are clusters configured?

Query the detailed Envoy cluster configuration (JSON output)

docker exec -it myk8s-control-plane istioctl proxy-config clusters deploy/istio-ingressgateway -n istio-system \
  --fqdn catalog.istioinaction.svc.cluster.local --port 80 --subset version-v1 -o json

✅ Output

[

{

"transportSocketMatches": [

{

"name": "tlsMode-istio",

"match": {

"tlsMode": "istio"

},

"transportSocket": {

"name": "envoy.transport_sockets.tls",

"typedConfig": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext",

"commonTlsContext": {

"tlsParams": {

"tlsMinimumProtocolVersion": "TLSv1_2",

"tlsMaximumProtocolVersion": "TLSv1_3"

},

"tlsCertificateSdsSecretConfigs": [

{

"name": "default",

"sdsConfig": {

"apiConfigSource": {

"apiType": "GRPC",

"transportApiVersion": "V3",

"grpcServices": [

{

"envoyGrpc": {

"clusterName": "sds-grpc"

}

}

],

"setNodeOnFirstMessageOnly": true

},

"initialFetchTimeout": "0s",

"resourceApiVersion": "V3"

}

}

],

"combinedValidationContext": {

"defaultValidationContext": {

"matchSubjectAltNames": [

{

"exact": "spiffe://cluster.local/ns/istioinaction/sa/catalog"

}

]

},

"validationContextSdsSecretConfig": {

"name": "ROOTCA",

"sdsConfig": {

"apiConfigSource": {

"apiType": "GRPC",

"transportApiVersion": "V3",

"grpcServices": [

{

"envoyGrpc": {

"clusterName": "sds-grpc"

}

}

],

"setNodeOnFirstMessageOnly": true

},

"initialFetchTimeout": "0s",

"resourceApiVersion": "V3"

}

}

},

"alpnProtocols": [

"istio-peer-exchange",

"istio"

]

},

"sni": "outbound_.80_.version-v1_.catalog.istioinaction.svc.cluster.local"

}

}

},

{

"name": "tlsMode-disabled",

"match": {},

"transportSocket": {

"name": "envoy.transport_sockets.raw_buffer",

"typedConfig": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.raw_buffer.v3.RawBuffer"

}

}

}

],

"name": "outbound|80|version-v1|catalog.istioinaction.svc.cluster.local",

"type": "EDS",

"edsClusterConfig": {

"edsConfig": {

"ads": {},

"initialFetchTimeout": "0s",

"resourceApiVersion": "V3"

},

"serviceName": "outbound|80|version-v1|catalog.istioinaction.svc.cluster.local"

},

"connectTimeout": "10s",

"lbPolicy": "LEAST_REQUEST",

"circuitBreakers": {

"thresholds": [

{

"maxConnections": 4294967295,

"maxPendingRequests": 4294967295,

"maxRequests": 4294967295,

"maxRetries": 4294967295,

"trackRemaining": true

}

]

},

"commonLbConfig": {

"localityWeightedLbConfig": {}

},

"metadata": {

"filterMetadata": {

"istio": {

"config": "/apis/networking.istio.io/v1alpha3/namespaces/istioinaction/destination-rule/catalog",

"default_original_port": 80,

"services": [

{

"host": "catalog.istioinaction.svc.cluster.local",

"name": "catalog",

"namespace": "istioinaction"

}

],

"subset": "version-v1"

}

}

},

"filters": [

{

"name": "istio.metadata_exchange",

"typedConfig": {

"@type": "type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange",

"protocol": "istio-peer-exchange"

}

}

]

}

]

🛰️ Querying Envoy cluster endpoints

1. Check the actual endpoints Envoy routes to

docker exec -it myk8s-control-plane istioctl proxy-config endpoints deploy/istio-ingressgateway -n istio-system \
  --cluster "outbound|80|version-v1|catalog.istioinaction.svc.cluster.local"

✅ Output

ENDPOINT STATUS OUTLIER CHECK CLUSTER

10.10.0.12:3000 HEALTHY OK outbound|80|version-v1|catalog.istioinaction.svc.cluster.local

2. Verify that a workload exists for the endpoint IP

kubectl get pod -n istioinaction --field-selector status.podIP=10.10.0.12 -owide --show-labels

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS

catalog-6cf4b97d-7d6gb 2/2 Running 0 3h50m 10.10.0.12 myk8s-control-plane <none> <none> app=catalog,pod-template-hash=6cf4b97d,security.istio.io/tlsMode=istio,service.istio.io/canonical-name=catalog,service.istio.io/canonical-revision=v1,version=v1

The workload exists, and the Envoy configuration is now complete, so traffic is routed to it.
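The value passed to --cluster is not arbitrary: as the outputs above show, Istio names each Envoy cluster direction|port|subset|FQDN, with the subset left empty when none applies. The helper below is only a sketch for assembling that name instead of typing it by hand. envoy_cluster_name is a hypothetical function name and is not part of istioctl.

```shell
# Hypothetical helper (not part of istioctl): build an Envoy cluster name.
# Pattern seen in the outputs above: direction|port|subset|FQDN
envoy_cluster_name() {
  # $1 = direction, $2 = port, $3 = subset (may be empty), $4 = service FQDN
  printf '%s|%s|%s|%s\n' "$1" "$2" "$3" "$4"
}

# Example usage (needs the kind cluster from this post):
# docker exec -it myk8s-control-plane istioctl proxy-config endpoints deploy/istio-ingressgateway -n istio-system \
#   --cluster "$(envoy_cluster_name outbound 80 version-v1 catalog.istioinaction.svc.cluster.local)"
```

Quoting the result matters because the pipe characters would otherwise be interpreted by the shell.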

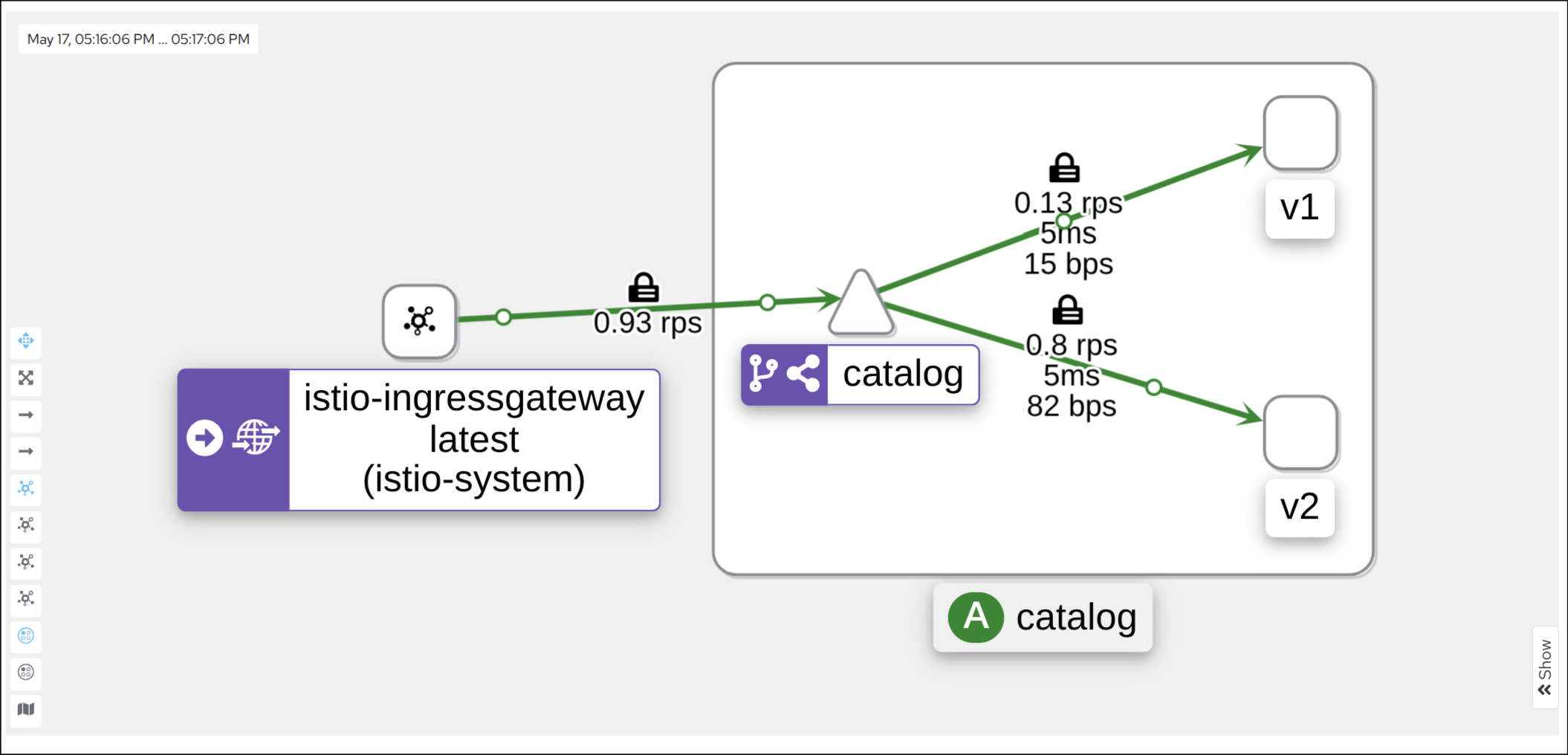

🧯 Troubleshooting application issues

1. Confirm the healthy baseline first

for i in {1..9999}; do curl http://catalog.istioinaction.io:30000/items -w "\nStatus Code %{http_code}\n"; sleep 1; done

✅ Output

[

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

},

{

"id": 2,

"color": "cyan",

"department": "Clothing",

"name": "Atlas Shirt",

"price": "127.00"

},

{

"id": 3,

"color": "teal",

"department": "Clothing",

"name": "Small Metal Shoes",

"price": "232.00"

},

{

"id": 4,

"color": "red",

"department": "Watches",

"name": "Red Dragon Watch",

"price": "232.00"

}

]

Status Code 200

...

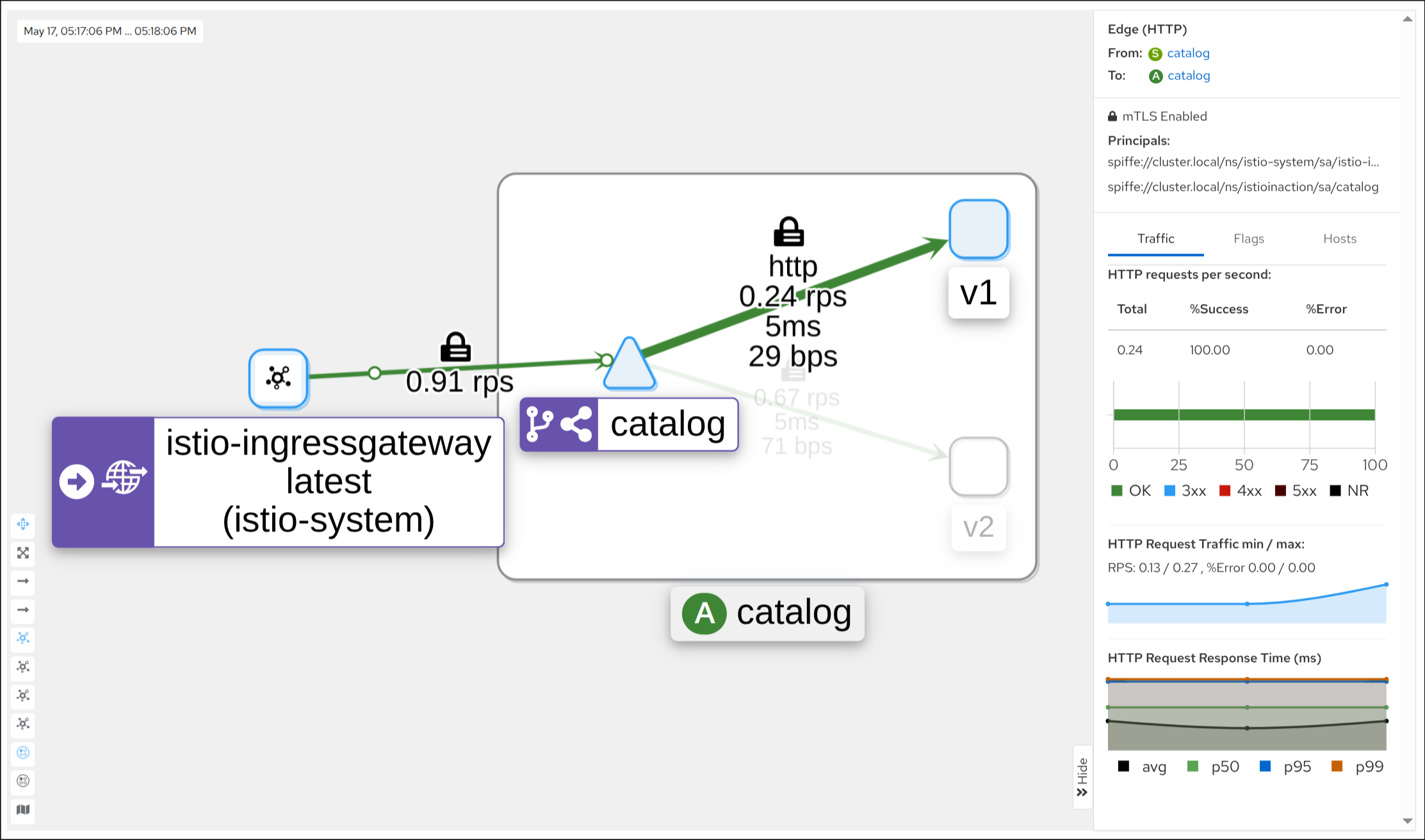

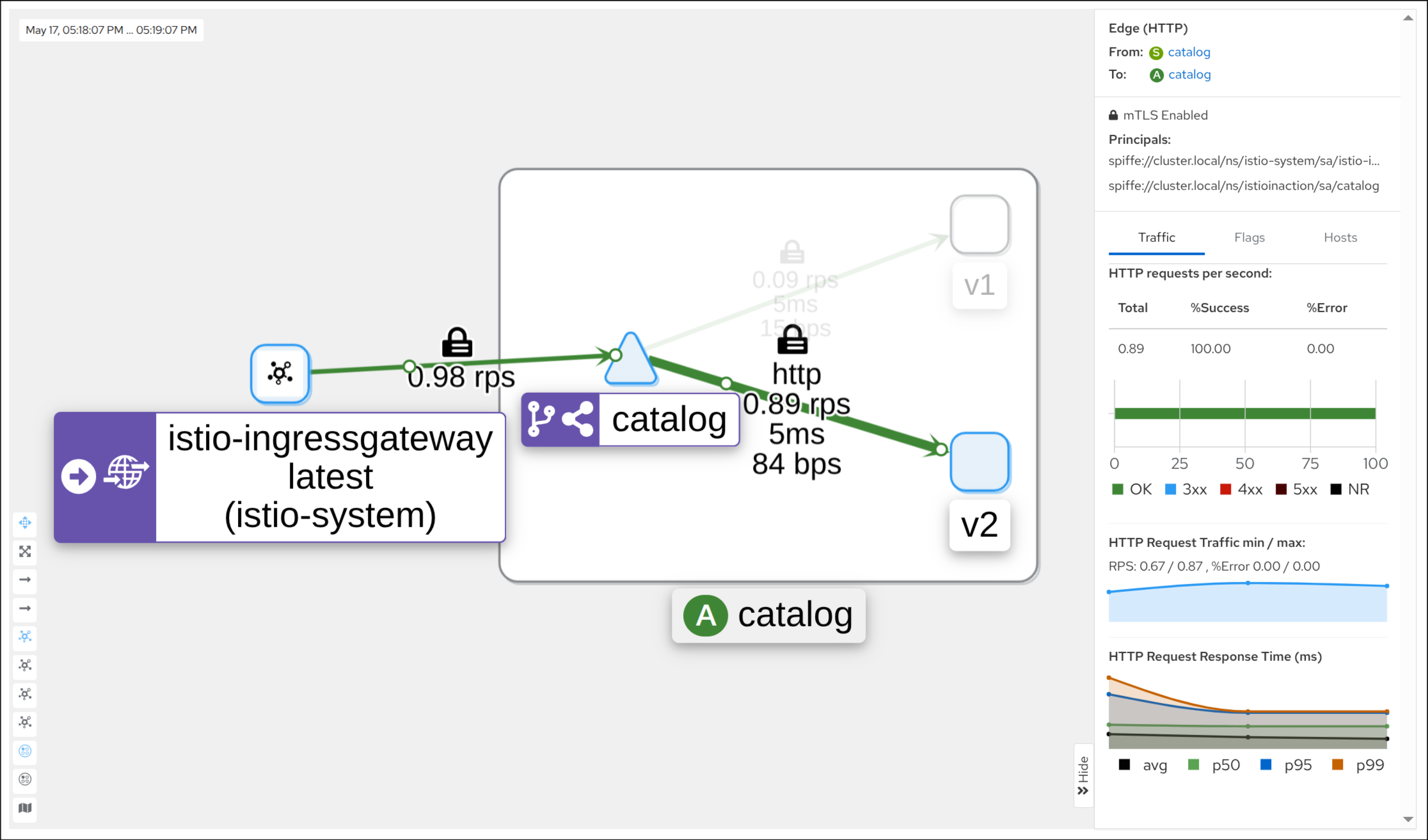

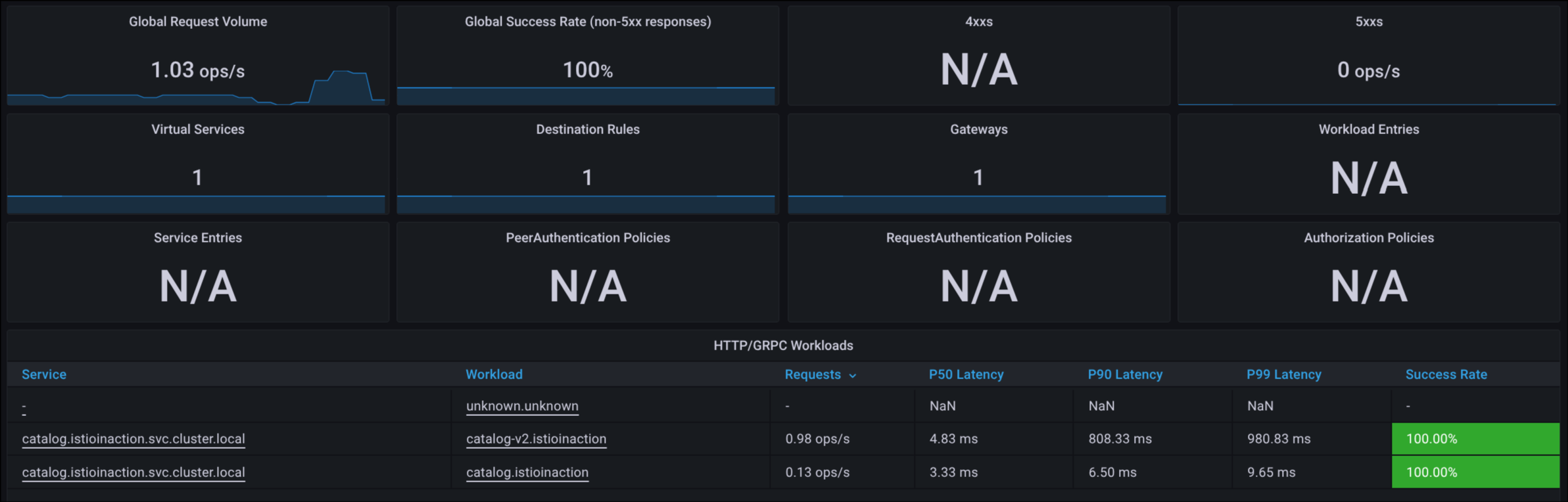

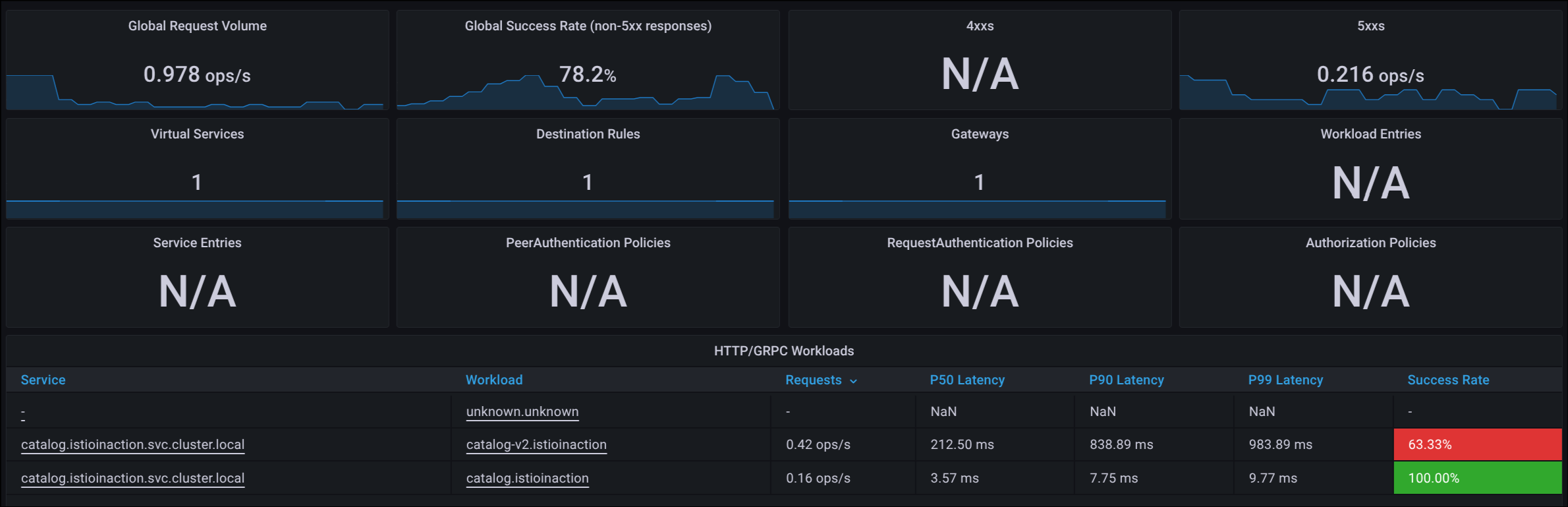

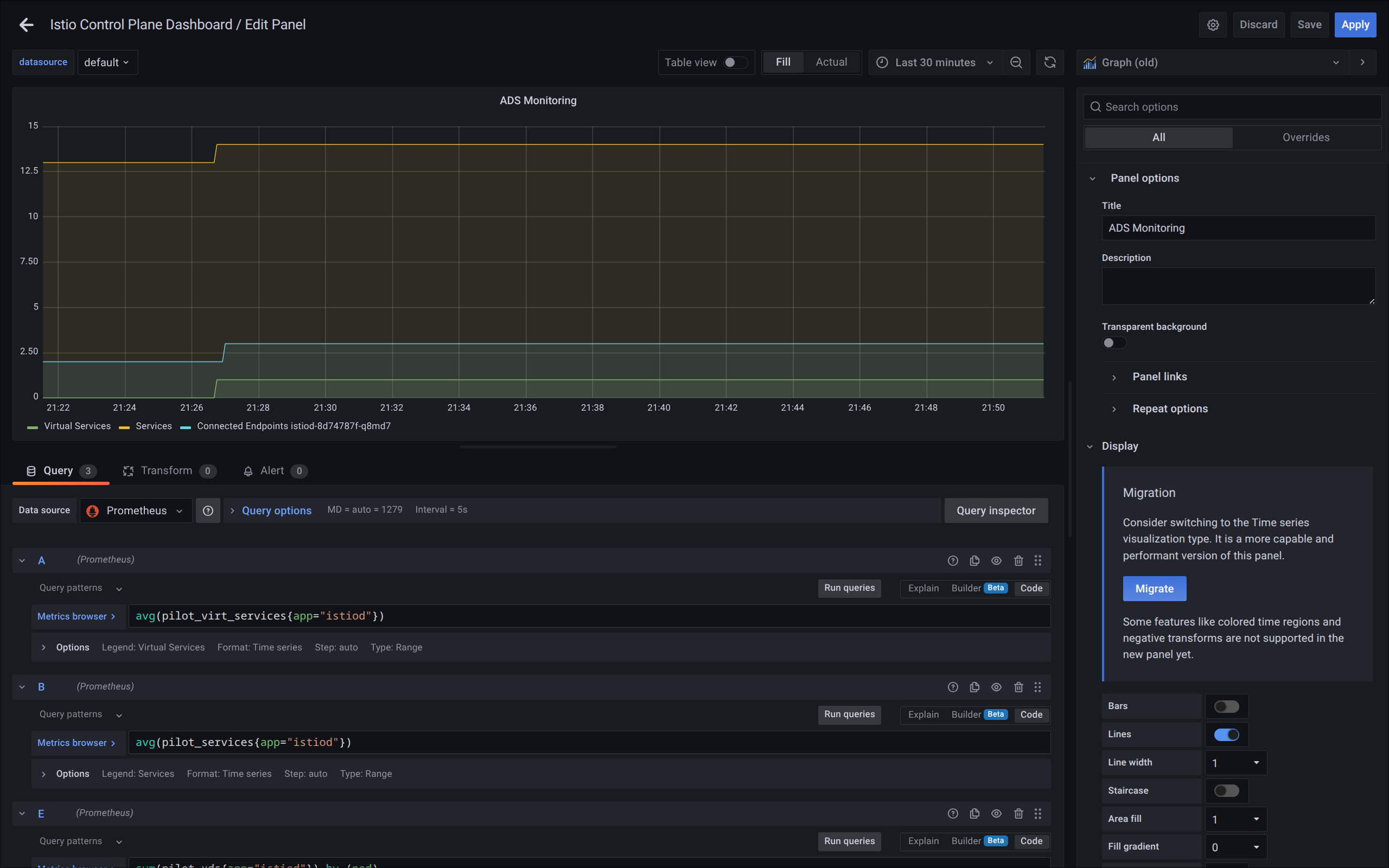

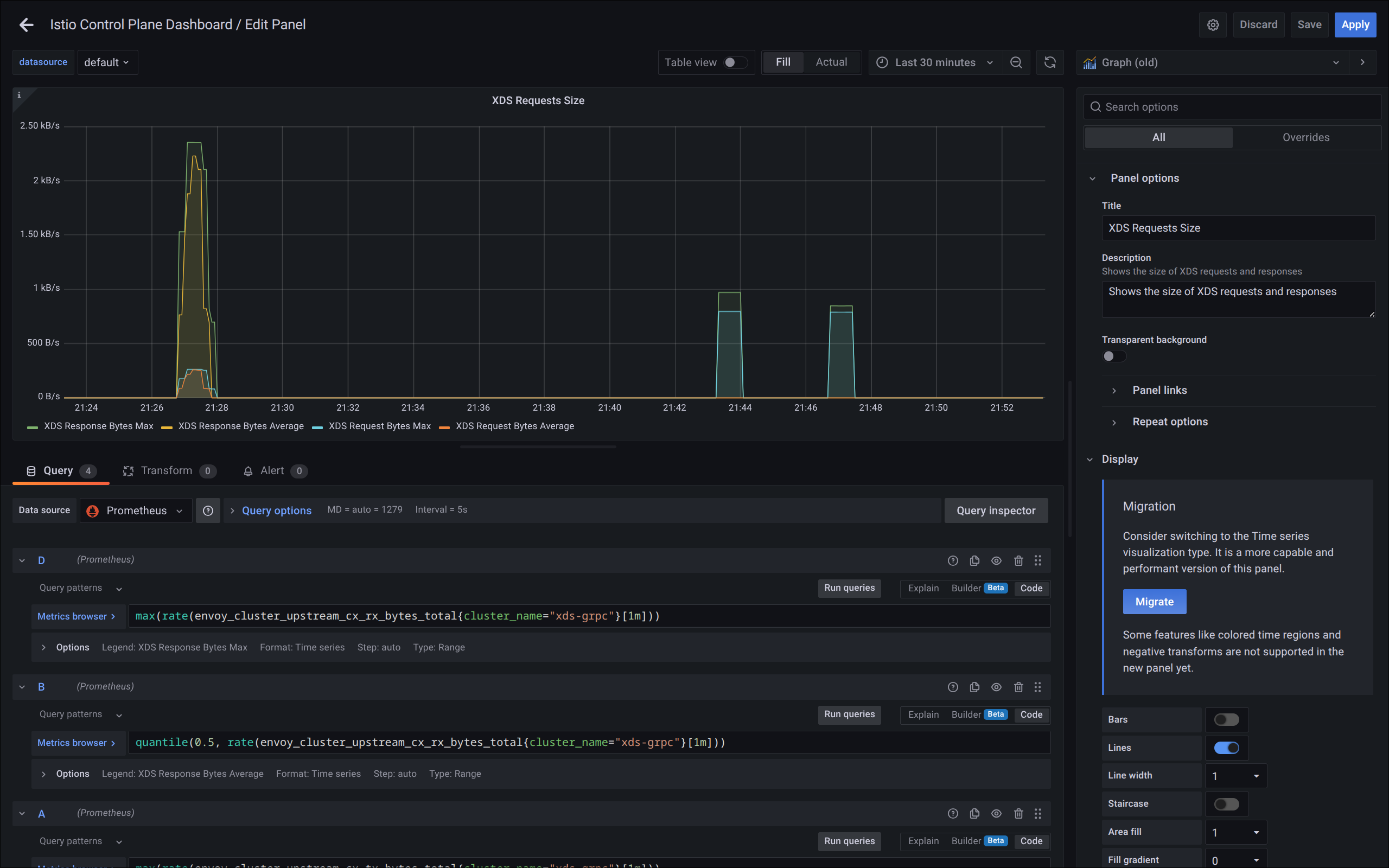

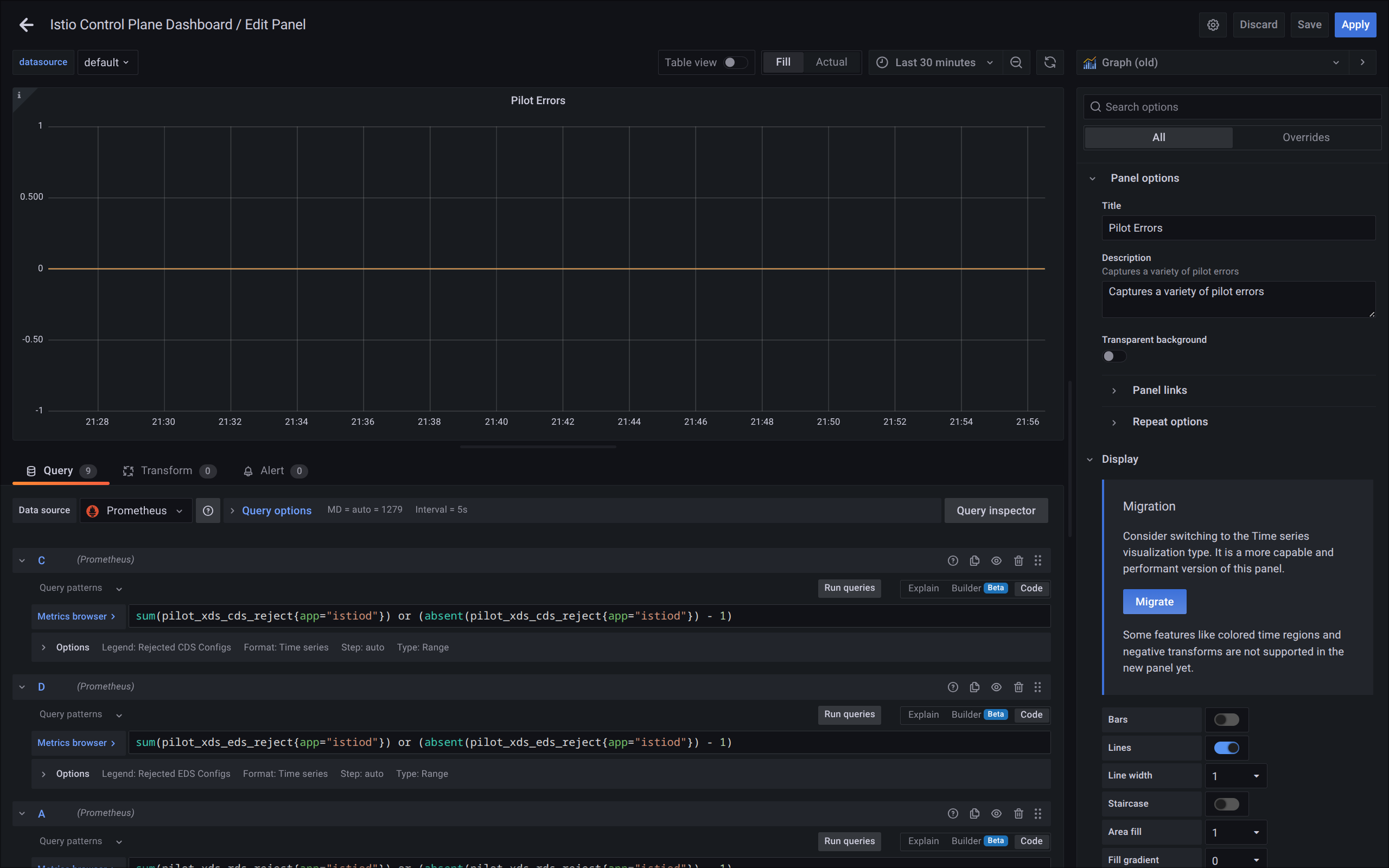

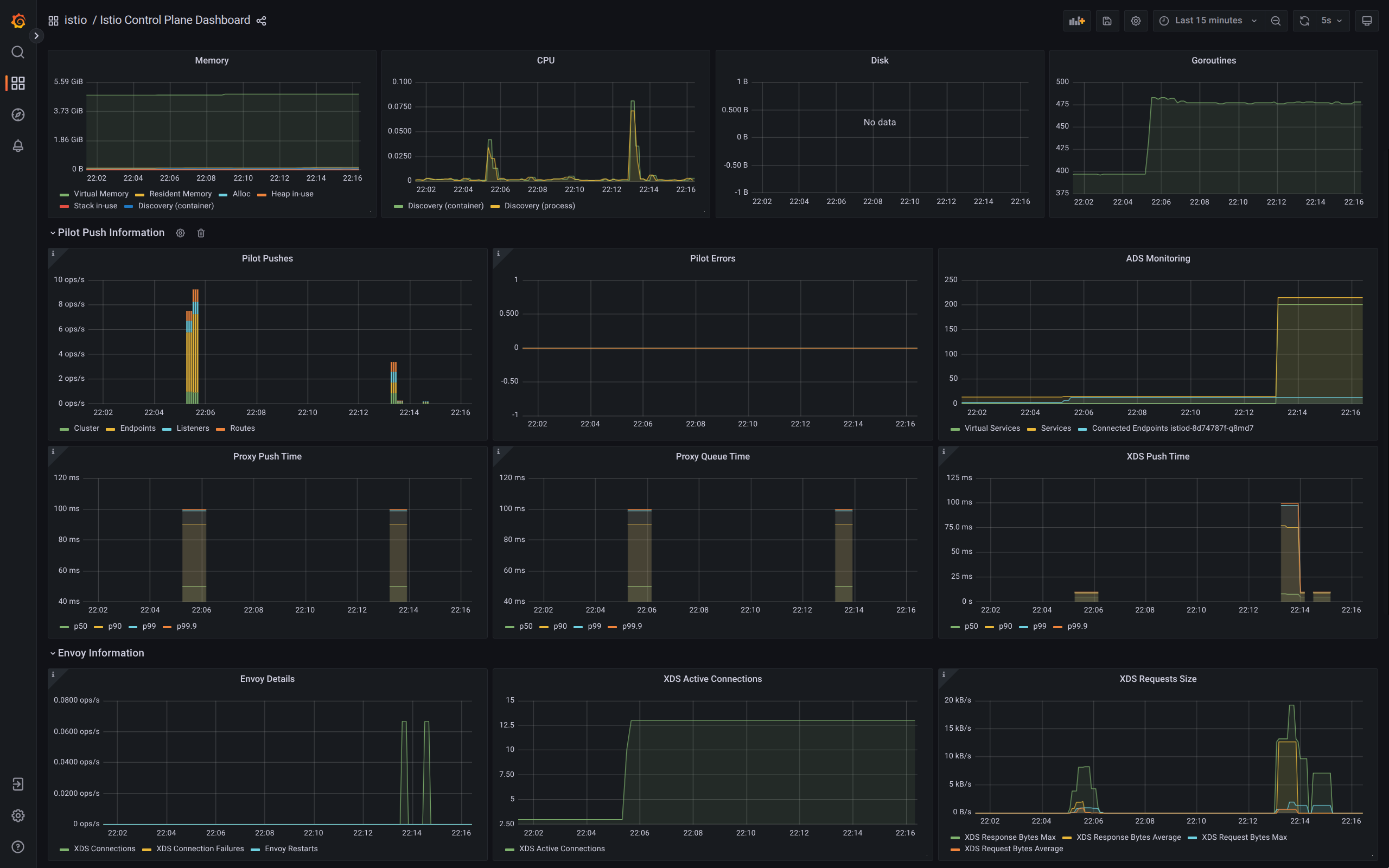

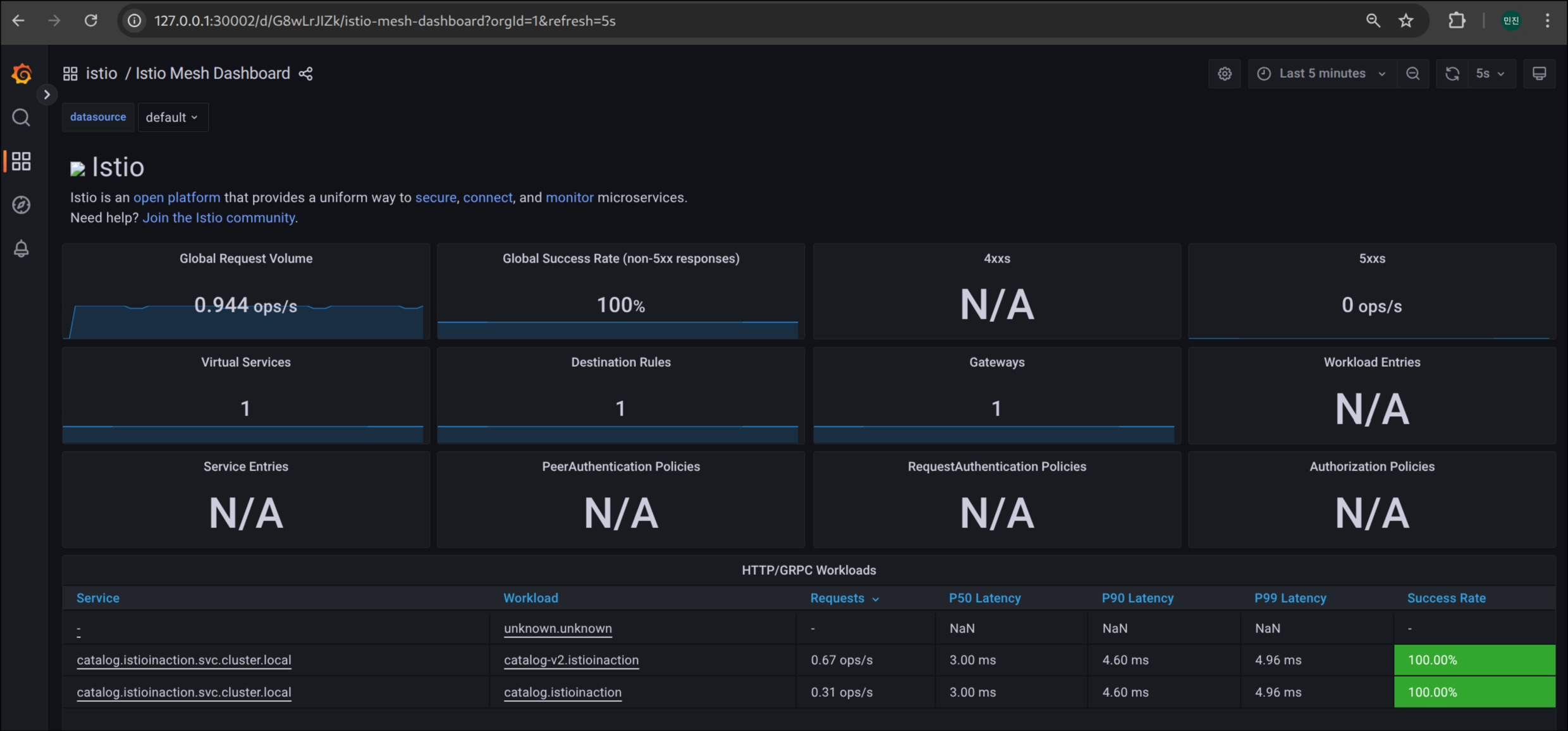

2. Observe traffic in the Grafana dashboard

Use the Istio Mesh dashboard to inspect the catalog workload's traffic and metrics visually.

3. Pick one of the catalog v2 pods and store its name in a variable

CATALOG_POD=$(kubectl get pods -l version=v2 -n istioinaction -o jsonpath='{.items..metadata.name}' | cut -d ' ' -f1)

echo $CATALOG_POD

✅ Output

catalog-v2-56c97f6db-8wnq6

4. Send a request to the catalog pod that triggers latency

kubectl -n istioinaction exec -c catalog $CATALOG_POD \
  -- curl -s -X POST -H "Content-Type: application/json" \
  -d '{"active": true, "type": "latency", "volatile": true}' \
  localhost:3000/blowup

✅ Output

blowups=[object Object]

5. Call the service repeatedly and observe the latency

for i in {1..9999}; do curl http://catalog.istioinaction.io:30000/items -w "\nStatus Code %{http_code}\n"; sleep 1; done

✅ Output

[

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

},

{

"id": 2,

"color": "cyan",

"department": "Clothing",

"name": "Atlas Shirt",

"price": "127.00"

},

{

"id": 3,

"color": "teal",

"department": "Clothing",

"name": "Small Metal Shoes",

"price": "232.00"

},

{

"id": 4,

"color": "red",

"department": "Watches",

"name": "Red Dragon Watch",

"price": "232.00"

}

]

Status Code 200

6. Apply a 0.5s timeout on the VirtualService

(1) List the VirtualService resources

kubectl get vs -n istioinaction

✅ Output

NAME GATEWAYS HOSTS AGE

catalog-v1-v2 ["catalog-gateway"] ["catalog.istioinaction.io"] 4h53m

(2) Use kubectl patch to set the HTTP timeout to 0.5 seconds

kubectl patch vs catalog-v1-v2 -n istioinaction --type json \
  -p '[{"op": "add", "path": "/spec/http/0/timeout", "value": "0.5s"}]'

# Result

virtualservice.networking.istio.io/catalog-v1-v2 patched

7. Verify that the VirtualService setting was applied

kubectl get vs catalog-v1-v2 -n istioinaction -o jsonpath='{.spec.http[?(@.timeout=="0.5s")]}' | jq

✅ Output

{

"route": [

{

"destination": {

"host": "catalog.istioinaction.svc.cluster.local",

"port": {

"number": 80

},

"subset": "version-v1"

},

"weight": 20

},

{

"destination": {

"host": "catalog.istioinaction.svc.cluster.local",

"port": {

"number": 80

},

"subset": "version-v2"

},

"weight": 80

}

],

"timeout": "0.5s"

}

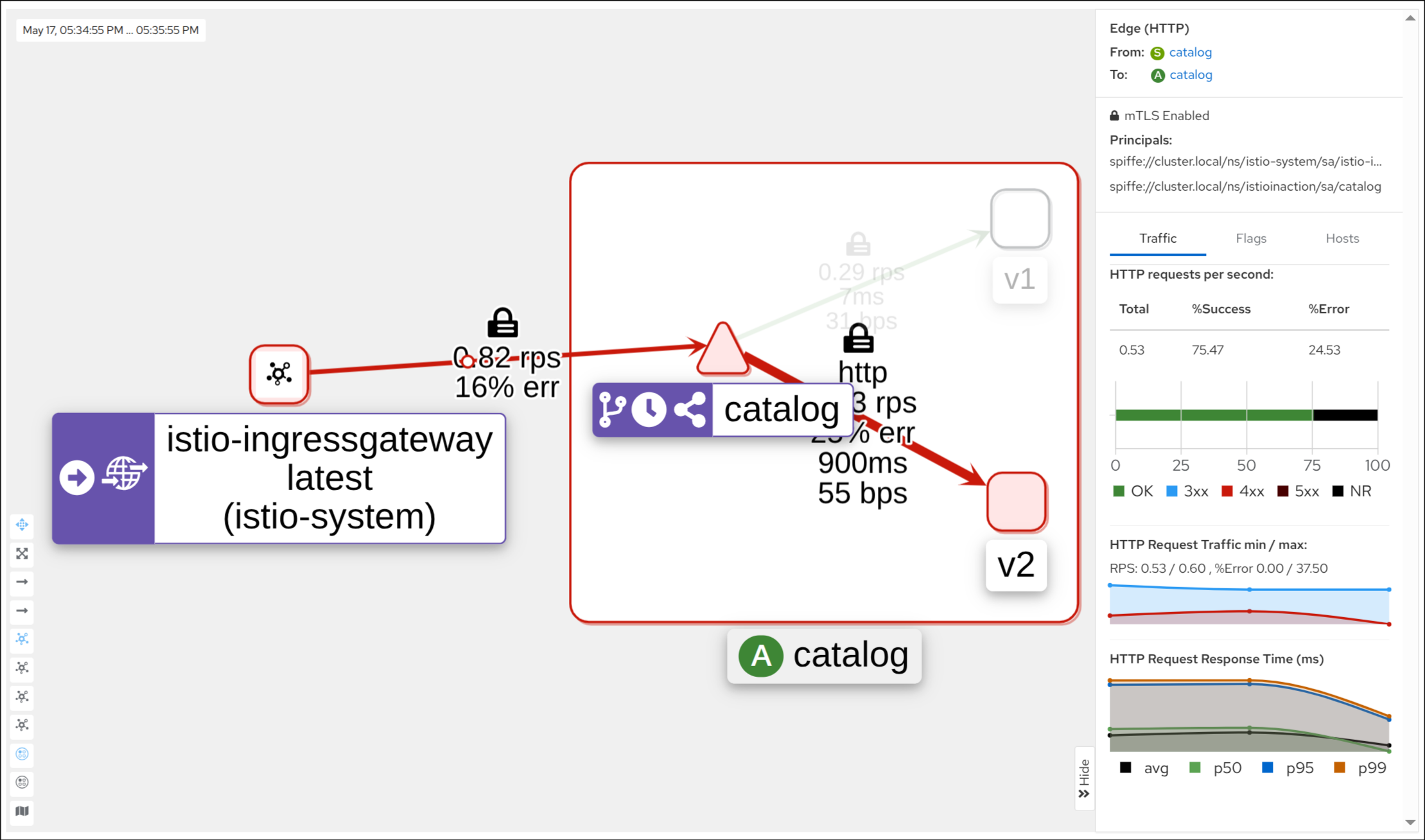

8. Call the service again after applying the timeout and confirm the 504s

for i in {1..9999}; do curl http://catalog.istioinaction.io:30000/items -w "\nStatus Code %{http_code}\n"; sleep 1; done

✅ Output

[

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

},

{

"id": 2,

"color": "cyan",

"department": "Clothing",

"name": "Atlas Shirt",

"price": "127.00"

},

{

"id": 3,

"color": "teal",

"department": "Clothing",

"name": "Small Metal Shoes",

"price": "232.00"

},

{

"id": 4,

"color": "red",

"department": "Watches",

"name": "Red Dragon Watch",

"price": "232.00"

}

]

Status Code 200

upstream request timeout

Status Code 504

upstream request timeout

Status Code 504
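Watching the loop scroll by makes it hard to judge how often the timeout actually fires. As a rough sketch, the "Status Code" lines printed by curl's -w option can be tallied per code. count_status_codes is a hypothetical helper name, and it assumes the exact "Status Code <n>" line format used by the loop above.

```shell
# Hypothetical helper: tally the "Status Code <n>" lines produced by the curl loop.
count_status_codes() {
  # Reads the loop output on stdin and prints "<code> <count>", sorted by code.
  awk '/^Status Code [0-9]+$/ { n[$3]++ } END { for (c in n) print c, n[c] }' | sort -n
}

# Example usage with a bounded loop (needs the cluster from this post):
# for i in $(seq 1 30); do
#   curl -s http://catalog.istioinaction.io:30000/items -w "\nStatus Code %{http_code}\n"
# done | count_status_codes
```

A bounded loop is used in the example so that the pipeline ends and the summary is printed.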

9. Stream the ingress gateway logs to see the timeouts

kubectl logs -n istio-system -l app=istio-ingressgateway -f

✅ Output

[2025-05-17T08:31:49.673Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "ddbb601f-e23b-9c3c-b4d8-696c510fa62a" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:60458 10.10.0.6:8080 172.18.0.1:39818 - -

[2025-05-17T08:31:50.296Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "b561e6be-2eb8-967f-a1b8-62f27797de98" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51144 10.10.0.6:8080 172.18.0.1:39824 - -

[2025-05-17T08:31:50.690Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "9c8a7f37-97bd-90a5-b2e3-27a5cacd3393" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51106 10.10.0.6:8080 172.18.0.1:39830 - -

[2025-05-17T08:31:51.308Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "06442ac5-45aa-9dc1-9df9-a368349324d3" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:49314 10.10.0.6:8080 172.18.0.1:39840 - -

[2025-05-17T08:31:51.701Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 410 409 "172.18.0.1" "curl/8.13.0" "99f995bf-a090-993f-bdbf-dd1c8caab531" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:54274 10.10.0.6:8080 172.18.0.1:39850 - -

[2025-05-17T08:31:52.322Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 499 - "172.18.0.1" "curl/8.13.0" "665c29ba-1596-989d-b1d9-603f65457c8e" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:60144 10.10.0.6:8080 172.18.0.1:39860 - -

[2025-05-17T08:31:53.127Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "b406314f-fef6-9f0e-acaa-293a5ae27d76" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:56056 10.10.0.6:8080 172.18.0.1:39862 - -

[2025-05-17T08:31:54.139Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 1 "172.18.0.1" "curl/8.13.0" "135eb6ae-3f58-9926-a012-25391a84b02b" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:56056 10.10.0.6:8080 172.18.0.1:39884 - -

[2025-05-17T08:31:53.833Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "2878fecb-5f3e-95e8-87a0-cfd080cbb1d0" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:33400 10.10.0.6:8080 172.18.0.1:39872 - -

[2025-05-17T08:31:55.361Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 497 497 "172.18.0.1" "curl/8.13.0" "24c5650c-b785-97cb-a1dc-f3116ea01a8a" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:57812 10.10.0.6:8080 172.18.0.1:39900 - -

[2025-05-17T08:31:56.869Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "ac3c564b-db42-9f06-9769-947005879e22" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:40450 10.10.0.6:8080 172.18.0.1:39914 - -

[2025-05-17T08:31:57.885Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "40a31598-e5ea-982f-891e-c8e8122e5ed7" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:57812 10.10.0.6:8080 172.18.0.1:39004 - -

[2025-05-17T08:31:59.406Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 367 366 "172.18.0.1" "curl/8.13.0" "bb8da2d1-ec36-9874-8d5d-6d61ef638539" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:34204 10.10.0.6:8080 172.18.0.1:39012 - -

[2025-05-17T08:32:00.783Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 3 2 "172.18.0.1" "curl/8.13.0" "9c5cd684-a71c-9082-84c1-916983f622cd" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:47318 10.10.0.6:8080 172.18.0.1:39024 - -

[2025-05-17T08:32:01.798Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 289 289 "172.18.0.1" "curl/8.13.0" "896af886-997f-9c17-bfec-765b7a549f8c" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:34164 10.10.0.6:8080 172.18.0.1:39026 - -

[2025-05-17T08:32:03.097Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 4 3 "172.18.0.1" "curl/8.13.0" "38870d95-8689-9c2b-a955-8702237d9aba" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:40450 10.10.0.6:8080 172.18.0.1:39032 - -

[2025-05-17T08:32:04.112Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 3 3 "172.18.0.1" "curl/8.13.0" "5d0cfbe6-7f0e-9db0-a80b-86b2e4c6369e" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:41612 10.10.0.6:8080 172.18.0.1:39034 - -

[2025-05-17T08:32:05.134Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 2 "172.18.0.1" "curl/8.13.0" "030b87f1-a0f4-95c7-a2ce-d374389e10a6" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:36646 10.10.0.6:8080 172.18.0.1:39042 - -

[2025-05-17T08:32:06.155Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 3 2 "172.18.0.1" "curl/8.13.0" "f675b04c-874f-9cdb-81fe-114598960595" "catalog.istioinaction.io:30000" "10.10.0.12:3000" outbound|80|version-v1|catalog.istioinaction.svc.cluster.local 10.10.0.6:41592 10.10.0.6:8080 172.18.0.1:39052 - -

[2025-05-17T08:32:07.168Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 7 7 "172.18.0.1" "curl/8.13.0" "ec3030a1-dfb6-9578-bac1-907d5a2f04bb" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:40350 10.10.0.6:8080 172.18.0.1:33540 - -

[2025-05-17T08:32:08.187Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 1 "172.18.0.1" "curl/8.13.0" "f0230eef-5532-940b-8347-7cb4748611eb" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51126 10.10.0.6:8080 172.18.0.1:33548 - -

[2025-05-17T08:32:09.199Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 302 301 "172.18.0.1" "curl/8.13.0" "4ccfd24e-8e9a-9708-8480-d4d953be3f59" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:45308 10.10.0.6:8080 172.18.0.1:33554 - -

[2025-05-17T08:32:10.514Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 5 5 "172.18.0.1" "curl/8.13.0" "d1535c70-a1e1-9796-ba8d-87438b0c8382" "catalog.istioinaction.io:30000" "10.10.0.14:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51144 10.10.0.6:8080 172.18.0.1:33556 - -

....

10. Filter the ingress gateway logs for 504 errors

kubectl logs -n istio-system -l app=istio-ingressgateway -f | grep 504

✅ Output

[2025-05-17T08:33:01.777Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "a5072404-fe80-98d5-af94-185adc5f0c92" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51846 10.10.0.6:8080 172.18.0.1:48518 - -

[2025-05-17T08:33:09.618Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 501 - "172.18.0.1" "curl/8.13.0" "2492025a-3fea-9468-8d37-89273cef19e5" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:53376 10.10.0.6:8080 172.18.0.1:38128 - -

[2025-05-17T08:33:11.146Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 501 - "172.18.0.1" "curl/8.13.0" "f829ebde-22b8-9b0f-9e42-22b2e20cfead" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:53382 10.10.0.6:8080 172.18.0.1:38136 - -

....
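A plain grep 504 matches the string anywhere in the line, so a request that took 504 ms or came from a port containing 504 would also show up. The sketch below matches only the response code field. It assumes the text access log layout shown above, where the code is the first token after the quoted request line. filter_by_status is a hypothetical helper name.

```shell
# Hypothetical helper: keep only access log lines whose response code equals $1.
filter_by_status() {
  # Splitting on double quotes puts "<code> <flags> ..." in field 3,
  # directly after the quoted request line ("GET /items HTTP/1.1").
  awk -F'"' -v code="$1" '{ split($3, f, " "); if (f[1] == code) print }'
}

# Example usage (needs the cluster from this post):
# kubectl logs -n istio-system -l app=istio-ingressgateway | filter_by_status 504
```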

11. Stream the istio-proxy logs of the catalog v2 pods

kubectl logs -n istioinaction -l version=v2 -c istio-proxy -f

✅ Output

[2025-05-17T08:33:38.954Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 11 9 "172.18.0.1" "curl/8.13.0" "8ff3dd9b-4803-9924-905c-3aea0f1d1731" "catalog.istioinaction.io:30000" "10.10.0.14:3000" inbound|3000|| 127.0.0.6:42347 10.10.0.14:3000 172.18.0.1:0 outbound_.80_.version-v2_.catalog.istioinaction.svc.cluster.local default

2025-05-17T08:33:44.126862Z info xdsproxy connected to upstream XDS server: istiod.istio-system.svc:15012

[2025-05-17T08:33:49.022Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 2 1 "172.18.0.1" "curl/8.13.0" "169e8391-8409-9e0c-85bb-53e4af150fbc" "catalog.istioinaction.io:30000" "10.10.0.14:3000" inbound|3000|| 127.0.0.6:57703 10.10.0.14:3000 172.18.0.1:0 outbound_.80_.version-v2_.catalog.istioinaction.svc.cluster.local default

[2025-05-17T08:33:52.089Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 1 1 "172.18.0.1" "curl/8.13.0" "4d07c358-6d2c-9650-9362-c4e6b0453445" "catalog.istioinaction.io:30000" "10.10.0.14:3000" inbound|3000|| 127.0.0.6:57703 10.10.0.14:3000 172.18.0.1:0 outbound_.80_.version-v2_.catalog.istioinaction.svc.cluster.local default

[2025-05-17T08:33:56.156Z] "GET /items HTTP/1.1" 200 - via_upstream - "-" 0 502 1 1 "172.18.0.1" "curl/8.13.0" "42fc582d-bb75-9772-8f23-17b9f3b28c3b" "catalog.istioinaction.io:30000" "10.10.0.14:3000" inbound|3000|| 127.0.0.6:44565 10.10.0.14:3000 172.18.0.1:0 outbound_.80_.version-v2_.catalog.istioinaction.svc.cluster.local default

...

🧾 Understanding Envoy access logs + changing the access log format

1. Check the existing Envoy access logs

kubectl logs -n istio-system -l app=istio-ingressgateway -f | grep 504

✅ Output

[2025-05-17T08:39:35.198Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "d42cf869-6b0b-96e1-bf93-7190c05d3d34" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:59144 10.10.0.6:8080 172.18.0.1:43574 - -

[2025-05-17T08:39:41.111Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "00b32cb2-8c4a-991d-83ab-f2a5bf6b81ba" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:41300 10.10.0.6:8080 172.18.0.1:47294 - -

[2025-05-17T08:40:08.024Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 500 - "172.18.0.1" "curl/8.13.0" "716d72fa-68b7-9152-b35c-4086ee0be7c8" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:52204 10.10.0.6:8080 172.18.0.1:59286 - -

[2025-05-17T08:40:16.301Z] "GET /items HTTP/1.1" 504 UT response_timeout - "-" 0 24 499 - "172.18.0.1" "curl/8.13.0" "53b55a05-569c-950c-b120-20773dda534f" "catalog.istioinaction.io:30000" "10.10.0.13:3000" outbound|80|version-v2|catalog.istioinaction.svc.cluster.local 10.10.0.6:51026 10.10.0.6:8080 172.18.0.1:59360 - -

....

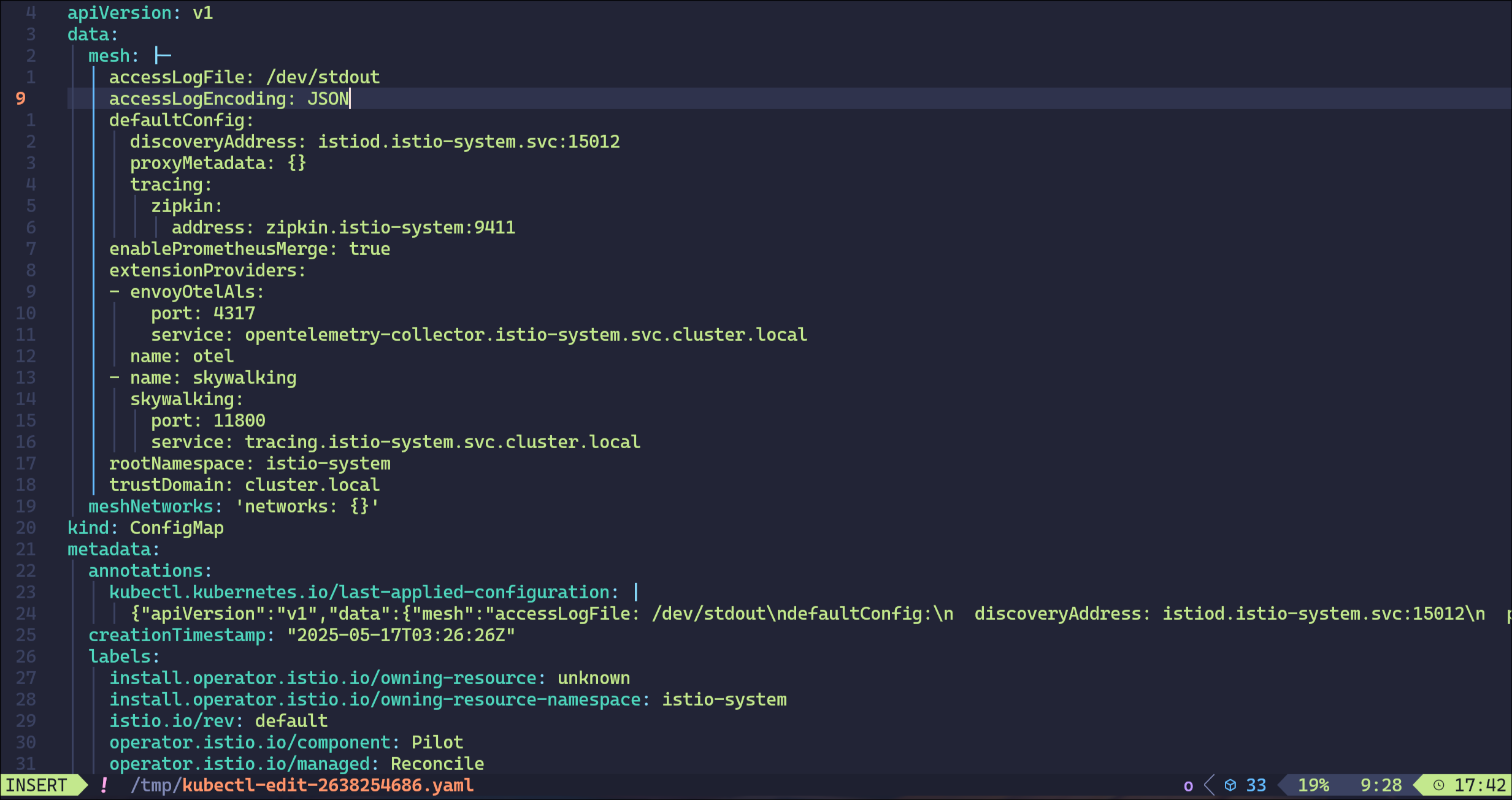
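In every 504 line above the upstream host is 10.10.0.13:3000. To check that across a longer capture rather than by eye, the 504s can be counted per upstream host. This is a sketch that assumes the text access log layout shown above, in which the upstream host is the seventh double-quoted value. It would miscount if a quoted value such as the user agent itself contained a double quote. count_504_by_upstream is a hypothetical helper name.

```shell
# Hypothetical helper: count 504 responses per upstream host in text-format access logs.
count_504_by_upstream() {
  # With '"' as the separator, field 3 starts with the response code and
  # field 14 is the upstream host (the seventh quoted value on the line).
  awk -F'"' '{ split($3, f, " "); if (f[1] == 504) n[$14]++ }
             END { for (h in n) print n[h], h }' | sort -rn
}

# Example usage (needs the cluster from this post):
# kubectl logs -n istio-system -l app=istio-ingressgateway --tail=500 | count_504_by_upstream
```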

2. Change the log format to JSON in MeshConfig

Add accessLogEncoding: JSON to the mesh configuration.

kubectl edit -n istio-system cm istio

configmap/istio edited

3. Check the new JSON log format

kubectl logs -n istio-system -l app=istio-ingressgateway -f | jq

✅ Output

...

{

"x_forwarded_for": "172.18.0.1",

"upstream_service_time": null,

"connection_termination_details": null,

"upstream_cluster": "outbound|80|version-v2|catalog.istioinaction.svc.cluster.local",

"request_id": "93b6396c-c45c-9d1e-ac38-b3dabc48dccb",

"method": "GET",

"response_flags": "UT",

"protocol": "HTTP/1.1",

"upstream_host": "10.10.0.13:3000",

"response_code_details": "response_timeout",

"requested_server_name": null,

"downstream_local_address": "10.10.0.6:8080",

"upstream_transport_failure_reason": null,

"route_name": null,

"upstream_local_address": "10.10.0.6:36090",

"path": "/items",

"downstream_remote_address": "172.18.0.1:39542",

"bytes_received": 0,

"bytes_sent": 24,

"response_code": 504,

"start_time": "2025-05-17T08:44:06.127Z",

"user_agent": "curl/8.13.0",

"duration": 500,

"authority": "catalog.istioinaction.io:30000"

}

...

4. Look up the IP of the slow catalog v2 pod

CATALOG_POD=$(kubectl get pods -l version=v2 -n istioinaction -o jsonpath='{.items..metadata.name}' | cut -d ' ' -f1)

kubectl get pod -n istioinaction $CATALOG_POD -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

catalog-v2-56c97f6db-8wnq6 2/2 Running 0 5h10m 10.10.0.13 myk8s-control-plane <none> <none>

🔥 Raising the logging level of the Envoy gateway

1. Check the gateway's current Envoy log levels

docker exec -it myk8s-control-plane istioctl proxy-config log deploy/istio-ingressgateway -n istio-system

✅ Output

istio-ingressgateway-6bb8fb6549-2mlk2.istio-system:

active loggers:

admin: warning

alternate_protocols_cache: warning

aws: warning

assert: warning

backtrace: warning

cache_filter: warning

client: warning

config: warning

connection: warning

conn_handler: warning

decompression: warning

dns: warning

dubbo: warning

envoy_bug: warning

ext_authz: warning

ext_proc: warning

rocketmq: warning

file: warning

filter: warning

forward_proxy: warning

grpc: warning

happy_eyeballs: warning

hc: warning

health_checker: warning

http: warning

http2: warning

hystrix: warning

init: warning

io: warning

jwt: warning

kafka: warning

key_value_store: warning

lua: warning

main: warning

matcher: warning

misc: error

mongo: warning

multi_connection: warning

oauth2: warning

quic: warning

quic_stream: warning

pool: warning

rate_limit_quota: warning

rbac: warning

rds: warning

redis: warning

router: warning

runtime: warning

stats: warning

secret: warning

tap: warning

testing: warning

thrift: warning

tracing: warning

upstream: warning

udp: warning

wasm: warning

websocket: warning

2. Set the log level of specific components to debug

Raise the connection, http, router, and pool loggers to debug.

docker exec -it myk8s-control-plane istioctl proxy-config log deploy/istio-ingressgateway -n istio-system \
  --level http:debug,router:debug,connection:debug,pool:debug

✅ Output

istio-ingressgateway-6bb8fb6549-2mlk2.istio-system:

active loggers:

admin: warning

alternate_protocols_cache: warning

aws: warning

assert: warning

backtrace: warning

cache_filter: warning

client: warning

config: warning

connection: debug

conn_handler: warning

decompression: warning

dns: warning

dubbo: warning

envoy_bug: warning

ext_authz: warning

ext_proc: warning

rocketmq: warning

file: warning

filter: warning

forward_proxy: warning

grpc: warning

happy_eyeballs: warning

hc: warning

health_checker: warning

http: debug

http2: warning

hystrix: warning

init: warning

io: warning

jwt: warning

kafka: warning

key_value_store: warning

lua: warning

main: warning

matcher: warning

misc: error

mongo: warning

multi_connection: warning

oauth2: warning

quic: warning

quic_stream: warning

pool: debug

rate_limit_quota: warning

rbac: warning

rds: warning

redis: warning

router: debug

runtime: warning

stats: warning

secret: warning

tap: warning

testing: warning

thrift: warning

tracing: warning

upstream: warning

udp: warning

wasm: warning

websocket: warning

3. Collect all debug logs and save them to a file

kubectl logs -n istio-system -l app=istio-ingressgateway -f > istio-igw-log.txt

4. Debug the 504 response_timeout response

(1) Filter the log for 504

2025-05-17T08:50:37.970643Z debug envoy http external/envoy/source/common/http/filter_manager.cc:967 [C11988][S12570926387111490963] Sending local reply with details response_timeout thread=48

2025-05-17T08:50:37.970716Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1687 [C11988][S12570926387111490963] encoding headers via codec (end_stream=false):

':status', '504'

'content-length', '24'

'content-type', 'text/plain'

'date', 'Sat, 17 May 2025 08:50:37 GMT'

'server', 'istio-envoy'

thread=48

(2) Trace the connection ID (C11988)

2025-05-17T08:50:37.469925Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:329 [C11988] new stream thread=48

2025-05-17T08:50:37.469985Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1049 [C11988][S12570926387111490963] request headers complete (end_stream=true):

':authority', 'catalog.istioinaction.io:30000'

':path', '/items'

':method', 'GET'

'user-agent', 'curl/8.13.0'

'accept', '*/*'

thread=48

(3) Check which cluster the request matched

2025-05-17T08:50:37.469992Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1032 [C11988][S12570926387111490963] request end stream thread=48

2025-05-17T08:50:37.470011Z debug envoy connection external/envoy/source/common/network/connection_impl.h:92 [C11988] current connecting state: false thread=48

2025-05-17T08:50:37.470111Z debug envoy router external/envoy/source/common/router/router.cc:470 [C11988][S12570926387111490963] cluster 'outbound|80|version-v2|catalog.istioinaction.svc.cluster.local' match for URL '/items' thread=48

2025-05-17T08:50:37.470157Z debug envoy router external/envoy/source/common/router/router.cc:678 [C11988][S12570926387111490963] router decoding headers:

':authority', 'catalog.istioinaction.io:30000'

':path', '/items'

':method', 'GET'

':scheme', 'http'

'user-agent', 'curl/8.13.0'

'accept', '*/*'

'x-forwarded-for', '172.18.0.1'

'x-forwarded-proto', 'http'

'x-envoy-internal', 'true'

'x-request-id', '556487c7-4e8b-9465-ba02-4a876f016439'

'x-envoy-decorator-operation', 'catalog-v1-v2:80/*'

'x-envoy-peer-metadata', 'ChQKDkFQUF9DT05UQUlORVJTEgIaAAoaCgpDTFVTVEVSX0lEEgwaCkt1YmVybmV0ZXMKGwoMSU5TVEFOQ0VfSVBTEgsaCTEwLjEwLjAuNgoZCg1JU1RJT19WRVJTSU9OEggaBjEuMTcuOAqcAwoGTEFCRUxTEpEDKo4DCh0KA2FwcBIWGhRpc3Rpby1pbmdyZXNzZ2F0ZXdheQoTCgVjaGFydBIKGghnYXRld2F5cwoUCghoZXJpdGFnZRIIGgZUaWxsZXIKNgopaW5zdGFsbC5vcGVyYXRvci5pc3Rpby5pby9vd25pbmctcmVzb3VyY2USCRoHdW5rbm93bgoZCgVpc3RpbxIQGg5pbmdyZXNzZ2F0ZXdheQoZCgxpc3Rpby5pby9yZXYSCRoHZGVmYXVsdAowChtvcGVyYXRvci5pc3Rpby5pby9jb21wb25lbnQSERoPSW5ncmVzc0dhdGV3YXlzChIKB3JlbGVhc2USBxoFaXN0aW8KOQofc2VydmljZS5pc3Rpby5pby9jYW5vbmljYWwtbmFtZRIWGhRpc3Rpby1pbmdyZXNzZ2F0ZXdheQovCiNzZXJ2aWNlLmlzdGlvLmlvL2Nhbm9uaWNhbC1yZXZpc2lvbhIIGgZsYXRlc3QKIgoXc2lkZWNhci5pc3Rpby5pby9pbmplY3QSBxoFZmFsc2UKGgoHTUVTSF9JRBIPGg1jbHVzdGVyLmxvY2FsCi8KBE5BTUUSJxolaXN0aW8taW5ncmVzc2dhdGV3YXktNmJiOGZiNjU0OS0ybWxrMgobCglOQU1FU1BBQ0USDhoMaXN0aW8tc3lzdGVtCl0KBU9XTkVSElQaUmt1YmVybmV0ZXM6Ly9hcGlzL2FwcHMvdjEvbmFtZXNwYWNlcy9pc3Rpby1zeXN0ZW0vZGVwbG95bWVudHMvaXN0aW8taW5ncmVzc2dhdGV3YXkKFwoRUExBVEZPUk1fTUVUQURBVEESAioACicKDVdPUktMT0FEX05BTUUSFhoUaXN0aW8taW5ncmVzc2dhdGV3YXk='

'x-envoy-peer-metadata-id', 'router~10.10.0.6~istio-ingressgateway-6bb8fb6549-2mlk2.istio-system~istio-system.svc.cluster.local'

'x-envoy-expected-rq-timeout-ms', '500'

'x-envoy-attempt-count', '1'

thread=48

- The request was matched to a cluster correctly.
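The steps above follow one request by its connection ID. A minimal sketch of that lookup against the saved log file is shown below. trace_conn is a hypothetical helper name, and istio-igw-log.txt is the file written in the earlier step. Header lines that Envoy prints on their own rows (such as ':status', '504') carry no ID and are not matched.

```shell
# Hypothetical helper: print every debug log line tagged with a given connection ID.
trace_conn() {
  # $1 = connection ID such as C11988, $2 = log file
  # -F treats the pattern as a fixed string, so "[" and "]" are matched literally.
  grep -F "[$1]" "$2"
}

# Example usage:
# trace_conn C11988 istio-igw-log.txt
```

Including the brackets in the pattern keeps C1198 from also matching C11988.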

(4) Upstream connection state and how the request ends

2025-05-17T08:50:37.470174Z debug envoy pool external/envoy/source/common/conn_pool/conn_pool_base.cc:265 [C11950] using existing fully connected connection thread=48

2025-05-17T08:50:37.470178Z debug envoy pool external/envoy/source/common/conn_pool/conn_pool_base.cc:182 [C11950] creating stream thread=48

2025-05-17T08:50:37.470188Z debug envoy router external/envoy/source/common/router/upstream_request.cc:581 [C11988][S12570926387111490963] pool ready thread=48

2025-05-17T08:50:37.970287Z debug envoy router external/envoy/source/common/router/router.cc:947 [C11988][S12570926387111490963] upstream timeout thread=48

2025-05-17T08:50:37.970353Z debug envoy router external/envoy/source/common/router/upstream_request.cc:500 [C11988][S12570926387111490963] resetting pool request thread=48

2025-05-17T08:50:37.970373Z debug envoy connection external/envoy/source/common/network/connection_impl.cc:139 [C11950] closing data_to_write=0 type=1 thread=48

2025-05-17T08:50:37.970380Z debug envoy connection external/envoy/source/common/network/connection_impl.cc:250 [C11950] closing socket: 1 thread=48

2025-05-17T08:50:37.970486Z debug envoy connection external/envoy/source/extensions/transport_sockets/tls/ssl_socket.cc:320 [C11950] SSL shutdown: rc=0 thread=48

2025-05-17T08:50:37.970552Z debug envoy pool external/envoy/source/common/conn_pool/conn_pool_base.cc:484 [C11950] client disconnected, failure reason: thread=48

2025-05-17T08:50:37.970599Z debug envoy pool external/envoy/source/common/conn_pool/conn_pool_base.cc:454 invoking idle callbacks - is_draining_for_deletion_=false thread=48

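- An existing connection (C11950) is reused and a stream is created on it.

- The upstream timeout is logged about 500 ms after the pool became ready (08:50:37.470 to 08:50:37.970).

- The TLS connection is shut down.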
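To confirm that the gap between "pool ready" and "upstream timeout" matches the 0.5s timeout set on the VirtualService, the two timestamps can be subtracted. This is a small sketch that assumes both timestamps fall on the same day. elapsed_ms is a hypothetical helper name.

```shell
# Hypothetical helper: milliseconds between two Envoy log timestamps (same day assumed).
elapsed_ms() {
  # $1, $2 = timestamps such as 2025-05-17T08:50:37.470188Z
  awk -v a="$1" -v b="$2" '
    function secs(ts,    p, t) {
      sub(/Z$/, "", ts)      # drop the trailing Z
      split(ts, p, "T")      # p[2] = HH:MM:SS.ffffff
      split(p[2], t, ":")
      return t[1] * 3600 + t[2] * 60 + t[3]
    }
    BEGIN { printf "%.3f\n", (secs(b) - secs(a)) * 1000 }'
}

# "pool ready" and "upstream timeout" timestamps from the log above:
elapsed_ms 2025-05-17T08:50:37.470188Z 2025-05-17T08:50:37.970287Z
# prints 500.099
```

The result lines up with the x-envoy-expected-rq-timeout-ms value of 500 seen in the router headers.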

(5) Finally, the 504 response is sent

2025-05-17T08:50:37.970643Z debug envoy http external/envoy/source/common/http/filter_manager.cc:967 [C11988][S12570926387111490963] Sending local reply with details response_timeout thread=48

2025-05-17T08:50:37.970716Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1687 [C11988][S12570926387111490963] encoding headers via codec (end_stream=false):

':status', '504'

'content-length', '24'

'content-type', 'text/plain'

'date', 'Sat, 17 May 2025 08:50:37 GMT'

'server', 'istio-envoy'

thread=48

2025-05-17T08:50:37.971159Z debug envoy pool external/envoy/source/common/conn_pool/conn_pool_base.cc:215 [C11950] destroying stream: 0 remaining thread=48

2025-05-17T08:50:37.971895Z debug envoy connection external/envoy/source/common/network/connection_impl.cc:656 [C11988] remote close thread=48

2025-05-17T08:50:37.971912Z debug envoy connection external/envoy/source/common/network/connection_impl.cc:250 [C11988] closing socket: 0 thread=48

- This is where Envoy returns the 504 response to the client.

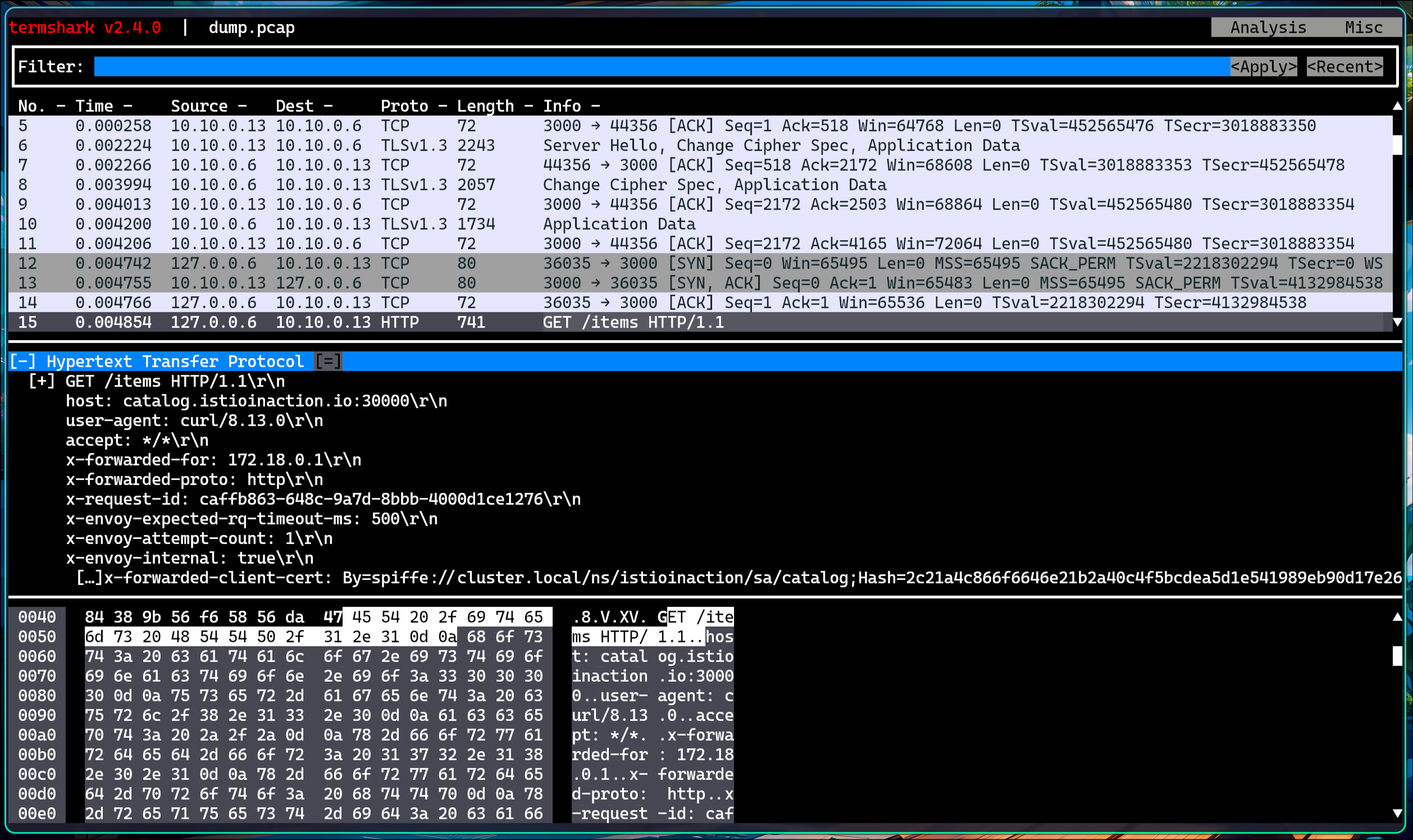

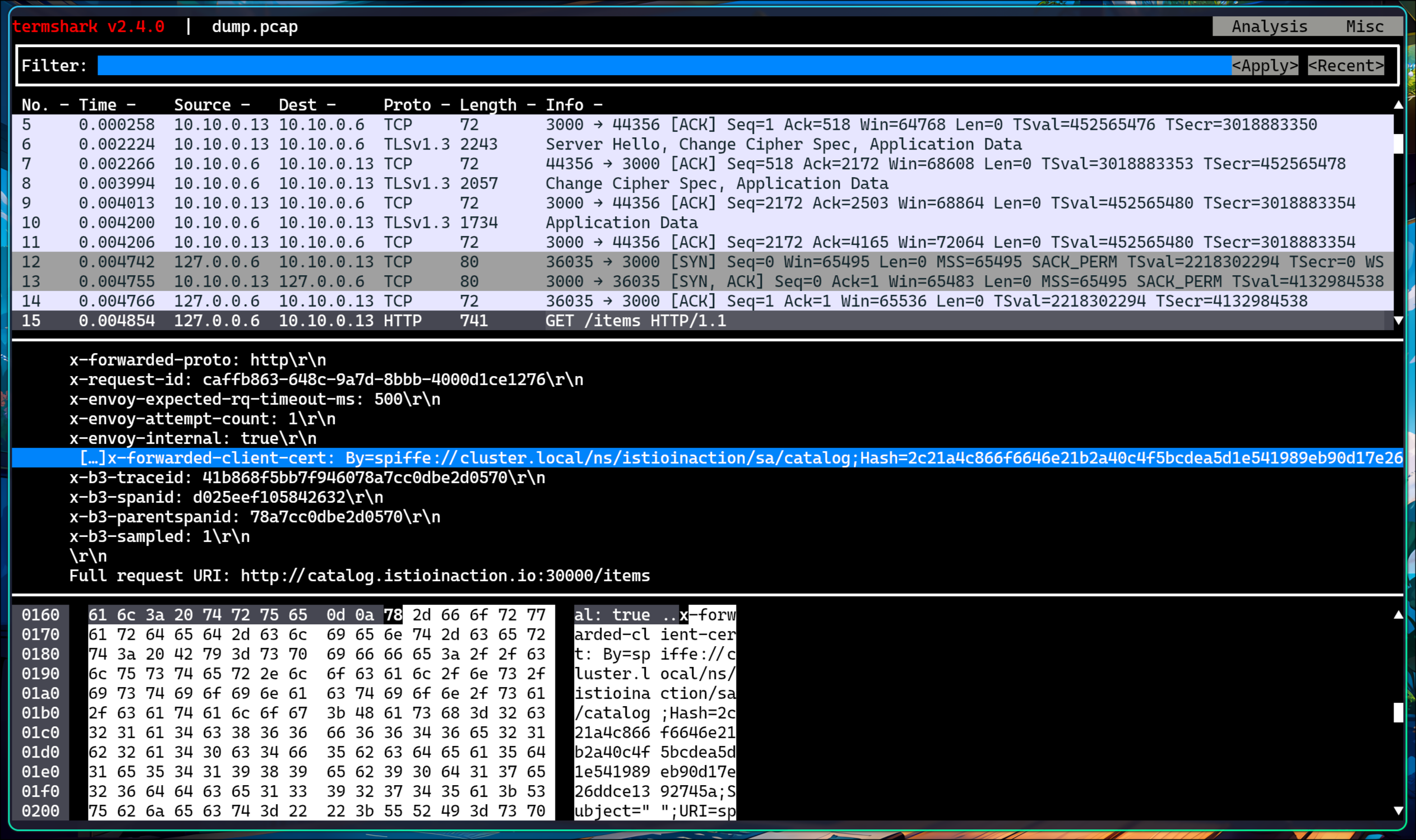

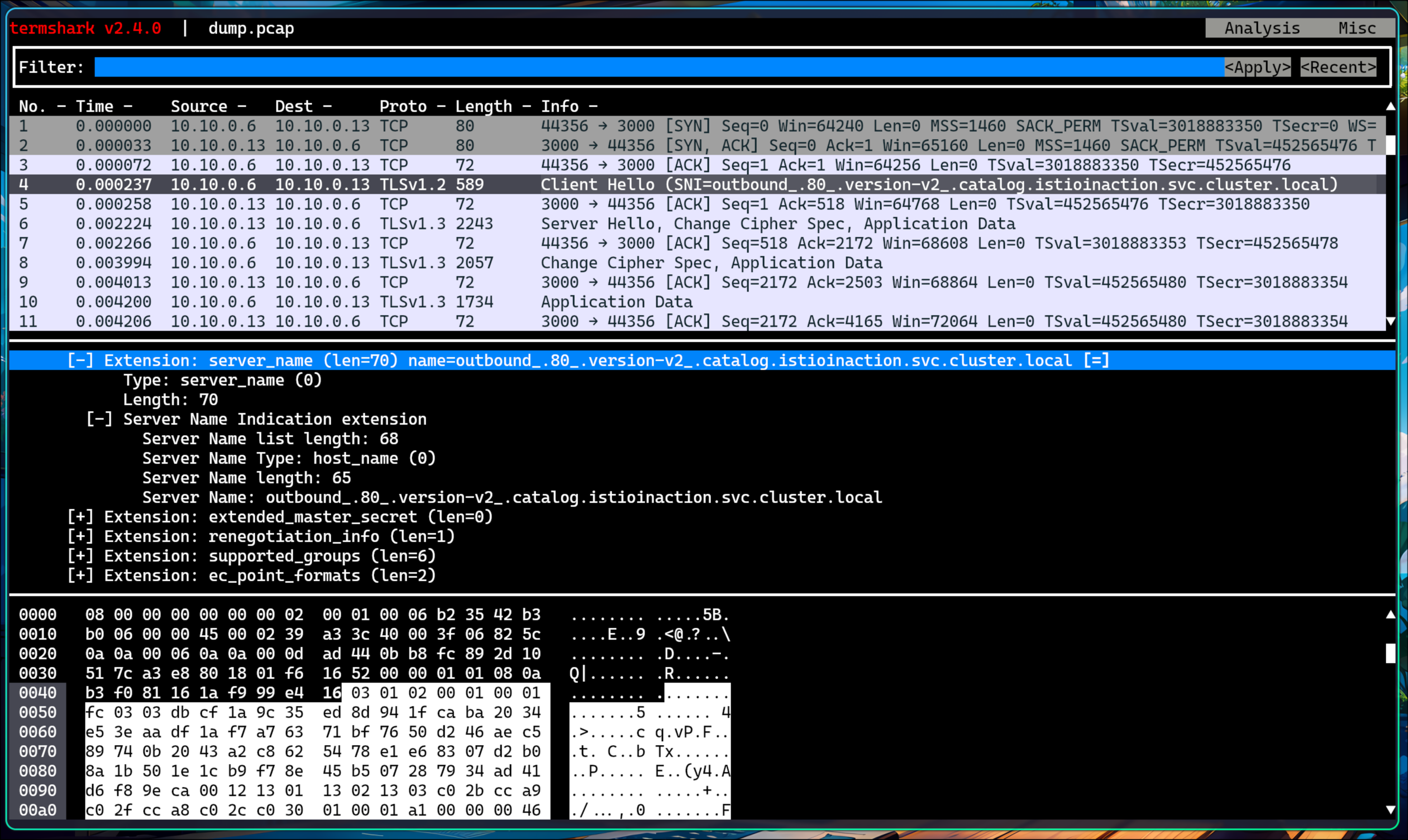

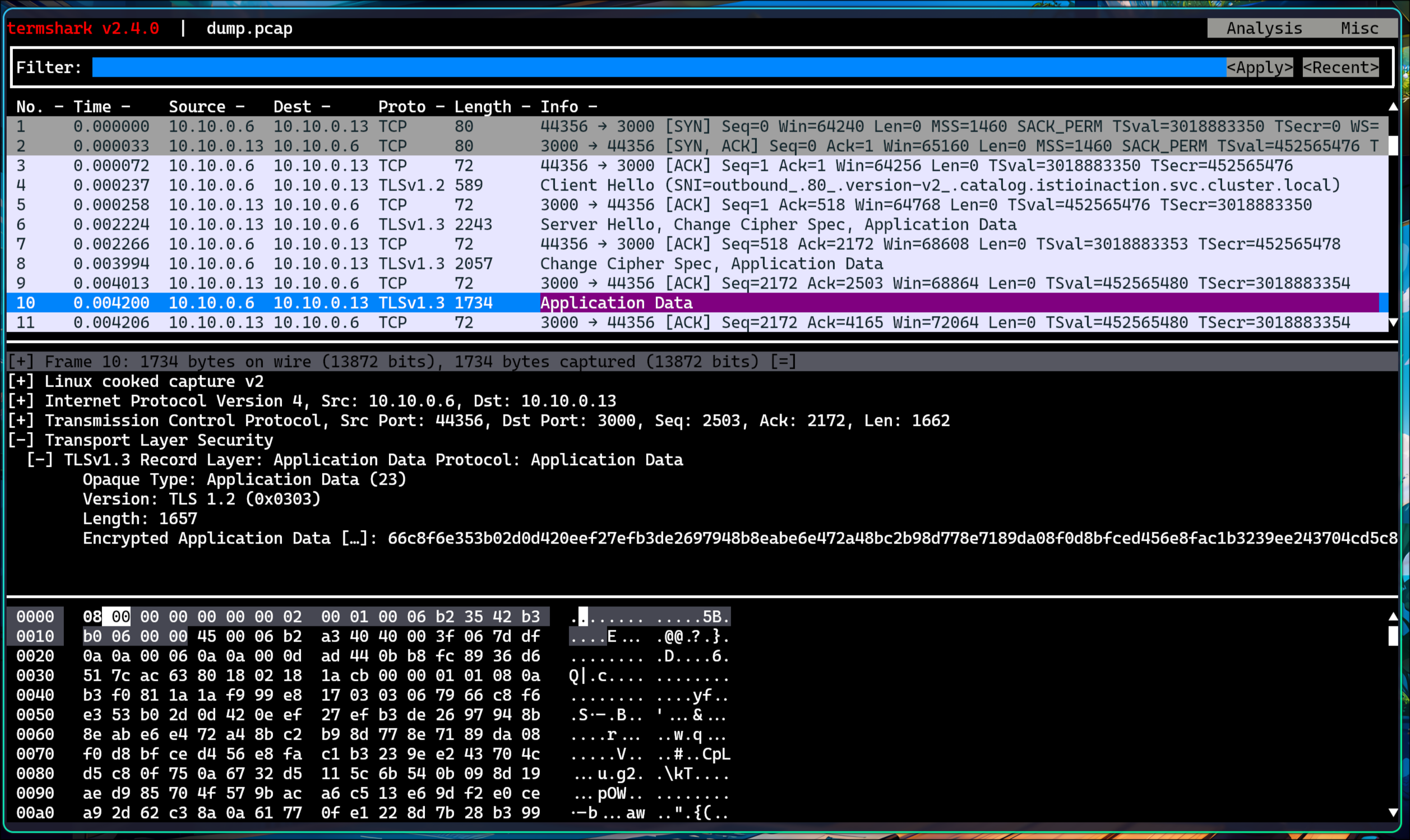

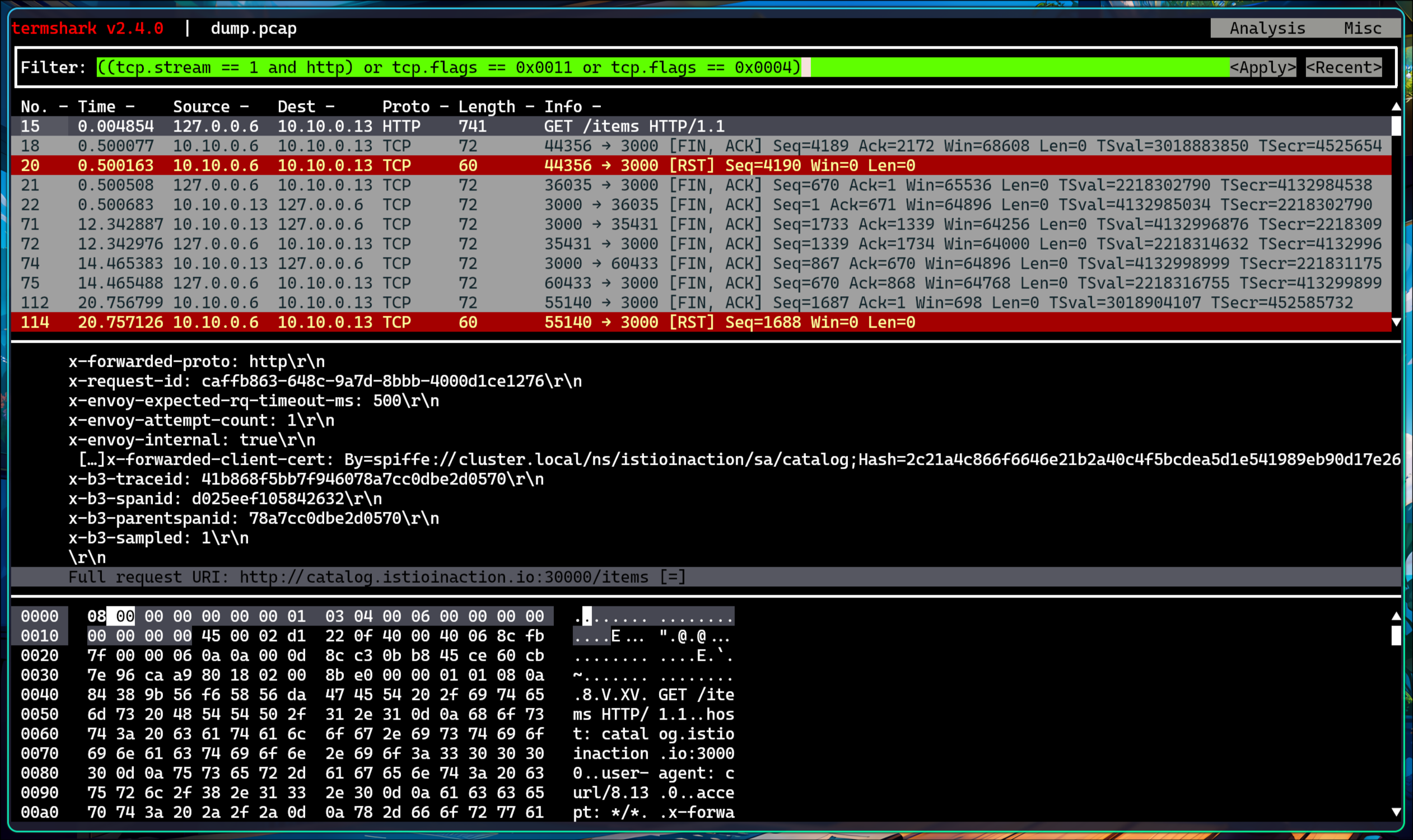

🧪 Inspecting network traffic with tcpdump

1. Check the slow pod

CATALOG_POD=$(kubectl get pods -l version=v2 -n istioinaction -o jsonpath='{.items..metadata.name}' | cut -d ' ' -f1)

kubectl get pod -n istioinaction $CATALOG_POD -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

catalog-v2-56c97f6db-8wnq6 2/2 Running 0 5h31m 10.10.0.13 myk8s-control-plane <none> <none>

2. Check the catalog Service and Endpoints

kubectl get svc,ep -n istioinaction

✅ Output

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/catalog ClusterIP 10.200.3.2 <none> 80/TCP 5h32m

NAME ENDPOINTS AGE

endpoints/catalog 10.10.0.12:3000,10.10.0.13:3000,10.10.0.14:3000 5h32m

3. Check privileges and network interfaces in istio-proxy

(1) Check sudo privileges

kubectl exec -it -n istioinaction $CATALOG_POD -c istio-proxy -- sudo whoami

✅ Output

root

(2) Print all addresses on the eth0 and lo interfaces

kubectl exec -it -n istioinaction $CATALOG_POD -c istio-proxy -- ip -c addr

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 6a:fb:01:b9:43:1f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.0.13/24 brd 10.10.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::68fb:1ff:feb9:431f/64 scope link

valid_lft forever preferred_lft forever