🔧 Lab Environment Setup

1. Download the Vagrantfile and provision the virtual machines

| curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/6w/Vagrantfile

vagrant up

|

✅ Output

| Bringing machine 'k8s-ctr' up with 'virtualbox' provider...

Bringing machine 'k8s-w1' up with 'virtualbox' provider...

Bringing machine 'router' up with 'virtualbox' provider...

==> k8s-ctr: Box 'bento/ubuntu-24.04' could not be found. Attempting to find and install...

k8s-ctr: Box Provider: virtualbox

k8s-ctr: Box Version: 202508.03.0

==> k8s-ctr: Loading metadata for box 'bento/ubuntu-24.04'

k8s-ctr: URL: https://vagrantcloud.com/api/v2/vagrant/bento/ubuntu-24.04

==> k8s-ctr: Adding box 'bento/ubuntu-24.04' (v202508.03.0) for provider: virtualbox (amd64)

k8s-ctr: Downloading: https://vagrantcloud.com/bento/boxes/ubuntu-24.04/versions/202508.03.0/providers/virtualbox/amd64/vagrant.box

==> k8s-ctr: Successfully added box 'bento/ubuntu-24.04' (v202508.03.0) for 'virtualbox (amd64)'!

==> k8s-ctr: Preparing master VM for linked clones...

k8s-ctr: This is a one time operation. Once the master VM is prepared,

k8s-ctr: it will be used as a base for linked clones, making the creation

k8s-ctr: of new VMs take milliseconds on a modern system.

==> k8s-ctr: Importing base box 'bento/ubuntu-24.04'...

==> k8s-ctr: Cloning VM...

==> k8s-ctr: Matching MAC address for NAT networking...

==> k8s-ctr: Checking if box 'bento/ubuntu-24.04' version '202508.03.0' is up to date...

==> k8s-ctr: Setting the name of the VM: k8s-ctr

==> k8s-ctr: Clearing any previously set network interfaces...

==> k8s-ctr: Preparing network interfaces based on configuration...

k8s-ctr: Adapter 1: nat

k8s-ctr: Adapter 2: hostonly

==> k8s-ctr: Forwarding ports...

k8s-ctr: 22 (guest) => 60000 (host) (adapter 1)

==> k8s-ctr: Running 'pre-boot' VM customizations...

==> k8s-ctr: Booting VM...

==> k8s-ctr: Waiting for machine to boot. This may take a few minutes...

k8s-ctr: SSH address: 127.0.0.1:60000

k8s-ctr: SSH username: vagrant

k8s-ctr: SSH auth method: private key

k8s-ctr:

k8s-ctr: Vagrant insecure key detected. Vagrant will automatically replace

k8s-ctr: this with a newly generated keypair for better security.

k8s-ctr:

k8s-ctr: Inserting generated public key within guest...

k8s-ctr: Removing insecure key from the guest if it's present...

k8s-ctr: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-ctr: Machine booted and ready!

==> k8s-ctr: Checking for guest additions in VM...

k8s-ctr: The guest additions on this VM do not match the installed version of

k8s-ctr: VirtualBox! In most cases this is fine, but in rare cases it can

k8s-ctr: prevent things such as shared folders from working properly. If you see

k8s-ctr: shared folder errors, please make sure the guest additions within the

k8s-ctr: virtual machine match the version of VirtualBox you have installed on

k8s-ctr: your host and reload your VM.

k8s-ctr:

k8s-ctr: Guest Additions Version: 7.1.12

k8s-ctr: VirtualBox Version: 7.2

==> k8s-ctr: Setting hostname...

==> k8s-ctr: Configuring and enabling network interfaces...

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250823-57237-anxscg.sh

k8s-ctr: >>>> Initial Config Start <<<<

k8s-ctr: [TASK 1] Setting Profile & Bashrc

k8s-ctr: [TASK 2] Disable AppArmor

k8s-ctr: [TASK 3] Disable and turn off SWAP

k8s-ctr: [TASK 4] Install Packages

k8s-ctr: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-ctr: [TASK 6] Install Packages & Helm

k8s-ctr: [TASK 7] Install pwru

k8s-ctr: >>>> Initial Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250823-57237-5746j8.sh

k8s-ctr: >>>> K8S Controlplane config Start <<<<

k8s-ctr: [TASK 1] Initial Kubernetes

k8s-ctr: [TASK 2] Setting kube config file

k8s-ctr: [TASK 3] Source the completion

k8s-ctr: [TASK 4] Alias kubectl to k

k8s-ctr: [TASK 5] Install Kubectx & Kubens

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: [TASK 7] Install Cilium CNI

k8s-ctr: [TASK 8] Install Cilium / Hubble CLI

k8s-ctr: cilium

k8s-ctr: hubble

k8s-ctr: [TASK 9] Remove node taint

k8s-ctr: node/k8s-ctr untainted

k8s-ctr: [TASK 10] local DNS with hosts file

k8s-ctr: [TASK 11] Dynamically provisioning persistent local storage with Kubernetes

k8s-ctr: [TASK 13] Install Metrics-server

k8s-ctr: [TASK 14] Install k9s

k8s-ctr: >>>> K8S Controlplane Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250823-57237-aenbtn.sh

k8s-ctr: >>>> Route Add Config Start <<<<

k8s-ctr: >>>> Route Add Config End <<<<

==> k8s-w1: Box 'bento/ubuntu-24.04' could not be found. Attempting to find and install...

k8s-w1: Box Provider: virtualbox

k8s-w1: Box Version: 202508.03.0

==> k8s-w1: Loading metadata for box 'bento/ubuntu-24.04'

k8s-w1: URL: https://vagrantcloud.com/api/v2/vagrant/bento/ubuntu-24.04

==> k8s-w1: Adding box 'bento/ubuntu-24.04' (v202508.03.0) for provider: virtualbox (amd64)

==> k8s-w1: Cloning VM...

==> k8s-w1: Matching MAC address for NAT networking...

==> k8s-w1: Checking if box 'bento/ubuntu-24.04' version '202508.03.0' is up to date...

==> k8s-w1: Setting the name of the VM: k8s-w1

==> k8s-w1: Clearing any previously set network interfaces...

==> k8s-w1: Preparing network interfaces based on configuration...

k8s-w1: Adapter 1: nat

k8s-w1: Adapter 2: hostonly

==> k8s-w1: Forwarding ports...

k8s-w1: 22 (guest) => 60001 (host) (adapter 1)

==> k8s-w1: Running 'pre-boot' VM customizations...

==> k8s-w1: Booting VM...

==> k8s-w1: Waiting for machine to boot. This may take a few minutes...

k8s-w1: SSH address: 127.0.0.1:60001

k8s-w1: SSH username: vagrant

k8s-w1: SSH auth method: private key

k8s-w1:

k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w1: this with a newly generated keypair for better security.

k8s-w1:

k8s-w1: Inserting generated public key within guest...

k8s-w1: Removing insecure key from the guest if it's present...

k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w1: Machine booted and ready!

==> k8s-w1: Checking for guest additions in VM...

k8s-w1: The guest additions on this VM do not match the installed version of

k8s-w1: VirtualBox! In most cases this is fine, but in rare cases it can

k8s-w1: prevent things such as shared folders from working properly. If you see

k8s-w1: shared folder errors, please make sure the guest additions within the

k8s-w1: virtual machine match the version of VirtualBox you have installed on

k8s-w1: your host and reload your VM.

k8s-w1:

k8s-w1: Guest Additions Version: 7.1.12

k8s-w1: VirtualBox Version: 7.2

==> k8s-w1: Setting hostname...

==> k8s-w1: Configuring and enabling network interfaces...

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250823-57237-ijctwz.sh

k8s-w1: >>>> Initial Config Start <<<<

k8s-w1: [TASK 1] Setting Profile & Bashrc

k8s-w1: [TASK 2] Disable AppArmor

k8s-w1: [TASK 3] Disable and turn off SWAP

k8s-w1: [TASK 4] Install Packages

k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w1: [TASK 6] Install Packages & Helm

k8s-w1: [TASK 7] Install pwru

k8s-w1: >>>> Initial Config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250823-57237-i6tnfg.sh

k8s-w1: >>>> K8S Node config Start <<<<

k8s-w1: [TASK 1] K8S Controlplane Join

k8s-w1: >>>> K8S Node config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250823-57237-u615su.sh

k8s-w1: >>>> Route Add Config Start <<<<

k8s-w1: >>>> Route Add Config End <<<<

==> router: Box 'bento/ubuntu-24.04' could not be found. Attempting to find and install...

router: Box Provider: virtualbox

router: Box Version: 202508.03.0

==> router: Loading metadata for box 'bento/ubuntu-24.04'

router: URL: https://vagrantcloud.com/api/v2/vagrant/bento/ubuntu-24.04

==> router: Adding box 'bento/ubuntu-24.04' (v202508.03.0) for provider: virtualbox (amd64)

==> router: Cloning VM...

==> router: Matching MAC address for NAT networking...

==> router: Checking if box 'bento/ubuntu-24.04' version '202508.03.0' is up to date...

==> router: Setting the name of the VM: router

==> router: Clearing any previously set network interfaces...

==> router: Preparing network interfaces based on configuration...

router: Adapter 1: nat

router: Adapter 2: hostonly

router: Adapter 3: hostonly

==> router: Forwarding ports...

router: 22 (guest) => 60009 (host) (adapter 1)

==> router: Running 'pre-boot' VM customizations...

==> router: Booting VM...

==> router: Waiting for machine to boot. This may take a few minutes...

router: SSH address: 127.0.0.1:60009

router: SSH username: vagrant

router: SSH auth method: private key

router:

router: Vagrant insecure key detected. Vagrant will automatically replace

router: this with a newly generated keypair for better security.

router:

router: Inserting generated public key within guest...

router: Removing insecure key from the guest if it's present...

router: Key inserted! Disconnecting and reconnecting using new SSH key...

==> router: Machine booted and ready!

==> router: Checking for guest additions in VM...

router: The guest additions on this VM do not match the installed version of

router: VirtualBox! In most cases this is fine, but in rare cases it can

router: prevent things such as shared folders from working properly. If you see

router: shared folder errors, please make sure the guest additions within the

router: virtual machine match the version of VirtualBox you have installed on

router: your host and reload your VM.

router:

router: Guest Additions Version: 7.1.12

router: VirtualBox Version: 7.2

==> router: Setting hostname...

==> router: Configuring and enabling network interfaces...

==> router: Running provisioner: shell...

router: Running: /tmp/vagrant-shell20250823-57237-9r906a.sh

router: >>>> Initial Config Start <<<<

router: [TASK 0] Setting eth2

router: [TASK 1] Setting Profile & Bashrc

router: [TASK 2] Disable AppArmor

router: [TASK 3] Add Kernel setting - IP Forwarding

router: [TASK 5] Install Packages

router: [TASK 6] Install Apache

router: >>>> Initial Config End <<<<

|

2. Check /etc/hosts

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat /etc/hosts

|

✅ Output

|

127.0.0.1 localhost

127.0.1.1 vagrant

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.2.1 k8s-ctr k8s-ctr

192.168.10.100 k8s-ctr

192.168.10.200 router

192.168.20.100 k8s-w0

192.168.10.101 k8s-w1

|

3. Verify SSH access between nodes

| (⎈|HomeLab:N/A) root@k8s-ctr:~# for i in k8s-w1 router ; do echo ">> node : $i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@$i hostname; echo; done

|

✅ Output

| >> node : k8s-w1 <<

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

>> node : router <<

Warning: Permanently added 'router' (ED25519) to the list of known hosts.

router

|

4. Check the Cilium Pod CIDRs

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

|

✅ Output

| "podCIDRs": [

"172.20.0.0/24"

],

--

"podCIDRs": [

"172.20.1.0/24"

],

|

- k8s-ctr: 172.20.0.0/24

- k8s-w1: 172.20.1.0/24
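
The CIDR values can also be pulled out of the grep output mechanically, for example to feed them into a later check. The following is a minimal sketch that is not part of the original lab: `extract_pod_cidrs` is a hypothetical helper name, and it assumes the text on stdin contains IPv4 CIDRs only.

```shell
# Hypothetical helper: print every IPv4 CIDR found on stdin, one per line.
extract_pod_cidrs() {
  grep -Eo '[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/[0-9]+'
}
```

On the control plane node it could be chained onto the command used above: `kubectl get ciliumnode -o json | grep podCIDRs -A2 | extract_pod_cidrs`.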

5. Check the Cilium IPAM mode

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep ^ipam

ipam cluster-pool

ipam-cilium-node-update-rate 15s

|

6. Inspect the iptables rules

(1) Check the nat table rules

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t nat -S

|

✅ Output

| -P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N CILIUM_OUTPUT_nat

-N CILIUM_POST_nat

-N CILIUM_PRE_nat

-N KUBE-KUBELET-CANARY

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

|

(2) Check the filter table rules

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t filter -S

|

✅ Output

| -P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N CILIUM_FORWARD

-N CILIUM_INPUT

-N CILIUM_OUTPUT

-N KUBE-FIREWALL

-N KUBE-KUBELET-CANARY

-A INPUT -m comment --comment "cilium-feeder: CILIUM_INPUT" -j CILIUM_INPUT

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "cilium-feeder: CILIUM_FORWARD" -j CILIUM_FORWARD

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT" -j CILIUM_OUTPUT

-A OUTPUT -j KUBE-FIREWALL

-A CILIUM_FORWARD -o cilium_host -m comment --comment "cilium: any->cluster on cilium_host forward accept" -j ACCEPT

-A CILIUM_FORWARD -i cilium_host -m comment --comment "cilium: cluster->any on cilium_host forward accept (nodeport)" -j ACCEPT

-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept" -j ACCEPT

-A CILIUM_FORWARD -i cilium_net -m comment --comment "cilium: cluster->any on cilium_net forward accept (nodeport)" -j ACCEPT

-A CILIUM_FORWARD -o lxc+ -m comment --comment "cilium: any->cluster on lxc+ forward accept" -j ACCEPT

-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept (nodeport)" -j ACCEPT

-A CILIUM_INPUT -m mark --mark 0x200/0xf00 -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark --mark 0xa00/0xe00 -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark --mark 0x800/0xe00 -m comment --comment "cilium: ACCEPT for l7 proxy upstream traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark ! --mark 0xe00/0xf00 -m mark ! --mark 0xd00/0xf00 -m mark ! --mark 0x400/0xf00 -m mark ! --mark 0xa00/0xe00 -m mark ! --mark 0x800/0xe00 -m mark ! --mark 0xf00/0xf00 -m comment --comment "cilium: host->any mark as from host" -j MARK --set-xmark 0xc00/0xf00

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

|

(3) Check the mangle table rules

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t mangle -S

|

✅ Output

| -P PREROUTING ACCEPT

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N CILIUM_POST_mangle

-N CILIUM_PRE_mangle

-N KUBE-IPTABLES-HINT

-N KUBE-KUBELET-CANARY

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_mangle" -j CILIUM_PRE_mangle

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_mangle" -j CILIUM_POST_mangle

-A CILIUM_PRE_mangle ! -o lo -m socket --transparent -m mark ! --mark 0xe00/0xf00 -m mark ! --mark 0x800/0xf00 -m comment --comment "cilium: any->pod redirect proxied traffic to host proxy" -j MARK --set-xmark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p tcp -m mark --mark 0x99b20200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45721 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p udp -m mark --mark 0x99b20200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45721 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t mangle -S | grep -i proxy

|

✅ Output

| -A CILIUM_PRE_mangle ! -o lo -m socket --transparent -m mark ! --mark 0xe00/0xf00 -m mark ! --mark 0x800/0xf00 -m comment --comment "cilium: any->pod redirect proxied traffic to host proxy" -j MARK --set-xmark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p tcp -m mark --mark 0x99b20200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45721 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p udp -m mark --mark 0x99b20200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45721 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

|

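- TCP and UDP traffic carrying the mark 0x99b20200 is redirected via TPROXY to the cilium-dns-egress proxy on 127.0.0.1:45721

- This ensures that Pod ↔ proxy ↔ external service traffic actually passes through the proxy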

(4) Check the raw table rules

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t raw -S

|

✅ Output

| -P PREROUTING ACCEPT

-P OUTPUT ACCEPT

-N CILIUM_OUTPUT_raw

-N CILIUM_PRE_raw

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_raw" -j CILIUM_PRE_raw

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_raw" -j CILIUM_OUTPUT_raw

-A CILIUM_OUTPUT_raw -d 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -s 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_PRE_raw -d 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_PRE_raw -s 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_PRE_raw -m mark --mark 0x200/0xf00 -m comment --comment "cilium: NOTRACK for proxy traffic" -j CT --notrack

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t raw -S | grep -i proxy

|

✅ Output

| -A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_PRE_raw -m mark --mark 0x200/0xf00 -m comment --comment "cilium: NOTRACK for proxy traffic" -j CT --notrack

|

- Connection tracking is bypassed (NOTRACK) for Pod traffic and proxy traffic

- This keeps proxy return traffic and L7 proxy upstream traffic from being dropped by the kernel's conntrack
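
To see at a glance which raw chains carry NOTRACK rules, the `iptables -t raw -S` output can be summarized per chain. This is a minimal sketch under the assumption that the rules are printed in the `-A <chain> ...` form shown above; `count_notrack_by_chain` is a hypothetical helper name, not a Cilium or iptables command.

```shell
# Hypothetical helper: read `iptables -t raw -S` output on stdin and
# print "<chain> <number of --notrack rules>" for each chain, sorted by name.
count_notrack_by_chain() {
  awk '$1 == "-A" && /--notrack/ { n[$2]++ }
       END { for (c in n) print c, n[c] }' | sort
}
```

Usage would be `iptables -t raw -S | count_notrack_by_chain`. For the rule set listed above, that corresponds to 6 rules in CILIUM_OUTPUT_raw and 3 in CILIUM_PRE_raw.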

🚀 Deploy Sample Applications and Verify Connectivity

1. Deploy the sample application

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                # Note: this value differs from the app=webpod label set above,
                # so the anti-affinity rule does not match these Pods.
                - sample-app
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF
# Result
deployment.apps/webpod created
service/webpod created
|

2. Deploy the curl-pod Pod

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF
# Result
pod/curl-pod created
|

3. Add an iptables block rule

| (⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t filter -I OUTPUT 1 -m tcp --proto tcp --dst 1.1.1.1/32 -j DROP

|

- Insert a rule at the top of the iptables OUTPUT chain to drop TCP traffic destined for 1.1.1.1
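
Because this DROP rule stays in place until it is removed, it can be handy to check for it and delete it after the experiment. The sketch below is an illustration rather than part of the original lab: `drop_rule_spec` and `output_drop_rule` are hypothetical helper names, and the rule spec assumes the rule was created exactly as above (protocol tcp with the `tcp` match module), since `-C` and `-D` only match an identical rule.

```shell
# Hypothetical helper: build the match/target part of the DROP rule
# for one IPv4 destination address.
drop_rule_spec() {
  printf '%s\n' "-p tcp -m tcp -d $1/32 -j DROP"
}

# Hypothetical helper: apply an iptables action to the OUTPUT chain.
# $1 is -C (check), -I (insert at the top) or -D (delete);
# $2 is the destination address. Requires root.
output_drop_rule() {
  # Word splitting of the rule spec is intentional here.
  # shellcheck disable=SC2046
  iptables -t filter "$1" OUTPUT $(drop_rule_spec "$2")
}
```

For example, `output_drop_rule -C 1.1.1.1 && output_drop_rule -D 1.1.1.1` would delete the rule only if it is currently present.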

4. Monitor with pwru

| (⎈|HomeLab:N/A) root@k8s-ctr:~# pwru 'dst host 1.1.1.1 and tcp and dst port 80'

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl 1.1.1.1 -v

* Trying 1.1.1.1:80...

|

✅ Monitoring result

|

2025/08/23 16:08:26 Attaching kprobes (via kprobe-multi)...

1642 / 1642 [-------------------------------------------------------------------------------------------------------------] 100.00% ? p/s

2025/08/23 16:08:26 Attached (ignored 0)

2025/08/23 16:08:26 Listening for events..

SKB CPU PROCESS NETNS MARK/x IFACE PROTO MTU LEN TUPLE FUNC

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0000 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) ip_local_out

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0000 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) __ip_local_out

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) nf_hook_slow

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) kfree_skb_reason(SKB_DROP_REASON_NETFILTER_DROP)

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) skb_release_head_state

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 0 60 10.0.2.15:60312->1.1.1.1:80(tcp) tcp_wfree

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 0 60 10.0.2.15:60312->1.1.1.1:80(tcp) skb_release_data

0xffff9879031f36e8 2 ~r/bin/curl:7924 4026531840 0 0 0x0800 0 60 10.0.2.15:60312->1.1.1.1:80(tcp) kfree_skbmem

0xffff9879031f36e8 2 <empty>:0 4026531840 0 0 0x0800 0 60 10.0.2.15:60312->1.1.1.1:80(tcp) __skb_clone

0xffff9879031f36e8 2 <empty>:0 0 0 0 0x0800 0 60 10.0.2.15:60312->1.1.1.1:80(tcp) __copy_skb_header

0xffff9879031f36e8 2 <empty>:0 4026531840 0 0 0x0000 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) ip_local_out

0xffff9879031f36e8 2 <empty>:0 4026531840 0 0 0x0000 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) __ip_local_out

0xffff9879031f36e8 2 <empty>:0 4026531840 0 0 0x0800 1500 60 10.0.2.15:60312->1.1.1.1:80(tcp) nf_hook_slow

|

- pwru monitors traffic destined for 1.1.1.1:80

- After running curl 1.1.1.1 -v, the packet drop shows up in the trace

- SKB_DROP_REASON_NETFILTER_DROP indicates that the packet was dropped by the iptables DROP rule
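
In a longer trace the drop reason is easy to miss among the other kernel functions. As a rough sketch, the trace can be reduced to the drop reasons it contains; `pwru_drop_reasons` is a hypothetical helper name, and it assumes drop reasons appear as `SKB_DROP_REASON_*` tokens, as they do in the output above.

```shell
# Hypothetical helper: read pwru output on stdin and print
# "<drop reason> <occurrences>" for each distinct SKB_DROP_REASON_* token.
pwru_drop_reasons() {
  grep -Eo 'SKB_DROP_REASON_[A-Z0-9_]+' | sort | uniq -c | awk '{ print $2, $1 }'
}
```

One possible use is to save the trace to a file while curl runs and then run `pwru_drop_reasons < trace.txt`, where `trace.txt` is a placeholder file name.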

Cilium Service Mesh

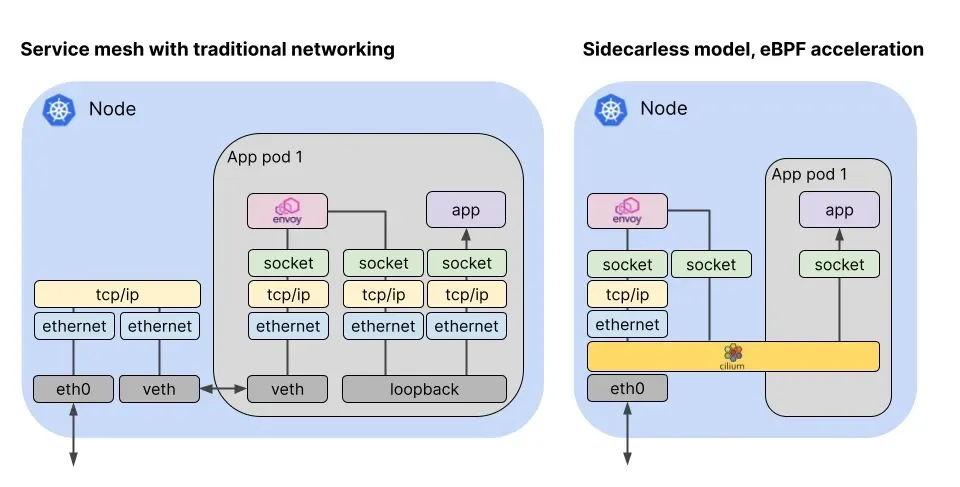

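1. What is a service mesh?

- A service mesh is an infrastructure layer that implements application networking, a concern shared by all services, transparently and outside the application code

- Developers do not have to implement network features (mTLS, routing, load balancing, and so on) themselves; the service mesh handles them

2. Cilium-based service mesh

- Cilium is a CNI-based platform that handles L3 to L7 networking with eBPF and Envoy

- A per-node Envoy is deployed, providing service mesh features without sidecars

- Its architecture and philosophy resemble Istio Ambient Mesh, but the implementation differs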

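3. How traffic is handled

(1) L3/L4

- Handled directly by Cilium with eBPF

- Performance benefit: traffic is controlled in the kernel, which reduces overhead

- Similar to the per-node model of Istio Ambient Mesh, although Istio's data path is built around userspace proxies

(2) L7

- Traffic is forwarded through the Envoy proxy

- Routing, filtering, and L7 policy are Envoy's responsibility

- The Cilium agent pushes Envoy's configuration dynamically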

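4. Comparison with Istio Ambient Mesh

(1) Similarities

- Both use a per-node proxy model

- Both provide a service mesh without sidecars

(2) Differences

- Istio: L3, L4, and L7 are all handled by userspace proxies (in Ambient mode, the per-node ztunnel for L4 and Envoy-based waypoint proxies for L7)

- Cilium: L3 and L4 are handled by eBPF; only L7 uses Envoy

- Because Cilium handles L3/L4 in the kernel with eBPF, that traffic skips the userspace proxy hop, which favors performance and resource efficiency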

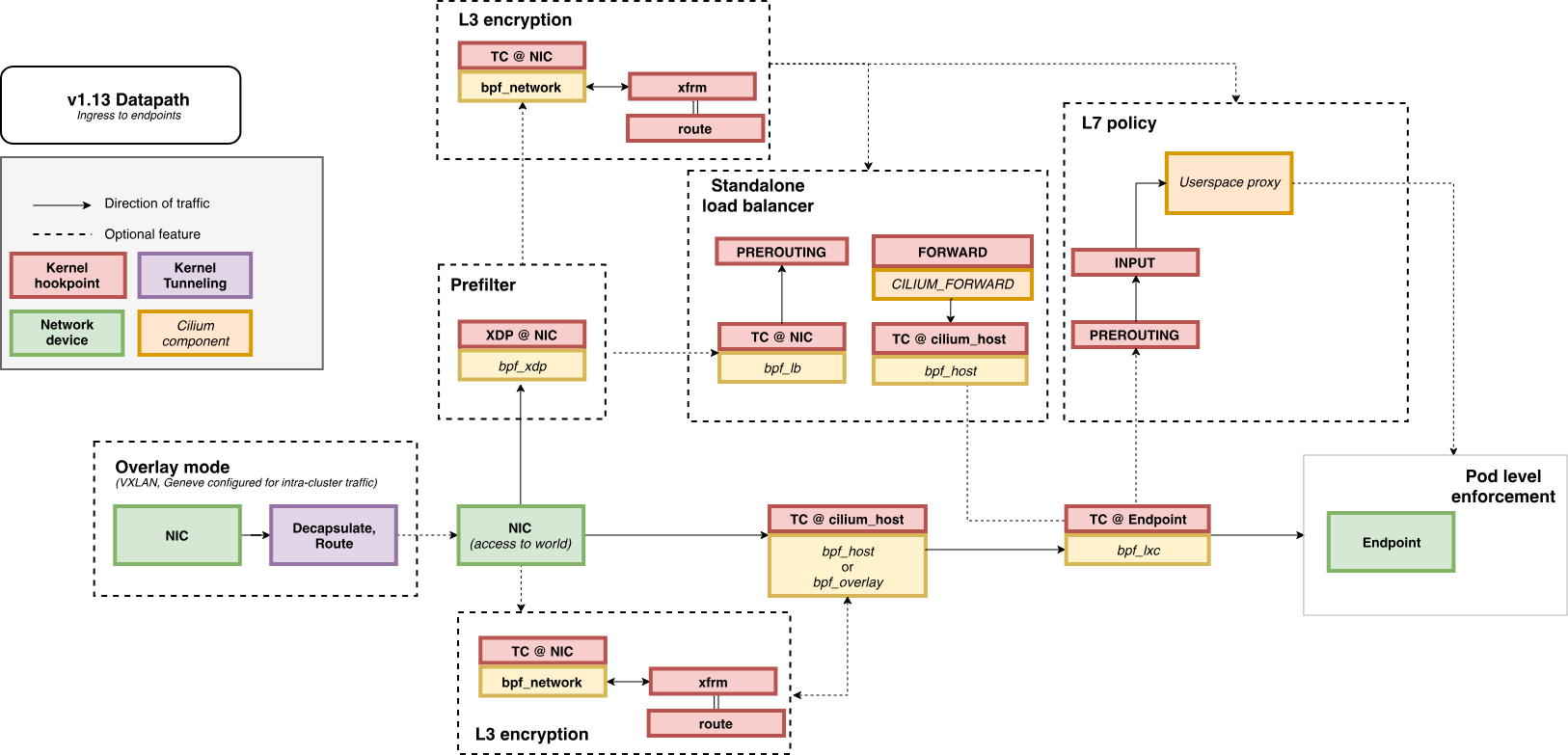

🌐 K8S Ingress Support

1. Prerequisites

- NodePort must be enabled: nodePort.enabled=true

- Alternatively, use kube-proxy replacement mode (kubeProxyReplacement=true)

- The L7 proxy must be enabled: l7Proxy=true (enabled by default)
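
These prerequisites can be checked against a running cluster by inspecting the agent configuration. The sketch below is an assumption-laden illustration: `check_ingress_prereqs` is a hypothetical helper, `enable-l7-proxy` is the key seen in this lab's `cilium config view` output, and `kube-proxy-replacement` and `enable-node-port` are assumed to be the config keys that correspond to the Helm values above.

```shell
# Hypothetical helper: read `cilium config view` output on stdin and report
# whether the Ingress prerequisites look satisfied. Exit status is 0 when ok.
check_ingress_prereqs() {
  awk '
    $1 == "enable-l7-proxy"        { l7  = $2 }
    $1 == "kube-proxy-replacement" { kpr = $2 }   # assumed key name
    $1 == "enable-node-port"       { np  = $2 }   # assumed key name
    END {
      ok = 1
      if (l7 != "true") { print "missing: L7 proxy"; ok = 0 }
      if (kpr != "true" && np != "true") {
        print "missing: NodePort or kube-proxy replacement"; ok = 0
      }
      if (ok) print "ok"
      exit (ok ? 0 : 1)
    }'
}
```

Usage would be `cilium config view | check_ingress_prereqs`. If the key names differ in a given Cilium version, the patterns need to be adjusted accordingly.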

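2. How Ingress works

- Cilium Ingress / Gateway API can be exposed as a LoadBalancer or NodePort Service

- Optionally, exposure on the host network is also supported

- Traffic flow:

  - Traffic arriving at the Service port is intercepted by Cilium's eBPF code

  - It is then forwarded to the Envoy proxy (a userspace proxy) using the kernel's TPROXY facility

  - Envoy processes the traffic while preserving the original destination IP/port and source IP/port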

3. Traffic data flow

4. Ingress caveats

- Cilium Ingress and Cilium Gateway API cannot be enabled at the same time

- They can, however, be used alongside other Ingress controllers (for example, the NGINX Ingress Controller)

5. Check the L7 load-balancing algorithm

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -E '^loadbalancer|l7'

|

✅ Output

| enable-l7-proxy true

loadbalancer-l7 envoy

loadbalancer-l7-algorithm round_robin

loadbalancer-l7-ports

|

- Cilium supports L7 load balancing through Envoy

- The current configuration uses round_robin

6. Check the reserved IPs for Ingress

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -- cilium ip list | grep ingress

|

✅ Output

| 172.20.0.86/32 reserved:ingress

172.20.1.225/32 reserved:ingress

|

- A reserved IP is allocated per node for handling Ingress traffic

- These IPs are reserved internally so that Envoy on each node can process Ingress traffic
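
When these addresses are needed in a script, they can be extracted from the `cilium ip list` output. A minimal sketch, assuming the two-column `<ip>/32 reserved:ingress` layout shown above; `ingress_ips` is a hypothetical helper name.

```shell
# Hypothetical helper: read `cilium ip list` output on stdin and print the
# address part of every entry whose identity starts with reserved:ingress.
ingress_ips() {
  awk '$2 ~ /^reserved:ingress/ { split($1, a, "/"); print a[1] }'
}
```

It could be used as `kubectl exec -n kube-system ds/cilium -- cilium ip list | ingress_ips`; dropping `-it` avoids terminal control characters in the piped output.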

7. Verify the cilium-envoy DaemonSet deployment

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ds -n kube-system cilium-envoy -owide

|

✅ Output

| NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

cilium-envoy 2 2 2 2 2 kubernetes.io/os=linux 137m cilium-envoy quay.io/cilium/cilium-envoy:v1.34.4-1754895458-68cffdfa568b6b226d70a7ef81fc65dda3b890bf@sha256:247e908700012f7ef56f75908f8c965215c26a27762f296068645eb55450bda2 k8s-app=cilium-envoy

|

cilium-envoy is deployed to every node as a DaemonSet.

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -n kube-system -l k8s-app=cilium-envoy -owide

|

✅ Output

| NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cilium-envoy-hwwwn 1/1 Running 0 137m 192.168.10.100 k8s-ctr <none> <none>

cilium-envoy-jmvfl 1/1 Running 0 135m 192.168.10.101 k8s-w1 <none> <none>

|

- One Pod is running on each of the control plane node (k8s-ctr) and the worker node (k8s-w1)

8. Inspect the cilium-envoy Pod in detail

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe pod -n kube-system -l k8s-app=cilium-envoy

|

✅ Output

| ...

Containers:

cilium-envoy:

Container ID: containerd://f0f15510265c3662b0381b0a6a93575bbc7ea6b819720763108942604d4a906f

Image: quay.io/cilium/cilium-envoy:v1.34.4-1754895458-68cffdfa568b6b226d70a7ef81fc65dda3b890bf@sha256:247e908700012f7ef56f75908f8c965215c26a27762f296068645eb55450bda2

Image ID: quay.io/cilium/cilium-envoy@sha256:247e908700012f7ef56f75908f8c965215c26a27762f296068645eb55450bda2

Port: 9964/TCP

Host Port: 9964/TCP

Command:

/usr/bin/cilium-envoy-starter

Args:

--

-c /var/run/cilium/envoy/bootstrap-config.json

--base-id 0

--log-level info

State: Running

Started: Sat, 23 Aug 2025 14:32:49 +0900

Ready: True

Restart Count: 0

Liveness: http-get http://127.0.0.1:9878/healthz delay=0s timeout=5s period=30s #success=1 #failure=10

Readiness: http-get http://127.0.0.1:9878/healthz delay=0s timeout=5s period=30s #success=1 #failure=3

Startup: http-get http://127.0.0.1:9878/healthz delay=5s timeout=1s period=2s #success=1 #failure=105

Environment:

K8S_NODE_NAME: (v1:spec.nodeName)

CILIUM_K8S_NAMESPACE: kube-system (v1:metadata.namespace)

KUBERNETES_SERVICE_HOST: 192.168.10.100

KUBERNETES_SERVICE_PORT: 6443

Mounts:

/sys/fs/bpf from bpf-maps (rw)

/var/run/cilium/envoy/ from envoy-config (ro)

/var/run/cilium/envoy/artifacts from envoy-artifacts (ro)

/var/run/cilium/envoy/sockets from envoy-sockets (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-hpv9k (ro)

...

Volumes:

envoy-sockets:

Type: HostPath (bare host directory volume)

Path: /var/run/cilium/envoy/sockets

HostPathType: DirectoryOrCreate

envoy-artifacts:

Type: HostPath (bare host directory volume)

Path: /var/run/cilium/envoy/artifacts

HostPathType: DirectoryOrCreate

envoy-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: cilium-envoy-config

Optional: false

bpf-maps:

Type: HostPath (bare host directory volume)

Path: /sys/fs/bpf

HostPathType: DirectoryOrCreate

kube-api-access-hpv9k:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

|

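- An Envoy listener runs on port 9964/TCP

- The BPF maps (/sys/fs/bpf) and the Envoy sockets and artifacts directories are mounted via HostPath, while the Envoy configuration (/var/run/cilium/envoy/) comes from the cilium-envoy-config ConfigMap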

9. Check the Envoy sockets and configuration files

| (⎈|HomeLab:N/A) root@k8s-ctr:~# ls -al /var/run/cilium/envoy/sockets

|

✅ Output

| total 0

drwxr-xr-x 3 root root 120 Aug 23 14:33 .

drwxr-xr-x 4 root root 80 Aug 23 14:32 ..

srw-rw---- 1 root 1337 0 Aug 23 14:33 access_log.sock

srwxr-xr-x 1 root root 0 Aug 23 14:32 admin.sock

drwxr-xr-x 3 root root 60 Aug 23 14:33 envoy

srw-rw---- 1 root 1337 0 Aug 23 14:33 xds.sock

|

Unix sockets such as admin.sock, access_log.sock, and xds.sock exist under /var/run/cilium/envoy/sockets.

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium-envoy -- ls -al /var/run/cilium/envoy

|

✅ Output

| total 12

drwxrwxrwx 5 root root 4096 Aug 23 05:32 .

drwxr-xr-x 3 root root 4096 Aug 23 05:32 ..

drwxr-xr-x 2 root root 4096 Aug 23 05:32 ..2025_08_23_05_32_12.382647784

lrwxrwxrwx 1 root root 31 Aug 23 05:32 ..data -> ..2025_08_23_05_32_12.382647784

drwxr-xr-x 2 root root 40 Aug 23 05:32 artifacts

lrwxrwxrwx 1 root root 28 Aug 23 05:32 bootstrap-config.json -> ..data/bootstrap-config.json

drwxr-xr-x 3 root root 120 Aug 23 05:33 sockets

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system get configmap cilium-envoy-config -o json \

| jq -r '.data["bootstrap-config.json"]' \

| jq .

|

✅ Output

| {

"admin": {

"address": {

"pipe": {

"path": "/var/run/cilium/envoy/sockets/admin.sock"

}

}

},

...

"listeners": [

{

"address": {

"socketAddress": {

"address": "0.0.0.0",

"portValue": 9964

}

},

...

|

- bootstrap-config.json is loaded from the cilium-envoy-config ConfigMap

- Listener port: 9964

- Admin socket: /var/run/cilium/envoy/sockets/admin.sock
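
Since the admin interface is bound to a Unix socket rather than a TCP port, it has to be queried through that socket. The following is a sketch under stated assumptions: `envoy_admin_get` and the `ENVOY_ADMIN_SOCK` variable are hypothetical names, the command is expected to run as root on a node where the socket exists, and `/server_info` and `/listeners` are standard Envoy admin endpoints that may or may not be reachable in a given Cilium build.

```shell
# Hypothetical helper: send a GET request to the Envoy admin interface
# over its Unix socket. $1 is the admin path (defaults to /server_info).
envoy_admin_get() {
  sock="${ENVOY_ADMIN_SOCK:-/var/run/cilium/envoy/sockets/admin.sock}"
  path="${1:-/server_info}"
  if [ ! -S "$sock" ]; then
    echo "admin socket not found: $sock" >&2
    return 1
  fi
  # The host part of the URL is ignored when --unix-socket is used.
  curl -s --unix-socket "$sock" "http://localhost${path}"
}
```

For example, `envoy_admin_get /listeners` would list the listeners known to the node-local Envoy, if the endpoint is available.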

10. Check the BPF maps and eBPF programs

| (⎈|HomeLab:N/A) root@k8s-ctr:~# tree /sys/fs/bpf

|

✅ Output

| /sys/fs/bpf

├── cilium

│ ├── devices

│ │ ├── cilium_host

│ │ │ └── links

│ │ │ ├── cil_from_host

│ │ │ └── cil_to_host

│ │ ├── cilium_net

│ │ │ └── links

│ │ │ └── cil_to_host

│ │ ├── eth0

│ │ │ └── links

│ │ │ ├── cil_from_netdev

│ │ │ └── cil_to_netdev

│ │ └── eth1

│ │ └── links

│ │ ├── cil_from_netdev

│ │ └── cil_to_netdev

│ ├── endpoints

│ │ ├── 1161

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 1294

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 1308

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 1347

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 1449

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 2479

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ ├── 3045

│ │ │ └── links

│ │ │ ├── cil_from_container

│ │ │ └── cil_to_container

│ │ └── 83

│ │ └── links

│ │ ├── cil_from_container

│ │ └── cil_to_container

│ └── socketlb

│ └── links

│ └── cgroup

│ ├── cil_sock4_connect

│ ├── cil_sock4_getpeername

│ ├── cil_sock4_post_bind

│ ├── cil_sock4_recvmsg

│ ├── cil_sock4_sendmsg

│ ├── cil_sock6_connect

│ ├── cil_sock6_getpeername

│ ├── cil_sock6_post_bind

│ ├── cil_sock6_recvmsg

│ ├── cil_sock6_sendmsg

│ └── cil_sock_release

└── tc

└── globals

├── cilium_auth_map

├── cilium_call_policy

├── cilium_calls_00083

├── cilium_calls_01161

├── cilium_calls_01294

├── cilium_calls_01308

├── cilium_calls_01347

├── cilium_calls_01449

├── cilium_calls_02479

├── cilium_calls_03045

├── cilium_calls_hostns_00637

├── cilium_calls_netdev_00002

├── cilium_calls_netdev_00003

├── cilium_calls_netdev_00004

├── cilium_ct4_global

├── cilium_ct_any4_global

├── cilium_egresscall_policy

├── cilium_events

├── cilium_ipcache_v2

├── cilium_ipv4_frag_datagrams

├── cilium_l2_responder_v4

├── cilium_lb4_affinity

├── cilium_lb4_backends_v3

├── cilium_lb4_reverse_nat

├── cilium_lb4_reverse_sk

├── cilium_lb4_services_v2

├── cilium_lb4_source_range

├── cilium_lb_affinity_match

├── cilium_lxc

├── cilium_metrics

├── cilium_node_map_v2

├── cilium_nodeport_neigh4

├── cilium_policystats

├── cilium_policy_v2_00083

├── cilium_policy_v2_00637

├── cilium_policy_v2_01161

├── cilium_policy_v2_01294

├── cilium_policy_v2_01308

├── cilium_policy_v2_01347

├── cilium_policy_v2_01449

├── cilium_policy_v2_02479

├── cilium_policy_v2_03045

├── cilium_ratelimit

├── cilium_ratelimit_metrics

├── cilium_runtime_config

├── cilium_signals

├── cilium_skip_lb4

├── cilium_snat_v4_alloc_retries

└── cilium_snat_v4_external

33 directories, 83 files

|

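- /sys/fs/bpf/cilium holds the pinned BPF links under devices, endpoints, and socketlb; the shared maps are pinned under /sys/fs/bpf/tc/globals

- Cilium uses these eBPF programs and maps for socket-level load balancing, packet-path control, and policy enforcement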
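The endpoint directories and the per-endpoint map names in the tree output carry the same numeric IDs (endpoint 83 pairs with cilium_policy_v2_00083). A minimal sketch of that relationship, assuming the default bpffs mount at /sys/fs/bpf; `list_endpoint_ids` and `policy_map_path` are hypothetical helper names, not Cilium tooling:

```shell
# Sketch: list Cilium endpoint IDs by reading the pinned-link directories.
# The first argument is the bpffs root (assumed default: /sys/fs/bpf).
list_endpoint_ids() {
  bpf_root="${1:-/sys/fs/bpf}"
  for dir in "$bpf_root"/cilium/endpoints/*/; do
    [ -d "$dir" ] || continue      # skip the literal pattern when nothing matches
    basename "$dir"
  done | sort -n
}

# Print the policy map path for an endpoint ID, following the zero-padded
# naming seen in the tree output (83 -> cilium_policy_v2_00083).
policy_map_path() {
  printf '%s/tc/globals/cilium_policy_v2_%05d\n' "${2:-/sys/fs/bpf}" "$1"
}

# Intended use on a node (needs root to read bpffs):
#   for id in $(list_endpoint_ids); do ls -l "$(policy_map_path "$id")"; done
```

The loop in the usage comment only checks that each endpoint has a matching pinned policy map; it does not read map contents.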

11. Check the cilium-envoy Service and Endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system cilium-envoy

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cilium-envoy ClusterIP None <none> 9964/TCP 145m

NAME ENDPOINTS AGE

endpoints/cilium-envoy 192.168.10.100:9964,192.168.10.101:9964 145m

|

- The cilium-envoy Service exists as a headless ClusterIP Service (CLUSTER-IP: None)

- It maps to port 9964 of the per-node Envoy instances

- Endpoints: 192.168.10.100:9964, 192.168.10.101:9964

12. Check the cilium-ingress Service

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system cilium-ingress

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cilium-ingress LoadBalancer 10.96.199.58 <pending> 80:31809/TCP,443:30358/TCP 145m

NAME ENDPOINTS AGE

endpoints/cilium-ingress 192.192.192.192:9999 145m

|

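- The cilium-ingress Service is created with type LoadBalancer

- ClusterIP: 10.96.199.58

- External-IP: <pending> (LB IPAM is not configured yet)

- Ports: 80, 443

- Endpoints is set to an internal logical address (192.192.192.192:9999) rather than a pod IP

- It acts as a logical endpoint so that every node can accept Ingress traffic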

⚙️ Configure LB IPAM and verify: CiliumL2AnnouncementPolicy

1. Check the L2 announcement settings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep l2

|

✅ Output

| enable-l2-announcements true

enable-l2-neigh-discovery false

|

2. Create a LoadBalancer IP pool

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: "cilium.io/v2"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "cilium-lb-ippool"
spec:
  blocks:
  - start: "192.168.10.211"
    stop: "192.168.10.215"

EOF

# Result

ciliumloadbalancerippool.cilium.io/cilium-lb-ippool created

|

- Creating the CiliumLoadBalancerIPPool resource assigns the range 192.168.10.211 to 192.168.10.215 as the LB IP pool
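As a quick sanity check on the pool size, the block can be counted from its two ends. This is a minimal sketch that assumes both addresses sit in the same /24, as they do here; `pool_size` is a hypothetical helper name:

```shell
# Sketch: count the addresses in a start..stop block.
# Assumption: start and stop share the same first three octets.
pool_size() {
  [ "${1%.*}" = "${2%.*}" ] || { echo "different /24 prefixes" >&2; return 1; }
  echo $(( ${2##*.} - ${1##*.} + 1 ))
}

pool_size 192.168.10.211 192.168.10.215   # prints 5
```

Five addresses in total means that once one Service has been given an IP, four remain available.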

3. Check the assigned LB external IP

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ippool

|

✅ Output

| NAME DISABLED CONFLICTING IPS AVAILABLE AGE

cilium-lb-ippool false False 4 16s

|

- Four of the five pool addresses remain available; one (192.168.10.211) is already in use

| (⎈|HomeLab:N/A) root@k8s-ctr:~# k get svc -A

|

✅ Output

| NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 153m

default webpod ClusterIP 10.96.10.7 <none> 80/TCP 59m

kube-system cilium-envoy ClusterIP None <none> 9964/TCP 153m

kube-system cilium-ingress LoadBalancer 10.96.199.58 192.168.10.211 80:31809/TCP,443:30358/TCP 153m

kube-system hubble-metrics ClusterIP None <none> 9965/TCP 153m

kube-system hubble-peer ClusterIP 10.96.28.176 <none> 443/TCP 153m

kube-system hubble-relay ClusterIP 10.96.83.176 <none> 80/TCP 153m

kube-system hubble-ui NodePort 10.96.222.67 <none> 80:30003/TCP 153m

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 153m

kube-system metrics-server ClusterIP 10.96.80.249 <none> 443/TCP 153m

|

- The cilium-ingress Service is automatically assigned 192.168.10.211 as its external IP

- Service type: LoadBalancer; ports 80 and 443 are exposed

4. Configure the Cilium L2 announcement policy

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: "cilium.io/v2alpha1"
kind: CiliumL2AnnouncementPolicy
metadata:
  name: policy1
spec:
  interfaces:
  - eth1
  externalIPs: true
  loadBalancerIPs: true

EOF

# Result

ciliuml2announcementpolicy.cilium.io/policy1 created

|

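- Creating the CiliumL2AnnouncementPolicy resource enables L2 announcements: the elected node answers ARP requests for LoadBalancer IPs on eth1

- The LoadBalancer IP then becomes reachable from outside the cluster as well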

5. Check the L2 announcement leader node

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system get lease | grep "cilium-l2announce"

|

✅ Output

| cilium-l2announce-kube-system-cilium-ingress k8s-w1

|

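- Cilium elects a leader through the Kubernetes Lease mechanism, and only that node announces the LB IP

- Current leader: k8s-w1

- Traffic entering from outside for the LB IP is therefore delivered to the worker node (k8s-w1) first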
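The lease names seen in this lab follow the pattern cilium-l2announce-<namespace>-<service>, so the announcing node for a Service can be read from the HOLDER column. A minimal sketch that parses the table rows from stdin; `lease_holder` is a hypothetical helper name:

```shell
# Sketch: read "kubectl get lease" rows from stdin and print the HOLDER
# column (second field) of the lease whose name matches the argument.
lease_holder() {
  awk -v name="$1" '$1 == name { print $2 }'
}

# Intended use on the control-plane node (requires cluster access):
#   kubectl -n kube-system get lease --no-headers \
#     | lease_holder cilium-l2announce-kube-system-cilium-ingress
```

The same value is available as the holderIdentity field under spec in the Lease object, which can be read with kubectl's jsonpath output instead of parsing the table.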

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system get lease/cilium-l2announce-kube-system-cilium-ingress -o yaml | yq

|

✅ Output

| {

"apiVersion": "coordination.k8s.io/v1",

"kind": "Lease",

"metadata": {

"creationTimestamp": "2025-08-23T08:06:52Z",

"name": "cilium-l2announce-kube-system-cilium-ingress",

"namespace": "kube-system",

"resourceVersion": "12038",

"uid": "9bd1ef62-bbb3-4dfe-b7f1-f8108cac6028"

},

"spec": {

"acquireTime": "2025-08-23T08:06:52.738938Z",

"holderIdentity": "k8s-w1",

"leaseDurationSeconds": 15,

"leaseTransitions": 0,

"renewTime": "2025-08-23T08:09:19.294357Z"

}

}

|

6. Look up the LB external IP

| (⎈|HomeLab:N/A) root@k8s-ctr:~# LBIP=$(kubectl get svc -n kube-system cilium-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo $LBIP

# Result

192.168.10.211

|

7. Reachability test from inside the cluster (arping)

| (⎈|HomeLab:N/A) root@k8s-ctr:~# arping -i eth1 $LBIP -c 2

|

✅ Output

| ARPING 192.168.10.211

60 bytes from 08:00:27:92:a6:9d (192.168.10.211): index=0 time=499.579 usec

60 bytes from 08:00:27:92:a6:9d (192.168.10.211): index=1 time=586.194 usec

--- 192.168.10.211 statistics ---

2 packets transmitted, 2 packets received, 0% unanswered (0 extra)

rtt min/avg/max/std-dev = 0.500/0.543/0.586/0.043 ms

|

- An ARP request for the LB IP sent from the control-plane node (k8s-ctr) receives a reply

8. Reachability test from outside the cluster (router node)

| (⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router sudo arping -i eth1 $LBIP -c 2

|

✅ Output

| ARPING 192.168.10.211

60 bytes from 08:00:27:92:a6:9d (192.168.10.211): index=0 time=227.688 usec

60 bytes from 08:00:27:92:a6:9d (192.168.10.211): index=1 time=686.348 usec

--- 192.168.10.211 statistics ---

2 packets transmitted, 2 packets received, 0% unanswered (0 extra)

rtt min/avg/max/std-dev = 0.228/0.457/0.686/0.229 ms

|

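- An ARP request for the LB IP sent from the external node (router) also receives a reply

- The reply carries the same MAC address (08:00:27:92:a6:9d) as in the previous test, so the LB external IP (192.168.10.211) is reachable from outside the cluster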
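To compare the two arping runs mechanically, the replying MAC addresses can be pulled out of the output. A minimal sketch that assumes the output format shown above ("60 bytes from <MAC> (<IP>): ..."); `arping_macs` is a hypothetical helper name:

```shell
# Sketch: print the distinct MAC addresses that answered an arping run.
# Reads arping output on stdin; the MAC is the 4th field of reply lines.
arping_macs() {
  awk '/bytes from/ { print $4 }' | sort -u
}

# Intended use:
#   arping -i eth1 "$LBIP" -c 2 | arping_macs
```

If more than one MAC is printed, more than one host is answering for the LB IP, which would be worth investigating. The printed MAC can be compared with the output of `ip -br link show eth1` on the lease holder.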

🔍 Ingress HTTP example: checking X-Forwarded-For (XFF)

1. Deploy the Bookinfo sample application

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/bookinfo/platform/kube/bookinfo.yaml

# Result

service/details created

serviceaccount/bookinfo-details created

deployment.apps/details-v1 created

service/ratings created

serviceaccount/bookinfo-ratings created

deployment.apps/ratings-v1 created

service/reviews created

serviceaccount/bookinfo-reviews created

deployment.apps/reviews-v1 created

deployment.apps/reviews-v2 created

deployment.apps/reviews-v3 created

service/productpage created

serviceaccount/bookinfo-productpage created

deployment.apps/productpage-v1 created

|

- Creates the details, ratings, reviews (v1 to v3), and productpage Services and pods

2. Check Service and pod status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod,svc,ep

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY STATUS RESTARTS AGE

pod/curl-pod 1/1 Running 0 71m

pod/details-v1-766844796b-26zq8 1/1 Running 0 64s

pod/productpage-v1-54bb874995-58dcm 1/1 Running 0 64s

pod/ratings-v1-5dc79b6bcd-4ngj8 1/1 Running 0 64s

pod/reviews-v1-598b896c9d-jn7cn 1/1 Running 0 64s

pod/reviews-v2-556d6457d-nhshc 1/1 Running 0 64s

pod/reviews-v3-564544b4d6-hztdc 1/1 Running 0 64s

pod/webpod-697b545f57-8qdms 1/1 Running 0 72m

pod/webpod-697b545f57-cscj8 1/1 Running 0 72m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/details ClusterIP 10.96.86.35 <none> 9080/TCP 64s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 166m

service/productpage ClusterIP 10.96.6.128 <none> 9080/TCP 64s

service/ratings ClusterIP 10.96.195.236 <none> 9080/TCP 64s

service/reviews ClusterIP 10.96.172.158 <none> 9080/TCP 64s

service/webpod ClusterIP 10.96.10.7 <none> 80/TCP 72m

NAME ENDPOINTS AGE

endpoints/details 172.20.1.43:9080 64s

endpoints/kubernetes 192.168.10.100:6443 166m

endpoints/productpage 172.20.1.147:9080 64s

endpoints/ratings 172.20.1.210:9080 64s

endpoints/reviews 172.20.1.176:9080,172.20.1.178:9080,172.20.1.239:9080 64s

endpoints/webpod 172.20.0.57:80,172.20.1.146:80 72m

|

- Every pod shows READY 1/1: unlike Istio's sidecar model, Cilium injects no sidecar and relies on one Envoy per node, a layout similar to Ambient Mesh

3. Check the Cilium IngressClass

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ingressclasses.networking.k8s.io

|

✅ Output

| NAME CONTROLLER PARAMETERS AGE

cilium cilium.io/ingress-controller <none> 167m

|

4. Create the Ingress resource

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: basic-ingress
  namespace: default
spec:
  ingressClassName: cilium
  rules:
  - http:
      paths:
      - backend:
          service:
            name: details
            port:
              number: 9080
        path: /details
        pathType: Prefix
      - backend:
          service:
            name: productpage
            port:
              number: 9080
        path: /
        pathType: Prefix

EOF

# Result

ingress.networking.k8s.io/basic-ingress created

|

- Path-based routing rules:

- /details → details:9080

- / (default) → productpage:9080
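The two Prefix rules can be modelled as a longest-match lookup on whole path elements, which is how the Kubernetes Ingress specification describes pathType: Prefix. The sketch below hard-codes this lab's two rules and is only a model of the matching logic, not how Cilium or Envoy implements it; `route_for_path` is a hypothetical helper name:

```shell
# Sketch: pick the backend for a request path under the two Prefix rules
# of basic-ingress. Prefix matching works on whole path elements, so
# /details and /details/1 match the /details rule, while /detailsx does not.
route_for_path() {
  case "$1" in
    /details|/details/*) echo "details:9080" ;;
    /*)                  echo "productpage:9080" ;;
    *)                   echo "no-match"; return 1 ;;
  esac
}

route_for_path /details/1   # prints details:9080
route_for_path /ratings     # prints productpage:9080
```

Because / is a catch-all prefix, any path without a more specific rule, such as /ratings, is sent to productpage, and the status code then depends on whether productpage serves that path.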

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc -n kube-system cilium-ingress

|

✅ Output

| NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress LoadBalancer 10.96.199.58 192.168.10.211 80:31809/TCP,443:30358/TCP 171m

|

- The Ingress is served through the cilium-ingress LoadBalancer Service (192.168.10.211)

5. Check the Ingress resource

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ingress

|

✅ Output

| NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 192.168.10.211 80 2m16s

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe ingress

|

✅ Output

| Name: basic-ingress

Labels: <none>

Namespace: default

Address: 192.168.10.211

Ingress Class: cilium

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/details details:9080 (172.20.1.43:9080)

/ productpage:9080 (172.20.1.147:9080)

Annotations: <none>

Events: <none>

|

- Requests to /details are forwarded to the details-v1 pod

- Requests to / are forwarded to the productpage-v1 pod

6. Ingress access test

(1) Look up the LB IP

| (⎈|HomeLab:N/A) root@k8s-ctr:~# LBIP=$(kubectl get svc -n kube-system cilium-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo $LBIP

# Result

192.168.10.211

|

(2) Request /

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -so /dev/null -w "%{http_code}\n" http://$LBIP/

200

|

(3) Request /details/1

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -so /dev/null -w "%{http_code}\n" http://$LBIP/details/1

200

|

(4) Request /ratings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -so /dev/null -w "%{http_code}\n" http://$LBIP/ratings

404

|

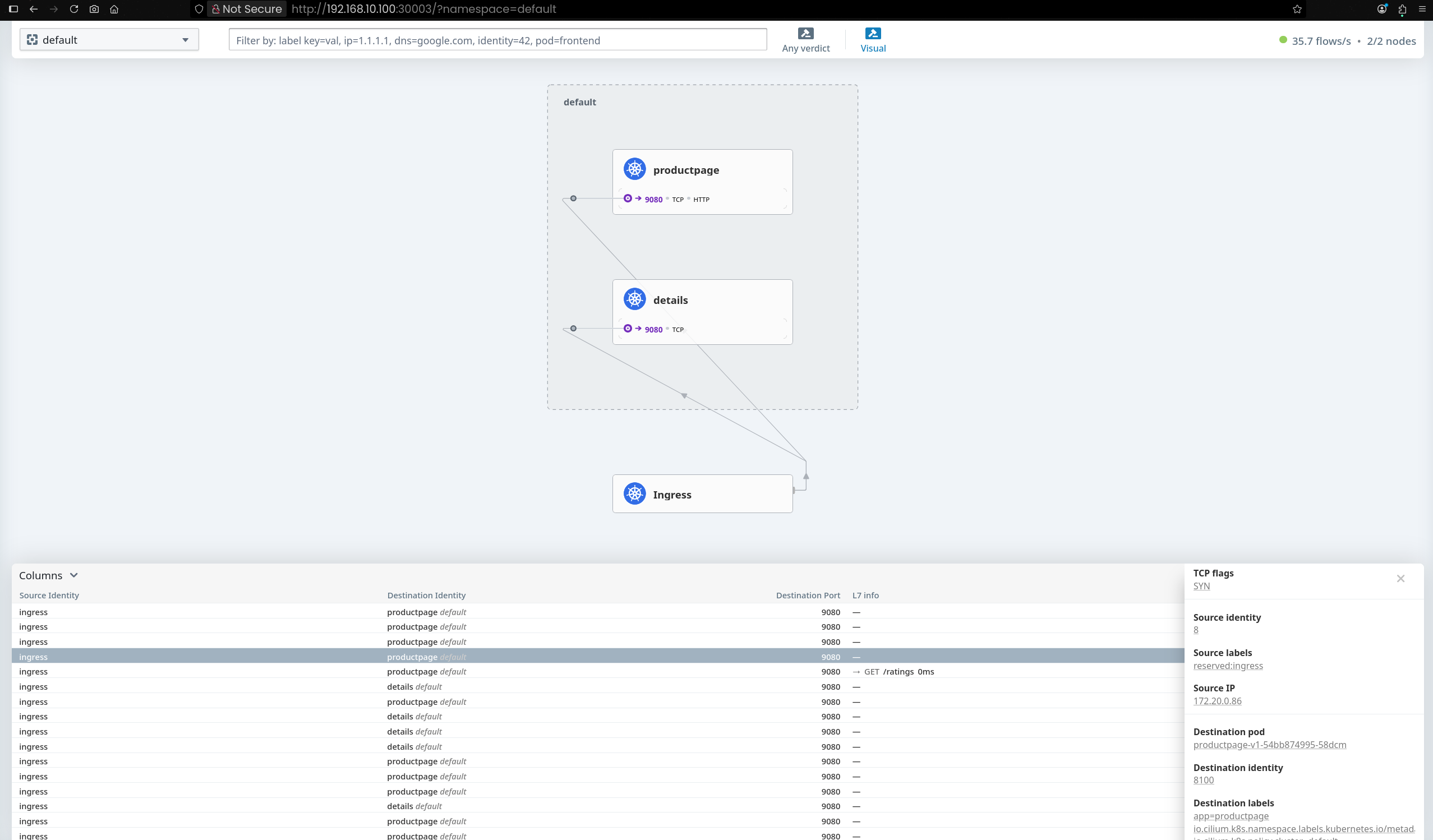

7. Hubble L7 monitoring

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

hubble observe -f -t l7

|

(1) Request / and /details/1

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -so /dev/null -w "%{http_code}\n" http://$LBIP/

curl -so /dev/null -w "%{http_code}\n" http://$LBIP/details/1

|

✅ Monitoring result

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

hubble observe -f -t l7

[1] 9942

ℹ️ Hubble Relay is available at 127.0.0.1:4245

Aug 23 08:43:53.904: 127.0.0.1:58506 (ingress) -> default/productpage-v1-54bb874995-58dcm:9080 (ID:8100) http-request FORWARDED (HTTP/1.1 GET http://192.168.10.211/)

Aug 23 08:43:53.909: 127.0.0.1:58506 (ingress) <- default/productpage-v1-54bb874995-58dcm:9080 (ID:8100) http-response FORWARDED (HTTP/1.1 200 6ms (GET http://192.168.10.211/))

Aug 23 08:43:53.929: 127.0.0.1:58518 (ingress) -> default/details-v1-766844796b-26zq8:9080 (ID:7384) http-request FORWARDED (HTTP/1.1 GET http://192.168.10.211/details/1)

Aug 23 08:43:53.931: 127.0.0.1:58518 (ingress) <- default/details-v1-766844796b-26zq8:9080 (ID:7384) http-response FORWARDED (HTTP/1.1 200 3ms (GET http://192.168.10.211/details/1))

|

- Request / → forwarded to productpage-v1, 200 response

- Request /details/1 → forwarded to details-v1, 200 response

(2) Request /ratings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -so /dev/null -w "%{http_code}\n" http://$LBIP/ratings

|

✅ Monitoring result

| Aug 23 08:45:30.871: 127.0.0.1:35458 (ingress) -> default/productpage-v1-54bb874995-58dcm:9080 (ID:8100) http-request FORWARDED (HTTP/1.1 GET http://192.168.10.211/ratings)

Aug 23 08:45:30.874: 127.0.0.1:35458 (ingress) <- default/productpage-v1-54bb874995-58dcm:9080 (ID:8100) http-response FORWARDED (HTTP/1.1 404 4ms (GET http://192.168.10.211/ratings))

|

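- Request /ratings → no /ratings rule exists, so the / prefix matches and the request is forwarded to productpage-v1, which returns 404

(3) Check the Hubble UI

8. Confirm the Envoy proxy from the response headers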

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -s -v http://$LBIP/

|

✅ Output

| * Trying 192.168.10.211:80...

* Connected to 192.168.10.211 (192.168.10.211) port 80

> GET / HTTP/1.1

> Host: 192.168.10.211

> User-Agent: curl/8.5.0

> Accept: */*

>

< HTTP/1.1 200 OK

< server: envoy

< date: Sat, 23 Aug 2025 08:50:24 GMT

< content-type: text/html; charset=utf-8

< content-length: 2080

< x-envoy-upstream-service-time: 6

<

...

|

- The response headers include server: envoy and x-envoy-upstream-service-time

- This means the request was handled through the Cilium Envoy proxy
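The header check can be scripted so that it gives a yes/no answer instead of requiring a read through verbose output. A minimal sketch, assuming the response headers arrive on stdin (for example from curl's `-D -` option, which dumps headers to stdout); `via_envoy` is a hypothetical helper name:

```shell
# Sketch: succeed when the response headers on stdin contain
# a "server: envoy" line (case-insensitive).
via_envoy() {
  grep -qi '^server: envoy'
}

# Intended use:
#   curl -s -o /dev/null -D - "http://$LBIP/" | via_envoy && echo "served via Envoy"
```

The check only looks at the server header that the client sees, so it says nothing about which backend produced the body.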

9. Locate the productpage pod

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -l app=productpage -owide

|

✅ Output

| NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

productpage-v1-54bb874995-58dcm 1/1 Running 0 36m 172.20.1.147 k8s-w1 <none> <none>

|

- productpage-v1 pod IP: 172.20.1.147

- Node: k8s-w1

- The veth interface therefore has to be inspected on the k8s-w1 node

10. Check the veth interface and routing information

| vagrant ssh k8s-w1

root@k8s-w1:~# ip -c route

|

✅ Output

| default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

1.214.68.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

61.41.153.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

172.20.0.0/24 via 192.168.10.100 dev eth1 proto kernel

172.20.1.43 dev lxc18af75bb5442 proto kernel scope link

172.20.1.146 dev lxc31dabfbe894f proto kernel scope link

172.20.1.147 dev lxcc960423e84e9 proto kernel scope link

172.20.1.176 dev lxc53d718f372d3 proto kernel scope link

172.20.1.178 dev lxc298e2d514c9d proto kernel scope link

172.20.1.210 dev lxc7edf0846d346 proto kernel scope link

172.20.1.239 dev lxc0214606ce0ed proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

|

- According to the ip -c route output, the pod at 172.20.1.147 is reached through the veth interface lxcc960423e84e9
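Rather than reading the interface name off the routing table by eye, it can be extracted from the per-pod host route. A minimal sketch that assumes the "<pod-ip> dev <veth> ..." route format shown above and plain `ip route` output without the `-c` colour option; `veth_for_ip` is a hypothetical helper name:

```shell
# Sketch: print the device of the host route whose destination is
# exactly the given pod IP. Reads "ip route" output on stdin.
veth_for_ip() {
  awk -v ip="$1" '$1 == ip && $2 == "dev" { print $3 }'
}

# Intended use on the node that hosts the pod:
#   PROVETH=$(ip route | veth_for_ip 172.20.1.147)
```

Subnet routes such as 172.20.0.0/24 are ignored because only an exact match on the first field is accepted.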

11. Prepare HTTP packet capture with ngrep

| root@k8s-w1:~# PROID=172.20.1.147

root@k8s-w1:~# PROVETH=lxcc960423e84e9

|

| root@k8s-w1:~# ngrep -tW byline -d $PROVETH '' 'tcp port 9080'

# Waiting for traffic...

lxcc960423e84e9: no IPv4 address assigned: Cannot assign requested address

interface: lxcc960423e84e9

filter: ( tcp port 9080 ) and ((ip || ip6) || (vlan && (ip || ip6)))

|

- Monitors TCP port 9080 traffic on the veth used by the productpage pod (lxcc960423e84e9)

12. Packet monitoring result

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl -s http://$LBIP

|

✅ Monitoring result

| root@k8s-w1:~# ngrep -tW byline -d $PROVETH '' 'tcp port 9080'

lxcc960423e84e9: no IPv4 address assigned: Cannot assign requested address

interface: lxcc960423e84e9

filter: ( tcp port 9080 ) and ((ip || ip6) || (vlan && (ip || ip6)))

###

T 2025/08/23 18:00:13.976515 172.20.1.147:9080 -> 172.20.0.86:41677 [AP] #3

HTTP/1.1 200 OK.

Server: gunicorn.

Date: Sat, 23 Aug 2025 09:00:13 GMT.

Connection: keep-alive.

Content-Type: text/html; charset=utf-8.

Content-Length: 2080.

.

#

T 2025/08/23 18:00:13.976588 172.20.1.147:9080 -> 172.20.0.86:41677 [AP] #4

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1">

<style>

table {

color: #333;

background: white;

border: 1px solid grey;

font-size: 12pt;

border-collapse: collapse;

width: 100%;

}

table thead th,

table tfoot th {

color: #fff;

background: #466BB0;

}

table caption {

padding: .5em;

}

table th,

table td {

padding: .5em;

border: 1px solid lightgrey;

}

</style>

<script src="static/tailwind/tailwind.css"></script>

<div class="mx-auto px-4 sm:px-6 lg:px-8">

<div class="flex flex-col space-y-5 py-32 mx-auto max-w-7xl">

<h3 class="text-2xl">Hello! This is a simple bookstore application consisting of three services as shown below

</h3>

<table class="table table-condensed table-bordered table-hover"><tr><th>name</th><td>http://details:9080</td></tr><tr><th>endpoint</th><td>details</td></tr><tr><th>children</th><td><table class="table table-condensed table-bordered table-hover"><thead><tr><th>name</th><th>endpoint</th><th>children</th></tr></thead><tbody><tr><td>http://details:9080</td><td>details</td><td></td></tr><tr><td>http://reviews:9080</td><td>reviews</td><td><table class="table table-condensed table-bordered table-hover"><thead><tr><th>name</th><th>endpoint</th><th>children</th></tr></thead><tbody><tr><td>http://ratings:9080</td><td>ratings</td><td></td></tr></tbody></table></td></tr></tbody></table></td></tr></table>

<p>

Click on one of the links below to auto generate a request to the backend as a real user or a tester

</p>

<ul>

<li>

<a href="/productpage?u=normal" class="text-blue-500 hover:text-blue-600">Normal user</a>

</li>

<li>

<a href="/productpage?u=test" class="text-blue-500 hover:text-blue-600">Test user</a>

</li>

</ul>

</div>

</div>

##

|

📦 Installing and configuring Ingress-Nginx: coexistence with Cilium Ingress

1. Add the Ingress-Nginx Helm repo

| (⎈|HomeLab:N/A) root@k8s-ctr:~# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

# Result

"ingress-nginx" has been added to your repositories

|

2. Install the Ingress-Nginx controller

| (⎈|HomeLab:N/A) root@k8s-ctr:~# helm install ingress-nginx ingress-nginx/ingress-nginx --create-namespace -n ingress-nginx

# Result

NAME: ingress-nginx

LAST DEPLOYED: Sat Aug 23 20:54:59 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the load balancer IP to be available.

You can watch the status by running 'kubectl get service --namespace ingress-nginx ingress-nginx-controller --output wide --watch'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: example

namespace: foo

spec:

ingressClassName: nginx

rules:

- host: www.example.com

http:

paths:

- pathType: Prefix

backend:

service:

name: exampleService

port:

number: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

|

3. Check the IngressClasses (Cilium and Nginx coexisting)

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ingressclasses.networking.k8s.io

|

✅ Output

| NAME CONTROLLER PARAMETERS AGE

cilium cilium.io/ingress-controller <none> 6h24m

nginx k8s.io/ingress-nginx <none> 85s

|

- Both IngressClasses exist at the same time, which confirms that the two controllers can coexist

4. Check the Ingress-Nginx Service

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc -n ingress-nginx

|

✅ Output

| NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.96.254.255 192.168.10.212 80:31190/TCP,443:30278/TCP 2m5s

ingress-nginx-controller-admission ClusterIP 10.96.103.242 <none> 443/TCP 2m5s

|

- The ingress-nginx-controller Service is created with type LoadBalancer

- EXTERNAL-IP: 192.168.10.212; ports: 80, 443

5. Check the Ingress-Nginx pod status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -n ingress-nginx

|

✅ Output

| NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-67bbdf7d8d-cwkzh 1/1 Running 0 2m37s

|

6. Create an Nginx Ingress resource for webpod

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webpod-ingress-nginx
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.webpod.local
    http:
      paths:
      - backend:
          service:
            name: webpod
            port:
              number: 80
        path: /
        pathType: Prefix

EOF

# Result

ingress.networking.k8s.io/webpod-ingress-nginx created

|

- The Ingress is created with ingressClassName: nginx

- Requests for the host nginx.webpod.local are forwarded to the webpod Service

7. Check the Ingress resources

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ingress -w

|

✅ Output

| NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 192.168.10.211 80 3h38m

webpod-ingress-nginx nginx nginx.webpod.local 192.168.10.212 80 31s

|

- basic-ingress → Cilium Ingress (192.168.10.211)

- webpod-ingress-nginx → Nginx Ingress (192.168.10.212)

8. Nginx Ingress access test

| (⎈|HomeLab:N/A) root@k8s-ctr:~# LB2IP=$(kubectl get svc -n ingress-nginx ingress-nginx-controller -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# curl $LB2IP

curl -H "Host: nginx.webpod.local" $LB2IP

|

✅ Output

| <html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

Hostname: webpod-697b545f57-8qdms

IP: 127.0.0.1

IP: ::1

IP: 172.20.0.57

IP: fe80::2421:36ff:fe60:fa6c

RemoteAddr: 172.20.1.96:60838

GET / HTTP/1.1

Host: nginx.webpod.local

User-Agent: curl/8.5.0

Accept: */*

X-Forwarded-For: 192.168.10.100

X-Forwarded-Host: nginx.webpod.local

X-Forwarded-Port: 80

X-Forwarded-Proto: http

X-Forwarded-Scheme: http

X-Real-Ip: 192.168.10.100

X-Request-Id: 750fe9441ca3603d7eebca1fa6adaf42

X-Scheme: http

|

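- Without the Host header the request matches no rule and nginx returns 404; with Host: nginx.webpod.local the webpod application responds normally

- The request received by webpod includes X-Forwarded-For, X-Real-Ip, X-Request-Id, and related headers

- This confirms that Nginx Ingress passes the client IP (192.168.10.100) and proxy information headers on to the backend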
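Since webpod echoes the request it received, individual header values can be picked out of the response body for comparison with the client address. A minimal sketch that assumes the "Name: value" line format shown in the output above; `header_value` is a hypothetical helper name:

```shell
# Sketch: print the value of one echoed header (case-insensitive name match).
# Reads the webpod response body on stdin.
header_value() {
  awk -v h="$1" 'BEGIN { FS = ": " } tolower($1) == tolower(h) { print $2 }'
}

# Intended use:
#   curl -s -H "Host: nginx.webpod.local" "$LB2IP" | header_value X-Forwarded-For
```

In this lab the value should match the address of the node that ran curl (192.168.10.100 for k8s-ctr), while RemoteAddr shows the address the backend connection came from.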

📡 Dedicated mode

1. Confirm the limits of shared mode

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ingress

|

✅ Output

| NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 192.168.10.211 80 3h42m

webpod-ingress-nginx nginx nginx.webpod.local 192.168.10.212 80 4m52s

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# k get svc -n kube-system

|

✅ Output

| NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-envoy ClusterIP None <none> 9964/TCP 6h32m

cilium-ingress LoadBalancer 10.96.199.58 192.168.10.211 80:31809/TCP,443:30358/TCP 6h32m

hubble-metrics ClusterIP None <none> 9965/TCP 6h32m

hubble-peer ClusterIP 10.96.28.176 <none> 443/TCP 6h32m

hubble-relay ClusterIP 10.96.83.176 <none> 80/TCP 6h32m

hubble-ui NodePort 10.96.222.67 <none> 80:30003/TCP 6h32m

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 6h32m

metrics-server ClusterIP 10.96.80.249 <none> 443/TCP 6h32m

|

- By default, Cilium Ingress runs in shared mode

- Every Ingress resource of class cilium shares the same IP (192.168.10.211)

- Adding more Ingress resources keeps the same LoadBalancer IP, so they cannot be given separate IPs
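Whether several Ingress resources sit behind one address can be checked by grouping the `kubectl get ingress` table on its ADDRESS column. A minimal sketch that assumes the six-column layout shown above with every ADDRESS populated (a pending address would shift the columns); `shared_addresses` is a hypothetical helper name:

```shell
# Sketch: list addresses used by more than one Ingress.
# Reads "kubectl get ingress" output (with header row) on stdin.
# Column 1 is NAME and column 4 is ADDRESS in that layout.
shared_addresses() {
  awk 'NR > 1 { n[$4]++; who[$4] = who[$4] " " $1 }
       END { for (a in n) if (n[a] > 1) print a ":" who[a] }'
}

# Intended use:
#   kubectl get ingress | shared_addresses
```

With only basic-ingress on the cilium class nothing is printed; a second shared-mode Ingress would show up next to basic-ingress under 192.168.10.211.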

2. Create a dedicated-mode Ingress

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webpod-ingress
  namespace: default
  annotations:
    ingress.cilium.io/loadbalancer-mode: dedicated
spec:
  ingressClassName: cilium
  rules:
  - http:
      paths:
      - backend:
          service:
            name: webpod
            port:
              number: 80
        path: /
        pathType: Prefix

EOF

# Result

ingress.networking.k8s.io/webpod-ingress created

|

- Adds the ingress.cilium.io/loadbalancer-mode: dedicated annotation

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe ingress webpod-ingress

kubectl get ingress

|

✅ Output

| Name: webpod-ingress

Labels: <none>

Namespace: default

Address: 192.168.10.213

Ingress Class: cilium

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ webpod:80 (172.20.0.57:80,172.20.1.146:80)

Annotations: ingress.cilium.io/loadbalancer-mode: dedicated

Events: <none>

NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 192.168.10.211 80 3h44m

webpod-ingress cilium * 192.168.10.213 80 11s

webpod-ingress-nginx nginx nginx.webpod.local 192.168.10.212 80 7m11s

|

- Creating the Ingress resource (webpod-ingress) allocates a dedicated LoadBalancer IP

- It receives its own address, 192.168.10.213

3. Confirm the dedicated Ingress Service

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep cilium-ingress-webpod-ingress

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cilium-ingress-webpod-ingress LoadBalancer 10.96.98.203 192.168.10.213 80:30656/TCP,443:31333/TCP 107s

NAME ENDPOINTS AGE

endpoints/cilium-ingress-webpod-ingress 192.192.192.192:9999 106s

|

- The cilium-ingress-webpod-ingress LoadBalancer Service is created automatically

- EXTERNAL-IP: 192.168.10.213

4. Check the Cilium L2 announcement leader

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get lease -n kube-system | grep ingress

|

✅ Output

| cilium-l2announce-default-cilium-ingress-webpod-ingress k8s-w1 3m25s

cilium-l2announce-ingress-nginx-ingress-nginx-controller k8s-w1 14m

cilium-l2announce-kube-system-cilium-ingress k8s-ctr 4h2m

|

- cilium-l2announce-default-cilium-ingress-webpod-ingress → k8s-w1

- The external IP of webpod-ingress (192.168.10.213) is announced by the k8s-w1 node

5. Locate the webpod pods and check routing

(1) Check the pods

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -l app=webpod -owide

|

✅ Output

| NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

webpod-697b545f57-8qdms 1/1 Running 0 5h4m 172.20.0.57 k8s-ctr <none> <none>

webpod-697b545f57-cscj8 1/1 Running 0 5h4m 172.20.1.146 k8s-w1 <none> <none>

|

- Two webpod pods: 172.20.0.57 (k8s-ctr) and 172.20.1.146 (k8s-w1)

(2) Check the routing table on each node

| (⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

|

✅ Output

| default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

1.214.68.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

61.41.153.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

172.20.0.26 dev lxccd6108c2c13a proto kernel scope link

172.20.0.51 dev lxc9d0051e26697 proto kernel scope link

172.20.0.57 dev lxc8d6118f480a3 proto kernel scope link

172.20.0.74 dev lxc090a59154842 proto kernel scope link

172.20.0.88 dev lxcbe10a3d0424e proto kernel scope link

172.20.0.94 dev lxc90422e45e688 proto kernel scope link

172.20.0.161 dev lxc3ade05345e37 proto kernel scope link

172.20.0.211 dev lxc406361bdd07d proto kernel scope link

172.20.1.0/24 via 192.168.10.101 dev eth1 proto kernel

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

|

| root@k8s-w1:~# ip -c route

|

✅ Output

| default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

1.214.68.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

61.41.153.2 via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

172.20.0.0/24 via 192.168.10.100 dev eth1 proto kernel

172.20.1.43 dev lxc18af75bb5442 proto kernel scope link

172.20.1.96 dev lxc4ef349547833 proto kernel scope link

172.20.1.146 dev lxc31dabfbe894f proto kernel scope link

172.20.1.147 dev lxcc960423e84e9 proto kernel scope link

172.20.1.176 dev lxc53d718f372d3 proto kernel scope link

172.20.1.178 dev lxc298e2d514c9d proto kernel scope link

172.20.1.210 dev lxc7edf0846d346 proto kernel scope link

172.20.1.239 dev lxc0214606ce0ed proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

|

| WPODVETH=lxc8d6118f480a3 # k8s-ctr

WPODVETH=lxc31dabfbe894f # k8s-w1

|

- Identifies the veth interfaces attached to the pod IPs (lxc8d6118f480a3, lxc31dabfbe894f)

6. Capture traffic (ngrep)

| (⎈|HomeLab:N/A) root@k8s-ctr:~# WPODVETH=lxc8d6118f480a3

(⎈|HomeLab:N/A) root@k8s-ctr:~# ngrep -tW byline -d $WPODVETH '' 'tcp port 80'

lxc8d6118f480a3: no IPv4 address assigned: Cannot assign requested address

interface: lxc8d6118f480a3

filter: ( tcp port 80 ) and ((ip || ip6) || (vlan && (ip || ip6)))

|

| root@k8s-w1:~# WPODVETH=lxc31dabfbe894f

root@k8s-w1:~# ngrep -tW byline -d $WPODVETH '' 'tcp port 80'

lxc31dabfbe894f: no IPv4 address assigned: Cannot assign requested address

interface: lxc31dabfbe894f

filter: ( tcp port 80 ) and ((ip || ip6) || (vlan && (ip || ip6)))

|

- Captures tcp port 80 traffic on the veth interfaces

- Shows how an external request (curl http://192.168.10.213) is delivered to the webpod pods

7. Request monitoring result

| (⎈|HomeLab:N/A) root@k8s-ctr:~# LB2IP=$(kubectl get svc cilium-ingress-webpod-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router curl -s http://$LB2IP

sshpass -p 'vagrant' ssh vagrant@router curl -s http://$LB2IP