Cilium Study: Week 5 Notes

🔧 Lab Environment Setup

1. VirtualBox Compatibility Issue

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/Vagrantfile

vagrant up --provider=virtualbox

📢 Error

vagrant up --provider=virtualbox

The provider 'virtualbox' that was requested to back the machine

'k8s-ctr' is reporting that it isn't usable on this system. The

reason is shown below:

Vagrant has detected that you have a version of VirtualBox installed

that is not supported by this version of Vagrant. Please install one of

the supported versions listed below to use Vagrant:

4.0, 4.1, 4.2, 4.3, 5.0, 5.1, 5.2, 6.0, 6.1, 7.0, 7.1

A Vagrant update may also be available that adds support for the version

you specified. Please check www.vagrantup.com/downloads.html to download

the latest version.

- This Vagrant release supports VirtualBox only up to version 7.1.

- The Arch Linux rolling update had installed VirtualBox 7.2, which Vagrant rejects as incompatible.
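The version check that Vagrant performs can be approximated before running `vagrant up`. The following is a minimal sketch, not Vagrant's own logic: the supported list is copied from the error message above, the function names (`vbox_series`, `vbox_supported`, `pick_provider`) are hypothetical, and the sample version strings in the comments are illustrative only.

```shell
#!/usr/bin/env bash
# Supported VirtualBox series, copied from the Vagrant error message above.
SUPPORTED_VBOX="4.0 4.1 4.2 4.3 5.0 5.1 5.2 6.0 6.1 7.0 7.1"

# vbox_series: reduce a full version string (e.g. "7.2.0r170228",
# an illustrative value) to its "major.minor" series.
vbox_series() {
  printf '%s\n' "$1" | cut -d. -f1,2
}

# vbox_supported: succeed if the series of $1 appears in SUPPORTED_VBOX.
vbox_supported() {
  local series
  series="$(vbox_series "$1")"
  case " $SUPPORTED_VBOX " in
    *" $series "*) return 0 ;;
    *) return 1 ;;
  esac
}

# pick_provider: print "virtualbox" when the version is supported,
# otherwise fall back to "libvirt" (the choice made in these notes).
pick_provider() {
  if vbox_supported "$1"; then
    echo virtualbox
  else
    echo libvirt
  fi
}
```

On a host with VirtualBox installed, this could be used as `vagrant up --provider="$(pick_provider "$(VBoxManage --version)")"`, assuming the libvirt setup described in the next section is already in place.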

2. Fix: Switching to libvirt

(1) Set up libvirt

sudo systemctl enable --now libvirtd

sudo usermod -a -G libvirt $USER

newgrp libvirt

(2) Install the vagrant-libvirt plugin

vagrant plugin install vagrant-libvirt

✅ Output

Installing the 'vagrant-libvirt' plugin. This can take a few minutes...

Fetching xml-simple-1.1.9.gem

Fetching racc-1.8.1.gem

Building native extensions. This could take a while...

Fetching nokogiri-1.18.9-x86_64-linux-gnu.gem

Fetching ruby-libvirt-0.8.4.gem

Building native extensions. This could take a while...

Fetching formatador-1.2.0.gem

Fetching fog-core-2.6.0.gem

Fetching fog-xml-0.1.5.gem

Fetching fog-json-1.2.0.gem

Fetching fog-libvirt-0.13.2.gem

Fetching diffy-3.4.4.gem

Fetching vagrant-libvirt-0.12.2.gem

Installed the plugin 'vagrant-libvirt (0.12.2)'!

(3) Install network packages

sudo pacman -S dnsmasq bridge-utils iptables-nft

sudo systemctl restart libvirtd

(4) Enable the default network

sudo virsh net-start default

sudo virsh net-autostart default

sudo virsh net-list --all

✅ Output

Network default started

Network default marked as autostarted

Name State Autostart Persistent

--------------------------------------------

default active yes yes

(5) Modify the Vagrantfile

# Variables

K8SV = '1.33.2-1.1' # Kubernetes Version

CONTAINERDV = '1.7.27-1' # Containerd Version

CILIUMV = '1.18.0' # Cilium CNI Version

N = 1 # max number of worker nodes

# Base Image

BOX_IMAGE = "bento/ubuntu-24.04"

BOX_VERSION = "202508.03.0"

Vagrant.configure("2") do |config|

#-ControlPlane Node

config.vm.define "k8s-ctr" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "libvirt" do |libvirt|

libvirt.cpus = 2

libvirt.memory = 2560

end

subconfig.vm.host_name = "k8s-ctr"

subconfig.vm.network "private_network", ip: "192.168.10.100"

subconfig.vm.network "forwarded_port", guest: 22, host: 60000, auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV ]

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-ctr.sh", args: [ N, CILIUMV, K8SV ]

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add1.sh"

end

#-Worker Nodes Subnet1

(1..N).each do |i|

config.vm.define "k8s-w#{i}" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "libvirt" do |libvirt|

libvirt.cpus = 2

libvirt.memory = 1536

end

subconfig.vm.host_name = "k8s-w#{i}"

subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"

subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV]

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-w.sh"

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add1.sh"

end

end

#-Router Node

config.vm.define "router" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "libvirt" do |libvirt|

libvirt.cpus = 1

libvirt.memory = 768

end

subconfig.vm.host_name = "router"

subconfig.vm.network "private_network", ip: "192.168.10.200"

subconfig.vm.network "forwarded_port", guest: 22, host: 60009, auto_correct: true, id: "ssh"

subconfig.vm.network "private_network", ip: "192.168.20.200", auto_config: false

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/router.sh"

end

#-Worker Nodes Subnet2

config.vm.define "k8s-w0" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "libvirt" do |libvirt|

libvirt.cpus = 2

libvirt.memory = 1536

end

subconfig.vm.host_name = "k8s-w0"

subconfig.vm.network "private_network", ip: "192.168.20.100"

subconfig.vm.network "forwarded_port", guest: 22, host: 60010, auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV]

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-w.sh"

subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add2.sh"

end

end

- Removed the VirtualBox-specific settings (vb.customize, vb.name, vb.linked_clone).

- Changed the provider to libvirt.

(6) Start the cluster

vagrant up --provider=libvirt

✅ Output

...

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250815-120434-uhtz9s.sh

k8s-ctr: >>>> K8S Controlplane config Start <<<<

k8s-ctr: [TASK 1] Initial Kubernetes

k8s-w1: >>>> Initial Config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250815-120434-susr2r.sh

k8s-w1: >>>> K8S Node config Start <<<<

k8s-w1: [TASK 1] K8S Controlplane Join

k8s-w0: >>>> Initial Config End <<<<

==> k8s-w0: Running provisioner: shell...

k8s-w0: Running: /tmp/vagrant-shell20250815-120434-gmyjn3.sh

k8s-w0: >>>> K8S Node config Start <<<<

k8s-w0: [TASK 1] K8S Controlplane Join

k8s-ctr: [TASK 2] Setting kube config file

k8s-ctr: [TASK 3] Source the completion

k8s-ctr: [TASK 4] Alias kubectl to k

k8s-ctr: [TASK 5] Install Kubectx & Kubens

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: [TASK 7] Install Cilium CNI

k8s-ctr: [TASK 8] Install Cilium / Hubble CLI

k8s-ctr: cilium

k8s-w1: >>>> K8S Node config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250815-120434-45fmm8.sh

k8s-w1: >>>> Route Add Config Start <<<<

k8s-w1: >>>> Route Add Config End <<<<

k8s-ctr: hubble

k8s-ctr: [TASK 9] Remove node taint

k8s-ctr: node/k8s-ctr untainted

k8s-ctr: [TASK 10] local DNS with hosts file

k8s-ctr: [TASK 11] Dynamically provisioning persistent local storage with Kubernetes

k8s-ctr: [TASK 13] Install Metrics-server

k8s-ctr: [TASK 14] Install k9s

k8s-ctr: >>>> K8S Controlplane Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250815-120434-5mw3lc.sh

k8s-ctr: >>>> Route Add Config Start <<<<

k8s-ctr: >>>> Route Add Config End <<<<

k8s-w0: >>>> K8S Node config End <<<<

==> k8s-w0: Running provisioner: shell...

k8s-w0: Running: /tmp/vagrant-shell20250815-120434-dtpox6.sh

k8s-w0: >>>> Route Add Config Start <<<<

k8s-w0: >>>> Route Add Config End <<<<

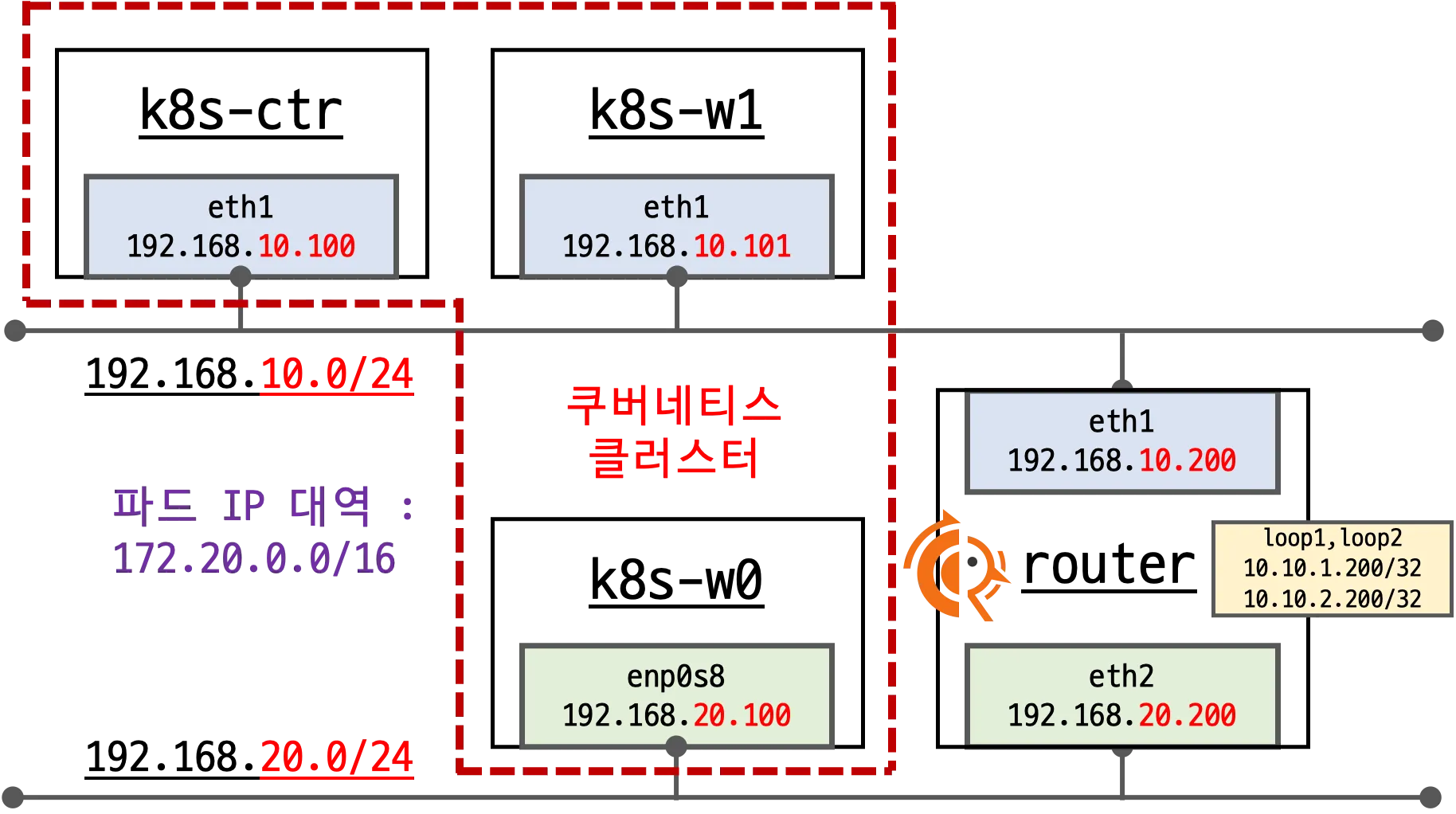

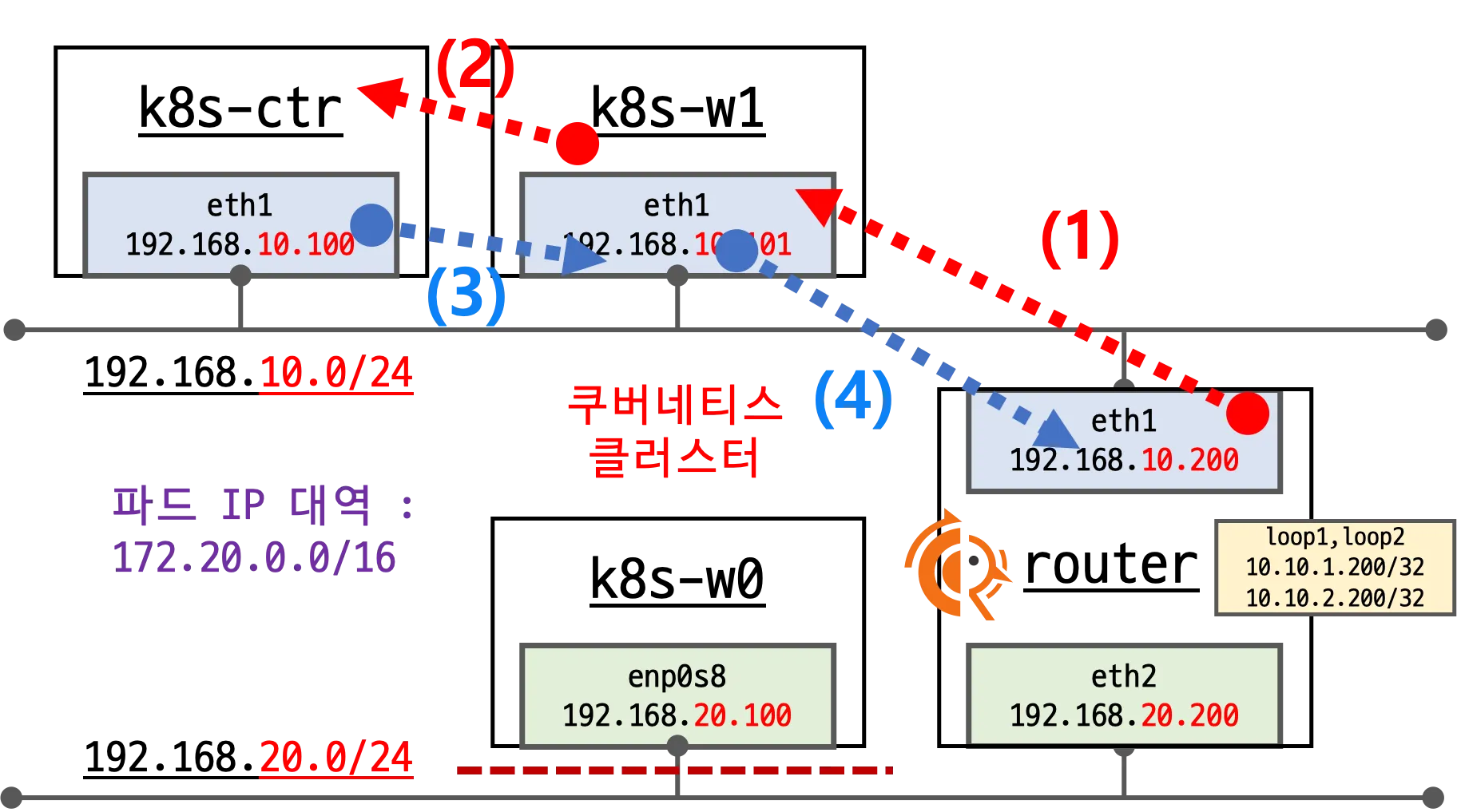

3. Routing and BGP Lab

- https://docs.frrouting.org/en/stable-10.4/about.html

- Install FRR (FRRouting) and build a BGP-based network lab.

- Set bgpControlPlane.enabled=true.

- Set autoDirectNodeRoutes=false, which stops nodes on the same network from automatically adding routes to each other's Pod CIDRs.

cat <<EOT>> /etc/netplan/50-vagrant.yaml

routes:

- to: 192.168.20.0/24

via: 192.168.10.200

# - to: 172.20.0.0/16

# via: 192.168.10.200

EOT

cat <<EOT>> /etc/netplan/50-vagrant.yaml

routes:

- to: 192.168.10.0/24

via: 192.168.20.200

# - to: 172.20.0.0/16

# via: 192.168.20.200

EOT

- Only the minimal static routes between the two internal networks are added.

- Route exchange and advertisement are handled through BGP.

echo "[TASK 7] Configure FRR"

apt install frr -y >/dev/null 2>&1

sed -i "s/^bgpd=no/bgpd=yes/g" /etc/frr/daemons

NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

cat << EOF >> /etc/frr/frr.conf

!

router bgp 65000

bgp router-id $NODEIP

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24

EOF

systemctl daemon-reexec >/dev/null 2>&1

systemctl restart frr >/dev/null 2>&1

systemctl enable frr >/dev/null 2>&1

- The router VM also installs FRR, sets bgpd=yes, and runs as a BGP router.

🖥️ [k8s-ctr] Basic Checks After Login

1. Verify SSH access from the control plane to each node (k8s-w0, k8s-w1, router)

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in k8s-w0 k8s-w1 router ; do echo ">> node : $i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@$i hostname; echo; done

✅ Output

>> node : k8s-w0 <<

Warning: Permanently added 'k8s-w0' (ED25519) to the list of known hosts.

k8s-w0

>> node : k8s-w1 <<

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

>> node : router <<

Warning: Permanently added 'router' (ED25519) to the list of known hosts.

router

2. Diagnosing the Node Join Problem

(1) Symptom

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

✅ Output

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr Ready control-plane 4m25s v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-64-generic containerd://1.7.27

k8s-w1 Ready <none> 4m11s v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-64-generic containerd://1.7.27

- k8s-w0 does not appear in the kubectl get node output.

(2) Finding the cause

vagrant ssh k8s-w0

root@k8s-w0:~# cat /root/kubeadm-join-worker-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4

kind: JoinConfiguration

discovery:

bootstrapToken:

token: "123456.1234567890123456"

apiServerEndpoint: "192.168.10.100:6443"

unsafeSkipCAVerification: true

nodeRegistration:

criSocket: "unix:///run/containerd/containerd.sock"

kubeletExtraArgs:

- name: node-ip

value: "192.168.20.100"

- The join failed because a dummy token is hard-coded in the config file.

(3) Fix

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubeadm token create --ttl=72h

9llwjd.azxcrk0wd8lkrh45

- Create a new token on the control plane.

root@k8s-w0:~# sudo sed -i 's/123456.1234567890123456/9llwjd.azxcrk0wd8lkrh45/g' /root/kubeadm-join-worker-config.yaml

root@k8s-w0:~# cat /root/kubeadm-join-worker-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4

kind: JoinConfiguration

discovery:

bootstrapToken:

token: "9llwjd.azxcrk0wd8lkrh45"

apiServerEndpoint: "192.168.10.100:6443"

unsafeSkipCAVerification: true

nodeRegistration:

criSocket: "unix:///run/containerd/containerd.sock"

kubeletExtraArgs:

- name: node-ip

value: "192.168.20.100"

- Replace the token value in the k8s-w0 config file.

root@k8s-w0:~# sudo kubeadm reset -f

root@k8s-w0:~# sudo kubeadm join --config="/root/kubeadm-join-worker-config.yaml"

✅ Output

[preflight] Running pre-flight checks

W0815 22:41:14.831416 4224 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] Deleted contents of the etcd data directory: /var/lib/etcd

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not perform cleanup of CNI plugin configuration,

network filtering rules and kubeconfig files.

For information on how to perform this cleanup manually, please see:

https://k8s.io/docs/reference/setup-tools/kubeadm/kubeadm-reset/

[preflight] Running pre-flight checks

[preflight] Reading configuration from the "kubeadm-config" ConfigMap in namespace "kube-system"...

[preflight] Use 'kubeadm init phase upload-config --config your-config-file' to re-upload it.

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-check] Waiting for a healthy kubelet at http://127.0.0.1:10248/healthz. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 1.002299091s

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Run kubeadm reset -f, then re-run kubeadm join.

(4) Result

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get nodes -owide

✅ Output

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr Ready control-plane 10m v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-64-generic containerd://1.7.27

k8s-w0 Ready <none> 53s v1.33.2 192.168.20.100 <none> Ubuntu 24.04.2 LTS 6.8.0-64-generic containerd://1.7.27

k8s-w1 Ready <none> 10m v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-64-generic containerd://1.7.27

- All nodes (k8s-ctr, k8s-w0, k8s-w1) are now joined and Ready.
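The token swap from step (3) can be wrapped in a small helper. This is a sketch under stated assumptions: the function names are hypothetical, it relies on GNU `sed -i -E`, and it assumes the config has a single `token:` line as in the file shown above. The format check uses the documented kubeadm bootstrap-token shape `[a-z0-9]{6}.[a-z0-9]{16}`. The dummy token `123456.1234567890123456` also passes that check, so a format check alone cannot tell whether a token actually exists on the control plane.

```shell
#!/usr/bin/env bash
# is_bootstrap_token: succeed if $1 has the kubeadm bootstrap-token shape
# "[a-z0-9]{6}.[a-z0-9]{16}". This validates the format only.
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# replace_join_token FILE NEW_TOKEN: rewrite the "token:" line of a
# kubeadm JoinConfiguration in place, keeping its indentation.
replace_join_token() {
  local file="$1" new="$2"
  if ! is_bootstrap_token "$new"; then
    echo "invalid token format: $new" >&2
    return 1
  fi
  # The token contains only [a-z0-9.], so it is safe inside the sed replacement.
  sed -i -E "s/^([[:space:]]*token:[[:space:]]*).*/\1\"$new\"/" "$file"
}
```

On k8s-w0 this would be called as `replace_join_token /root/kubeadm-join-worker-config.yaml 9llwjd.azxcrk0wd8lkrh45`, followed by the same `kubeadm reset -f` and `kubeadm join --config=...` commands shown above.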

⚙️ [k8s-ctr] Check the Cilium Configuration

Check whether the BGP Control Plane feature is enabled in Cilium.

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -i bgp

✅ Output

bgp-router-id-allocation-ip-pool

bgp-router-id-allocation-mode default

bgp-secrets-namespace kube-system

enable-bgp-control-plane true

enable-bgp-control-plane-status-report true

- Confirmed that enable-bgp-control-plane is true.

🌐 Check Network Information

1. Check the router's network interfaces

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -br -c -4 addr

✅ Output

lo UNKNOWN 127.0.0.1/8

eth0 UP 192.168.121.180/24 metric 100

eth1 UP 192.168.10.200/24

eth2 UP 192.168.20.200/24

loop1 UNKNOWN 10.10.1.200/24

loop2 UNKNOWN 10.10.2.200/24

2. Check the control plane's network interface

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c -4 addr show dev eth1

✅ Output

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s6

altname ens6

inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

3. Check the worker nodes' network interfaces

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c -4 addr show dev eth1; echo; done

✅ Output

>> node : k8s-w1 <<

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s6

altname ens6

inet 192.168.10.101/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

>> node : k8s-w0 <<

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s6

altname ens6

inet 192.168.20.100/24 brd 192.168.20.255 scope global eth1

valid_lft forever preferred_lft forever

- k8s-w1: 192.168.10.101/24 → same subnet as the control plane

- k8s-w0: 192.168.20.100/24 → a different subnet

4. Check the router's routing table

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -c route

✅ Output

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.25 metric 100

10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.25 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.25 metric 100

- The router is directly connected to both 192.168.10.0/24 and 192.168.20.0/24 and routes between them.

5. Check the control plane's routing table

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.70 metric 100

172.20.0.0/24 via 172.20.0.230 dev cilium_host proto kernel src 172.20.0.230

172.20.0.230 dev cilium_host proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.70 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.70 metric 100

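- autoDirectNodeRoutes=false disables automatic installation of routes to other nodes' Pod CIDRs.

- As a result, even nodes on the same subnet have no route to each other's Pod CIDR.

- The control plane (k8s-ctr) has a route only for its own PodCIDR (172.20.0.0/24).

6. Check the worker nodes' routing tables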

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c route; echo; done

✅ Output

>> node : k8s-w1 <<

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.62 metric 100

172.20.1.0/24 via 172.20.1.4 dev cilium_host proto kernel src 172.20.1.4

172.20.1.4 dev cilium_host proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.62 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.62 metric 100

>> node : k8s-w0 <<

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.122 metric 100

172.20.2.0/24 via 172.20.2.89 dev cilium_host proto kernel src 172.20.2.89

172.20.2.89 dev cilium_host proto kernel scope link

192.168.10.0/24 via 192.168.20.200 dev eth1 proto static

192.168.20.0/24 dev eth1 proto kernel scope link src 192.168.20.100

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.122 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.122 metric 100

- Each worker node (k8s-w1, k8s-w0) likewise has a route only for its own PodCIDR.

- Because autoDirectNodeRoutes=false, routes to other nodes' Pod CIDRs are not added automatically.
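The observation above (each node only knows its own PodCIDR) can be checked mechanically against `ip route` output. A minimal sketch, assuming the lab's Pod range of 172.20.0.0/16 with one /24 per node as seen in the routing tables; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# pod_cidr_routes: read `ip route` output on stdin and print every
# destination prefix inside 172.20.0.0/16 (host routes without "/" are skipped).
pod_cidr_routes() {
  awk '$1 ~ /^172\.20\./ && $1 ~ /\// { print $1 }'
}

# missing_pod_cidrs CIDR...: read `ip route` output on stdin and print
# each expected CIDR that has no matching route.
missing_pod_cidrs() {
  local have cidr
  have="$(pod_cidr_routes)"
  for cidr in "$@"; do
    grep -qxF "$cidr" <<<"$have" || echo "$cidr"
  done
}
```

Running `ip route | missing_pod_cidrs 172.20.0.0/24 172.20.1.0/24 172.20.2.0/24` on k8s-ctr in the state shown above would list 172.20.1.0/24 and 172.20.2.0/24 as missing.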

📦 Deploying a Sample Application and Observing the Connectivity Problem

1. Deploy the sample application

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: webpod

spec:

replicas: 3

selector:

matchLabels:

app: webpod

template:

metadata:

labels:

app: webpod

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- sample-app

topologyKey: "kubernetes.io/hostname"

containers:

- name: webpod

image: traefik/whoami

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: webpod

labels:

app: webpod

spec:

selector:

app: webpod

ports:

- protocol: TCP

port: 80

targetPort: 80

type: ClusterIP

EOF

# Result

deployment.apps/webpod created

service/webpod created

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: Pod

metadata:

name: curl-pod

labels:

app: curl

spec:

nodeName: k8s-ctr

containers:

- name: curl

image: nicolaka/netshoot

command: ["tail"]

args: ["-f", "/dev/null"]

terminationGracePeriodSeconds: 0

EOF

# Result

pod/curl-pod created

2. Check Pod scheduling and the Service

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep webpod -owide

✅ Output

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/webpod 3/3 3 3 2m23s webpod traefik/whoami app=webpod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/webpod ClusterIP 10.96.54.159 <none> 80/TCP 2m23s app=webpod

NAME ENDPOINTS AGE

endpoints/webpod 172.20.0.158:80,172.20.1.65:80,172.20.2.204:80 2m23s

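- The three Pods are spread across the three nodes. Note that the podAntiAffinity rule in the manifest selects app=sample-app, not app=webpod, so it does not constrain these Pods; the spread comes from the scheduler's default spreading.

- Service: assigned ClusterIP 10.96.54.159.

- Endpoints: the three Pods sit in three different PodCIDRs (172.20.0.x, 172.20.1.x, 172.20.2.x).

3. Check Cilium endpoints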

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints

✅ Output

NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

curl-pod 64126 ready 172.20.0.64

webpod-697b545f57-fbtbj 38082 ready 172.20.0.158

webpod-697b545f57-pxhvr 38082 ready 172.20.1.65

webpod-697b545f57-rpblf 38082 ready 172.20.2.204

- curl-pod → 172.20.0.64 (control plane)

- webpod (3 Pods) → 172.20.0.158, 172.20.1.65, 172.20.2.204

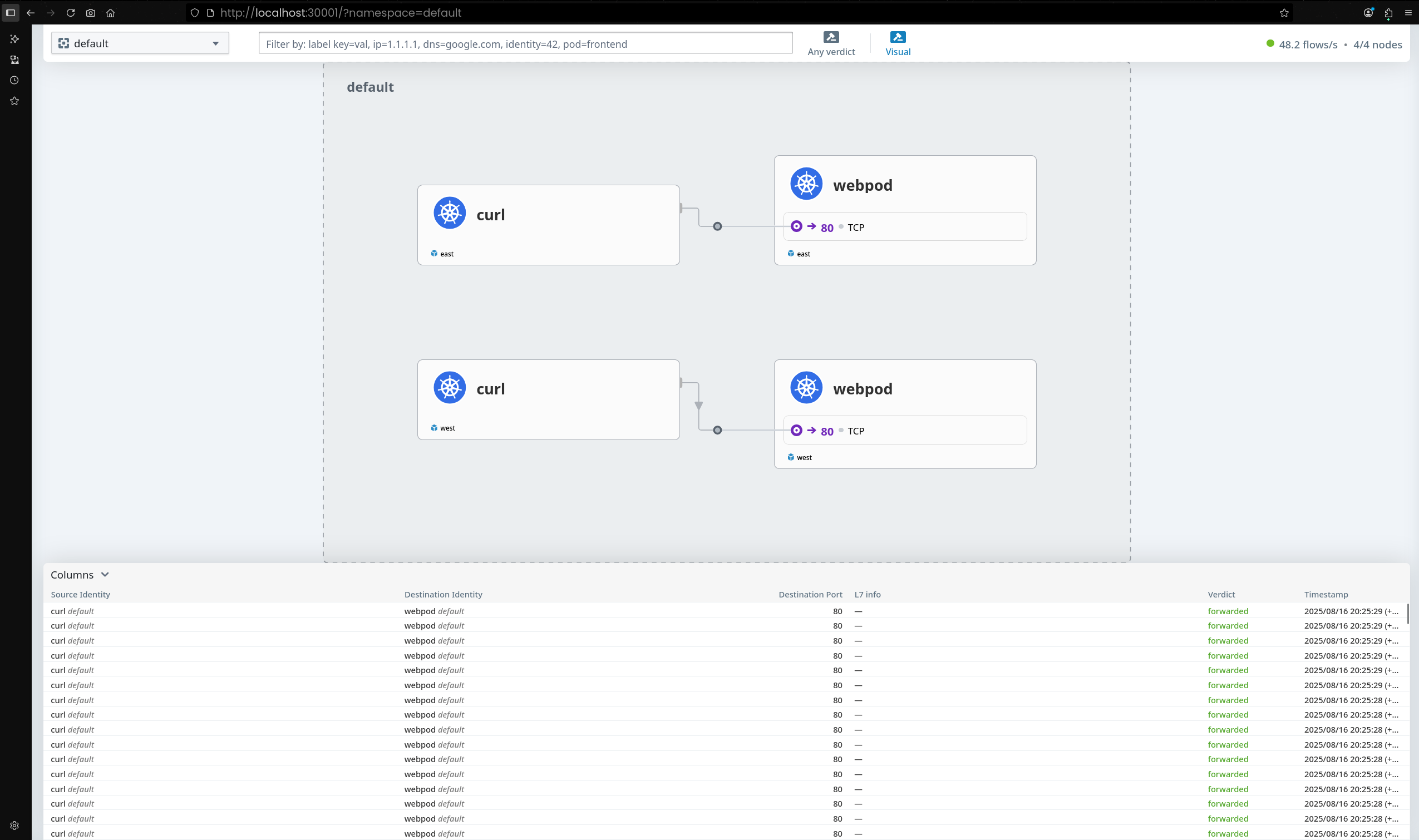

4. Run the connectivity test

Send repeated requests from curl-pod to the webpod Service.

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

✅ Output

---

---

---

---

---

Hostname: webpod-697b545f57-fbtbj

---

---

---

Hostname: webpod-697b545f57-fbtbj

---

---

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-fbtbj

---

---

...

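- --connect-timeout 1 aborts an attempt if no connection is established within 1 second.

- Only the webpod Pod running on k8s-ctr (172.20.0.158) responds.

- The webpod Pods on the other nodes (k8s-w1, k8s-w0) do not respond, because the node hosting curl-pod has no route to their Pod CIDRs.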
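To put a number on the failures, the loop output can be piped through a small counter. This is a sketch with a hypothetical function name; it relies only on the output shape used above, where every attempt prints a `---` line and a successful attempt additionally prints a `Hostname:` line.

```shell
#!/usr/bin/env bash
# summarize_curl_loop: read the test loop's output on stdin and print
# "<successful>/<total>" attempts.
summarize_curl_loop() {
  awk '
    /^Hostname:/ { ok++ }      # one line per successful request
    /^---$/      { total++ }   # one separator per attempt
    END          { printf "%d/%d\n", ok, total }
  '
}
```

Because the original loop never ends, a bounded variant without `-it` is easier to pipe, for example: `kubectl exec curl-pod -- sh -c 'for i in $(seq 1 30); do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---"; done' | summarize_curl_loop`.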

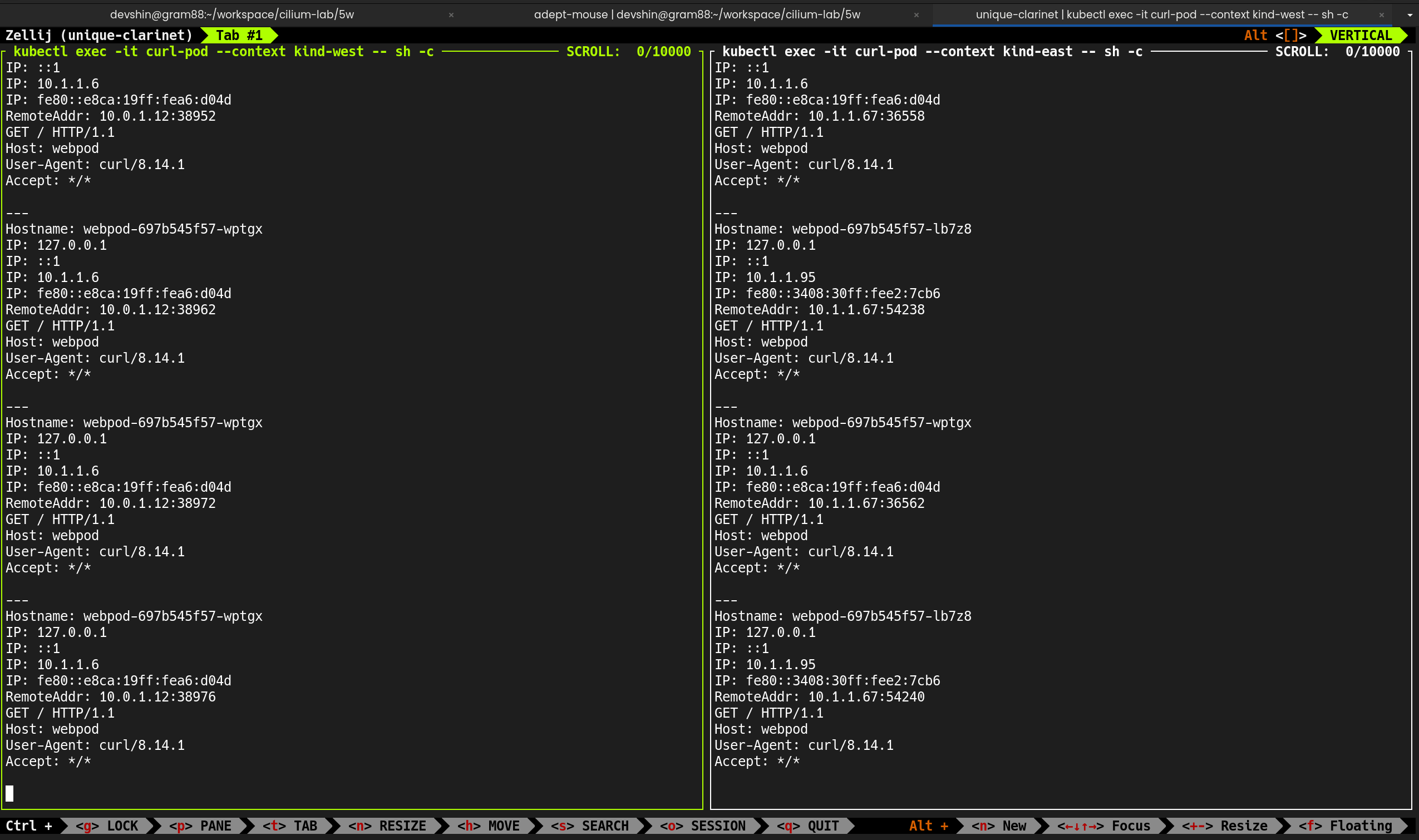

📡 Cilium BGP Control Plane

- https://docs.cilium.io/en/stable/network/bgp-control-plane/bgp-control-plane-v2/

- Verify connectivity after configuring BGP. Note that Cilium's BGP does not, by default, install routes learned from peers into the kernel routing table.

1. Check FRR process status

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router

root@router:~# ss -tnlp | grep -iE 'zebra|bgpd'

✅ Output

LISTEN 0 3 127.0.0.1:2601 0.0.0.0:* users:(("zebra",pid=3827,fd=23))

LISTEN 0 3 127.0.0.1:2605 0.0.0.0:* users:(("bgpd",pid=3832,fd=18))

LISTEN 0 4096 0.0.0.0:179 0.0.0.0:* users:(("bgpd",pid=3832,fd=22))

LISTEN 0 4096 [::]:179 [::]:* users:(("bgpd",pid=3832,fd=23))

- bgpd is listening on the BGP port (TCP 179).
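The same check can be scripted so that a provisioning step fails early when bgpd is not listening. A minimal sketch with a hypothetical function name; it parses `ss -tnlp` output in the column layout shown above and assumes `ss` is run as root so that process names appear in the last column.

```shell
#!/usr/bin/env bash
# bgp_listening: read `ss -tnlp` output on stdin and succeed only if
# a bgpd socket is in LISTEN state on port 179.
bgp_listening() {
  awk '
    $1 == "LISTEN" && $4 ~ /:179$/ && /"bgpd"/ { found = 1 }
    END { exit !found }
  '
}
```

Usage on the router: `ss -tnlp | bgp_listening && echo "bgpd is listening on 179"`.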

root@router:~# ps -ef |grep frr

✅ Output

root 3814 1 0 22:30 ? 00:00:00 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd

frr 3827 1 0 22:30 ? 00:00:00 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000

frr 3832 1 0 22:30 ? 00:00:00 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1

frr 3839 1 0 22:30 ? 00:00:00 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1

root 4417 4399 0 23:02 pts/1 00:00:00 grep --color=auto frr

- The watchfrr, zebra, bgpd, and staticd processes are running.

2. Check the FRR configuration (vtysh)

root@router:~# vtysh -c 'show running'

✅ Output

Building configuration...

Current configuration:

!

frr version 8.4.4

frr defaults traditional

hostname router

log syslog informational

no ipv6 forwarding

service integrated-vtysh-config

!

router bgp 65000

bgp router-id 192.168.10.200

no bgp ebgp-requires-policy

bgp graceful-restart

bgp bestpath as-path multipath-relax

!

address-family ipv4 unicast

network 10.10.1.0/24

maximum-paths 4

exit-address-family

exit

!

end

- The router's BGP AS number is 65000.

- It is configured to advertise the loopback network 10.10.1.0/24.

- Multipath is allowed (maximum-paths 4).

3. Check the FRR config file

root@router:~# cat /etc/frr/frr.conf

✅ Output

# default to using syslog. /etc/rsyslog.d/45-frr.conf places the log in

# /var/log/frr/frr.log

#

# Note:

# FRR's configuration shell, vtysh, dynamically edits the live, in-memory

# configuration while FRR is running. When instructed, vtysh will persist the

# live configuration to this file, overwriting its contents. If you want to

# avoid this, you can edit this file manually before starting FRR, or instruct

# vtysh to write configuration to a different file.

log syslog informational

!

router bgp 65000

bgp router-id 192.168.10.200

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24

- /etc/frr/frr.conf shows the same settings, including the network 10.10.1.0/24 advertisement.

4. Check BGP status

root@router:~# vtysh -c 'show ip bgp summary'

✅ Output

% No BGP neighbors found in VRF default

- No neighbors are configured yet.

root@router:~# vtysh -c 'show ip bgp'

✅ Output

BGP table version is 1, local router ID is 192.168.10.200, vrf id 0

Default local pref 100, local AS 65000

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.10.1.0/24 0.0.0.0 0 32768 i

Displayed 1 routes and 1 total paths

- The router advertises only its own 10.10.1.0/24 network.

- With no peers connected yet, the BGP table holds a single entry.

5. Check the router's network interfaces

root@router:~# ip -c addr

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:97:4b:c4 brd ff:ff:ff:ff:ff:ff

altname enp0s5

altname ens5

inet 192.168.121.25/24 metric 100 brd 192.168.121.255 scope global dynamic eth0

valid_lft 2869sec preferred_lft 2869sec

inet6 fe80::5054:ff:fe97:4bc4/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:24:2d:30 brd ff:ff:ff:ff:ff:ff

altname enp0s6

altname ens6

inet 192.168.10.200/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe24:2d30/64 scope link

valid_lft forever preferred_lft forever

4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:50:33:eb brd ff:ff:ff:ff:ff:ff

altname enp0s7

altname ens7

inet 192.168.20.200/24 brd 192.168.20.255 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe50:33eb/64 scope link

valid_lft forever preferred_lft forever

5: loop1: <BROADCAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 22:63:e6:d9:f6:95 brd ff:ff:ff:ff:ff:ff

inet 10.10.1.200/24 scope global loop1

valid_lft forever preferred_lft forever

inet6 fe80::2063:e6ff:fed9:f695/64 scope link

valid_lft forever preferred_lft forever

6: loop2: <BROADCAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 6e:08:a4:e5:88:c0 brd ff:ff:ff:ff:ff:ff

inet 10.10.2.200/24 scope global loop2

valid_lft forever preferred_lft forever

inet6 fe80::6c08:a4ff:fee5:88c0/64 scope link

valid_lft forever preferred_lft forever

- loop1: 10.10.1.200/24 (the network advertised via BGP)

6. Check the router's routing table

root@router:~# ip -c route

✅ Output

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.25 metric 100

10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.25 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.25 metric 100

7. Add the BGP neighbor configuration

On the router, register the Cilium nodes (k8s-ctr, k8s-w1, k8s-w0) as neighbors.

root@router:~# cat << EOF >> /etc/frr/frr.conf

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

EOF

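- The neighbors are grouped under the CILIUM peer-group to simplify management.

- remote-as external accepts any peer whose AS number differs from the local AS (eBGP), so the peer AS does not have to be listed explicitly.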
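When the node list changes, the neighbor lines can be generated rather than typed by hand. A minimal sketch with a hypothetical function name; it emits the same five statements as the heredoc above and, like that heredoc, assumes they are appended directly after the existing `router bgp 65000` block.

```shell
#!/usr/bin/env bash
# frr_neighbor_stanza GROUP IP...: print FRR statements that define an
# eBGP peer-group and attach each given neighbor IP to it.
frr_neighbor_stanza() {
  local group="$1" ip
  shift
  printf 'neighbor %s peer-group\n' "$group"
  printf 'neighbor %s remote-as external\n' "$group"
  for ip in "$@"; do
    printf 'neighbor %s peer-group %s\n' "$ip" "$group"
  done
}
```

For this lab the call would be `frr_neighbor_stanza CILIUM 192.168.10.100 192.168.10.101 192.168.20.100 >> /etc/frr/frr.conf`, followed by the FRR restart in step 8.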

root@router:~# cat /etc/frr/frr.conf

✅ Output

# default to using syslog. /etc/rsyslog.d/45-frr.conf places the log in

# /var/log/frr/frr.log

#

# Note:

# FRR's configuration shell, vtysh, dynamically edits the live, in-memory

# configuration while FRR is running. When instructed, vtysh will persist the

# live configuration to this file, overwriting its contents. If you want to

# avoid this, you can edit this file manually before starting FRR, or instruct

# vtysh to write configuration to a different file.

log syslog informational

!

router bgp 65000

bgp router-id 192.168.10.200

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

- Router AS: 65000; Cilium node AS: 65001

8. Restart the FRR service and check its status

root@router:~# systemctl daemon-reexec && systemctl restart frr

root@router:~# systemctl status frr --no-pager --full

✅ Output

● frr.service - FRRouting

Loaded: loaded (/usr/lib/systemd/system/frr.service; enabled; preset: enabled)

Active: active (running) since Fri 2025-08-15 23:20:38 KST; 17s ago

Docs: https://frrouting.readthedocs.io/en/latest/setup.html

Process: 4540 ExecStart=/usr/lib/frr/frrinit.sh start (code=exited, status=0/SUCCESS)

Main PID: 4550 (watchfrr)

Status: "FRR Operational"

Tasks: 13 (limit: 757)

Memory: 19.5M (peak: 27.4M)

CPU: 321ms

CGroup: /system.slice/frr.service

├─4550 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd

├─4563 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000

├─4568 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1

└─4575 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1

Aug 15 23:20:38 router watchfrr[4550]: [YFT0P-5Q5YX] Forked background command [pid 4551]: /usr/lib/frr/watchfrr.sh restart all

Aug 15 23:20:38 router zebra[4563]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router bgpd[4568]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router staticd[4575]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] zebra state -> up : connect succeeded

Aug 15 23:20:38 router frrinit.sh[4540]: * Started watchfrr

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] bgpd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] staticd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

Aug 15 23:20:38 router systemd[1]: Started frr.service - FRRouting.

9. Monitoring

root@router:~# journalctl -u frr -f

✅ Output

Aug 15 23:20:38 router watchfrr[4550]: [YFT0P-5Q5YX] Forked background command [pid 4551]: /usr/lib/frr/watchfrr.sh restart all

Aug 15 23:20:38 router zebra[4563]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router bgpd[4568]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router staticd[4575]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] zebra state -> up : connect succeeded

Aug 15 23:20:38 router frrinit.sh[4540]: * Started watchfrr

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] bgpd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] staticd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

Aug 15 23:20:38 router systemd[1]: Started frr.service - FRRouting.

🛰️ Configuring BGP in Cilium

1. Label the nodes that will run BGP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl label nodes k8s-ctr k8s-w0 k8s-w1 enable-bgp=true

# Result

node/k8s-ctr labeled

node/k8s-w0 labeled

node/k8s-w1 labeled

- Apply the `enable-bgp=true` label to every node that should run Cilium BGP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -l enable-bgp=true

✅ Output

NAME STATUS ROLES AGE VERSION

k8s-ctr Ready control-plane 53m v1.33.2

k8s-w0 Ready <none> 43m v1.33.2

k8s-w1 Ready <none> 53m v1.33.2

- All three nodes carry the label

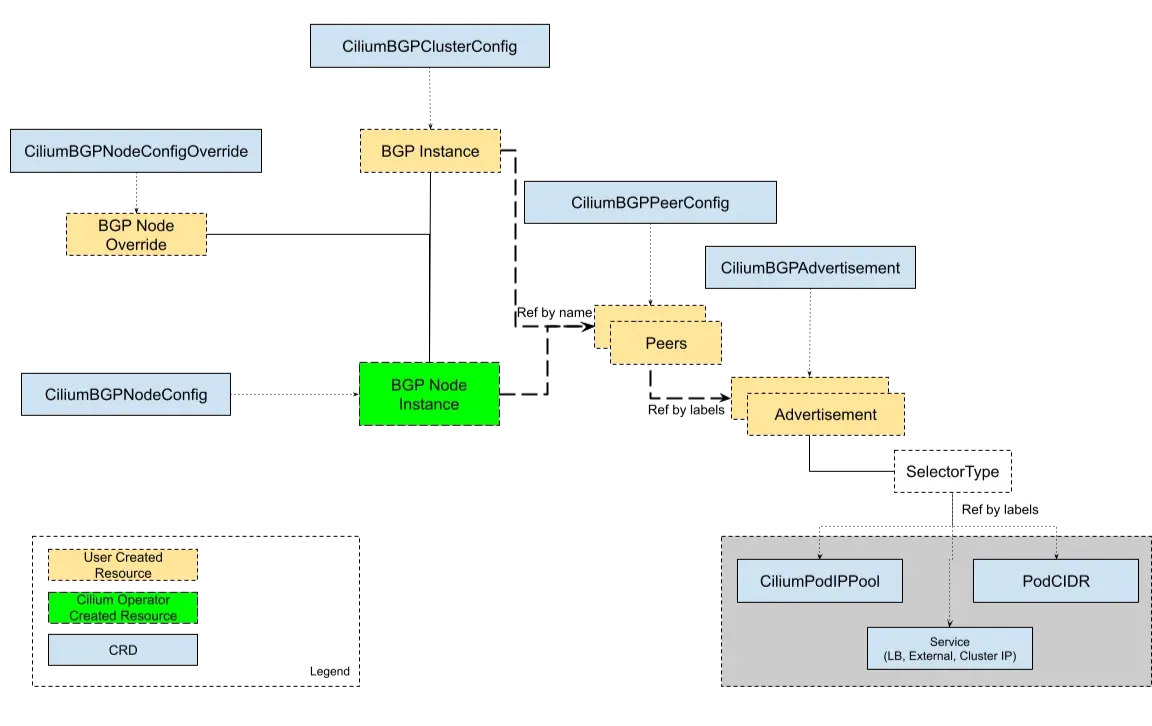

2. Configure the Cilium BGP resources

Three custom resources are created to define how BGP behaves in Cilium

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements
  labels:
    advertise: bgp
spec:
  advertisements:
    - advertisementType: "PodCIDR"
---
apiVersion: cilium.io/v2
kind: CiliumBGPPeerConfig
metadata:
  name: cilium-peer
spec:
  timers:
    holdTimeSeconds: 9
    keepAliveTimeSeconds: 3
  ebgpMultihop: 2
  gracefulRestart:
    enabled: true
    restartTimeSeconds: 15
  families:
    - afi: ipv4
      safi: unicast
      advertisements:
        matchLabels:
          advertise: "bgp"
---
apiVersion: cilium.io/v2
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-bgp
spec:
  nodeSelector:
    matchLabels:
      "enable-bgp": "true"
  bgpInstances:
  - name: "instance-65001"
    localASN: 65001
    peers:
    - name: "tor-switch"
      peerASN: 65000
      peerAddress: 192.168.10.200  # router ip address
      peerConfigRef:
        name: "cilium-peer"
EOF

ciliumbgpadvertisement.cilium.io/bgp-advertisements created

ciliumbgppeerconfig.cilium.io/cilium-peer created

ciliumbgpclusterconfig.cilium.io/cilium-bgp created

- CiliumBGPAdvertisement
  - `advertisementType: PodCIDR` advertises each node's PodCIDR over BGP
- CiliumBGPPeerConfig
  - `advertisements.matchLabels` selects the advertisement labeled `advertise=bgp`
- CiliumBGPClusterConfig
  - Only nodes labeled `enable-bgp=true` take part in BGP
  - localASN: 65001, peerASN: 65000
  - peerAddress: 192.168.10.200 (router IP)
  - Peer settings come from the referenced `cilium-peer` config

Aug 15 23:20:38 router watchfrr[4550]: [YFT0P-5Q5YX] Forked background command [pid 4551]: /usr/lib/frr/watchfrr.sh restart all

Aug 15 23:20:38 router zebra[4563]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router bgpd[4568]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router staticd[4575]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] zebra state -> up : connect succeeded

Aug 15 23:20:38 router frrinit.sh[4540]: * Started watchfrr

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] bgpd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [QDG3Y-BY5TN] staticd state -> up : connect succeeded

Aug 15 23:20:38 router watchfrr[4550]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

Aug 15 23:20:38 router systemd[1]: Started frr.service - FRRouting.

Aug 15 23:27:15 router bgpd[4568]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.20.100 in vrf default

Aug 15 23:27:15 router bgpd[4568]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.100 in vrf default

Aug 15 23:27:15 router bgpd[4568]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.101 in vrf default

🔍 Verifying connectivity

1. Check the BGP session connection

(⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep 179

(⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnp | grep 179

ESTAB 0 0 192.168.10.100:35791 192.168.10.200:179 users:(("cilium-agent",pid=5170,fd=50))

ESTAB 0 0 [::ffff:192.168.10.100]:6443 [::ffff:172.20.0.179]:46928 users:(("kube-apiserver",pid=3868,fd=105))

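- Cilium acts as the BGP initiator, not a listener: `ss -tnlp` shows nothing listening on port 179, and the agent opens the TCP connection to port 179 on the network device (the FRR router)
- The control-plane node (192.168.10.100:35791) has an established session with the router (192.168.10.200:179)
- The second `ss -tnp` line is unrelated to BGP; it only matches because the peer address 172.20.0.179 contains "179"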
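The plain `grep 179` filter also matches the kube-apiserver line, because that peer address happens to contain the same digits. A minimal sketch of a stricter filter, assuming the `ss -tn` column layout seen above (state, two queue counters, local address, peer address); the function name `bgp_sessions` is made up for this example:

```bash
# Keep only established TCP connections whose peer port is exactly 179.
# Reads `ss -tn`-style lines on stdin.
bgp_sessions() {
  awk '$1 == "ESTAB" && $5 ~ /:179$/ { print $4, "->", $5 }'
}
```

It could be used as `ss -tnp | bgp_sessions`, which should leave only the cilium-agent connection to the router.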

2. Check the Cilium BGP peer status

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium bgp peers

Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

k8s-ctr 65001 65000 192.168.10.200 established 10m59s ipv4/unicast 4 2

k8s-w0 65001 65000 192.168.10.200 established 10m59s ipv4/unicast 4 2

k8s-w1 65001 65000 192.168.10.200 established 10m59s ipv4/unicast 4 2

- All three nodes (k8s-ctr, k8s-w0, k8s-w1) are in the established state with the router
- Local ASN 65001 and peer ASN 65000 match the configuration
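For scripted checks, the peer table can be reduced to the sessions that are not yet established. A sketch, assuming the whitespace-separated column order shown above (node, local AS, peer AS, peer address, session state); `unestablished_peers` is a hypothetical helper name, not part of the Cilium CLI:

```bash
# Print "node peer-address state" for every peer that is not established.
# Reads `cilium bgp peers` output on stdin and skips the header row.
unestablished_peers() {
  awk '$1 != "Node" && NF >= 5 && $5 != "established" { print $1, $4, $5 }'
}
```

With the output above, `cilium bgp peers | unestablished_peers` would print nothing; any line it does print names a session worth investigating.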

3. Check the PodCIDR advertisements

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium bgp routes available ipv4 unicast

✅ Output

Node VRouter Prefix NextHop Age Attrs

k8s-ctr 65001 172.20.0.0/24 0.0.0.0 12m43s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w0 65001 172.20.2.0/24 0.0.0.0 12m42s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w1 65001 172.20.1.0/24 0.0.0.0 12m43s [{Origin: i} {Nexthop: 0.0.0.0}]

- Each node has its own PodCIDR available for advertisement

4. Check the Cilium BGP resources

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumbgpadvertisements,ciliumbgppeerconfigs,ciliumbgpclusterconfigs

✅ Output

NAME AGE

ciliumbgpadvertisement.cilium.io/bgp-advertisements 13m

NAME AGE

ciliumbgppeerconfig.cilium.io/cilium-peer 13m

NAME AGE

ciliumbgpclusterconfig.cilium.io/cilium-bgp 13m

Check the per-node CiliumBGPNodeConfig resources

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumbgpnodeconfigs -o yaml | yq

✅ Output

{

"apiVersion": "v1",

"items": [

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T14:27:12Z",

"generation": 1,

"name": "k8s-ctr",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "7578",

"uid": "a72d5068-f106-4b37-a0a7-2ad0e72e8f9d"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T14:27:14Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 1,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

},

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T14:27:12Z",

"generation": 1,

"name": "k8s-w0",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "7575",

"uid": "395cc9e2-0f3e-47f3-bce0-169110494292"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T14:27:14Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 1,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

},

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T14:27:12Z",

"generation": 1,

"name": "k8s-w1",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "7581",

"uid": "d98cdab1-5d96-4ecf-ae47-1cc3c80a3071"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T14:27:14Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 1,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

}

],

"kind": "List",

"metadata": {

"resourceVersion": ""

}

}

- Local ASN: 65001
- Peer address: 192.168.10.200, peer ASN: 65000
- Peering state: established
- Route count: advertised 2, received 1

5. Check the router's kernel routing table

root@router:~# ip -c route | grep bgp

172.20.0.0/24 nhid 29 via 192.168.10.100 dev eth1 proto bgp metric 20

172.20.1.0/24 nhid 30 via 192.168.10.101 dev eth1 proto bgp metric 20

172.20.2.0/24 nhid 28 via 192.168.20.100 dev eth2 proto bgp metric 20

- The Pod CIDR routes learned over BGP are installed in the FRR router's kernel routing table
- Traffic for a given Pod range is therefore always forwarded to the node that owns it
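The prefix-to-next-hop mapping can be pulled out of the routing table for a quick comparison against the node IPs. A sketch that assumes the `ip route` line format shown above; `bgp_kernel_routes` is a hypothetical helper name:

```bash
# Print "prefix next-hop" for every kernel route installed by BGP.
# Reads plain `ip route` output on stdin.
bgp_kernel_routes() {
  awk '/ proto bgp/ {
    for (i = 1; i < NF; i++)
      if ($i == "via") print $1, $(i + 1)
  }'
}
```

On the router this could be run as `ip route | bgp_kernel_routes`. Plain `ip route` is used here rather than `ip -c route`, since color escape codes could end up inside the parsed fields.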

6. Check the BGP neighbor relationships

root@router:~# vtysh -c 'show ip bgp summary'

✅ Output

IPv4 Unicast Summary (VRF default):

BGP router identifier 192.168.10.200, local AS number 65000 vrf-id 0

BGP table version 4

RIB entries 7, using 1344 bytes of memory

Peers 3, using 2172 KiB of memory

Peer groups 1, using 64 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

192.168.10.100 4 65001 353 356 0 0 0 00:17:29 1 4 N/A

192.168.10.101 4 65001 353 356 0 0 0 00:17:29 1 4 N/A

192.168.20.100 4 65001 353 356 0 0 0 00:17:28 1 4 N/A

Total number of neighbors 3

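- The FRR router (AS 65000) has formed BGP neighbor relationships with the three nodes running Cilium (AS 65001)
- All neighbors are Established: the State/PfxRcd column shows a received-prefix count instead of a state name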

7. Check the advertised BGP routes

root@router:~# vtysh -c 'show ip bgp'

BGP table version is 4, local router ID is 192.168.10.200, vrf id 0

Default local pref 100, local AS 65000

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.10.1.0/24 0.0.0.0 0 32768 i

*> 172.20.0.0/24 192.168.10.100 0 65001 i

*> 172.20.1.0/24 192.168.10.101 0 65001 i

*> 172.20.2.0/24 192.168.20.100 0 65001 i

Displayed 4 routes and 4 total paths

- All three Pod CIDRs (172.20.0.0/24, 172.20.1.0/24, 172.20.2.0/24) are received
- The next hop of each is the owning node's internal IP, confirming that the advertisements propagated correctly over BGP

8. Connectivity still fails after the BGP neighbors are set up

Hostname: webpod-697b545f57-fbtbj

---

---

---

---

---

---

---

---

---

---

---

Hostname: webpod-697b545f57-fbtbj

---

---

---

---

---

...

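- In the output above only webpod-697b545f57-fbtbj answers; the empty `---` entries are requests that got no response
- By design, Cilium does not install BGP-learned routes into the kernel routing table, even with an established session (the `disable-fib` state)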

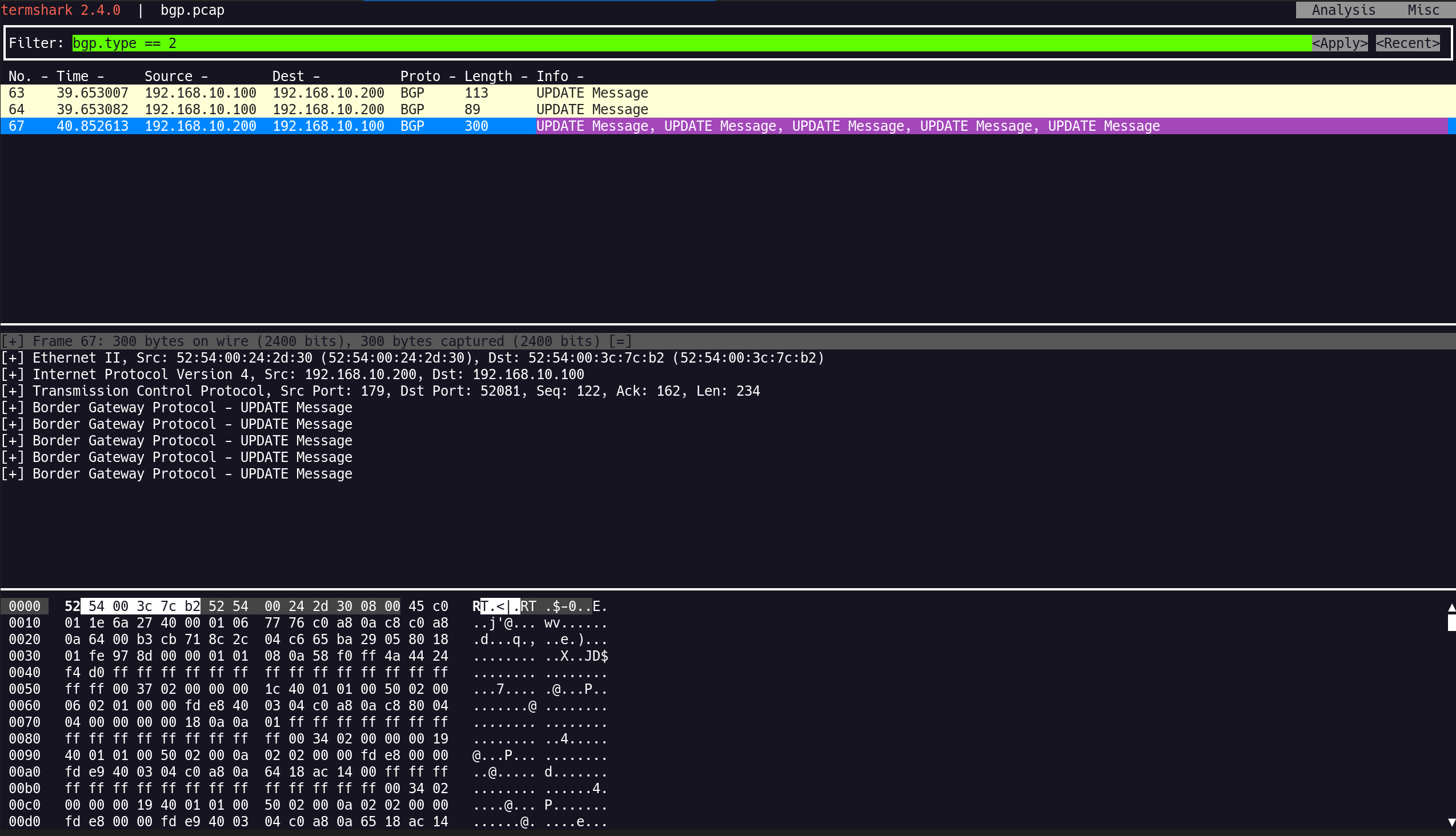

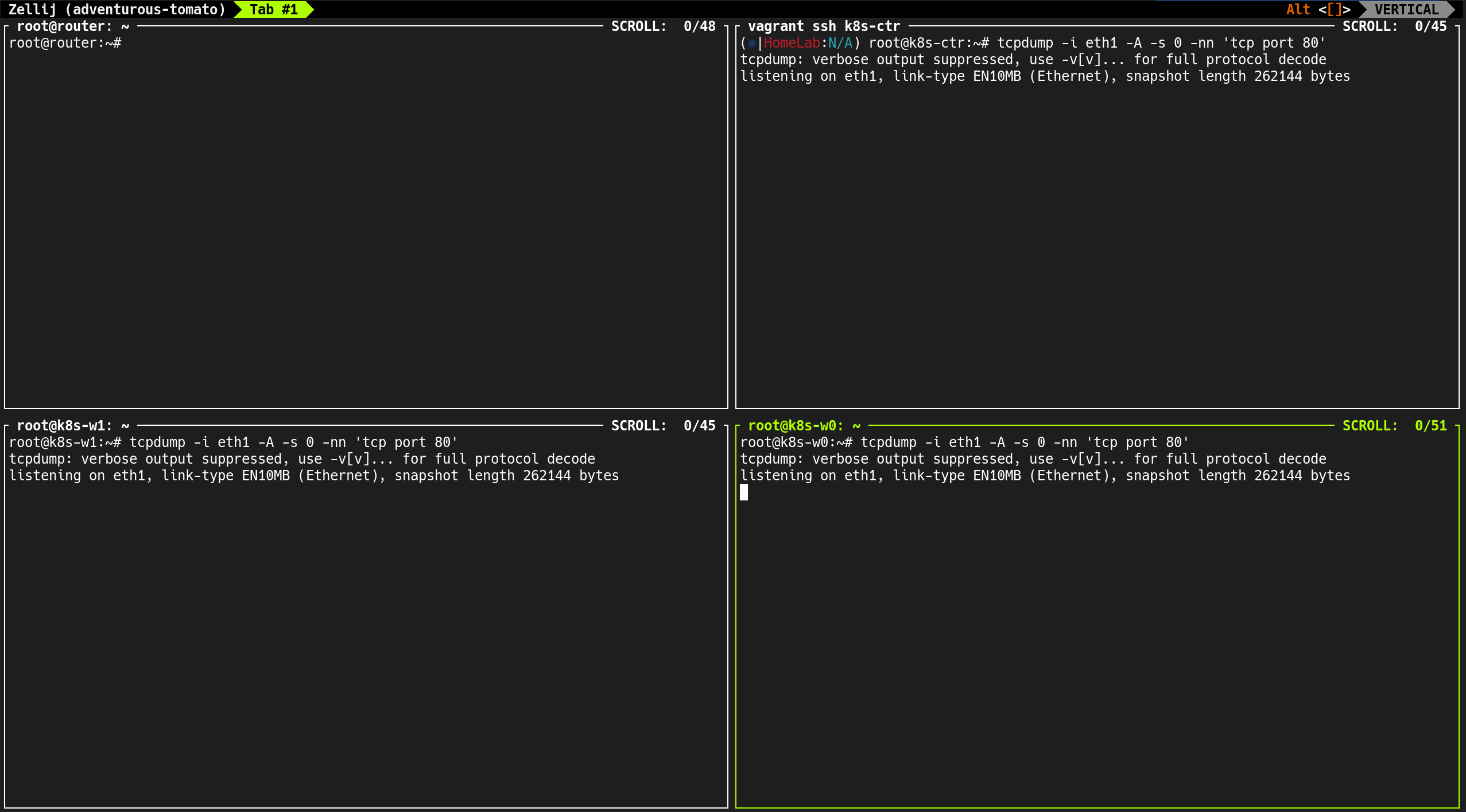

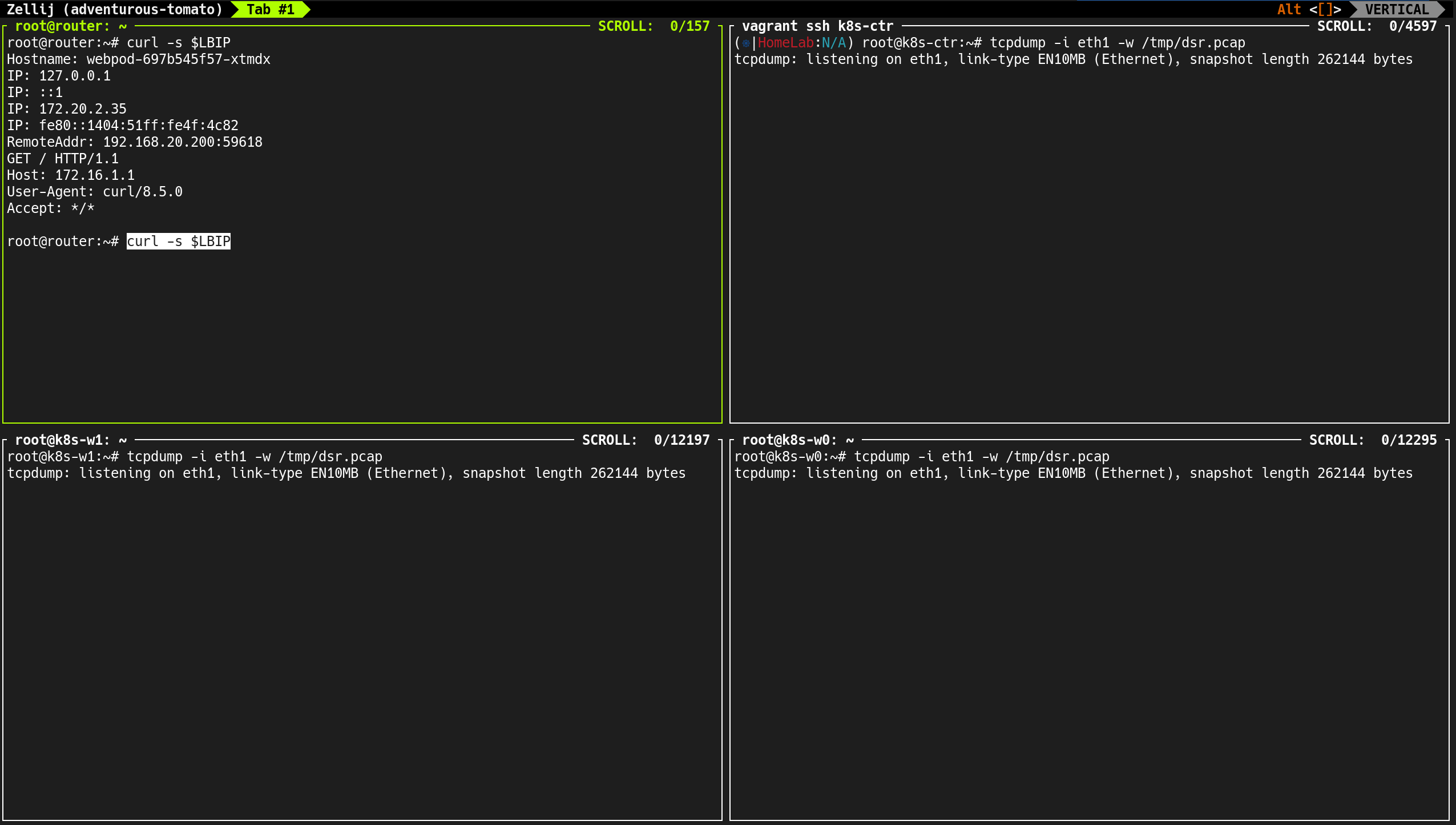

🔀 Verifying BGP information exchange

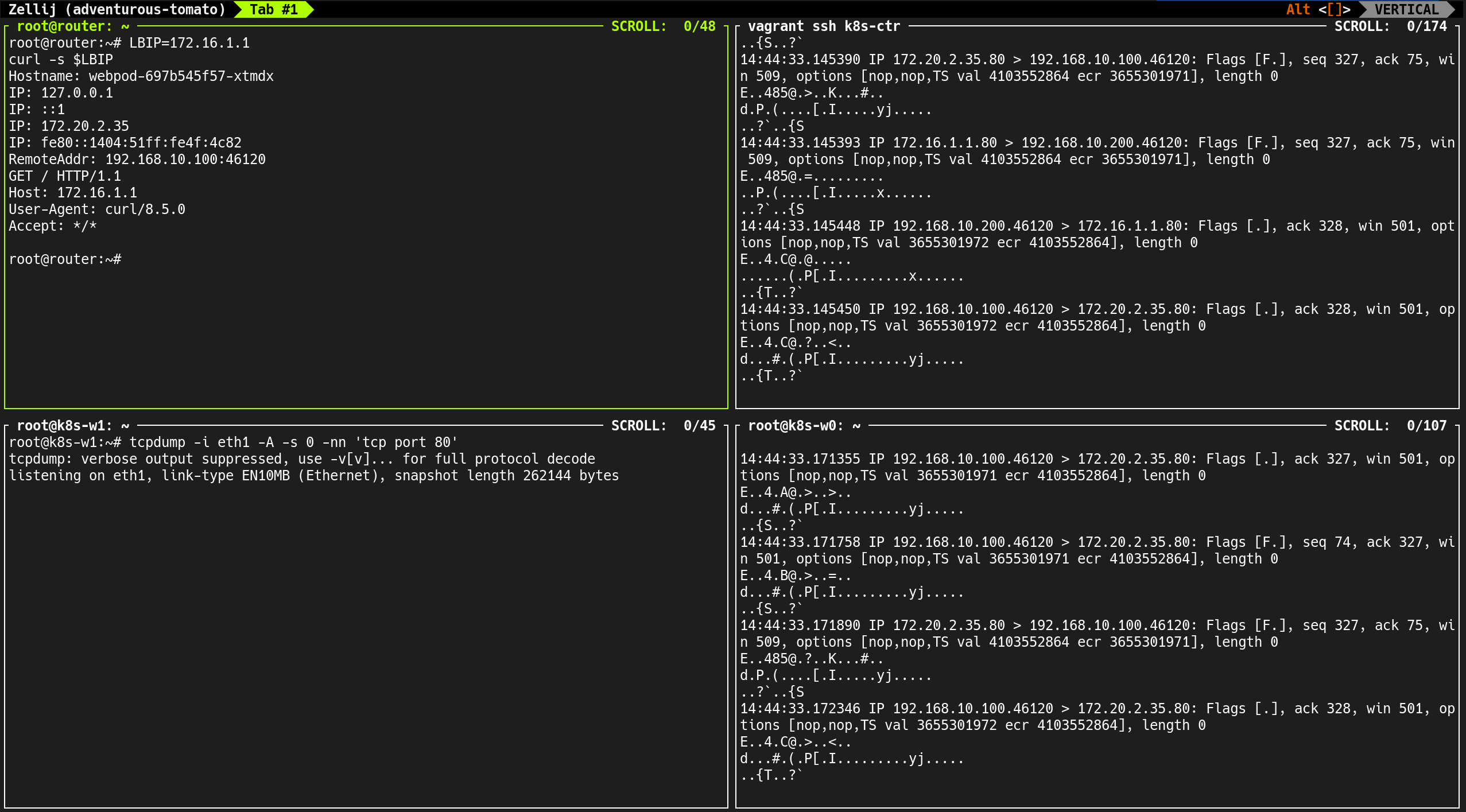

1. Run tcpdump on the control plane

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 tcp port 179 -w /tmp/bgp.pcap

# Result

tcpdump: listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

2. Restart FRR and check the BGP sessions

root@router:~# systemctl restart frr && journalctl -u frr -f

✅ Output

Aug 16 00:00:33 router watchfrr.sh[4856]: Cannot stop zebra: pid file not found

Aug 16 00:00:33 router zebra[4858]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 16 00:00:33 router bgpd[4863]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 16 00:00:33 router staticd[4870]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

Aug 16 00:00:33 router frrinit.sh[4835]: * Started watchfrr

Aug 16 00:00:33 router systemd[1]: Started frr.service - FRRouting.

Aug 16 00:00:33 router watchfrr[4845]: [QDG3Y-BY5TN] zebra state -> up : connect succeeded

Aug 16 00:00:33 router watchfrr[4845]: [QDG3Y-BY5TN] bgpd state -> up : connect succeeded

Aug 16 00:00:33 router watchfrr[4845]: [QDG3Y-BY5TN] staticd state -> up : connect succeeded

Aug 16 00:00:33 router watchfrr[4845]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

Aug 16 00:00:39 router bgpd[4863]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.100 in vrf default

Aug 16 00:00:39 router bgpd[4863]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.101 in vrf default

Aug 16 00:00:40 router bgpd[4863]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.20.100 in vrf default

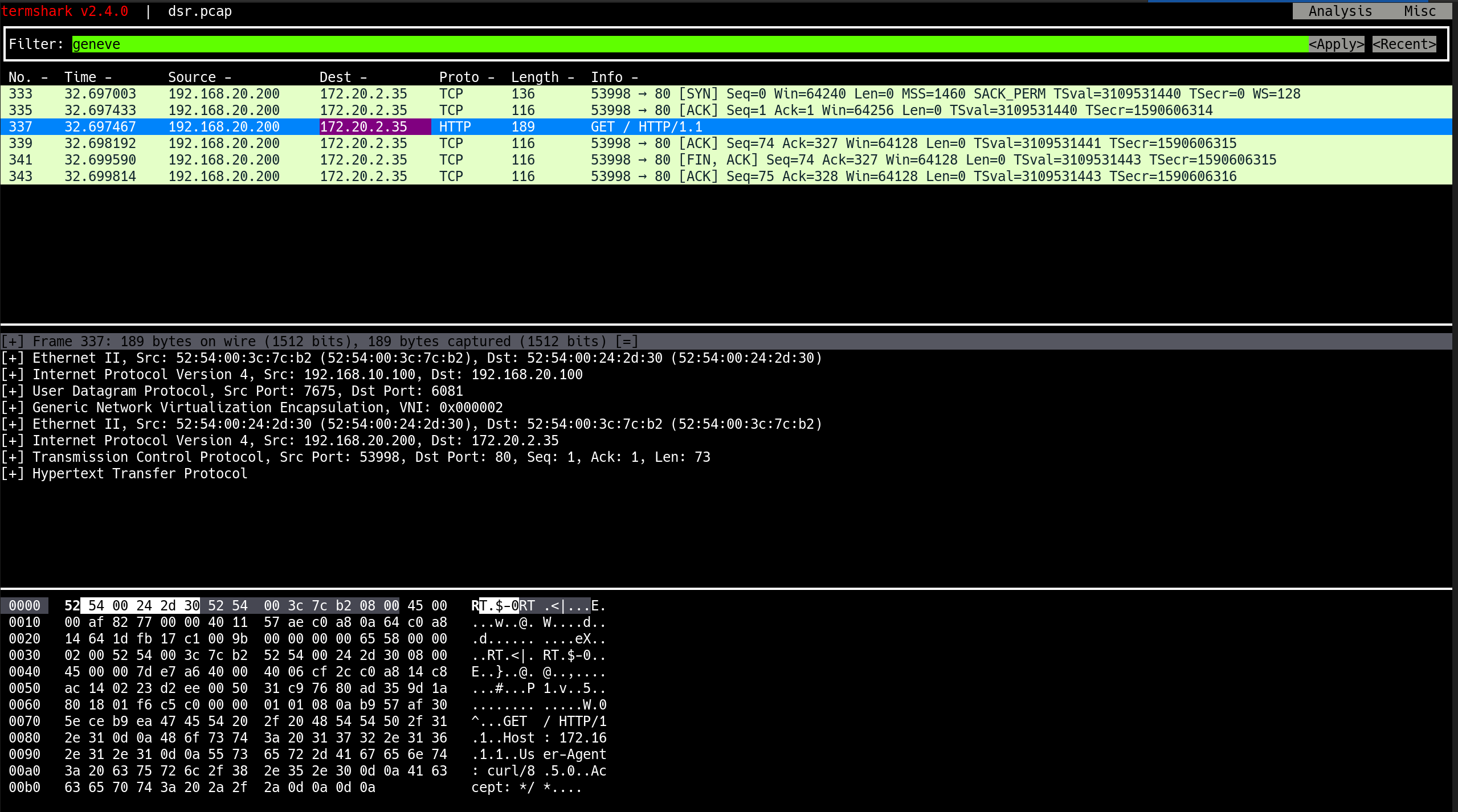

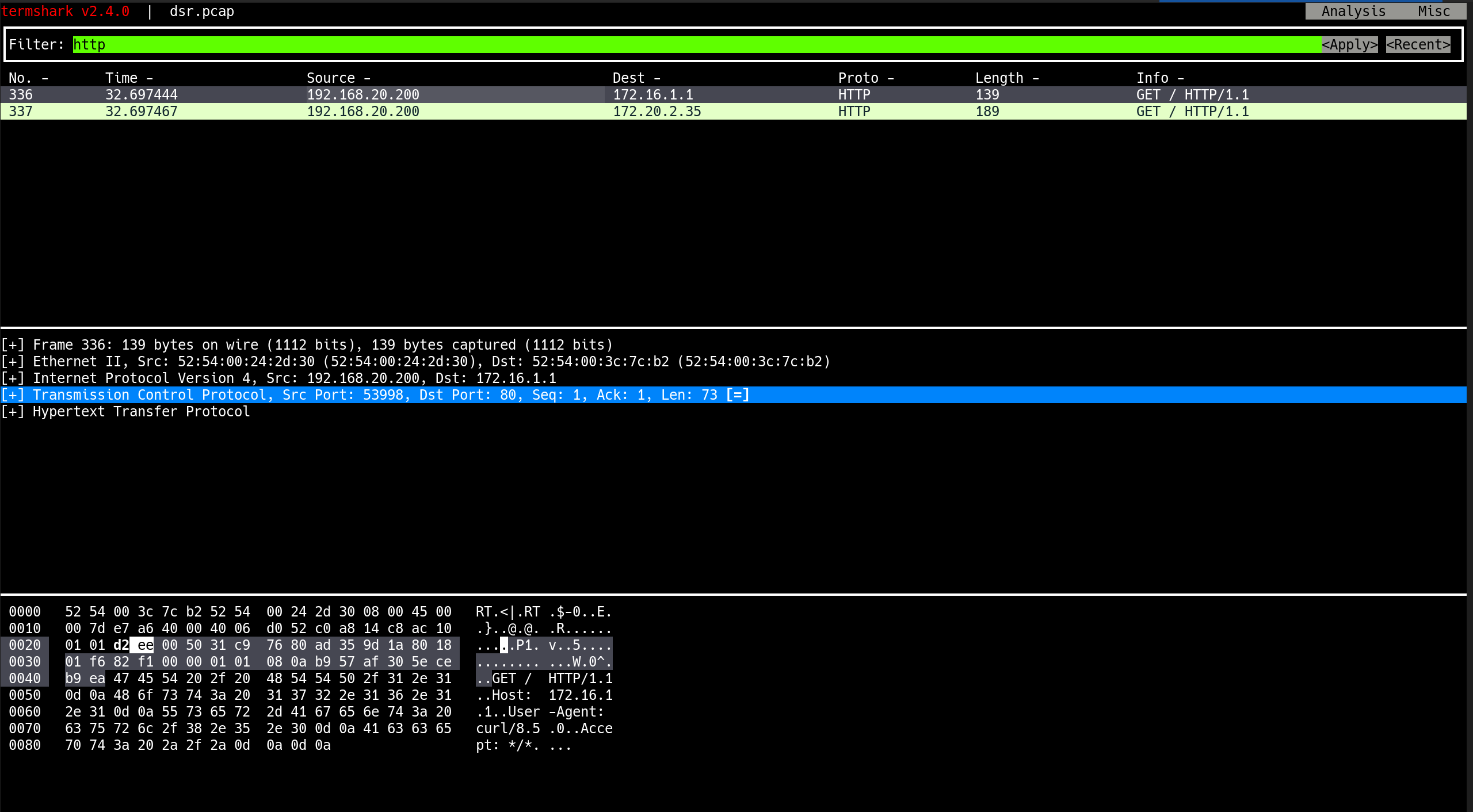

3. Inspect with Termshark

(1) Analyze the BGP packet capture file

(⎈|HomeLab:N/A) root@k8s-ctr:~# termshark -r /tmp/bgp.pcap

(2) The connection to the control plane dropped during packet analysis

vagrant halt k8s-ctr --force

- Force-stop the control-plane VM

sudo virsh start 5w_k8s-ctr

# Result

Domain '5w_k8s-ctr' started

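- Start the control-plane VM again

🛠️ Verifying connectivity after the fix

1. How Cilium BGP behaves

- By default, Cilium's BGP does not install external routes into the kernel routing table
- It is built with the `disable-fib` option, meaning BGP routes are not reflected in the kernel routing table (FIB)
- So although routes are received from the BGP peer, those CIDR ranges do not appear in `ip route` output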

2. Check the BGP routes

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 192.168.121.1 dev eth0 proto dhcp src 192.168.121.70 metric 100

172.20.0.0/24 via 172.20.0.230 dev cilium_host proto kernel src 172.20.0.230

172.20.0.230 dev cilium_host proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

192.168.121.0/24 dev eth0 proto kernel scope link src 192.168.121.70 metric 100

192.168.121.1 dev eth0 proto dhcp scope link src 192.168.121.70 metric 100

- The 172.20.1.0/24 and 172.20.2.0/24 ranges are missing
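The same comparison can be scripted: given the PodCIDRs that should be reachable, list the ones with no entry in the kernel routing table. A sketch under the assumption that the first field of each `ip route` line is the destination prefix; `missing_routes` is a hypothetical helper name:

```bash
# Print every expected prefix that has no entry in the routing table.
# stdin: plain `ip route` output; arguments: the prefixes to look for.
missing_routes() {
  local table prefix
  table=$(cat)
  for prefix in "$@"; do
    printf '%s\n' "$table" |
      awk -v p="$prefix" '$1 == p { found = 1 } END { exit !found }' ||
      printf '%s\n' "$prefix"
  done
}
```

For example, `ip route | missing_routes 172.20.0.0/24 172.20.1.0/24 172.20.2.0/24` on k8s-ctr would report the two remote ranges.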

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium bgp routes

✅ Output

(Defaulting to `available ipv4 unicast` routes, please see help for more options)

Node VRouter Prefix NextHop Age Attrs

k8s-ctr 65001 172.20.0.0/24 0.0.0.0 9m26s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w0 65001 172.20.2.0/24 0.0.0.0 57m18s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w1 65001 172.20.1.0/24 0.0.0.0 57m18s [{Origin: i} {Nexthop: 0.0.0.0}]

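- Each node's own PodCIDR is present in its BGP table (next hop 0.0.0.0, i.e. locally originated), so the BGP side is healthy

In short, BGP route exchange works, but the learned routes are not installed in the kernel routing table, so cross-node pod traffic fails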

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-fbtbj

---

---

---

---

---

---

Hostname: webpod-697b545f57-fbtbj

---

---

...

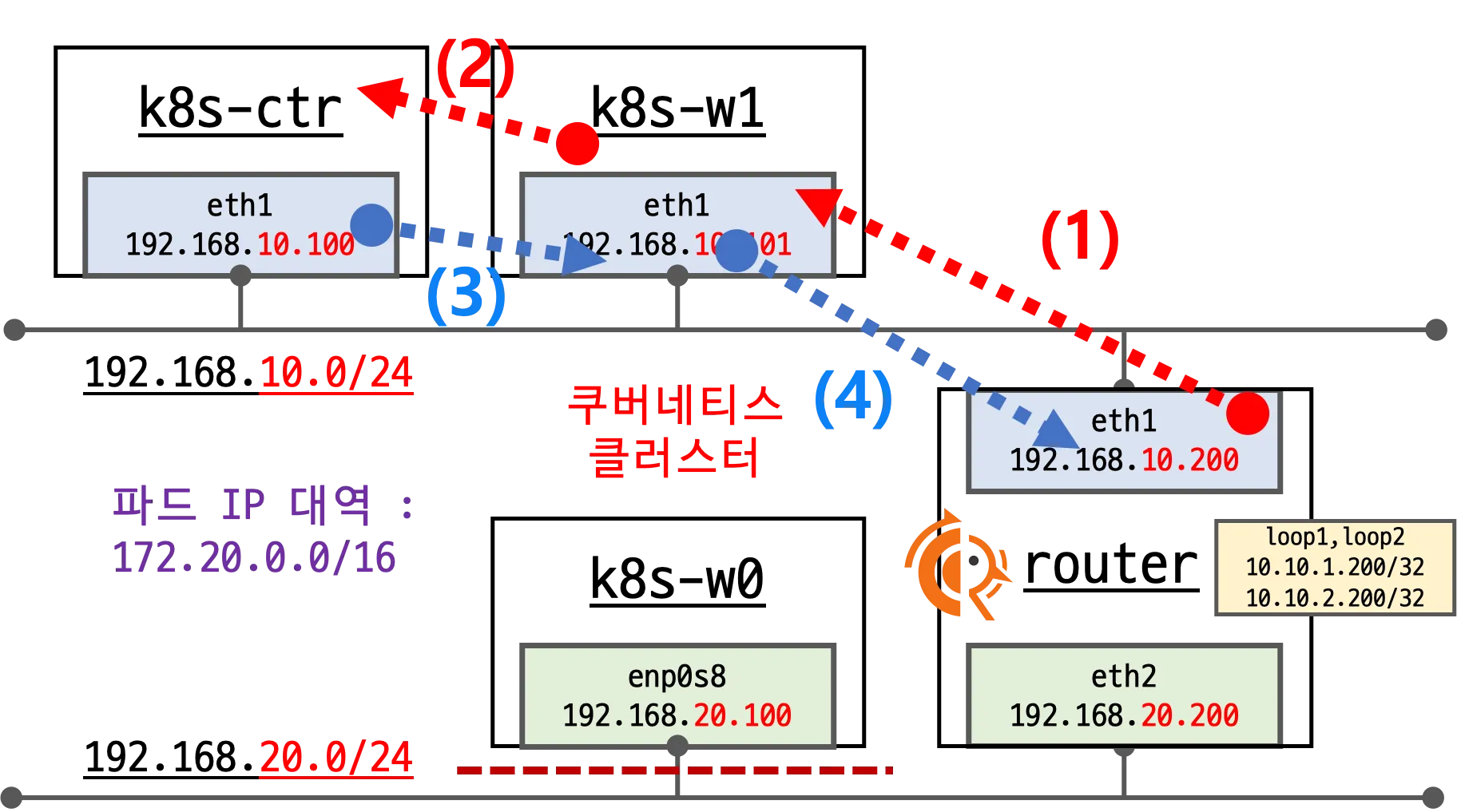

3. Route the Kubernetes pod range through eth1

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip route add 172.20.0.0/16 via 192.168.10.200

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w1 sudo ip route add 172.20.0.0/16 via 192.168.10.200

sshpass -p 'vagrant' ssh vagrant@k8s-w0 sudo ip route add 172.20.0.0/16 via 192.168.20.200

- eth0 → internet traffic only
- eth1 → Kubernetes pod traffic only
- The default route points at eth0, so an explicit route is added to send the pod range (172.20.0.0/16) through eth1 via the router

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-rpblf

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-rpblf

---

Hostname: webpod-697b545f57-rpblf

---

Hostname: webpod-697b545f57-rpblf

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-pxhvr

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-rpblf

---

Hostname: webpod-697b545f57-fbtbj

---

Hostname: webpod-697b545f57-pxhvr

---

...

- After the routes are added, pod-to-pod traffic works across nodes
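The gateway differs per node because k8s-w0 sits on 192.168.20.0/24 while the other two nodes are on 192.168.10.0/24. A small sketch that derives the gateway from a node's address, assuming (as in this lab) that the router always holds the `.200` address of a /24 subnet; `gateway_for` is a hypothetical helper:

```bash
# Derive the router address on the same /24 as the given node IP.
gateway_for() {
  # Strip the last octet and append the router's host part (.200 in this lab).
  printf '%s.200\n' "${1%.*}"
}

# Example (not executed here): `ip route replace` can be re-run safely,
# whereas `ip route add` fails if the route already exists.
# ip route replace 172.20.0.0/16 via "$(gateway_for 192.168.20.100)"
```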

⏸️ Node (k8s-w0) maintenance scenario

1. Start node maintenance (drain k8s-w0)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl drain k8s-w0 --ignore-daemonsets

# Result

node/k8s-w0 cordoned

Warning: ignoring DaemonSet-managed Pods: kube-system/cilium-envoy-8tgrn, kube-system/cilium-wszbk, kube-system/kube-proxy-xhjtq

evicting pod default/webpod-697b545f57-rpblf

pod/webpod-697b545f57-rpblf evicted

node/k8s-w0 drained

- `kubectl drain` safely evicts the pods from `k8s-w0` and blocks new scheduling on it

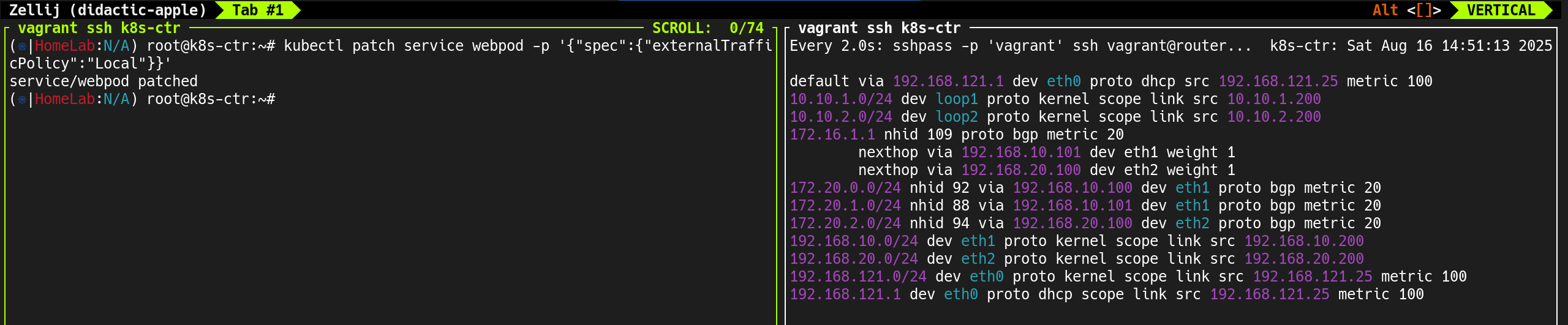

2. Disable BGP on the node (enable-bgp=false)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl label nodes k8s-w0 enable-bgp=false --overwrite

# Result

node/k8s-w0 labeled

- For maintenance, the `enable-bgp` label on `k8s-w0` is changed to `false`
- The node no longer matches the `nodeSelector` of the cluster config, so Cilium stops running BGP on it

3. Check the node status (SchedulingDisabled)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node

✅ Output

NAME STATUS ROLES AGE VERSION

k8s-ctr Ready control-plane 124m v1.33.2

k8s-w0 Ready,SchedulingDisabled <none> 115m v1.33.2

k8s-w1 Ready <none> 124m v1.33.2

- `k8s-w0` now shows `Ready,SchedulingDisabled`

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumbgpnodeconfigs

NAME AGE

k8s-ctr 70m

k8s-w1 70m

- `k8s-w0` is also gone from the CiliumBGPNodeConfig list

4. Check BGP routes and peers

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium bgp routes

✅ Output

(Defaulting to `available ipv4 unicast` routes, please see help for more options)

Node VRouter Prefix NextHop Age Attrs

k8s-ctr 65001 172.20.0.0/24 0.0.0.0 23m21s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w1 65001 172.20.1.0/24 0.0.0.0 1h11m13s [{Origin: i} {Nexthop: 0.0.0.0}]

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium bgp peers

✅ Output

Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

k8s-ctr 65001 65000 192.168.10.200 established 15m58s ipv4/unicast 3 2

k8s-w1 65001 65000 192.168.10.200 established 15m58s ipv4/unicast 3 2

- The `k8s-w0` entries have disappeared from both outputs

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp summary'"

✅ Output

IPv4 Unicast Summary (VRF default):

BGP router identifier 192.168.10.200, local AS number 65000 vrf-id 0

BGP table version 5

RIB entries 5, using 960 bytes of memory

Peers 3, using 2172 KiB of memory

Peer groups 1, using 64 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

192.168.10.100 4 65001 400 404 0 0 0 00:19:48 1 3 N/A

192.168.10.101 4 65001 400 404 0 0 0 00:19:49 1 3 N/A

192.168.20.100 4 65001 266 267 0 0 0 00:06:48 Active 0 N/A

Total number of neighbors 3

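- On the FRR router, the k8s-w0 neighbor (192.168.20.100) now shows the Active state: the session is down and the router is waiting to re-establish it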
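Because the State/PfxRcd column holds a number for established sessions and a state name otherwise, down neighbors can be picked out mechanically. A sketch that assumes the twelve-column summary layout shown above; `frr_down_neighbors` is a hypothetical helper name:

```bash
# Print "neighbor state" for every neighbor whose State/PfxRcd column
# (field 10) is a state name rather than a prefix count.
# Reads `vtysh -c 'show ip bgp summary'` output on stdin.
frr_down_neighbors() {
  awk '$1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && $10 !~ /^[0-9]+$/ { print $1, $10 }'
}
```

It could be used on the router as `vtysh -c 'show ip bgp summary' | frr_down_neighbors`.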

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip route bgp'"

✅ Output

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, F - PBR,

f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

B>* 172.20.0.0/24 [20/0] via 192.168.10.100, eth1, weight 1, 00:18:28

B>* 172.20.1.0/24 [20/0] via 192.168.10.101, eth1, weight 1, 00:18:28

- The `k8s-w0` PodCIDR (172.20.2.0/24) has also been removed from the router's routing table

5. Restore the node (enable-bgp=true and uncordon)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl label nodes k8s-w0 enable-bgp=true --overwrite

# Result

node/k8s-w0 labeled

- Once maintenance is finished, the `k8s-w0` label is set back to `enable-bgp=true`

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl uncordon k8s-w0

# Result

node/k8s-w0 uncordoned

- `kubectl uncordon` allows scheduling again and returns the node to its normal state

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node

kubectl get ciliumbgpnodeconfigs

cilium bgp routes

cilium bgp peers

✅ Output

NAME STATUS ROLES AGE VERSION

k8s-ctr Ready control-plane 132m v1.33.2

k8s-w0 Ready <none> 123m v1.33.2

k8s-w1 Ready <none> 132m v1.33.2

NAME AGE

k8s-ctr 77m

k8s-w0 47s

k8s-w1 77m

(Defaulting to `available ipv4 unicast` routes, please see help for more options)

Node VRouter Prefix NextHop Age Attrs

k8s-ctr 65001 172.20.0.0/24 0.0.0.0 29m55s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w0 65001 172.20.2.0/24 0.0.0.0 48s [{Origin: i} {Nexthop: 0.0.0.0}]

k8s-w1 65001 172.20.1.0/24 0.0.0.0 1h17m47s [{Origin: i} {Nexthop: 0.0.0.0}]

Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

k8s-ctr 65001 65000 192.168.10.200 established 22m2s ipv4/unicast 4 2

k8s-w0 65001 65000 192.168.10.200 established 46s ipv4/unicast 4 2

k8s-w1 65001 65000 192.168.10.200 established 22m2s ipv4/unicast 4 2

6. Redistribute pods across the nodes

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 1 (31m ago) 117m 172.20.0.35 k8s-ctr <none> <none>

webpod-697b545f57-fbtbj 1/1 Running 1 (31m ago) 119m 172.20.0.6 k8s-ctr <none> <none>

webpod-697b545f57-lzxbc 1/1 Running 0 10m 172.20.1.98 k8s-w1 <none> <none>

webpod-697b545f57-pxhvr 1/1 Running 0 119m 172.20.1.65 k8s-w1 <none> <none>

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl scale deployment webpod --replicas 0

# Result

deployment.apps/webpod scaled

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl scale deployment webpod --replicas 3

# Result

deployment.apps/webpod scaled

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 1 (31m ago) 117m 172.20.0.35 k8s-ctr <none> <none>

webpod-697b545f57-5twrq 1/1 Running 0 7s 172.20.1.119 k8s-w1 <none> <none>

webpod-697b545f57-cp7xq 1/1 Running 0 7s 172.20.0.15 k8s-ctr <none> <none>

webpod-697b545f57-xtmdx 1/1 Running 0 7s 172.20.2.35 k8s-w0 <none> <none>

- Scaling the `webpod` deployment down to 0 and back up to 3 with `kubectl scale` confirms that pods are scheduled on `k8s-w0` again
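The maintenance flow above boils down to two ordered pairs of commands. A dry-run sketch that only prints them, so the order can be reviewed before anything is executed; `maintenance_plan` is a hypothetical helper, and the commands it prints are the ones used in this section:

```bash
# Print (without running) the commands for entering or leaving maintenance.
# Usage: maintenance_plan start|end <node>
maintenance_plan() {
  local phase="$1" node="$2"
  case "$phase" in
    start)
      # Move workloads away first, then withdraw the node from BGP.
      printf 'kubectl drain %s --ignore-daemonsets\n' "$node"
      printf 'kubectl label nodes %s enable-bgp=false --overwrite\n' "$node"
      ;;
    end)
      # Re-enable BGP first so routes exist before pods are scheduled.
      printf 'kubectl label nodes %s enable-bgp=true --overwrite\n' "$node"
      printf 'kubectl uncordon %s\n' "$node"
      ;;
    *)
      printf 'usage: maintenance_plan start|end <node>\n' >&2
      return 1
      ;;
  esac
}
```

For example, `maintenance_plan start k8s-w0` prints the drain and label commands in the order they were run above.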

🚫 Disabling CRD status reporting

- https://docs.cilium.io/en/stable/network/bgp-control-plane/bgp-control-plane-operation/#disabling-crd-status-report

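- By default, Cilium BGP writes peer state, session, and route information to the `status` field of each `CiliumBGPNodeConfig` resource
- In large clusters these frequent status updates can put load on the Kubernetes API server
- The official documentation therefore recommends turning BGP status reporting off in such environments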

1. Check the current BGP status (status report enabled)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumbgpnodeconfigs -o yaml | yq

✅ Output

{

"apiVersion": "v1",

"items": [

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T14:27:12Z",

"generation": 1,

"name": "k8s-ctr",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "15080",

"uid": "a72d5068-f106-4b37-a0a7-2ad0e72e8f9d"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T15:22:57Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 3,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

},

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T15:44:11Z",

"generation": 1,

"name": "k8s-w0",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "16068",

"uid": "fd222576-cc33-4f4f-b7cd-c8157fbc8009"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T15:44:13Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 3,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

},

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumBGPNodeConfig",

"metadata": {

"creationTimestamp": "2025-08-15T14:27:12Z",

"generation": 1,

"name": "k8s-w1",

"ownerReferences": [

{

"apiVersion": "cilium.io/v2",

"controller": true,

"kind": "CiliumBGPClusterConfig",

"name": "cilium-bgp",

"uid": "e1f4b328-d375-4a7c-a99b-ed2658602a14"

}

],

"resourceVersion": "15076",

"uid": "d98cdab1-5d96-4ecf-ae47-1cc3c80a3071"

},

"spec": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peerConfigRef": {

"name": "cilium-peer"

}

}

]

}

]

},

"status": {

"bgpInstances": [

{

"localASN": 65001,

"name": "instance-65001",

"peers": [

{

"establishedTime": "2025-08-15T15:22:57Z",

"name": "tor-switch",

"peerASN": 65000,

"peerAddress": "192.168.10.200",

"peeringState": "established",

"routeCount": [

{

"advertised": 2,

"afi": "ipv4",

"received": 3,

"safi": "unicast"

}

],

"timers": {

"appliedHoldTimeSeconds": 9,

"appliedKeepaliveSeconds": 3

}

}

]

}

]

}

}

],

"kind": "List",

"metadata": {

"resourceVersion": ""

}

}

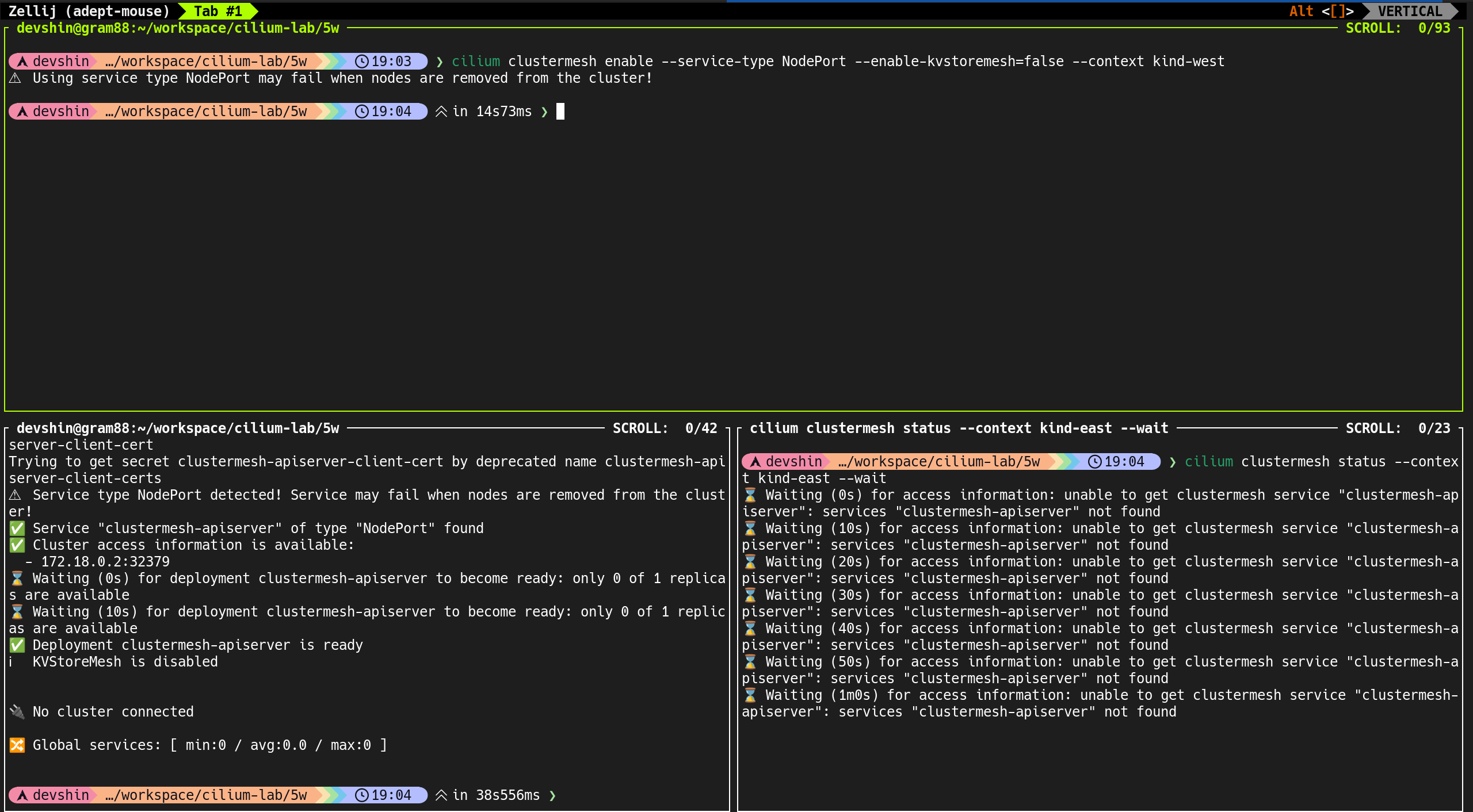

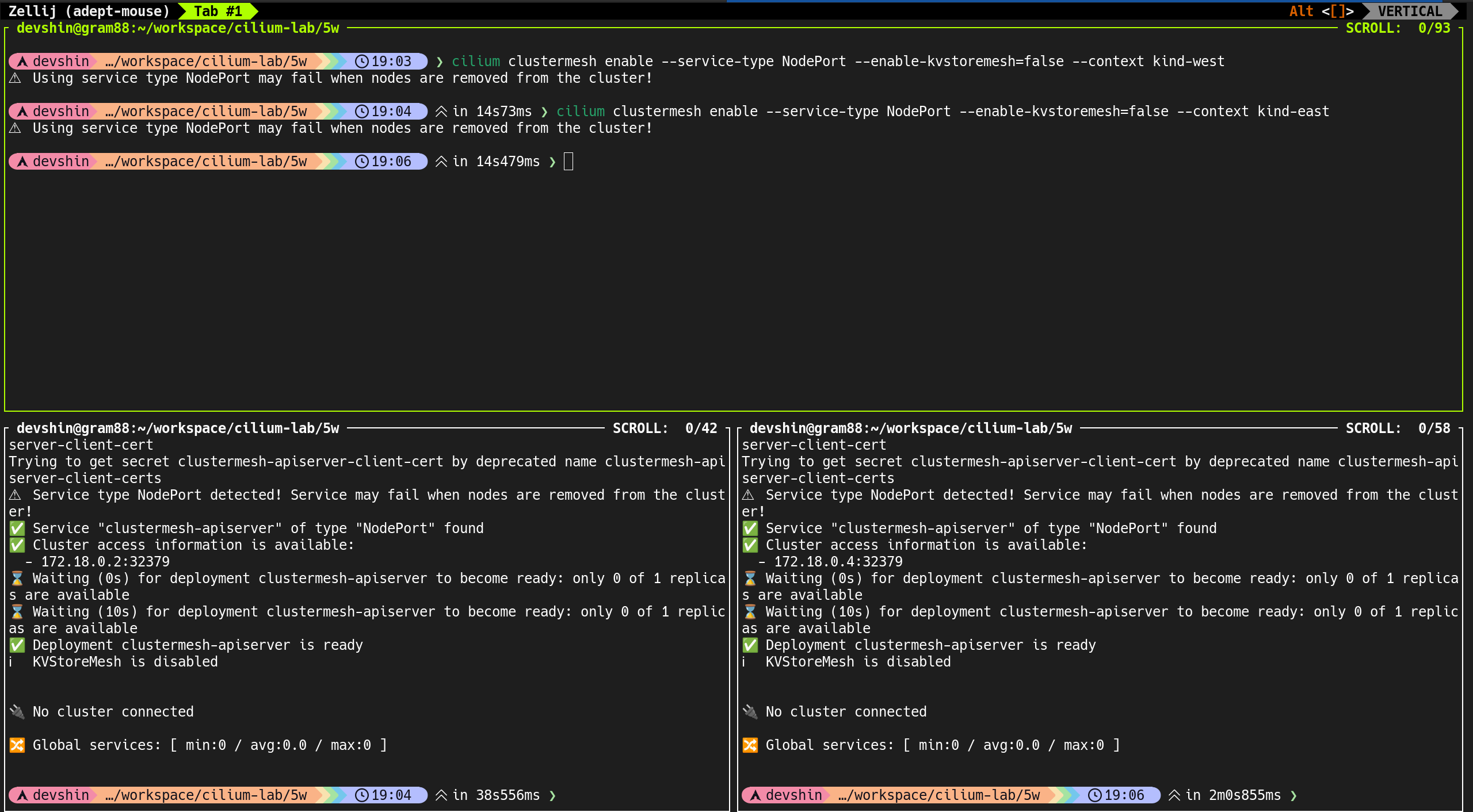

- The `status` of each node (k8s-ctr, k8s-w0, k8s-w1) records the peer connection state (established), the advertised and received route counts, and the applied keepalive and hold timers

2. Disable status reporting with a Helm upgrade

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --version 1.18.0 --namespace kube-system --reuse-values \

--set bgpControlPlane.statusReport.enabled=false

# Result

Release "cilium" has been upgraded. Happy Helming!

NAME: cilium

LAST DEPLOYED: Sat Aug 16 00:51:38 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.18.0.

For any further help, visit https://docs.cilium.io/en/v1.18/gettinghelp

- The `cilium` chart is redeployed successfully; BGP status reporting stops once the agents restart with the new setting

3. Rolling restart of the Cilium DaemonSet

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart ds/cilium

# Result

daemonset.apps/cilium restarted
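Once the agents have restarted, one rough way to confirm the change without reading the whole resource is to look for a field that only the status report writes. A sketch that searches for the `peeringState` key seen in the earlier output; `reports_peering_state` is a hypothetical helper, and this is a plain text match rather than a real JSON query:

```bash
# Succeed if the JSON on stdin still contains a "peeringState" key,
# i.e. if BGP peer status is still being written to the resource.
reports_peering_state() {
  grep -q '"peeringState"'
}
```

It could be used as `kubectl get ciliumbgpnodeconfigs -o json | reports_peering_state && echo "status is still reported"`.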

4. Check the result (status report removed)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumbgpnodeconfigs -o yaml | yq

✅ Output

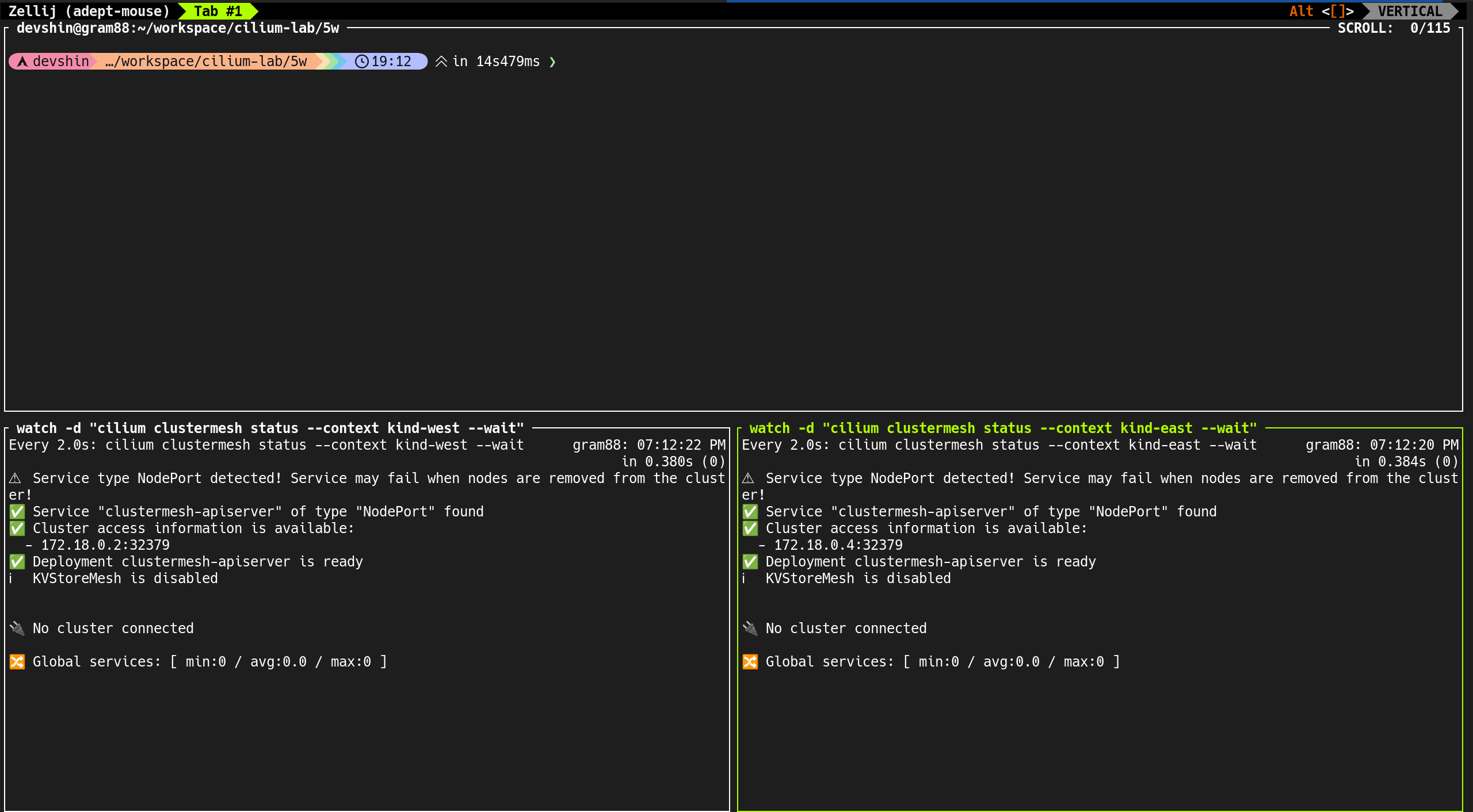

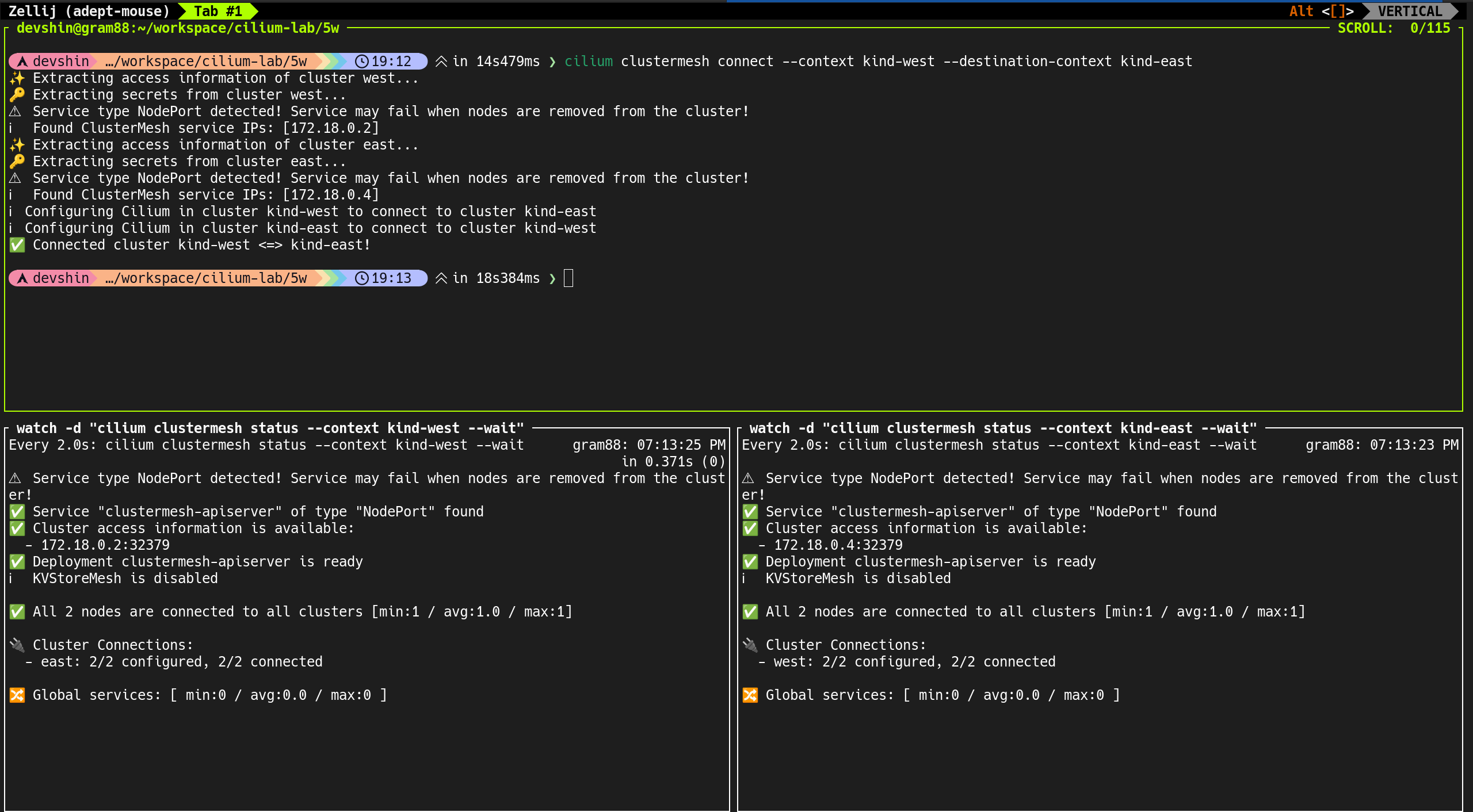

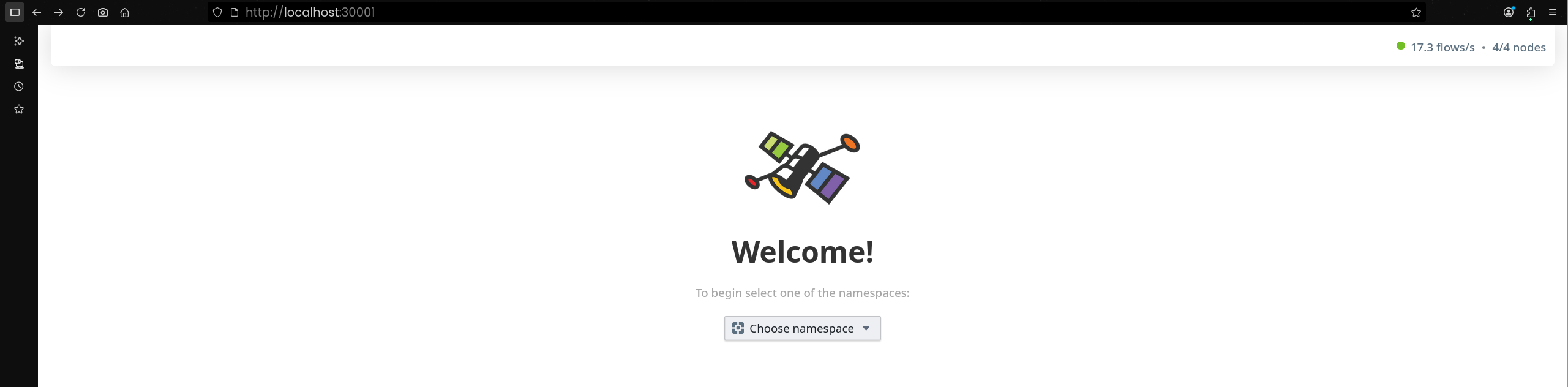

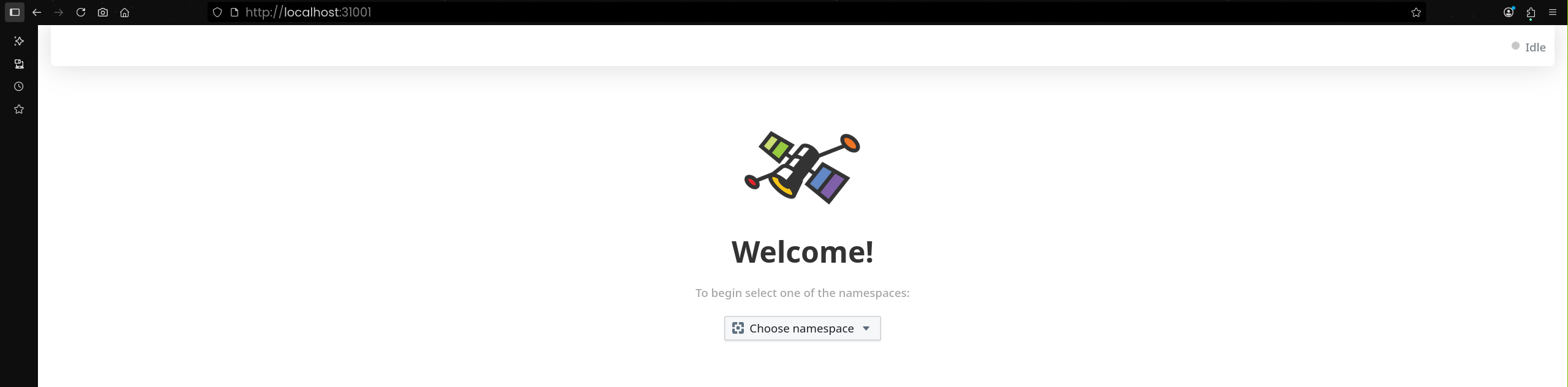
