Cilium Week 4 Summary

🔧 Lab Environment Setup

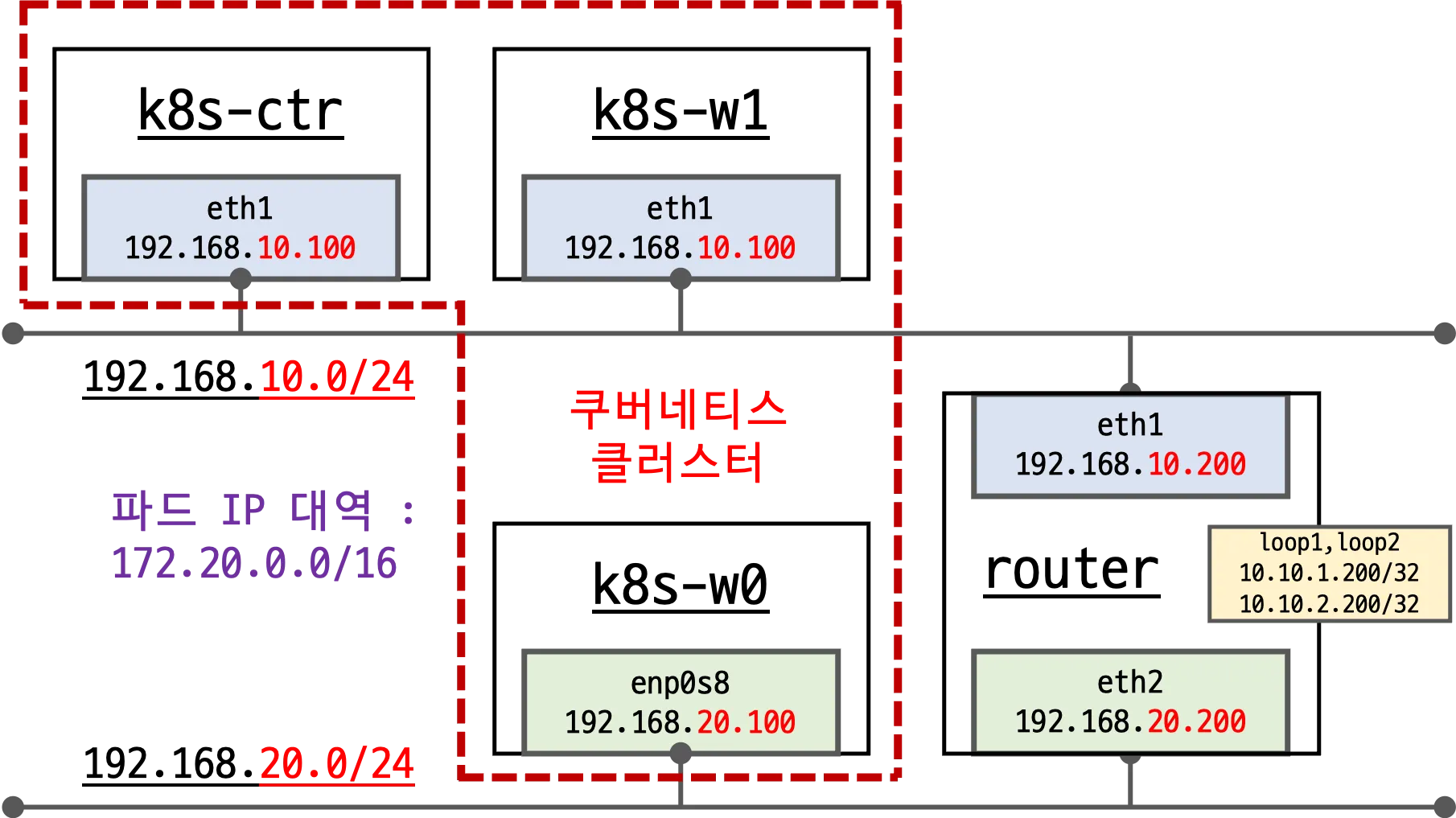

1. Changes to the network layout

- In last week's lab, all k8s nodes were on the same network
- What changed
  - Control plane (192.168.10.100) and worker node 1 (192.168.10.101) → 192.168.10.0/24
  - Worker node 0 (192.168.20.100) → 192.168.20.0/24
- A router (eth1: 192.168.10.200, eth2: 192.168.20.200) connects the two networks
  - The router also has loopback interfaces (loop1, loop2) and IP forwarding enabled

2. Cluster configuration and Pod CIDR settings

- Uses Cilium cluster-scope IPAM

```bash
--set ipam.mode="cluster-pool" \
--set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} \
--set ipv4NativeRoutingCIDR=172.20.0.0/16
```

- Pod CIDR assigned to each node
  - k8s-ctr: 172.20.0.0/24
  - k8s-w1: 172.20.1.0/24
  - k8s-w0: 172.20.2.0/24
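Cluster-pool IPAM gives each node a fixed-size block carved out of `clusterPoolIPv4PodCIDRList`. The bash sketch below reproduces that arithmetic under two assumptions. First, it assumes a per-node mask of /24, which is Cilium's default `clusterPoolIPv4MaskSize` and matches the blocks above. Second, it assumes blocks are handed out in sequence. The helper names (`ip2int`, `int2ip`, `nth_pod_cidr`) are hypothetical and not part of Cilium. Which node ends up with which index depends on the order in which the operator sees the nodes.

```bash
#!/usr/bin/env bash
# Sketch: carve per-node Pod CIDRs out of a cluster-pool CIDR (IPv4 only).
# Hypothetical helpers, assuming sequential allocation; not Cilium's operator code.

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted-quad IPv4 address
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_pod_cidr POOL MASK INDEX -> the INDEX-th /MASK block inside POOL
nth_pod_cidr() {
  local pool=$1 mask=$2 idx=$3 base plen count start
  base=$(ip2int "${pool%/*}")
  plen=${pool#*/}
  count=$(( 1 << (mask - plen) ))   # a /16 split into /24s gives 256 blocks
  if (( idx < 0 || idx >= count )); then
    echo "index $idx out of range (0..$(( count - 1 )))" >&2
    return 1
  fi
  start=$(( base + idx * (1 << (32 - mask)) ))
  echo "$(int2ip "$start")/$mask"
}

# Usage: the first three blocks of the lab's pool with the default /24 mask
for i in 0 1 2; do
  nth_pod_cidr 172.20.0.0/16 24 "$i"
done
```

Run as-is, it prints 172.20.0.0/24, 172.20.1.0/24 and 172.20.2.0/24. These are the same blocks the lab's three nodes received.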

3. Fixing the VirtualBox host-only IP range restriction

```bash
curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/Vagrantfile
vagrant up
```

📢 Error

```
...
==> router: Cloning VM...
==> router: Matching MAC address for NAT networking...
==> router: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...
==> router: Setting the name of the VM: router
==> router: Clearing any previously set network interfaces...
The IP address configured for the host-only network is not within the
allowed ranges. Please update the address used to be within the allowed
ranges and run the command again.

  Address: 192.168.20.200
  Ranges: 192.168.10.0/24

Valid ranges can be modified in the /etc/vbox/networks.conf file. For
more information including valid format see:

  https://www.virtualbox.org/manual/ch06.html#network_hostonly
```

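- Cause: 192.168.20.200 falls outside the ranges VirtualBox currently allows for host-only networks. Per the `Ranges` line, only 192.168.10.0/24 is allowed.
- Fix: add the range below to `/etc/vbox/networks.conf`, then run `vagrant halt` followed by `vagrant up`

```
* 192.168.0.0/16
```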
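The error comes down to a CIDR membership test. 192.168.20.200 is outside 192.168.10.0/24, the only range listed under `Ranges`, but inside 192.168.0.0/16. The bash sketch below reproduces that test against lines in the `networks.conf` format (`* <cidr> [<cidr> ...]`). It is a simplified illustration with hypothetical helper names, not VirtualBox's own validation code.

```bash
#!/usr/bin/env bash
# Sketch: is an IPv4 address inside a range listed in networks.conf-style input?
# Simplified illustration with hypothetical helpers, not VirtualBox's own check.

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP falls inside CIDR
in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

# ip_allowed IP < networks.conf -> exit status 0 when any "* <cidr> ..." line covers IP
ip_allowed() {
  local ip=$1 star rest cidr
  while read -r star rest; do
    [[ $star == "*" ]] || continue   # only "* ..." lines declare ranges
    for cidr in $rest; do
      in_cidr "$ip" "$cidr" && return 0
    done
  done
  return 1
}

# Usage: the range from the error message vs. the range added as the fix
ip_allowed 192.168.20.200 <<< "* 192.168.10.0/24" || echo "192.168.20.200: not allowed"
ip_allowed 192.168.20.200 <<< "* 192.168.0.0/16" && echo "192.168.20.200: allowed"
```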

4. Booting the Vagrant-based k8s lab VMs and initial setup

```bash
vagrant up
```

✅ Output

```
Bringing machine 'k8s-ctr' up with 'virtualbox' provider...
Bringing machine 'k8s-w1' up with 'virtualbox' provider...
Bringing machine 'router' up with 'virtualbox' provider...
Bringing machine 'k8s-w0' up with 'virtualbox' provider...
==> k8s-ctr: Cloning VM...
==> k8s-ctr: Matching MAC address for NAT networking...
==> k8s-ctr: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...
==> k8s-ctr: Setting the name of the VM: k8s-ctr
==> k8s-ctr: Clearing any previously set network interfaces...
==> k8s-ctr: Preparing network interfaces based on configuration...
    k8s-ctr: Adapter 1: nat
    k8s-ctr: Adapter 2: hostonly
==> k8s-ctr: Forwarding ports...
    k8s-ctr: 22 (guest) => 60000 (host) (adapter 1)
==> k8s-ctr: Running 'pre-boot' VM customizations...
==> k8s-ctr: Booting VM...
==> k8s-ctr: Waiting for machine to boot. This may take a few minutes...
    k8s-ctr: SSH address: 127.0.0.1:60000
    k8s-ctr: SSH username: vagrant
    k8s-ctr: SSH auth method: private key
    k8s-ctr:
    k8s-ctr: Vagrant insecure key detected. Vagrant will automatically replace
    k8s-ctr: this with a newly generated keypair for better security.
    k8s-ctr:
    k8s-ctr: Inserting generated public key within guest...
    k8s-ctr: Removing insecure key from the guest if it's present...
    k8s-ctr: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-ctr: Machine booted and ready!
==> k8s-ctr: Checking for guest additions in VM...
==> k8s-ctr: Setting hostname...
==> k8s-ctr: Configuring and enabling network interfaces...
==> k8s-ctr: Running provisioner: shell...
    k8s-ctr: Running: /tmp/vagrant-shell20250807-101867-bkdz3.sh
    k8s-ctr: >>>> Initial Config Start <<<<
    k8s-ctr: [TASK 1] Setting Profile & Bashrc
    k8s-ctr: [TASK 2] Disable AppArmor
    k8s-ctr: [TASK 3] Disable and turn off SWAP
    k8s-ctr: [TASK 4] Install Packages
    k8s-ctr: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)
    k8s-ctr: [TASK 6] Install Packages & Helm
    k8s-ctr: >>>> Initial Config End <<<<
==> k8s-ctr: Running provisioner: shell...
    k8s-ctr: Running: /tmp/vagrant-shell20250807-101867-7y47ad.sh
    k8s-ctr: >>>> K8S Controlplane config Start <<<<
    k8s-ctr: [TASK 1] Initial Kubernetes
    k8s-ctr: [TASK 2] Setting kube config file
    k8s-ctr: [TASK 3] Source the completion
    k8s-ctr: [TASK 4] Alias kubectl to k
    k8s-ctr: [TASK 5] Install Kubectx & Kubens
    k8s-ctr: [TASK 6] Install Kubeps & Setting PS1
    k8s-ctr: [TASK 7] Install Cilium CNI
    k8s-ctr: [TASK 8] Install Cilium / Hubble CLI
    k8s-ctr: cilium
    k8s-ctr: hubble
    k8s-ctr: [TASK 9] Remove node taint
    k8s-ctr: node/k8s-ctr untainted
    k8s-ctr: [TASK 10] local DNS with hosts file
    k8s-ctr: [TASK 11] Dynamically provisioning persistent local storage with Kubernetes
    k8s-ctr: [TASK 12] Install Prometheus & Grafana
    k8s-ctr: [TASK 13] Install Metrics-server
    k8s-ctr: [TASK 14] Install k9s
    k8s-ctr: >>>> K8S Controlplane Config End <<<<
==> k8s-ctr: Running provisioner: shell...
    k8s-ctr: Running: /tmp/vagrant-shell20250807-101867-ret6h0.sh
    k8s-ctr: >>>> Route Add Config Start <<<<
    k8s-ctr: >>>> Route Add Config End <<<<
==> k8s-w1: Cloning VM...
==> k8s-w1: Matching MAC address for NAT networking...
==> k8s-w1: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...
==> k8s-w1: Setting the name of the VM: k8s-w1
==> k8s-w1: Clearing any previously set network interfaces...
==> k8s-w1: Preparing network interfaces based on configuration...
    k8s-w1: Adapter 1: nat
    k8s-w1: Adapter 2: hostonly
==> k8s-w1: Forwarding ports...
    k8s-w1: 22 (guest) => 60001 (host) (adapter 1)
==> k8s-w1: Running 'pre-boot' VM customizations...
==> k8s-w1: Booting VM...
==> k8s-w1: Waiting for machine to boot. This may take a few minutes...
    k8s-w1: SSH address: 127.0.0.1:60001
    k8s-w1: SSH username: vagrant
    k8s-w1: SSH auth method: private key
    k8s-w1:
    k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace
    k8s-w1: this with a newly generated keypair for better security.
    k8s-w1:
    k8s-w1: Inserting generated public key within guest...
    k8s-w1: Removing insecure key from the guest if it's present...
    k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-w1: Machine booted and ready!
==> k8s-w1: Checking for guest additions in VM...
==> k8s-w1: Setting hostname...
==> k8s-w1: Configuring and enabling network interfaces...
==> k8s-w1: Running provisioner: shell...
    k8s-w1: Running: /tmp/vagrant-shell20250807-101867-mkfn7y.sh
    k8s-w1: >>>> Initial Config Start <<<<
    k8s-w1: [TASK 1] Setting Profile & Bashrc
    k8s-w1: [TASK 2] Disable AppArmor
    k8s-w1: [TASK 3] Disable and turn off SWAP
    k8s-w1: [TASK 4] Install Packages
    k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)
    k8s-w1: [TASK 6] Install Packages & Helm
    k8s-w1: >>>> Initial Config End <<<<
==> k8s-w1: Running provisioner: shell...
    k8s-w1: Running: /tmp/vagrant-shell20250807-101867-nsboky.sh
    k8s-w1: >>>> K8S Node config Start <<<<
    k8s-w1: [TASK 1] K8S Controlplane Join
    k8s-w1: >>>> K8S Node config End <<<<
==> k8s-w1: Running provisioner: shell...
    k8s-w1: Running: /tmp/vagrant-shell20250807-101867-ub8q45.sh
    k8s-w1: >>>> Route Add Config Start <<<<
    k8s-w1: >>>> Route Add Config End <<<<
==> router: Cloning VM...
==> router: Matching MAC address for NAT networking...
==> router: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...
==> router: Setting the name of the VM: router
==> router: Clearing any previously set network interfaces...
==> router: Preparing network interfaces based on configuration...
    router: Adapter 1: nat
    router: Adapter 2: hostonly
    router: Adapter 3: hostonly
==> router: Forwarding ports...
    router: 22 (guest) => 60009 (host) (adapter 1)
==> router: Running 'pre-boot' VM customizations...
==> router: Booting VM...
==> router: Waiting for machine to boot. This may take a few minutes...
    router: SSH address: 127.0.0.1:60009
    router: SSH username: vagrant
    router: SSH auth method: private key
    router:
    router: Vagrant insecure key detected. Vagrant will automatically replace
    router: this with a newly generated keypair for better security.
    router:
    router: Inserting generated public key within guest...
    router: Removing insecure key from the guest if it's present...
    router: Key inserted! Disconnecting and reconnecting using new SSH key...
==> router: Machine booted and ready!
==> router: Checking for guest additions in VM...
==> router: Setting hostname...
==> router: Configuring and enabling network interfaces...
==> router: Running provisioner: shell...
    router: Running: /tmp/vagrant-shell20250807-101867-8m4vi7.sh
    router: >>>> Initial Config Start <<<<
    router: [TASK 0] Setting eth2
    router: [TASK 1] Setting Profile & Bashrc
    router: [TASK 2] Disable AppArmor
    router: [TASK 3] Add Kernel setting - IP Forwarding
    router: [TASK 4] Setting Dummy Interface
    router: [TASK 5] Install Packages
    router: [TASK 6] Install Apache
    router: >>>> Initial Config End <<<<
==> k8s-w0: Cloning VM...
==> k8s-w0: Matching MAC address for NAT networking...
==> k8s-w0: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...
==> k8s-w0: Setting the name of the VM: k8s-w0
==> k8s-w0: Clearing any previously set network interfaces...
==> k8s-w0: Preparing network interfaces based on configuration...
    k8s-w0: Adapter 1: nat
    k8s-w0: Adapter 2: hostonly
==> k8s-w0: Forwarding ports...
    k8s-w0: 22 (guest) => 60010 (host) (adapter 1)
==> k8s-w0: Running 'pre-boot' VM customizations...
==> k8s-w0: Booting VM...
==> k8s-w0: Waiting for machine to boot. This may take a few minutes...
    k8s-w0: SSH address: 127.0.0.1:60010
    k8s-w0: SSH username: vagrant
    k8s-w0: SSH auth method: private key
    k8s-w0:
    k8s-w0: Vagrant insecure key detected. Vagrant will automatically replace
    k8s-w0: this with a newly generated keypair for better security.
    k8s-w0:
    k8s-w0: Inserting generated public key within guest...
    k8s-w0: Removing insecure key from the guest if it's present...
    k8s-w0: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-w0: Machine booted and ready!
==> k8s-w0: Checking for guest additions in VM...
==> k8s-w0: Setting hostname...
==> k8s-w0: Configuring and enabling network interfaces...
==> k8s-w0: Running provisioner: shell...
    k8s-w0: Running: /tmp/vagrant-shell20250807-101867-5qlp47.sh
    k8s-w0: >>>> Initial Config Start <<<<
    k8s-w0: [TASK 1] Setting Profile & Bashrc
    k8s-w0: [TASK 2] Disable AppArmor
    k8s-w0: [TASK 3] Disable and turn off SWAP
    k8s-w0: [TASK 4] Install Packages
    k8s-w0: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)
    k8s-w0: [TASK 6] Install Packages & Helm
    k8s-w0: >>>> Initial Config End <<<<
==> k8s-w0: Running provisioner: shell...
    k8s-w0: Running: /tmp/vagrant-shell20250807-101867-vdcosy.sh
    k8s-w0: >>>> K8S Node config Start <<<<
    k8s-w0: [TASK 1] K8S Controlplane Join
    k8s-w0: >>>> K8S Node config End <<<<
==> k8s-w0: Running provisioner: shell...
    k8s-w0: Running: /tmp/vagrant-shell20250807-101867-53icua.sh
    k8s-w0: >>>> Route Add Config Start <<<<
    k8s-w0: >>>> Route Add Config End <<<<
```

5. SSH into the control plane

```bash
vagrant ssh k8s-ctr
```

✅ Output

```
Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/pro

 System information as of Thu Aug 7 11:32:20 PM KST 2025

  System load: 0.5
  Usage of /: 29.6% of 30.34GB
  Memory usage: 49%
  Swap usage: 0%
  Processes: 216
  Users logged in: 0
  IPv4 address for eth0: 10.0.2.15
  IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software
More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.
(⎈|HomeLab:N/A) root@k8s-ctr:~#
```

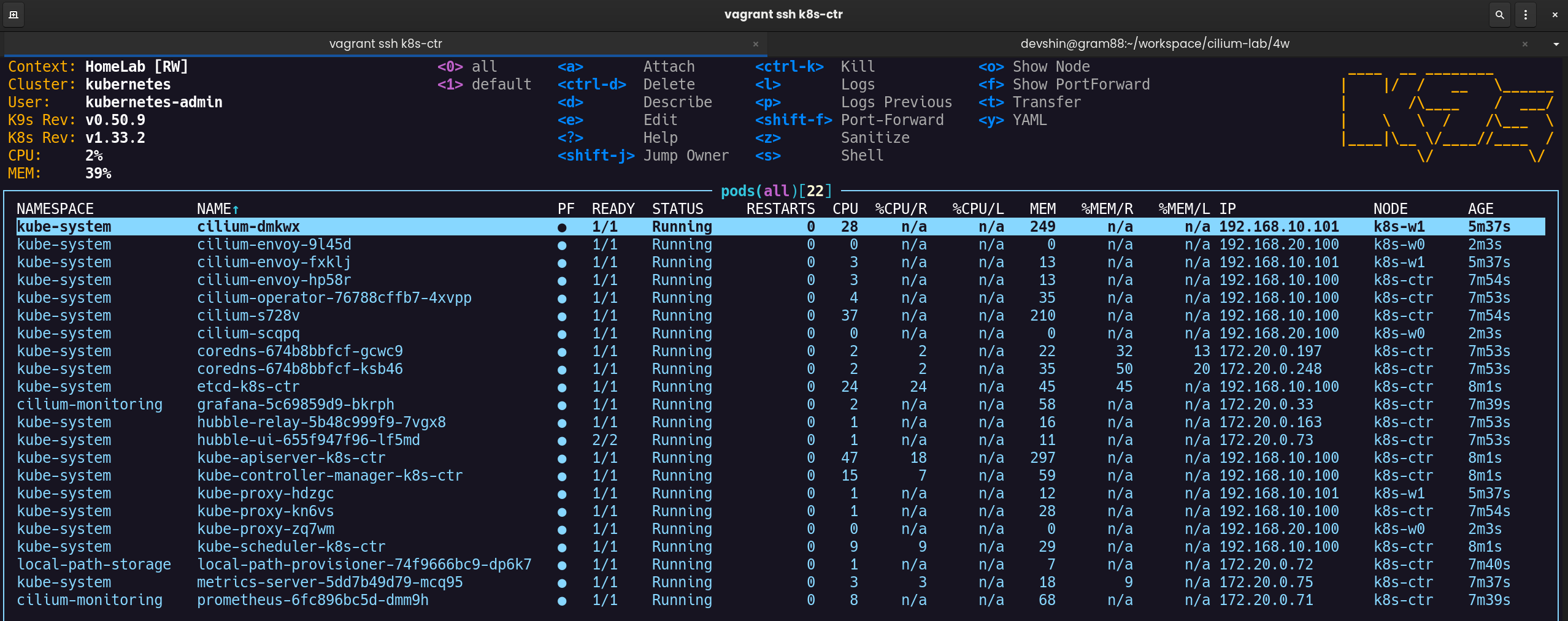

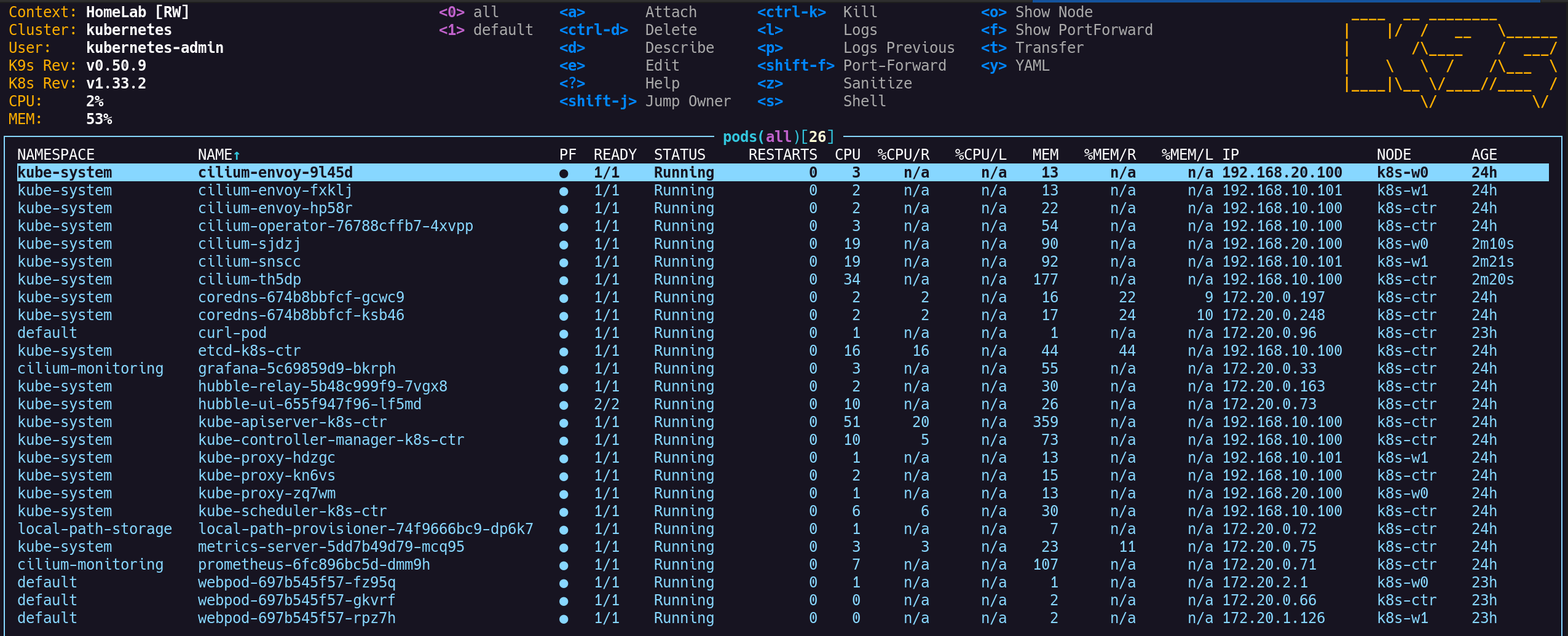

6. Run k9s

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# k9s
```

7. Verify remote access to the worker nodes and the router

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w0 hostname
Warning: Permanently added 'k8s-w0' (ED25519) to the list of known hosts.
k8s-w0
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w1 hostname
Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.
k8s-w1
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@router hostname
Warning: Permanently added 'router' (ED25519) to the list of known hosts.
router
```

8. Check the Cilium Pod CIDRs

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2
```

✅ Output

```
"podCIDRs": [
    "172.20.0.0/24"
],
--
"podCIDRs": [
    "172.20.2.0/24"
],
--
"podCIDRs": [
    "172.20.1.0/24"
],
```

- Confirms that Cilium cluster-scope IPAM assigned each node its own Pod CIDR. The list is ordered by node name: k8s-ctr, k8s-w0, k8s-w1.

🌐 Checking network information

1. Node network information

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# k get node -owide
```

✅ Output

```
NAME      STATUS   ROLES           AGE     VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
k8s-ctr   Ready    control-plane   11m     v1.33.2   192.168.10.100   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
k8s-w0    Ready    <none>          5m9s    v1.33.2   192.168.20.100   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
k8s-w1    Ready    <none>          8m43s   v1.33.2   192.168.10.101   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
```

- The nodes are split between the `192.168.10.x` network (k8s-ctr, k8s-w1) and the `192.168.20.x` network (k8s-w0)

2. Router interface information

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -br -c -4 addr
```

✅ Output

```
lo               UNKNOWN        127.0.0.1/8
eth0             UP             10.0.2.15/24 metric 100
eth1             UP             192.168.10.200/24
eth2             UP             192.168.20.200/24
loop1            UNKNOWN        10.10.1.200/24
loop2            UNKNOWN        10.10.2.200/24
```

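- eth1 (192.168.10.200) and eth2 (192.168.20.200) act as the gateways for their respective networks. The loopback interfaces loop1 (10.10.1.200/24) and loop2 (10.10.2.200/24) are also present.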

3. Worker node interface information

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w1 ip -c -4 addr show dev eth1
```

✅ Output

```
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    altname enp0s8
    inet 192.168.10.101/24 brd 192.168.10.255 scope global eth1
       valid_lft forever preferred_lft forever
```

- Worker node 1: eth1 → 192.168.10.101/24

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w0 ip -c -4 addr show dev eth1
```

✅ Output

```
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    altname enp0s8
    inet 192.168.20.100/24 brd 192.168.20.255 scope global eth1
       valid_lft forever preferred_lft forever
```

- Worker node 0: eth1 → 192.168.20.100/24

4. Router routing table

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -c route
```

✅ Output

```
default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200
10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200
192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200
192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200
```

5. Control plane static routes

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route | grep static
```

✅ Output

```
10.10.0.0/16 via 192.168.10.200 dev eth1 proto static      # router dummy interfaces
172.20.0.0/16 via 192.168.10.200 dev eth1 proto static
192.168.20.0/24 via 192.168.10.200 dev eth1 proto static   # for reaching worker node 0
```

6. How autoDirectNodeRoutes behaves

```bash
--set routingMode=native --set autoDirectNodeRoutes=true
```

(1) Control plane routing table

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route
```

✅ Output

```
default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.10.0.0/16 via 192.168.10.200 dev eth1 proto static
172.20.0.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253
172.20.0.0/16 via 192.168.10.200 dev eth1 proto static
172.20.0.253 dev cilium_host proto kernel scope link
172.20.1.0/24 via 192.168.10.101 dev eth1 proto kernel
192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100
192.168.20.0/24 via 192.168.10.200 dev eth1 proto static
```

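- PodCIDR routes are added automatically only between nodes on the same network. Here k8s-ctr gets `172.20.1.0/24 via 192.168.10.101` (k8s-w1).
- No route is added for a node on a different network. k8s-w0's 172.20.2.0/24 is missing, so that traffic falls through to the static 172.20.0.0/16 route toward the router. The router's routing table (shown above) has no Pod CIDR routes.
- Routes for those Pod CIDRs must therefore be configured separately on the L3 network device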
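Both k8s-w1's Pod CIDR and k8s-w0's Pod CIDR match the static 172.20.0.0/16 route, yet only k8s-w0's goes to the router. The reason is longest-prefix matching: the kernel uses the most specific matching route. The bash sketch below applies that rule to a subset of the k8s-ctr table shown above. It ignores metrics, scopes and policy routing. The helper names and the sample destinations 172.20.1.10 and 172.20.2.10 are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: longest-prefix-match lookup over a subset of the k8s-ctr routing table.
# Ignores metrics, scope and policy routing; helper names are hypothetical.

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP falls inside CIDR
in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

# "PREFIX NEXT-HOP" pairs from the table above
# (default route written as 0.0.0.0/0; "on-link" = directly connected)
ROUTES="
0.0.0.0/0 10.0.2.2
10.10.0.0/16 192.168.10.200
172.20.0.0/24 cilium_host
172.20.0.0/16 192.168.10.200
172.20.1.0/24 192.168.10.101
192.168.10.0/24 on-link
192.168.20.0/24 192.168.10.200
"

# lookup IP -> next hop of the most specific matching prefix
lookup() {
  local ip=$1 best_len=-1 best_hop="" prefix hop len
  while read -r prefix hop; do
    [[ -n $prefix ]] || continue
    len=${prefix#*/}
    if (( len > best_len )) && in_cidr "$ip" "$prefix"; then
      best_len=$len
      best_hop=$hop
    fi
  done <<< "$ROUTES"
  echo "$best_hop"
}

# Usage: an address in k8s-w1's Pod CIDR vs. one in k8s-w0's
for dst in 172.20.1.10 172.20.2.10; do
  echo "$dst -> $(lookup "$dst")"
done
```

The first address resolves to 192.168.10.101, the direct route to k8s-w1. The second only matches the /16 and is sent to the router at 192.168.10.200.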

(2) Worker node routing tables

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w1 ip -c route
```

✅ Output

```
default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.10.0.0/16 via 192.168.10.200 dev eth1 proto static
172.20.0.0/24 via 192.168.10.100 dev eth1 proto kernel
172.20.0.0/16 via 192.168.10.200 dev eth1 proto static
172.20.1.0/24 via 172.20.1.238 dev cilium_host proto kernel src 172.20.1.238
172.20.1.238 dev cilium_host proto kernel scope link
192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101
192.168.20.0/24 via 192.168.10.200 dev eth1 proto static
```

- k8s-w1: the route to the control plane's PodCIDR (172.20.0.0/24) was installed automatically: `172.20.0.0/24 via 192.168.10.100 dev eth1 proto kernel`

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w0 ip -c route
```

✅ Output

```
default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
10.10.0.0/16 via 192.168.20.200 dev eth1 proto static
172.20.0.0/16 via 192.168.20.200 dev eth1 proto static
172.20.2.0/24 via 172.20.2.13 dev cilium_host proto kernel src 172.20.2.13
172.20.2.13 dev cilium_host proto kernel scope link
192.168.10.0/24 via 192.168.20.200 dev eth1 proto static
192.168.20.0/24 dev eth1 proto kernel scope link src 192.168.20.100
```

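- k8s-w0: no routes to the other nodes' PodCIDRs, only the static 172.20.0.0/16 route via the router → its pods cannot reach pods on the other nodes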
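The routes observed on the three nodes fit a simple rule. A node gets `<peer PodCIDR> via <peer node IP>` only when the peer's node IP lies in its own directly connected subnet. Cilium's documentation describes `autoDirectNodeRoutes` as installing PodCIDR routes between nodes that share an L2 network segment. The bash sketch below encodes that rule with the lab's addresses. It is a simplified model, not Cilium's implementation, and the function and variable names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified model of autoDirectNodeRoutes: add "PodCIDR via NodeIP" only when
# the peer's node IP is inside the local node's directly connected subnet.

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP falls inside CIDR
in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

# name  node-ip  connected-subnet  pod-cidr  (values from this lab)
NODES="
k8s-ctr 192.168.10.100 192.168.10.0/24 172.20.0.0/24
k8s-w1 192.168.10.101 192.168.10.0/24 172.20.1.0/24
k8s-w0 192.168.20.100 192.168.20.0/24 172.20.2.0/24
"

# direct_routes NODE -> PodCIDR routes NODE would receive automatically
direct_routes() {
  local self=$1 subnet="" name ip net pod
  while read -r name ip net pod; do          # find our own connected subnet
    [[ $name == "$self" ]] && subnet=$net
  done <<< "$NODES"
  [[ -n $subnet ]] || return 1
  while read -r name ip net pod; do
    [[ -n $name && $name != "$self" ]] || continue
    if in_cidr "$ip" "$subnet"; then         # peer reachable without a router
      echo "$pod via $ip"
    fi
  done <<< "$NODES"
}

for n in k8s-ctr k8s-w1 k8s-w0; do
  echo "== $n"
  direct_routes "$n"
done
```

Its output lines up with the tables above. k8s-ctr and k8s-w1 each get a route to the other's Pod CIDR, while k8s-w0 gets none.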

7. Connectivity checks

(1) Reaching the router's dummy interface

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 10.10.1.200
```

✅ Output

```
PING 10.10.1.200 (10.10.1.200) 56(84) bytes of data.
64 bytes from 10.10.1.200: icmp_seq=1 ttl=64 time=0.949 ms

--- 10.10.1.200 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.949/0.949/0.949/0.000 ms
```

- Pinged the router's dummy interface (10.10.1.200) from the control plane (k8s-ctr)
- A reply came back → the control plane can reach the router

(2) Reaching a worker node's physical interface

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 192.168.20.100
```

✅ Output

```
PING 192.168.20.100 (192.168.20.100) 56(84) bytes of data.
64 bytes from 192.168.20.100: icmp_seq=1 ttl=63 time=2.22 ms

--- 192.168.20.100 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 2.215/2.215/2.215/0.000 ms
```

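- Pinged k8s-w0's physical interface (192.168.20.100) from the control plane
- A reply came back → node IPs on different networks can reach each other
- ttl=63 is one below the Linux default initial TTL of 64 (compare ttl=64 from the router above). This shows the packet crossed one router.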

(3) Node join status

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# k get node -owide
```

✅ Output

```
NAME      STATUS   ROLES           AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
k8s-ctr   Ready    control-plane   45m   v1.33.2   192.168.10.100   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
k8s-w0    Ready    <none>          39m   v1.33.2   192.168.20.100   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
k8s-w1    Ready    <none>          42m   v1.33.2   192.168.10.101   <none>        Ubuntu 24.04.2 LTS   6.8.0-53-generic   containerd://1.7.27
```

- All nodes, including k8s-w0 (192.168.20.100), are `Ready`
- Because the node IPs can reach each other, the nodes joined the cluster normally

(4) Path through the L3 device

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# tracepath -n 192.168.20.100
```

✅ Output

```
 1?: [LOCALHOST]                      pmtu 1500
 1:  192.168.10.200                                        0.816ms
 1:  192.168.10.200                                        0.546ms
 2:  192.168.20.100                                        1.048ms reached
     Resume: pmtu 1500 hops 2 back 2
```

- `tracepath` shows the packets passing through `192.168.10.200` (the router) before reaching `192.168.20.100`
- The path is the expected 2 hops: router → destination node

🚦 Native Routing mode

1. Deploy the sample application

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webpod   # must match the pods' own label (app: webpod) to keep replicas on separate nodes
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# Result
deployment.apps/webpod created
service/webpod created
```

- The 3 pods of the `webpod` Deployment are spread across the control plane, w1 and w0 nodes

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Result
pod/curl-pod created
```

- Deploy `curl-pod` on the control plane node to use as the test client

2. Check the endpoints

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep webpod -owide
```

✅ Output

```
Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES           SELECTOR
deployment.apps/webpod   3/3     3            3           116s   webpod       traefik/whoami   app=webpod

NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE    SELECTOR
service/webpod   ClusterIP   10.96.163.90   <none>        80/TCP    116s   app=webpod

NAME               ENDPOINTS                                      AGE
endpoints/webpod   172.20.0.66:80,172.20.1.126:80,172.20.2.1:80   116s
```

- Three pod IPs: `172.20.0.66`, `172.20.1.126`, `172.20.2.1`
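Cluster-pool IPAM gives every node its own Pod CIDR, so the node hosting a pod can be read off the pod's IP. The bash sketch below matches the three endpoint IPs against the per-node CIDRs from the `kubectl get ciliumnode` output earlier. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: map pod IPs to nodes via each node's Pod CIDR (hypothetical helpers).

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP falls inside CIDR
in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

# Per-node Pod CIDRs from this lab
POD_CIDRS="
k8s-ctr 172.20.0.0/24
k8s-w1 172.20.1.0/24
k8s-w0 172.20.2.0/24
"

# node_for_ip IP -> name of the node whose Pod CIDR contains IP
node_for_ip() {
  local ip=$1 node cidr
  while read -r node cidr; do
    [[ -n $node ]] || continue
    if in_cidr "$ip" "$cidr"; then
      echo "$node"
      return 0
    fi
  done <<< "$POD_CIDRS"
  return 1
}

for ip in 172.20.0.66 172.20.1.126 172.20.2.1; do
  echo "$ip -> $(node_for_ip "$ip")"
done
```

The result places one webpod replica on each of k8s-ctr, k8s-w1 and k8s-w0.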

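3. Query Cilium endpoints

(1) IP and security identity that Cilium manages for each pod

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints
```

✅ Output

```
NAME                      SECURITY IDENTITY   ENDPOINT STATE   IPV4           IPV6
curl-pod                  63446               ready            172.20.0.96
webpod-697b545f57-fz95q   8469                ready            172.20.2.1
webpod-697b545f57-gkvrf   8469                ready            172.20.0.66
webpod-697b545f57-rpz7h   8469                ready            172.20.1.126
```

- The three webpod pods share identity 8469. Cilium derives a security identity from a pod's labels, so pods with identical labels get the same identity.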

(2) IP-to-identity mappings

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg ip list
```

✅ Output

```
IP                  IDENTITY   SOURCE
0.0.0.0/0           reserved:world
172.20.1.0/24       reserved:world
172.20.2.0/24       reserved:world
10.0.2.15/32        reserved:host
                    reserved:kube-apiserver
172.20.0.33/32      k8s:app=grafana   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=default
                    k8s:io.kubernetes.pod.namespace=cilium-monitoring
172.20.0.66/32      k8s:app=webpod   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=default
                    k8s:io.kubernetes.pod.namespace=default
172.20.0.71/32      k8s:app=prometheus   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s
                    k8s:io.kubernetes.pod.namespace=cilium-monitoring
172.20.0.72/32      k8s:app=local-path-provisioner   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account
                    k8s:io.kubernetes.pod.namespace=local-path-storage
172.20.0.73/32      k8s:app.kubernetes.io/name=hubble-ui   custom-resource
                    k8s:app.kubernetes.io/part-of=cilium
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui
                    k8s:io.kubernetes.pod.namespace=kube-system
                    k8s:k8s-app=hubble-ui
172.20.0.75/32      k8s:app.kubernetes.io/instance=metrics-server   custom-resource
                    k8s:app.kubernetes.io/name=metrics-server
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=metrics-server
                    k8s:io.kubernetes.pod.namespace=kube-system
172.20.0.96/32      k8s:app=curl   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=default
                    k8s:io.kubernetes.pod.namespace=default
172.20.0.163/32     k8s:app.kubernetes.io/name=hubble-relay   custom-resource
                    k8s:app.kubernetes.io/part-of=cilium
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay
                    k8s:io.kubernetes.pod.namespace=kube-system
                    k8s:k8s-app=hubble-relay
172.20.0.197/32     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   custom-resource
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=coredns
                    k8s:io.kubernetes.pod.namespace=kube-system
                    k8s:k8s-app=kube-dns
172.20.0.248/32     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   custom-resource
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=coredns
                    k8s:io.kubernetes.pod.namespace=kube-system
                    k8s:k8s-app=kube-dns
172.20.0.253/32     reserved:host
                    reserved:kube-apiserver
172.20.1.126/32     k8s:app=webpod   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=default
                    k8s:io.kubernetes.pod.namespace=default
172.20.1.238/32     reserved:remote-node
172.20.2.1/32       k8s:app=webpod   custom-resource
                    k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                    k8s:io.cilium.k8s.policy.cluster=default
                    k8s:io.cilium.k8s.policy.serviceaccount=default
                    k8s:io.kubernetes.pod.namespace=default
172.20.2.13/32      reserved:remote-node
192.168.10.100/32   reserved:host
                    reserved:kube-apiserver
192.168.10.101/32   reserved:remote-node
192.168.20.100/32   reserved:remote-node
```
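Cilium's ipcache is keyed by prefix and looked up by longest-prefix match, so /32 and /24 entries can coexist. For example, 172.20.1.126 hits its own /32 (webpod). A hypothetical other address in the same range, such as 172.20.1.50, only matches 172.20.1.0/24 and therefore resolves to `reserved:world`. The bash sketch below mimics that lookup over a few entries copied from the list above, with each identity shortened to one label. It illustrates the lookup rule and is not Cilium's BPF code; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: longest-prefix-match identity lookup over a few ipcache entries.
# Identities shortened to one label; not Cilium's BPF implementation.

# Dotted-quad IPv4 address -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP falls inside CIDR
in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

# "PREFIX IDENTITY" pairs from the cilium-dbg ip list output above
IPCACHE="
0.0.0.0/0 reserved:world
172.20.1.0/24 reserved:world
172.20.2.0/24 reserved:world
172.20.0.66/32 k8s:app=webpod
172.20.0.96/32 k8s:app=curl
172.20.1.126/32 k8s:app=webpod
172.20.1.238/32 reserved:remote-node
172.20.2.1/32 k8s:app=webpod
192.168.10.101/32 reserved:remote-node
192.168.20.100/32 reserved:remote-node
"

# identity_for IP -> identity of the most specific matching prefix
identity_for() {
  local ip=$1 best_len=-1 best="" prefix id len
  while read -r prefix id; do
    [[ -n $prefix ]] || continue
    len=${prefix#*/}
    if (( len > best_len )) && in_cidr "$ip" "$prefix"; then
      best_len=$len
      best=$id
    fi
  done <<< "$IPCACHE"
  echo "$best"
}

for ip in 172.20.1.126 172.20.1.50 192.168.20.100 8.8.8.8; do
  echo "$ip -> $(identity_for "$ip")"
done
```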

(3) Endpoint details

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg endpoint list
```

✅ Output

```
ENDPOINT  POLICY (ingress)  POLICY (egress)  IDENTITY  LABELS (source:key[=value])   IPv6   IPv4   STATUS
          ENFORCEMENT       ENFORCEMENT
275       Disabled          Disabled         1         k8s:node-role.kubernetes.io/control-plane   ready
                                                       k8s:node.kubernetes.io/exclude-from-external-load-balancers
                                                       reserved:host
364       Disabled          Disabled         18480     k8s:app=prometheus   172.20.0.71   ready
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s
                                                       k8s:io.kubernetes.pod.namespace=cilium-monitoring
406       Disabled          Disabled         63446     k8s:app=curl   172.20.0.96   ready
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=default
                                                       k8s:io.kubernetes.pod.namespace=default
465       Disabled          Disabled         48231     k8s:app=local-path-provisioner   172.20.0.72   ready
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account
                                                       k8s:io.kubernetes.pod.namespace=local-path-storage
810       Disabled          Disabled         58623     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   172.20.0.248   ready
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=coredns
                                                       k8s:io.kubernetes.pod.namespace=kube-system
                                                       k8s:k8s-app=kube-dns
1203      Disabled          Disabled         58623     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   172.20.0.197   ready
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=coredns
                                                       k8s:io.kubernetes.pod.namespace=kube-system
                                                       k8s:k8s-app=kube-dns
1769      Disabled          Disabled         8469      k8s:app=webpod   172.20.0.66   ready
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=default
                                                       k8s:io.kubernetes.pod.namespace=default
2060      Disabled          Disabled         5827      k8s:app.kubernetes.io/instance=metrics-server   172.20.0.75   ready
                                                       k8s:app.kubernetes.io/name=metrics-server
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=metrics-server
                                                       k8s:io.kubernetes.pod.namespace=kube-system
2321      Disabled          Disabled         22595     k8s:app.kubernetes.io/name=hubble-ui   172.20.0.73   ready
                                                       k8s:app.kubernetes.io/part-of=cilium
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui
                                                       k8s:io.kubernetes.pod.namespace=kube-system
                                                       k8s:k8s-app=hubble-ui
2795      Disabled          Disabled         7496      k8s:app=grafana   172.20.0.33   ready
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=default
                                                       k8s:io.kubernetes.pod.namespace=cilium-monitoring
3315      Disabled          Disabled         32707     k8s:app.kubernetes.io/name=hubble-relay   172.20.0.163   ready
                                                       k8s:app.kubernetes.io/part-of=cilium
                                                       k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                                                       k8s:io.cilium.k8s.policy.cluster=default
                                                       k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay
                                                       k8s:io.kubernetes.pod.namespace=kube-system
                                                       k8s:k8s-app=hubble-relay
```

4. Cilium service list

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg service list
```

✅ Output

```
ID   Frontend                Service Type   Backend
1    0.0.0.0:30003/TCP       NodePort       1 => 172.20.0.73:8081/TCP (active)
4    10.96.67.8:80/TCP       ClusterIP      1 => 172.20.0.73:8081/TCP (active)
5    10.96.0.10:53/TCP       ClusterIP      1 => 172.20.0.197:53/TCP (active)
                                            2 => 172.20.0.248:53/TCP (active)
6    10.96.0.10:53/UDP       ClusterIP      1 => 172.20.0.197:53/UDP (active)
                                            2 => 172.20.0.248:53/UDP (active)
7    10.96.0.10:9153/TCP     ClusterIP      1 => 172.20.0.197:9153/TCP (active)
                                            2 => 172.20.0.248:9153/TCP (active)
8    10.96.133.165:443/TCP   ClusterIP      1 => 172.20.0.75:10250/TCP (active)
9    0.0.0.0:30002/TCP       NodePort       1 => 172.20.0.33:3000/TCP (active)
12   10.96.60.80:3000/TCP    ClusterIP      1 => 172.20.0.33:3000/TCP (active)
13   0.0.0.0:30001/TCP       NodePort       1 => 172.20.0.71:9090/TCP (active)
16   10.96.97.168:9090/TCP   ClusterIP      1 => 172.20.0.71:9090/TCP (active)
17   10.96.213.163:443/TCP   ClusterIP      1 => 192.168.10.100:4244/TCP (active)
18   10.96.33.53:80/TCP      ClusterIP      1 => 172.20.0.163:4245/TCP (active)
19   10.96.0.1:443/TCP       ClusterIP      1 => 192.168.10.100:6443/TCP (active)
20   10.96.163.90:80/TCP     ClusterIP      1 => 172.20.0.66:80/TCP (active)
                                            2 => 172.20.1.126:80/TCP (active)
                                            3 => 172.20.2.1:80/TCP (active)
```

- Shows how each service's frontends (ClusterIP, NodePort) map to its backend pods

- The webpod service 10.96.163.90:80/TCP is distributed across the backend pods 172.20.0.66, 172.20.1.126, and 172.20.2.1

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get svc

✅ Output

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 58m

webpod ClusterIP 10.96.163.90 <none> 80/TCP 7m19s

5. Check the BPF load-balancing mappings

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf lb list | grep 10.96.163.90

✅ Output

10.96.163.90:80/TCP (2) 172.20.1.126:80/TCP (20) (2)

10.96.163.90:80/TCP (1) 172.20.0.66:80/TCP (20) (1)

10.96.163.90:80/TCP (0) 0.0.0.0:0 (20) (0) [ClusterIP, non-routable]

10.96.163.90:80/TCP (3) 172.20.2.1:80/TCP (20) (3)

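- Looks up the BPF LB entries for the webpod ClusterIP

- Slot (0) is the service entry itself (0.0.0.0:0, marked [ClusterIP, non-routable]). Slots (1) to (3) each point to one backend pod, which is how the index-based distribution works. (20) is the reverse-NAT ID, matching service ID 20 in the service list above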
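This output is easier to read when the entries are grouped by frontend. Below is a minimal sketch of a hypothetical helper; lb_backends is not a cilium-dbg subcommand. It assumes the whitespace-separated layout shown above, skips the slot (0) service entry, and lists each frontend's backends in slot order.

```sh
# lb_backends: hypothetical helper, not part of cilium-dbg.
# Input: `cilium-dbg bpf lb list` lines (FRONTEND (SLOT) BACKEND (REVNAT_ID) (SLOT) ...).
# Output: one line per frontend, backends sorted by slot.
# Usage: kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf lb list | lb_backends
lb_backends() {
  awk '{
    slot = $2; gsub(/[()]/, "", slot)   # "(2)" -> "2"
    if (slot + 0 == 0) next             # slot 0 is the service entry itself (also skips headers)
    print $1, slot, $3                  # frontend, slot, backend
  }' | LC_ALL=C sort -k1,1 -k2,2n | awk '
    $1 != prev { if (prev != "") printf "\n"; printf "%s:", $1; prev = $1 }
    { printf " %s", $3 }
    END { if (prev != "") printf "\n" }'
}
```

Applied to the four webpod lines above, it prints 10.96.163.90:80/TCP: 172.20.0.66:80/TCP 172.20.1.126:80/TCP 172.20.2.1:80/TCP.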

6. Check the BPF NAT table

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf nat list

✅ Output

ICMP OUT 192.168.10.100:8403 -> 10.10.1.200:0 XLATE_SRC 192.168.10.100:8403 Created=1305sec ago NeedsCT=1

TCP IN 104.16.100.215:443 -> 10.0.2.15:42902 XLATE_DST 10.0.2.15:42902 Created=665sec ago NeedsCT=1

TCP OUT 10.0.2.15:42902 -> 104.16.100.215:443 XLATE_SRC 10.0.2.15:42902 Created=665sec ago NeedsCT=1

TCP OUT 10.0.2.15:35146 -> 3.94.224.37:443 XLATE_SRC 10.0.2.15:35146 Created=712sec ago NeedsCT=1

TCP OUT 10.0.2.15:40482 -> 98.85.153.80:443 XLATE_SRC 10.0.2.15:40482 Created=665sec ago NeedsCT=1

TCP IN 104.16.98.215:443 -> 10.0.2.15:57124 XLATE_DST 10.0.2.15:57124 Created=708sec ago NeedsCT=1

TCP OUT 10.0.2.15:49870 -> 44.208.254.194:443 XLATE_SRC 10.0.2.15:49870 Created=668sec ago NeedsCT=1

ICMP IN 192.168.20.100:0 -> 192.168.10.100:8432 XLATE_DST 192.168.10.100:8432 Created=1241sec ago NeedsCT=1

TCP IN 44.208.254.194:443 -> 10.0.2.15:49884 XLATE_DST 10.0.2.15:49884 Created=665sec ago NeedsCT=1

TCP OUT 10.0.2.15:57908 -> 3.94.224.37:443 XLATE_SRC 10.0.2.15:57908 Created=666sec ago NeedsCT=1

TCP OUT 10.0.2.15:49884 -> 44.208.254.194:443 XLATE_SRC 10.0.2.15:49884 Created=665sec ago NeedsCT=1

UDP OUT 192.168.10.100:56494 -> 192.168.20.100:44444 XLATE_SRC 192.168.10.100:56494 Created=945sec ago NeedsCT=1

TCP OUT 10.0.2.15:57888 -> 3.94.224.37:443 XLATE_SRC 10.0.2.15:57888 Created=669sec ago NeedsCT=1

UDP OUT 10.0.2.15:52293 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:52293 Created=712sec ago NeedsCT=1

TCP IN 44.208.254.194:443 -> 10.0.2.15:34700 XLATE_DST 10.0.2.15:34700 Created=710sec ago NeedsCT=1

TCP IN 192.168.10.101:10250 -> 192.168.10.100:43366 XLATE_DST 192.168.10.100:43366 Created=3613sec ago NeedsCT=1

TCP IN 3.94.224.37:443 -> 10.0.2.15:57908 XLATE_DST 10.0.2.15:57908 Created=666sec ago NeedsCT=1

UDP OUT 10.0.2.15:44980 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:44980 Created=712sec ago NeedsCT=1

UDP IN 192.168.20.100:44445 -> 192.168.10.100:56494 XLATE_DST 192.168.10.100:56494 Created=944sec ago NeedsCT=1

TCP OUT 10.0.2.15:34714 -> 44.208.254.194:443 XLATE_SRC 10.0.2.15:34714 Created=708sec ago NeedsCT=1

TCP IN 3.94.224.37:443 -> 10.0.2.15:35146 XLATE_DST 10.0.2.15:35146 Created=712sec ago NeedsCT=1

TCP OUT 10.0.2.15:49464 -> 104.16.98.215:443 XLATE_SRC 10.0.2.15:49464 Created=664sec ago NeedsCT=1

TCP OUT 10.0.2.15:40472 -> 98.85.153.80:443 XLATE_SRC 10.0.2.15:40472 Created=668sec ago NeedsCT=1

UDP OUT 10.0.2.15:51117 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:51117 Created=712sec ago NeedsCT=1

TCP OUT 192.168.10.100:36046 -> 192.168.20.100:10250 XLATE_SRC 192.168.10.100:36046 Created=3401sec ago NeedsCT=1

TCP OUT 10.0.2.15:34700 -> 44.208.254.194:443 XLATE_SRC 10.0.2.15:34700 Created=710sec ago NeedsCT=1

TCP OUT 10.0.2.15:51854 -> 98.85.153.80:443 XLATE_SRC 10.0.2.15:51854 Created=711sec ago NeedsCT=1

TCP IN 44.208.254.194:443 -> 10.0.2.15:34684 XLATE_DST 10.0.2.15:34684 Created=712sec ago NeedsCT=1

TCP IN 104.16.98.215:443 -> 10.0.2.15:49454 XLATE_DST 10.0.2.15:49454 Created=665sec ago NeedsCT=1

UDP IN 192.168.20.100:44446 -> 192.168.10.100:56494 XLATE_DST 192.168.10.100:56494 Created=944sec ago NeedsCT=1

TCP IN 44.208.254.194:443 -> 10.0.2.15:34714 XLATE_DST 10.0.2.15:34714 Created=708sec ago NeedsCT=1

TCP IN 104.16.97.215:443 -> 10.0.2.15:43064 XLATE_DST 10.0.2.15:43064 Created=708sec ago NeedsCT=1

TCP IN 98.85.153.80:443 -> 10.0.2.15:40472 XLATE_DST 10.0.2.15:40472 Created=668sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:39251 XLATE_DST 10.0.2.15:39251 Created=708sec ago NeedsCT=1

TCP OUT 192.168.10.100:43366 -> 192.168.10.101:10250 XLATE_SRC 192.168.10.100:43366 Created=3613sec ago NeedsCT=1

TCP IN 98.85.153.80:443 -> 10.0.2.15:51854 XLATE_DST 10.0.2.15:51854 Created=711sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:44980 XLATE_DST 10.0.2.15:44980 Created=712sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:52293 XLATE_DST 10.0.2.15:52293 Created=712sec ago NeedsCT=1

UDP IN 192.168.20.100:44444 -> 192.168.10.100:56494 XLATE_DST 192.168.10.100:56494 Created=945sec ago NeedsCT=1

TCP OUT 10.0.2.15:34684 -> 44.208.254.194:443 XLATE_SRC 10.0.2.15:34684 Created=712sec ago NeedsCT=1

TCP IN 3.94.224.37:443 -> 10.0.2.15:35154 XLATE_DST 10.0.2.15:35154 Created=708sec ago NeedsCT=1

TCP IN 44.208.254.194:443 -> 10.0.2.15:49870 XLATE_DST 10.0.2.15:49870 Created=668sec ago NeedsCT=1

TCP IN 98.85.153.80:443 -> 10.0.2.15:51860 XLATE_DST 10.0.2.15:51860 Created=710sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:60066 XLATE_DST 10.0.2.15:60066 Created=708sec ago NeedsCT=1

TCP OUT 10.0.2.15:43064 -> 104.16.97.215:443 XLATE_SRC 10.0.2.15:43064 Created=708sec ago NeedsCT=1

UDP OUT 10.0.2.15:60066 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:60066 Created=708sec ago NeedsCT=1

TCP IN 192.168.20.100:10250 -> 192.168.10.100:36046 XLATE_DST 192.168.10.100:36046 Created=3401sec ago NeedsCT=1

TCP IN 98.85.153.80:443 -> 10.0.2.15:40482 XLATE_DST 10.0.2.15:40482 Created=665sec ago NeedsCT=1

TCP OUT 10.0.2.15:49454 -> 104.16.98.215:443 XLATE_SRC 10.0.2.15:49454 Created=665sec ago NeedsCT=1

TCP OUT 10.0.2.15:57902 -> 3.94.224.37:443 XLATE_SRC 10.0.2.15:57902 Created=667sec ago NeedsCT=1

UDP OUT 192.168.10.100:56494 -> 192.168.20.100:44446 XLATE_SRC 192.168.10.100:56494 Created=944sec ago NeedsCT=1

UDP OUT 10.0.2.15:39251 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:39251 Created=708sec ago NeedsCT=1

UDP OUT 10.0.2.15:52382 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:52382 Created=712sec ago NeedsCT=1

TCP IN 3.94.224.37:443 -> 10.0.2.15:57902 XLATE_DST 10.0.2.15:57902 Created=667sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:52382 XLATE_DST 10.0.2.15:52382 Created=712sec ago NeedsCT=1

TCP OUT 10.0.2.15:35154 -> 3.94.224.37:443 XLATE_SRC 10.0.2.15:35154 Created=708sec ago NeedsCT=1

TCP OUT 10.0.2.15:51860 -> 98.85.153.80:443 XLATE_SRC 10.0.2.15:51860 Created=710sec ago NeedsCT=1

TCP IN 104.16.98.215:443 -> 10.0.2.15:49464 XLATE_DST 10.0.2.15:49464 Created=664sec ago NeedsCT=1

ICMP IN 10.10.1.200:0 -> 192.168.10.100:8403 XLATE_DST 192.168.10.100:8403 Created=1305sec ago NeedsCT=1

ICMP OUT 192.168.10.100:8432 -> 192.168.20.100:0 XLATE_SRC 192.168.10.100:8432 Created=1241sec ago NeedsCT=1

TCP OUT 10.0.2.15:57124 -> 104.16.98.215:443 XLATE_SRC 10.0.2.15:57124 Created=708sec ago NeedsCT=1

TCP IN 3.94.224.37:443 -> 10.0.2.15:57888 XLATE_DST 10.0.2.15:57888 Created=669sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:51117 XLATE_DST 10.0.2.15:51117 Created=712sec ago NeedsCT=1

UDP OUT 192.168.10.100:56494 -> 192.168.20.100:44445 XLATE_SRC 192.168.10.100:56494 Created=944sec ago NeedsCT=1

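- Shows the XLATE_SRC (source translation) and XLATE_DST (destination translation) mappings for IN and OUT traffic

- All entries here are node-level connections (10.0.2.15 on eth0, 192.168.10.100 on eth1), and in each one the translated address:port is identical to the original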
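The observation above can be checked mechanically by counting how many NAT entries actually changed the address:port. This is a sketch of a hypothetical helper, nat_rewrites. It assumes the field order shown above (PROTO DIR SRC -> DST XLATE_SRC|XLATE_DST ADDR ...). For OUT entries it compares the source with XLATE_SRC; for IN entries it compares the destination with XLATE_DST.

```sh
# nat_rewrites: hypothetical helper, not part of cilium-dbg.
# Input: `cilium-dbg bpf nat list` lines. Output: unchanged=N rewritten=M
# Usage: kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf nat list | nat_rewrites
nat_rewrites() {
  awk '
    $6 == "XLATE_SRC" { orig = $3 }   # OUT entry: the source may be rewritten
    $6 == "XLATE_DST" { orig = $5 }   # IN entry: the destination may be rewritten
    $6 ~ /^XLATE_/ { if ($7 == orig) same++; else changed++ }
    END { printf "unchanged=%d rewritten=%d\n", same, changed }'
}
```

On the table above it should report rewritten=0. A non-zero count would indicate real masquerading or port rewriting.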

7. Filter the maps currently in use

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | grep -v '0 0'

✅ Output

Name Num entries Num errors Cache enabled

cilium_policy_v2_01769 3 0 true

cilium_policy_v2_00406 3 0 true

cilium_ipcache_v2 22 0 true

cilium_lb4_services_v2 45 0 true

cilium_lb4_reverse_nat 20 0 true

cilium_lb4_reverse_sk 9 0 true

cilium_policy_v2_00275 2 0 true

cilium_policy_v2_00810 3 0 true

cilium_policy_v2_00465 3 0 true

cilium_policy_v2_02060 3 0 true

cilium_policy_v2_00364 3 0 true

cilium_policy_v2_03315 3 0 true

cilium_policy_v2_02321 3 0 true

cilium_policy_v2_02795 3 0 true

cilium_runtime_config 256 0 true

cilium_lxc 13 0 true

cilium_lb4_backends_v3 16 0 true

cilium_policy_v2_01203 3 0 true

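- Shows the active maps: the per-endpoint policy maps (the cilium_policy_v2_ suffix is the endpoint ID, e.g. 02060 for endpoint 2060), the IP cache, the LB services, the backends, and so on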
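The grep -v '0 0' filter relies on the column spacing of the real output. If the columns were single-spaced, as in the copy above, a line such as cilium_lb4_reverse_nat 20 0 true would also be dropped, because it contains the text 0 0. A minimal alternative compares the entry count numerically. It assumes the Name / Num entries / Num errors / Cache enabled column order shown above; active_maps is a hypothetical name.

```sh
# active_maps: hypothetical helper. Keeps the header line plus every map
# whose second column (Num entries) is greater than zero.
# Usage: kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | active_maps
active_maps() {
  awk 'NR == 1 || $2 + 0 > 0'
}
```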

8. Inspect the service map in detail

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2

✅ Output

Key Value State Error

10.96.60.80:3000/TCP (0) 0 1[0] (12) [0x0 0x0]

10.0.2.15:30003/TCP (0) 0 1[0] (2) [0x42 0x0]

10.96.0.10:53/UDP (0) 0 2[0] (6) [0x0 0x0]

10.0.2.15:30002/TCP (1) 10 0[0] (10) [0x42 0x0]

10.96.67.8:80/TCP (0) 0 1[0] (4) [0x0 0x0]

10.96.0.10:53/TCP (1) 3 0[0] (5) [0x0 0x0]

10.96.0.10:53/TCP (0) 0 2[0] (5) [0x0 0x0]

10.0.2.15:30002/TCP (0) 0 1[0] (10) [0x42 0x0]

10.96.163.90:80/TCP (1) 14 0[0] (20) [0x0 0x0]

192.168.10.100:30001/TCP (1) 11 0[0] (15) [0x42 0x0]

10.96.97.168:9090/TCP (1) 11 0[0] (16) [0x0 0x0]

10.96.0.10:9153/TCP (2) 8 0[0] (7) [0x0 0x0]

10.96.33.53:80/TCP (0) 0 1[0] (18) [0x0 0x0]

192.168.10.100:30001/TCP (0) 0 1[0] (15) [0x42 0x0]

10.96.0.1:443/TCP (0) 0 1[0] (19) [0x0 0x0]

0.0.0.0:30002/TCP (1) 10 0[0] (9) [0x2 0x0]

10.96.133.165:443/TCP (0) 0 1[0] (8) [0x0 0x0]

0.0.0.0:30002/TCP (0) 0 1[0] (9) [0x2 0x0]

10.0.2.15:30001/TCP (1) 11 0[0] (14) [0x42 0x0]

10.96.67.8:80/TCP (1) 12 0[0] (4) [0x0 0x0]

0.0.0.0:30003/TCP (0) 0 1[0] (1) [0x2 0x0]

10.96.163.90:80/TCP (3) 15 0[0] (20) [0x0 0x0]

10.96.60.80:3000/TCP (1) 10 0[0] (12) [0x0 0x0]

0.0.0.0:30001/TCP (1) 11 0[0] (13) [0x2 0x0]

10.96.0.10:9153/TCP (1) 7 0[0] (7) [0x0 0x0]

10.96.163.90:80/TCP (0) 0 3[0] (20) [0x0 0x0]

192.168.10.100:30003/TCP (0) 0 1[0] (3) [0x42 0x0]

10.0.2.15:30001/TCP (0) 0 1[0] (14) [0x42 0x0]

10.96.213.163:443/TCP (1) 2 0[0] (17) [0x0 0x10]

10.96.0.1:443/TCP (1) 1 0[0] (19) [0x0 0x0]

10.96.133.165:443/TCP (1) 9 0[0] (8) [0x0 0x0]

10.96.0.10:53/UDP (1) 5 0[0] (6) [0x0 0x0]

10.96.97.168:9090/TCP (0) 0 1[0] (16) [0x0 0x0]

10.96.0.10:9153/TCP (0) 0 2[0] (7) [0x0 0x0]

10.96.163.90:80/TCP (2) 16 0[0] (20) [0x0 0x0]

192.168.10.100:30002/TCP (1) 10 0[0] (11) [0x42 0x0]

10.96.33.53:80/TCP (1) 13 0[0] (18) [0x0 0x0]

10.96.0.10:53/UDP (2) 6 0[0] (6) [0x0 0x0]

192.168.10.100:30003/TCP (1) 12 0[0] (3) [0x42 0x0]

10.0.2.15:30003/TCP (1) 12 0[0] (2) [0x42 0x0]

192.168.10.100:30002/TCP (0) 0 1[0] (11) [0x42 0x0]

0.0.0.0:30003/TCP (1) 12 0[0] (1) [0x2 0x0]

0.0.0.0:30001/TCP (0) 0 1[0] (13) [0x2 0x0]

10.96.213.163:443/TCP (0) 0 1[0] (17) [0x0 0x10]

10.96.0.10:53/TCP (2) 4 0[0] (5) [0x0 0x0]

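- The webpod service IP (10.96.163.90:80/TCP) is mapped to 3 backend pods. Its slot (0) entry stores the backend count 3, and slots (1), (2), and (3) point to backend IDs 14, 16, and 15

- Every frontend (ClusterIP, plus the NodePort entries on 0.0.0.0, 10.0.2.15, and 192.168.10.100) and its mapping is visible here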
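To see how many backends each frontend has, the slot (0) entries can be pulled out. This sketch assumes the value layout BACKEND_ID COUNT[QCOUNT] (REVNAT_ID) [FLAGS] as it appears above; svc_counts is a hypothetical helper. Each NodePort shows up several times, once per frontend address.

```sh
# svc_counts: hypothetical helper. From `cilium-dbg map get cilium_lb4_services_v2`
# output, print each frontend key with the backend count in its slot-0 entry.
# Usage: kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2 | svc_counts
svc_counts() {
  awk '$2 == "(0)" { n = $4; sub(/\[.*/, "", n); print $1, n }'
}
```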

📡 Connectivity Check & Hubble

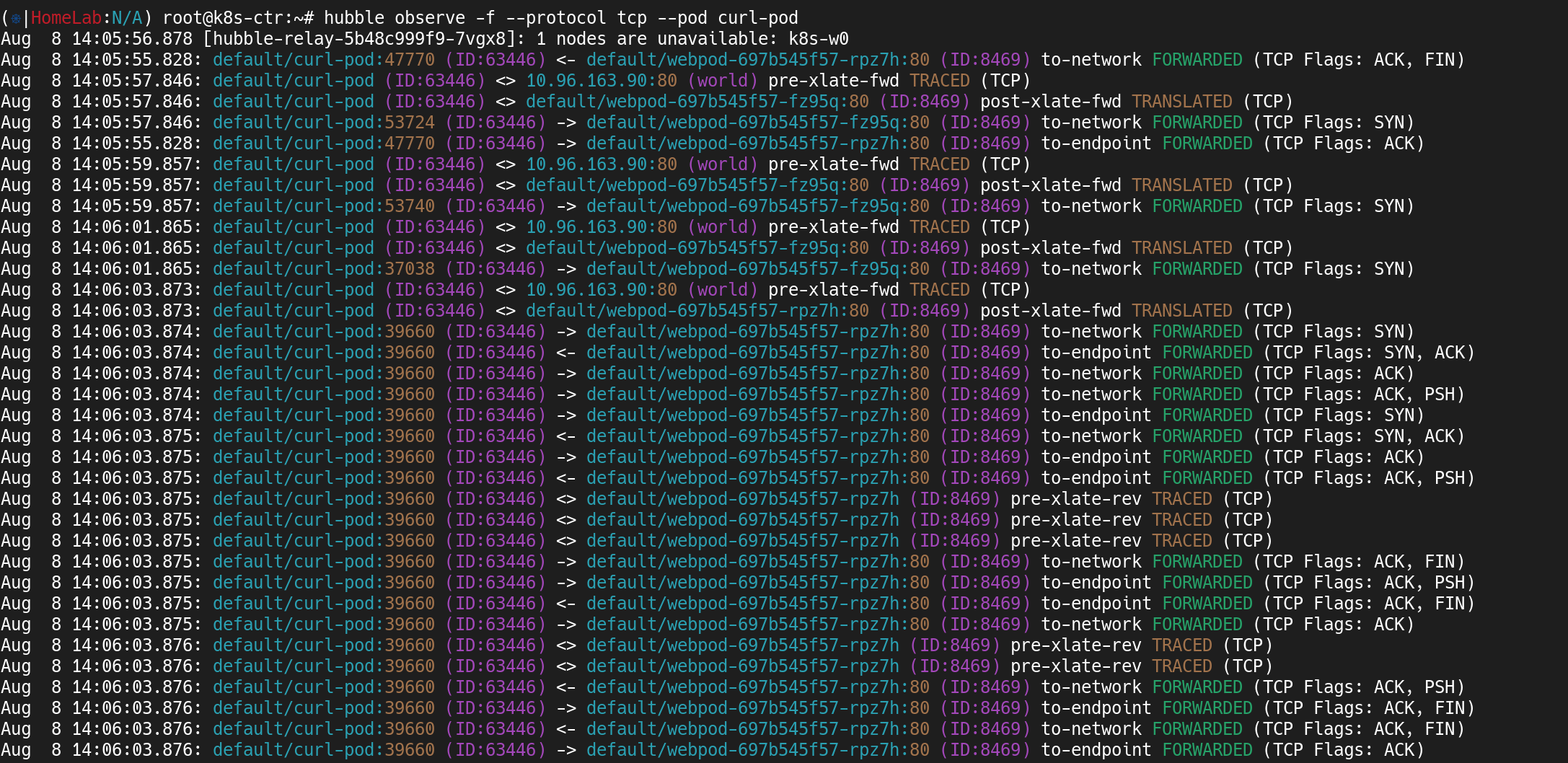

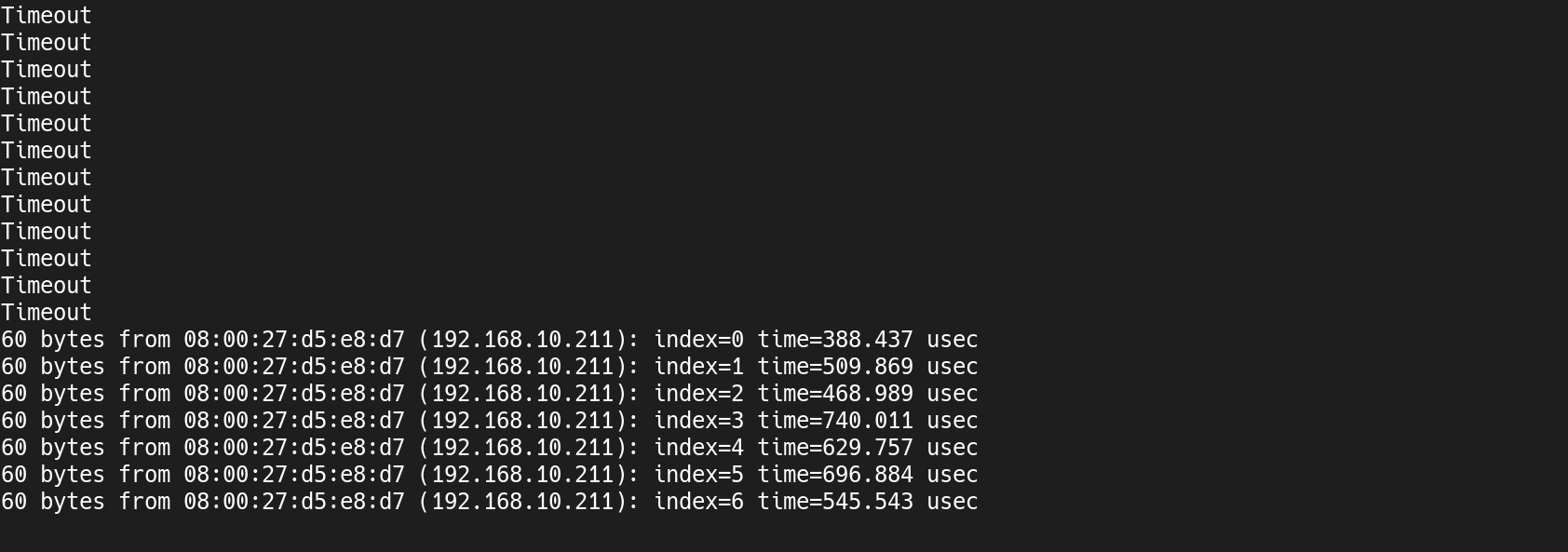

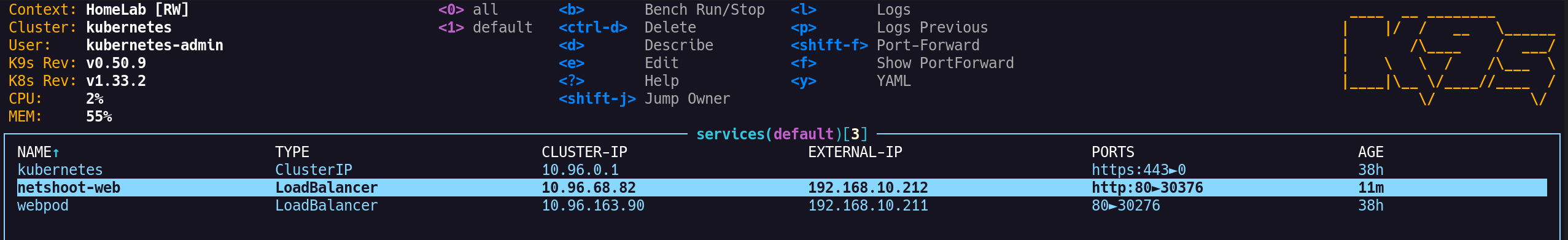

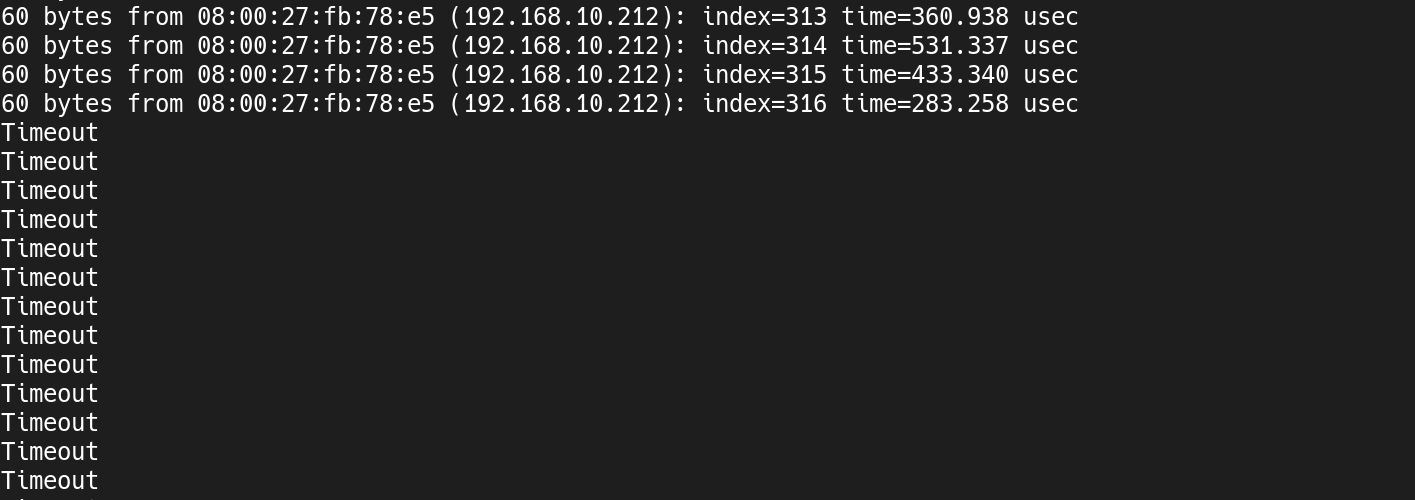

1. Confirm the unstable connectivity

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

✅ Output

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-gkvrf

---

Hostname: webpod-697b545f57-rpz7h

---

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-gkvrf

---

Hostname: webpod-697b545f57-gkvrf

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-rpz7h

---

---

Hostname: webpod-697b545f57-rpz7h

---

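- Calling the webpod service with curl --connect-timeout 1 shows that some requests intermittently get no response (a --- line with no Hostname before it)

- Successful responses show Hostname webpod-697b545f57-rpz7h or webpod-697b545f57-gkvrf. The replica on k8s-w0 (webpod-697b545f57-fz95q in the pod list further below) never answers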
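Tallying the loop output makes the failure rate easier to see. This is a minimal sketch that assumes each attempt ends with a --- line and that a successful attempt prints a Hostname: line before it; curl_tally is a hypothetical name.

```sh
# curl_tally: hypothetical helper. Counts successful responses per pod and
# failed attempts (a "---" with no preceding "Hostname:" line).
# Usage: save some of the loop output to a file, then: curl_tally < loop.txt
curl_tally() {
  awk '
    /^Hostname:/ { host = $2; next }
    /^---$/ { if (host == "") fail++; else ok[host]++; host = ""; next }
    END {
      for (h in ok) printf "%s %d\n", h, ok[h]
      printf "failed %d\n", fail
    }' | LC_ALL=C sort
}
```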

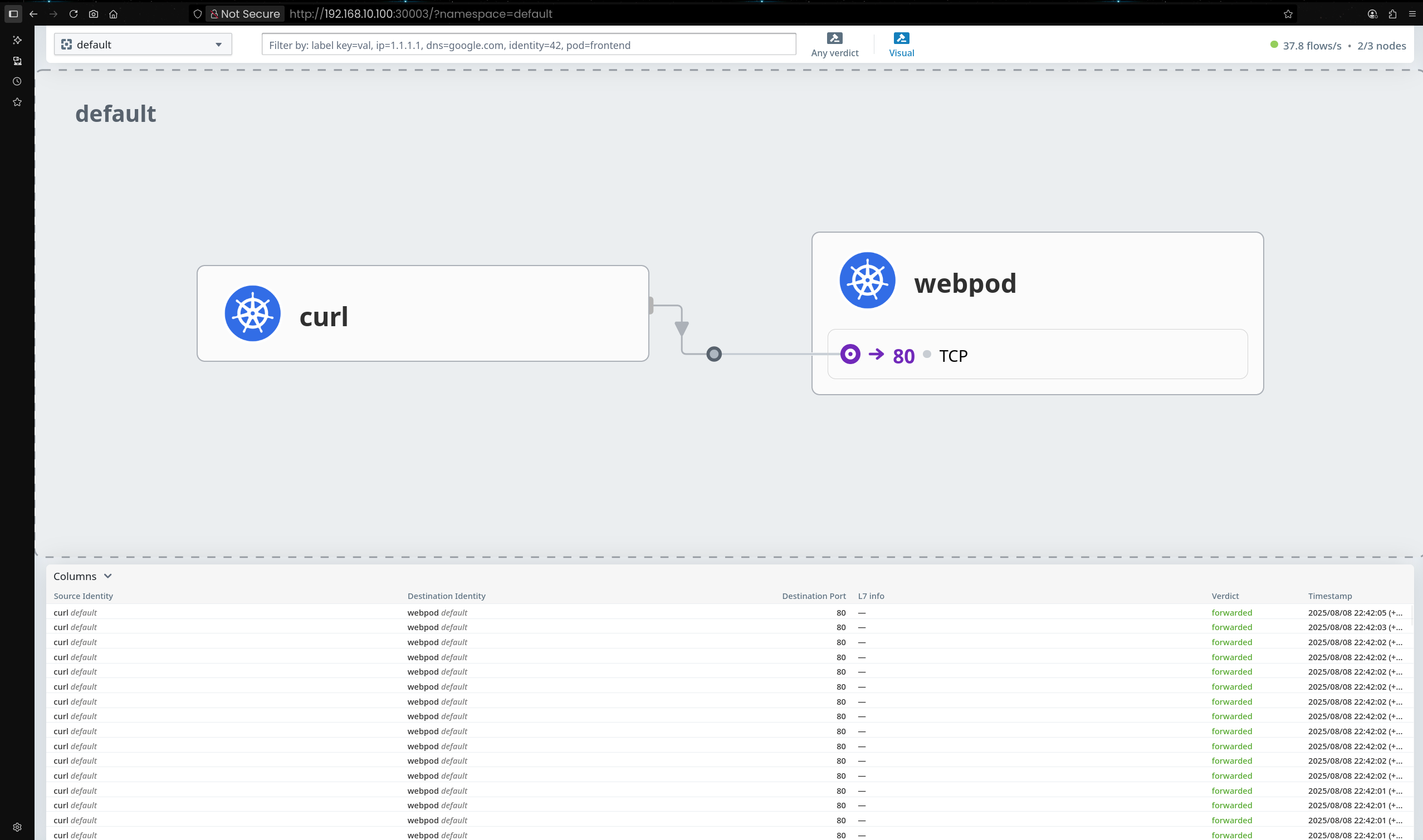

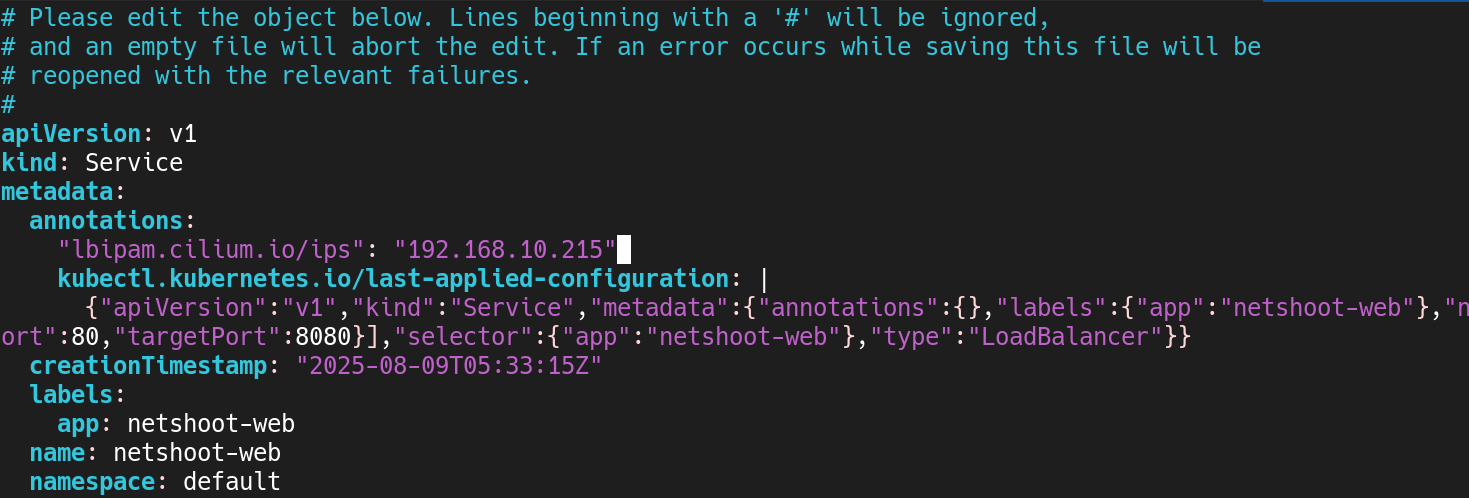

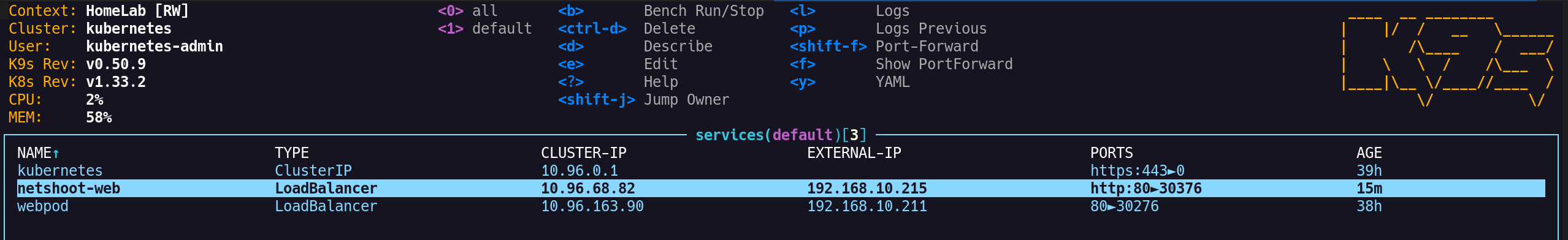

2. Access the Hubble UI

- The curl-pod on the control-plane node sends requests to the webpod endpoints deployed on the 3 nodes

- To trace the path through the router, the plan is to run tcpdump on the router

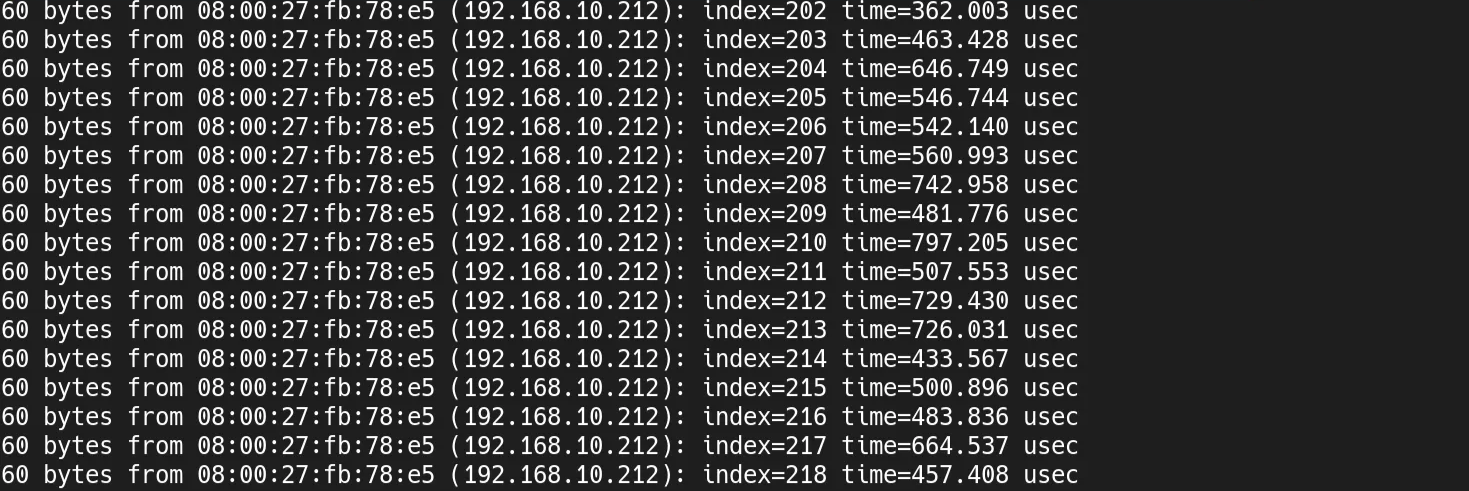

3. Check the webpod IP and run a ping test

(⎈|HomeLab:N/A) root@k8s-ctr:~# export WEBPOD=$(kubectl get pod -l app=webpod --field-selector spec.nodeName=k8s-w0 -o jsonpath='{.items[0].status.podIP}')

echo $WEBPOD

# Result

172.20.2.1

- Extract the IP (172.20.2.1) of the webpod deployed on worker node 0 (k8s-w0), then try to ping it

root@router:~# tcpdump -i any icmp -nn

tcpdump: data link type LINUX_SLL2

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

- Start tcpdump on the router

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping -c 2 -w 1 -W 1 $WEBPOD

✅ Output

PING 172.20.2.1 (172.20.2.1) 56(84) bytes of data.

--- 172.20.2.1 ping statistics ---

1 packets transmitted, 0 received, 100% packet loss, time 0ms

command terminated with exit code 1

- 100% packet loss (no reply)

4. Analyze the router tcpdump output (ICMP)

listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

22:47:59.061351 eth1 In IP 172.20.0.96 > 172.20.2.1: ICMP echo request, id 1155, seq 1, length 64

22:47:59.061370 eth0 Out IP 172.20.0.96 > 172.20.2.1: ICMP echo request, id 1155, seq 1, length 64

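- The ICMP request from curl-pod (172.20.0.96) to webpod (172.20.2.1) comes IN on eth1

- Instead of leaving through eth2 (the 192.168.20.0/24 side where k8s-w0 lives), it goes OUT through eth0, the Internet-facing interface

- Cause: the router's routing table has no route for the 172.20.0.0/16 CIDR, so the packet follows the default route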

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 22h 172.20.0.96 k8s-ctr <none> <none>

webpod-697b545f57-fz95q 1/1 Running 0 22h 172.20.2.1 k8s-w0 <none> <none>

webpod-697b545f57-gkvrf 1/1 Running 0 22h 172.20.0.66 k8s-ctr <none> <none>

webpod-697b545f57-rpz7h 1/1 Running 0 22h 172.20.1.126 k8s-w1 <none> <none>

5. Check the router's routing table

root@router:~# ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

- No route is registered for the 172.20.x.x range

root@router:~# ip route get 172.20.2.1

✅ Output

172.20.2.1 via 10.0.2.2 dev eth0 src 10.0.2.15 uid 0

cache

- ip route get 172.20.2.1 shows that the packet is sent via the default route (via 10.0.2.2 dev eth0)
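The kernel picks the most specific (longest-prefix) route that contains the destination, and the default route 0.0.0.0/0 contains every address. The sketch below mimics that choice on ip route output, so the result above can be reproduced offline. It is a simplified model that ignores metrics, policy routing, and other tables; lpm_dev is a hypothetical helper.

```sh
# lpm_dev DEST: hypothetical helper. Reads `ip route` lines on stdin and prints
# the dev of the longest matching prefix for DEST, e.g. "eth0 (/0)".
# Simplified: ignores metrics, policy rules and additional routing tables.
lpm_dev() {
  awk -v dst="$1" '
    # Convert a dotted quad to a number (awk doubles hold 32-bit values exactly).
    function ip2n(s,   p) { split(s, p, "."); return ((p[1]*256 + p[2])*256 + p[3])*256 + p[4] }
    BEGIN { d = ip2n(dst); best = -1 }
    {
      pfx = $1
      if (pfx == "default") { net = "0.0.0.0"; len = 0 }
      else if (index(pfx, "/")) { split(pfx, a, "/"); net = a[1]; len = a[2] + 0 }
      else { net = pfx; len = 32 }
      size = 2 ^ (32 - len)   # compare only the top len bits
      if (int(d / size) == int(ip2n(net) / size) && len > best) {
        best = len
        for (i = 1; i < NF; i++) if ($i == "dev") dev = $(i + 1)
      }
    }
    END { if (best >= 0) print dev " (/" best ")" }'
}
# Usage on the router: ip route | lpm_dev 172.20.2.1
```

With the router table above, 172.20.2.1 only matches default, so it resolves to eth0 (/0). 192.168.20.100 resolves to eth2 (/24). Suppose a route like 172.20.2.0/24 via 192.168.20.100 existed (hypothetical; it is not configured in this lab). The /24 would then win, and the packet would leave on eth2.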

6. Analyze the TCP traffic

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

✅ Output

Hostname: webpod-697b545f57-rpz7h

---

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-gkvrf

---

Hostname: webpod-697b545f57-rpz7h

---

Hostname: webpod-697b545f57-gkvrf

---

Hostname: webpod-697b545f57-rpz7h

---

---

Hostname: webpod-697b545f57-gkvrf

---

---

Hostname: webpod-697b545f57-gkvrf

---

---

---

---

---


root@router:~# tcpdump -i any tcp port 80 -nn

✅ Output

tcpdump: data link type LINUX_SLL2

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

23:01:08.369358 eth1 In IP 172.20.0.96.54708 > 172.20.2.1.80: Flags [S], seq 656654546, win 64240, options [mss 1460,sackOK,TS val 677256276 ecr 0,nop,wscale 7], length 0

23:01:08.369376 eth0 Out IP 172.20.0.96.54708 > 172.20.2.1.80: Flags [S], seq 656654546, win 64240, options [mss 1460,sackOK,TS val 677256276 ecr 0,nop,wscale 7], length 0

23:01:16.429813 eth1 In IP 172.20.0.96.37360 > 172.20.2.1.80: Flags [S], seq 1376149342, win 64240, options [mss 1460,sackOK,TS val 677264336 ecr 0,nop,wscale 7], length 0

23:01:16.429833 eth0 Out IP 172.20.0.96.37360 > 172.20.2.1.80: Flags [S], seq 1376149342, win 64240, options [mss 1460,sackOK,TS val 677264336 ecr 0,nop,wscale 7], length 0

23:01:19.444988 eth1 In IP 172.20.0.96.37368 > 172.20.2.1.80: Flags [S], seq 1991901286, win 64240, options [mss 1460,sackOK,TS val 677267352 ecr 0,nop,wscale 7], length 0

23:01:19.445008 eth0 Out IP 172.20.0.96.37368 > 172.20.2.1.80: Flags [S], seq 1991901286, win 64240, options [mss 1460,sackOK,TS val 677267352 ecr 0,nop,wscale 7], length 0

23:01:22.461799 eth1 In IP 172.20.0.96.41056 > 172.20.2.1.80: Flags [S], seq 958768126, win 64240, options [mss 1460,sackOK,TS val 677270368 ecr 0,nop,wscale 7], length 0

23:01:22.461831 eth0 Out IP 172.20.0.96.41056 > 172.20.2.1.80: Flags [S], seq 958768126, win 64240, options [mss 1460,sackOK,TS val 677270368 ecr 0,nop,wscale 7], length 0

23:01:24.468117 eth1 In IP 172.20.0.96.41064 > 172.20.2.1.80: Flags [S], seq 4136195789, win 64240, options [mss 1460,sackOK,TS val 677272375 ecr 0,nop,wscale 7], length 0

23:01:24.468135 eth0 Out IP 172.20.0.96.41064 > 172.20.2.1.80: Flags [S], seq 4136195789, win 64240, options [mss 1460,sackOK,TS val 677272375 ecr 0,nop,wscale 7], length 0

23:01:26.473963 eth1 In IP 172.20.0.96.41072 > 172.20.2.1.80: Flags [S], seq 2284309943, win 64240, options [mss 1460,sackOK,TS val 677274381 ecr 0,nop,wscale 7], length 0

23:01:26.473981 eth0 Out IP 172.20.0.96.41072 > 172.20.2.1.80: Flags [S], seq 2284309943, win 64240, options [mss 1460,sackOK,TS val 677274381 ecr 0,nop,wscale 7], length 0

23:01:28.480125 eth1 In IP 172.20.0.96.41084 > 172.20.2.1.80: Flags [S], seq 1101677227, win 64240, options [mss 1460,sackOK,TS val 677276387 ecr 0,nop,wscale 7], length 0

23:01:28.480143 eth0 Out IP 172.20.0.96.41084 > 172.20.2.1.80: Flags [S], seq 1101677227, win 64240, options [mss 1460,sackOK,TS val 677276387 ecr 0,nop,wscale 7], length 0

23:01:32.501119 eth1 In IP 172.20.0.96.56438 > 172.20.2.1.80: Flags [S], seq 601326350, win 64240, options [mss 1460,sackOK,TS val 677280408 ecr 0,nop,wscale 7], length 0

23:01:32.501138 eth0 Out IP 172.20.0.96.56438 > 172.20.2.1.80: Flags [S], seq 601326350, win 64240, options [mss 1460,sackOK,TS val 677280408 ecr 0,nop,wscale 7], length 0

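- Watching TCP port 80 with tcpdump shows the SYN packets coming IN on eth1 and going OUT on eth0, and no SYN-ACK ever comes back

- This reconfirms that, without a route to the destination Pod CIDR, the router sends the packets out the wrong interface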
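To list the flows that never completed the handshake, the sketch below pairs SYNs with SYN-ACKs. It assumes the -i any line layout shown above (TIME IFACE DIR IP SRC > DST: Flags ...); unanswered_syns is a hypothetical helper. The In and Out copies of the same SYN collapse into one flow.

```sh
# unanswered_syns: hypothetical helper. Reads `tcpdump -i any -nn` lines and
# prints each client > server pair that sent a SYN but got no SYN-ACK back.
# Usage: tcpdump -i any tcp port 80 -nn -c 50 | unanswered_syns
unanswered_syns() {
  awk '
    NF >= 7 { src = $5; dst = $7; sub(/:$/, "", dst) }   # -i any layout: TIME IFACE DIR IP SRC > DST:
    / Flags \[S\],/   { syn[src " > " dst] = 1 }          # client SYN
    / Flags \[S\.\],/ { ack[dst " > " src] = 1 }          # server SYN-ACK, stored client-first
    END { for (k in syn) if (!(k in ack)) print k }' | LC_ALL=C sort
}
```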

7. Hubble port-forwarding and flow monitoring

(1) Set up port-forwarding

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

[1] 13992

(⎈|HomeLab:N/A) root@k8s-ctr:~# ℹ️ Hubble Relay is available at 127.0.0.1:4245

- hubble observe shows the k8s-w0 node as unavailable

- Some sessions to a particular endpoint only send SYN and never receive a reply (SYN-ACK)

📦 Overlay Network (Encapsulation) mode

1. Check whether VXLAN encapsulation is supported

(⎈|HomeLab:N/A) root@k8s-ctr:~# grep -E 'CONFIG_VXLAN=y|CONFIG_VXLAN=m|CONFIG_GENEVE=y|CONFIG_GENEVE=m|CONFIG_FIB_RULES=y' /boot/config-$(uname -r)

✅ Output

CONFIG_FIB_RULES=y # built into the kernel

CONFIG_VXLAN=m # compiled as a module → load it into the kernel to use it

CONFIG_GENEVE=m # compiled as a module → load it into the kernel to use it

- The kernel config file (/boot/config-$(uname -r)) shows CONFIG_VXLAN=m and CONFIG_GENEVE=m

- This confirms that the encapsulation modes (VXLAN, Geneve) are supported
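The grep above prints only the options that are present. A slightly more explicit check also reports options that are not set. This sketch assumes the usual config-file format (CONFIG_X=y, CONFIG_X=m, or a "# CONFIG_X is not set" comment); kcfg_check is a hypothetical helper name.

```sh
# kcfg_check FILE OPTION...: hypothetical helper. Prints OPTION=y (built in),
# OPTION=m (module) or OPTION=not set for each requested kernel option.
# Usage: kcfg_check /boot/config-$(uname -r) CONFIG_VXLAN CONFIG_GENEVE CONFIG_FIB_RULES
kcfg_check() {
  cfg=$1; shift
  for opt in "$@"; do
    val=$(sed -n "s/^$opt=//p" "$cfg")   # value after "OPTION=", empty if absent
    printf '%s=%s\n' "$opt" "${val:-not set}"
  done
}
```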

2. Load the VXLAN module

(⎈|HomeLab:N/A) root@k8s-ctr:~# lsmod | grep -E 'vxlan|geneve'

(⎈|HomeLab:N/A) root@k8s-ctr:~# modprobe vxlan


(⎈|HomeLab:N/A) root@k8s-ctr:~# lsmod | grep -E 'vxlan'

vxlan 155648 0

ip6_udp_tunnel 16384 1 vxlan

udp_tunnel 32768 1 vxlan


(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo modprobe vxlan ; echo; done

>> node : k8s-w1 <<

>> node : k8s-w0 <<


(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo lsmod | grep -E 'vxlan|geneve' ; echo; done

>> node : k8s-w1 <<

vxlan 155648 0

ip6_udp_tunnel 16384 1 vxlan

udp_tunnel 32768 1 vxlan

>> node : k8s-w0 <<

vxlan 155648 0

ip6_udp_tunnel 16384 1 vxlan

udp_tunnel 32768 1 vxlan

- modprobe vxlan loads the VXLAN module on the control plane and on both worker nodes

- lsmod confirms that it is loaded (the vxlan, ip6_udp_tunnel, and udp_tunnel modules)

3. Ping test (still in native routing mode)

(1) Extract the IP of the webpod deployed on worker node 1

(⎈|HomeLab:N/A) root@k8s-ctr:~# export WEBPOD1=$(kubectl get pod -l app=webpod --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].status.podIP}')

echo $WEBPOD1

# Result

172.20.1.126

(2) Run ping from curl-pod

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping $WEBPOD1

✅ Output

PING 172.20.1.126 (172.20.1.126) 56(84) bytes of data.

64 bytes from 172.20.1.126: icmp_seq=1 ttl=62 time=0.637 ms

64 bytes from 172.20.1.126: icmp_seq=2 ttl=62 time=0.647 ms

64 bytes from 172.20.1.126: icmp_seq=3 ttl=62 time=0.438 ms

64 bytes from 172.20.1.126: icmp_seq=4 ttl=62 time=0.455 ms

64 bytes from 172.20.1.126: icmp_seq=5 ttl=62 time=0.561 ms

64 bytes from 172.20.1.126: icmp_seq=6 ttl=62 time=0.407 ms

64 bytes from 172.20.1.126: icmp_seq=7 ttl=62 time=0.367 ms

64 bytes from 172.20.1.126: icmp_seq=8 ttl=62 time=0.377 ms

64 bytes from 172.20.1.126: icmp_seq=9 ttl=62 time=0.386 ms

64 bytes from 172.20.1.126: icmp_seq=10 ttl=62 time=0.414 ms

64 bytes from 172.20.1.126: icmp_seq=11 ttl=62 time=0.368 ms

64 bytes from 172.20.1.126: icmp_seq=12 ttl=62 time=0.369 ms

64 bytes from 172.20.1.126: icmp_seq=13 ttl=62 time=0.664 ms

64 bytes from 172.20.1.126: icmp_seq=14 ttl=62 time=0.537 ms

64 bytes from 172.20.1.126: icmp_seq=15 ttl=62 time=0.387 ms

64 bytes from 172.20.1.126: icmp_seq=16 ttl=62 time=0.452 ms

64 bytes from 172.20.1.126: icmp_seq=17 ttl=62 time=0.381 ms

64 bytes from 172.20.1.126: icmp_seq=18 ttl=62 time=0.606 ms

64 bytes from 172.20.1.126: icmp_seq=19 ttl=62 time=0.975 ms

64 bytes from 172.20.1.126: icmp_seq=20 ttl=62 time=0.384 ms

64 bytes from 172.20.1.126: icmp_seq=21 ttl=62 time=0.340 ms

64 bytes from 172.20.1.126: icmp_seq=22 ttl=62 time=0.392 ms

64 bytes from 172.20.1.126: icmp_seq=23 ttl=62 time=0.490 ms

64 bytes from 172.20.1.126: icmp_seq=24 ttl=62 time=0.405 ms

64 bytes from 172.20.1.126: icmp_seq=25 ttl=62 time=0.820 ms

64 bytes from 172.20.1.126: icmp_seq=26 ttl=62 time=0.387 ms

...

- The control plane and worker node 1 are on the same subnet (192.168.10.0/24), so the ping is answered normally

4. Switch Cilium to overlay mode (VXLAN)

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --version 1.18.0 --reuse-values \

--set routingMode=tunnel --set tunnelProtocol=vxlan \

--set autoDirectNodeRoutes=false --set installNoConntrackIptablesRules=false

✅ Output

Release "cilium" has been upgraded. Happy Helming!

NAME: cilium

LAST DEPLOYED: Fri Aug 8 23:47:06 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.18.0.

For any further help, visit https://docs.cilium.io/en/v1.18/gettinghelp

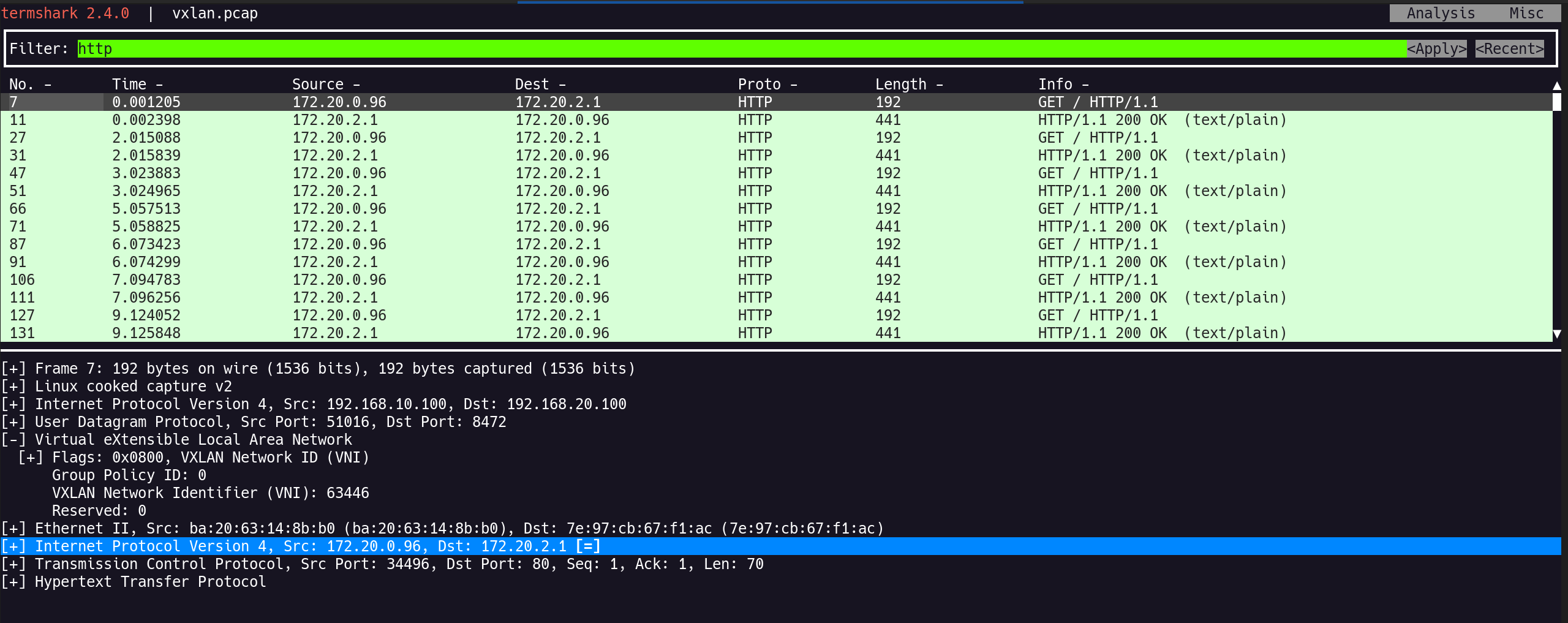

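- The Helm upgrade sets routingMode=tunnel and tunnelProtocol=vxlan

- autoDirectNodeRoutes=false disables direct node routes. installNoConntrackIptablesRules=false is also set, since that option is only effective in direct (native) routing mode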

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl rollout restart -n kube-system ds/cilium

# Result

daemonset.apps/cilium restarted

- kubectl rollout restart restarts the Cilium DaemonSet so that the agents pick up the new settings

5. Verify that overlay mode is applied

(1) Check for mode=overlay-vxlan

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium features status | grep datapath_network

✅ Output

Yes cilium_feature_datapath_network mode=overlay-vxlan 1 1 1

(2) Check that cilium status reports Routing: Network: Tunnel [vxlan]

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -- cilium status | grep ^Routing

✅ Output

Routing: Network: Tunnel [vxlan] Host: BPF

(3) Check that a cilium_vxlan interface was created on each node

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c addr show dev cilium_vxlan

✅ Output

26: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default

link/ether 8e:c3:47:03:17:dc brd ff:ff:ff:ff:ff:ff

inet6 fe80::8cc3:47ff:fe03:17dc/64 scope link

valid_lft forever preferred_lft forever


(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c addr show dev cilium_vxlan ; echo; done

✅ Output

>> node : k8s-w1 <<

8: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default

link/ether 2e:fb:fe:83:8f:85 brd ff:ff:ff:ff:ff:ff

inet6 fe80::2cfb:feff:fe83:8f85/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w0 <<

8: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default

link/ether d6:44:cc:8c:e1:ce brd ff:ff:ff:ff:ff:ff

inet6 fe80::d444:ccff:fe8c:e1ce/64 scope link

valid_lft forever preferred_lft forever

6. Check the Pod CIDR routes in the routing table (control plane)

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253

172.20.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.253 dev cilium_host proto kernel scope link

172.20.1.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253 mtu 1450

172.20.2.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253 mtu 1450

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static


(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route | grep cilium_host

✅ Output

172.20.0.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253

172.20.0.253 dev cilium_host proto kernel scope link

172.20.1.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253 mtu 1450

172.20.2.0/24 via 172.20.0.253 dev cilium_host proto kernel src 172.20.0.253 mtu 1450

- Routes to the other nodes' Pod CIDRs (172.20.1.0/24 and 172.20.2.0/24), which did not exist before, are now registered via the cilium_host interface with mtu 1450

7. Check the route to specific Pod IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip route get 172.20.1.10

✅ Output

172.20.1.10 dev cilium_host src 172.20.0.253 uid 0

cache mtu 1450


(⎈|HomeLab:N/A) root@k8s-ctr:~# ip route get 172.20.2.10

✅ Output

172.20.2.10 dev cilium_host src 172.20.0.253 uid 0

cache mtu 1450

- Routes to 172.20.1.10 and 172.20.2.10 both go through dev cilium_host

- The MTU is 1450 because of the VXLAN tunnel overhead
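The 1450 figure follows from the VXLAN overhead. Assume VXLAN over IPv4 with no IP options. Each encapsulated packet then adds 50 bytes:

- an outer IPv4 header (20)
- a UDP header (8)
- the VXLAN header (8)
- the original inner Ethernet header (14)

A 1500-byte underlay MTU therefore leaves 1450 bytes for pod traffic. A tiny sketch of that arithmetic (vxlan_mtu is a hypothetical name):

```sh
# vxlan_mtu UNDERLAY_MTU: hypothetical helper. Inner MTU left after VXLAN-over-IPv4
# encapsulation: outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14) = 50 bytes.
vxlan_mtu() {
  echo $(( $1 - (20 + 8 + 8 + 14) ))
}
# Usage: vxlan_mtu 1500   # prints 1450
```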

8. Compare the worker node routing tables

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c route | grep cilium_host ; echo; done

✅ Output

>> node : k8s-w1 <<

172.20.0.0/24 via 172.20.1.238 dev cilium_host proto kernel src 172.20.1.238 mtu 1450

172.20.1.0/24 via 172.20.1.238 dev cilium_host proto kernel src 172.20.1.238

172.20.1.238 dev cilium_host proto kernel scope link

172.20.2.0/24 via 172.20.1.238 dev cilium_host proto kernel src 172.20.1.238 mtu 1450

>> node : k8s-w0 <<

172.20.0.0/24 via 172.20.2.13 dev cilium_host proto kernel src 172.20.2.13 mtu 1450

172.20.1.0/24 via 172.20.2.13 dev cilium_host proto kernel src 172.20.2.13 mtu 1450

172.20.2.0/24 via 172.20.2.13 dev cilium_host proto kernel src 172.20.2.13

172.20.2.13 dev cilium_host proto kernel scope link

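- Worker node 1 and worker node 0 both have routes for their own Pod CIDR and for the other nodes' Pod CIDRs

- The IP after via is each node's own Cilium router IP (172.20.1.238 on k8s-w1, 172.20.2.13 on k8s-w0)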

9. Check the Cilium router IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD1=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD2=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w0 -o jsonpath='{.items[0].metadata.name}')

echo $CILIUMPOD0 $CILIUMPOD1 $CILIUMPOD2

# Check the IP acting as the router

kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium status --all-addresses | grep router

kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- cilium status --all-addresses | grep router

kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- cilium status --all-addresses | grep router

✅ Output

cilium-th5dp cilium-snscc cilium-sjdzj

172.20.0.253 (router)

172.20.1.238 (router)

172.20.2.13 (router)

- Control plane: 172.20.0.253, worker node 1: 172.20.1.238, worker node 0: 172.20.2.13

10. Check the BPF ipcache

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system $CILIUMPOD0 -- cilium-dbg bpf ipcache list

✅ Output

IP PREFIX/ADDRESS IDENTITY

172.20.0.197/32 identity=58623 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.1.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel

192.168.10.100/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.71/32 identity=18480 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.163/32 identity=32707 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

192.168.10.101/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

192.168.20.100/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.33/32 identity=7496 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.96/32 identity=63446 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.248/32 identity=58623 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.2.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel

172.20.0.75/32 identity=5827 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.253/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.1.238/32 identity=6 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel

172.20.2.1/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel

172.20.2.13/32 identity=6 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.1.126/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel

10.0.2.15/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.66/32 identity=8469 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.72/32 identity=48231 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.73/32 identity=22595 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
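The ipcache is consulted by longest-prefix match. The k8s-ctr rows show three cases:

- A local Pod IP hits its own `/32` entry, which has `tunnelendpoint=0.0.0.0`.
- An address inside another node's Pod CIDR that has no `/32` of its own falls back to that node's `/24` entry. That entry's `tunnelendpoint` is the node IP.
- Everything else lands on `0.0.0.0/0`.

In the identity column, `1` is the reserved host identity, `2` is world and `6` is remote-node. The sketch below emulates that lookup in awk over a hand-copied subset of the k8s-ctr rows. `ipcache_dump` and `ipcache_lookup` are hypothetical helpers. The real datapath performs this lookup in the kernel on a BPF LPM trie map, not by scanning text.

```bash
#!/bin/sh
# Sketch: emulate the longest-prefix-match lookup on ipcache rows.
# Rows are a subset of the k8s-ctr ($CILIUMPOD0) output above;
# ipcache_dump and ipcache_lookup are hypothetical helpers.

ipcache_dump() {
cat <<'EOF'
172.20.0.197/32 identity=58623 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.1.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel
172.20.2.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
172.20.1.126/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel
172.20.2.13/32 identity=6 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
EOF
}

# ipcache_lookup IP: print the most specific matching prefix, its identity and tunnel endpoint
ipcache_lookup() {
  ipcache_dump | awk -v ip="$1" '
    # dotted-quad -> 32-bit integer (exact in awk floating point)
    function ip2int(s,  a) { split(s, a, "."); return ((a[1] * 256 + a[2]) * 256 + a[3]) * 256 + a[4] }
    BEGIN { target = ip2int(ip); best = -1; hit = "" }
    {
      split($1, p, "/")            # p[1] = network address, p[2] = prefix length
      len = p[2] + 0
      div = 2 ^ (32 - len)         # number of addresses covered by this prefix
      # same block number means target is inside; keep the longest such prefix
      if (int(target / div) == int(ip2int(p[1]) / div) && len > best) {
        best = len
        hit = $1 " " $2 " " $4
      }
    }
    END { print hit }'
}

ipcache_lookup 172.20.0.197   # local Pod: 172.20.0.197/32 identity=58623 tunnelendpoint=0.0.0.0
ipcache_lookup 172.20.2.13    # k8s-w0 router: 172.20.2.13/32 identity=6 tunnelendpoint=192.168.20.100
ipcache_lookup 172.20.2.77    # no /32, falls to 172.20.2.0/24 identity=2 tunnelendpoint=192.168.20.100
ipcache_lookup 8.8.8.8        # outside the cluster: 0.0.0.0/0 identity=2 tunnelendpoint=0.0.0.0
```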

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system $CILIUMPOD1 -- cilium-dbg bpf ipcache list
```

✅ Output

```
IP PREFIX/ADDRESS IDENTITY
172.20.0.66/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.73/32 identity=22595 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.197/32 identity=58623 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.248/32 identity=58623 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.1.238/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.2.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
192.168.20.100/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
10.0.2.15/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.0.71/32 identity=18480 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.75/32 identity=5827 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.96/32 identity=63446 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.253/32 identity=6 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
192.168.10.100/32 identity=7 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.0.33/32 identity=7496 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.163/32 identity=32707 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.1.126/32 identity=8469 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.2.1/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
192.168.10.101/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.0.72/32 identity=48231 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.2.13/32 identity=6 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
```
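Comparing this dump with the k8s-ctr one shows that each node keeps its own view of the ipcache. The router IP `172.20.0.253` is `identity=1` with `tunnelendpoint=0.0.0.0` on k8s-ctr. On k8s-w1 it is `identity=6` with `tunnelendpoint=192.168.10.100`. One way to read a single dump is to group its Pod-CIDR rows by `tunnelendpoint`, where `0.0.0.0` marks addresses that live on the node itself. Below is a minimal sketch over a hand-copied subset of the k8s-w1 rows. `ipcache_w1` and `summarize_by_endpoint` are hypothetical helpers.

```bash
#!/bin/sh
# Sketch: summarize one node's ipcache dump by tunnel endpoint.
# Rows are a hand-copied subset of the k8s-w1 ($CILIUMPOD1) output above;
# ipcache_w1 and summarize_by_endpoint are hypothetical helpers.

ipcache_w1() {
cat <<'EOF'
172.20.0.66/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.253/32 identity=6 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.0.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel
172.20.1.238/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.1.126/32 identity=8469 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
172.20.2.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
172.20.2.13/32 identity=6 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel
192.168.20.100/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>
EOF
}

# Count rows inside the 172.20.0.0/16 Pod CIDR (a string prefix match suffices for a /16)
# per tunnel endpoint; tunnelendpoint=0.0.0.0 is reported as "local".
summarize_by_endpoint() {
  awk '$1 ~ /^172\.20\./ {
         split($4, kv, "=")   # kv[2] = tunnel endpoint address
         ep = (kv[2] == "0.0.0.0") ? "local" : kv[2]
         count[ep]++
       }
       END { for (ep in count) print ep, count[ep] }' | LC_ALL=C sort
}

ipcache_w1 | summarize_by_endpoint
# 192.168.10.100 3
# 192.168.20.100 2
# local 2
```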

```bash
(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system $CILIUMPOD2 -- cilium-dbg bpf ipcache list
```

✅ Output


IP PREFIX/ADDRESS IDENTITY

172.20.0.72/32 identity=48231 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel

172.20.0.248/32 identity=58623 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel

172.20.2.13/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

172.20.0.66/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel

172.20.0.96/32 identity=63446 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel

172.20.0.163/32 identity=32707 encryptkey=0 tunnelendpoint=192.168.10.100 flags=hastunnel

172.20.1.126/32 identity=8469 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel

172.20.2.1/32 identity=8469 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

192.168.10.100/32 identity=7 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>

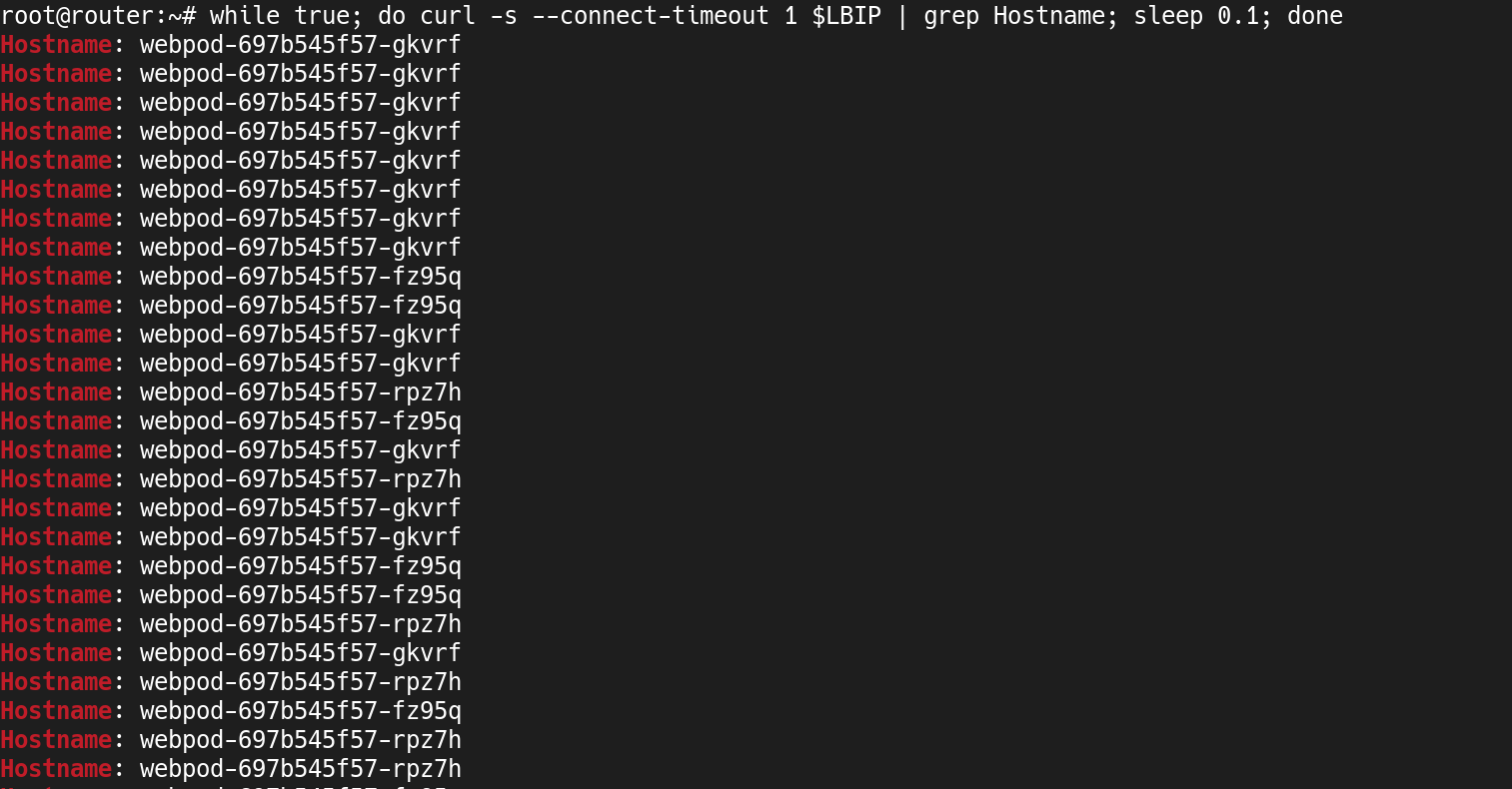

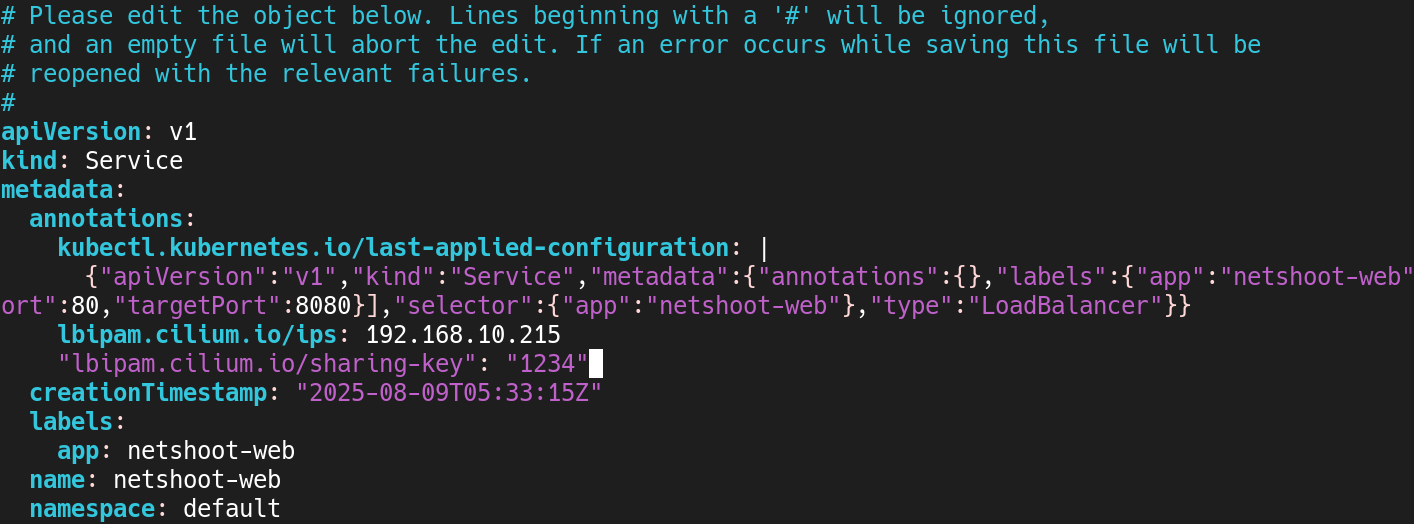

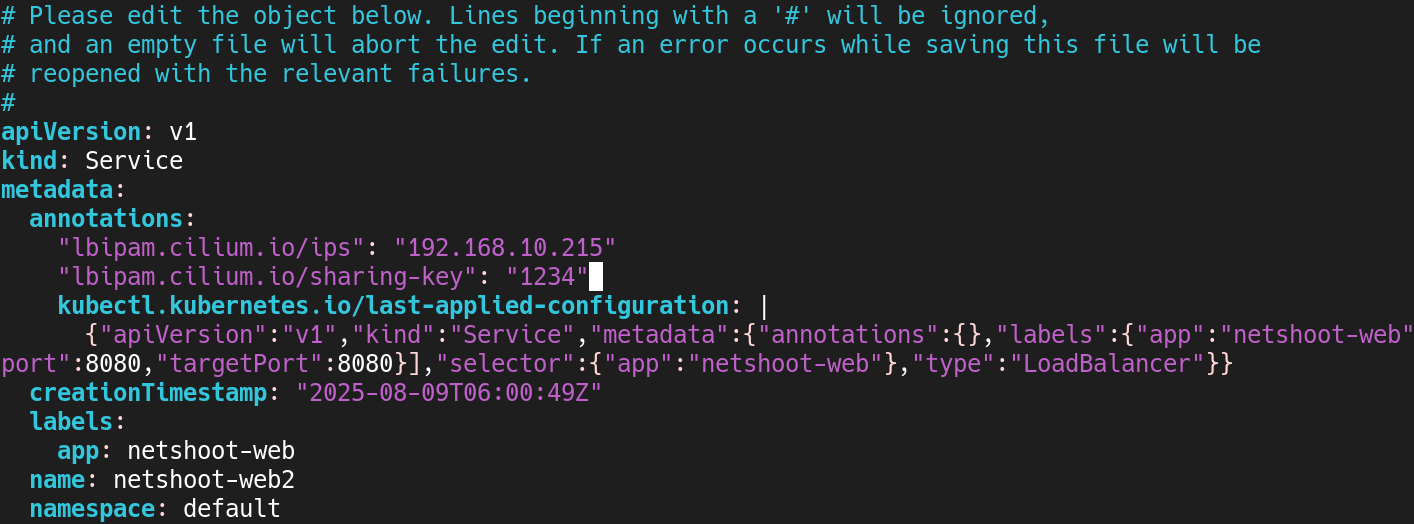

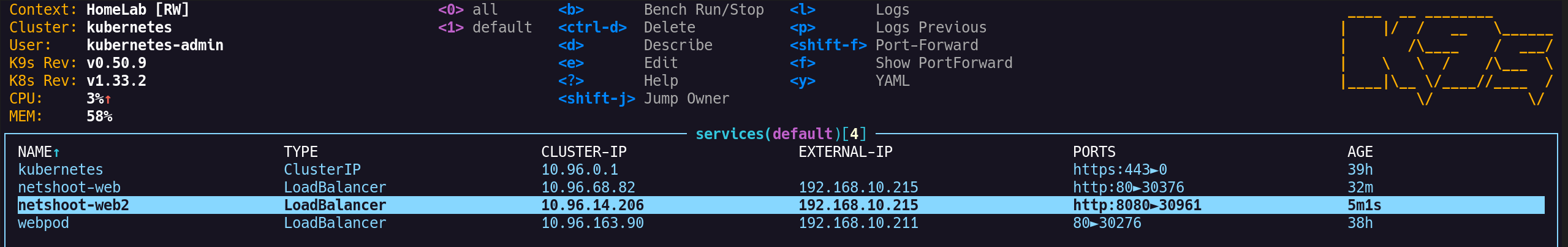

10.0.2.15/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none>