Cilium Week 3 Notes

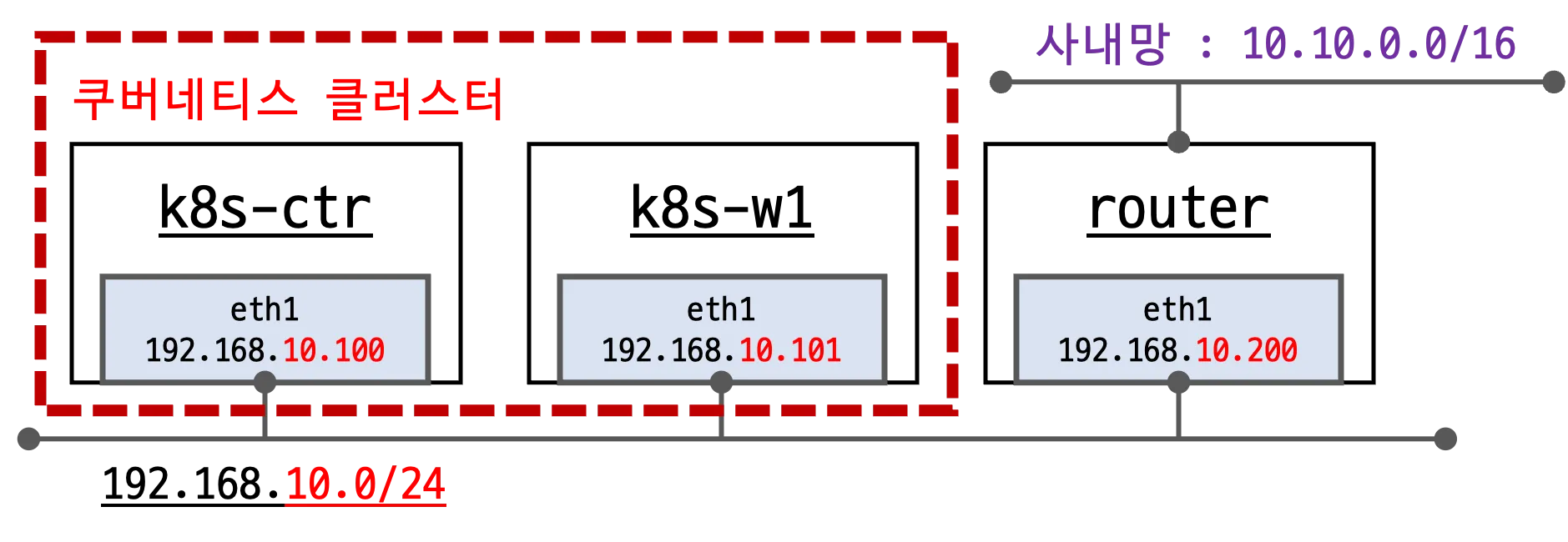

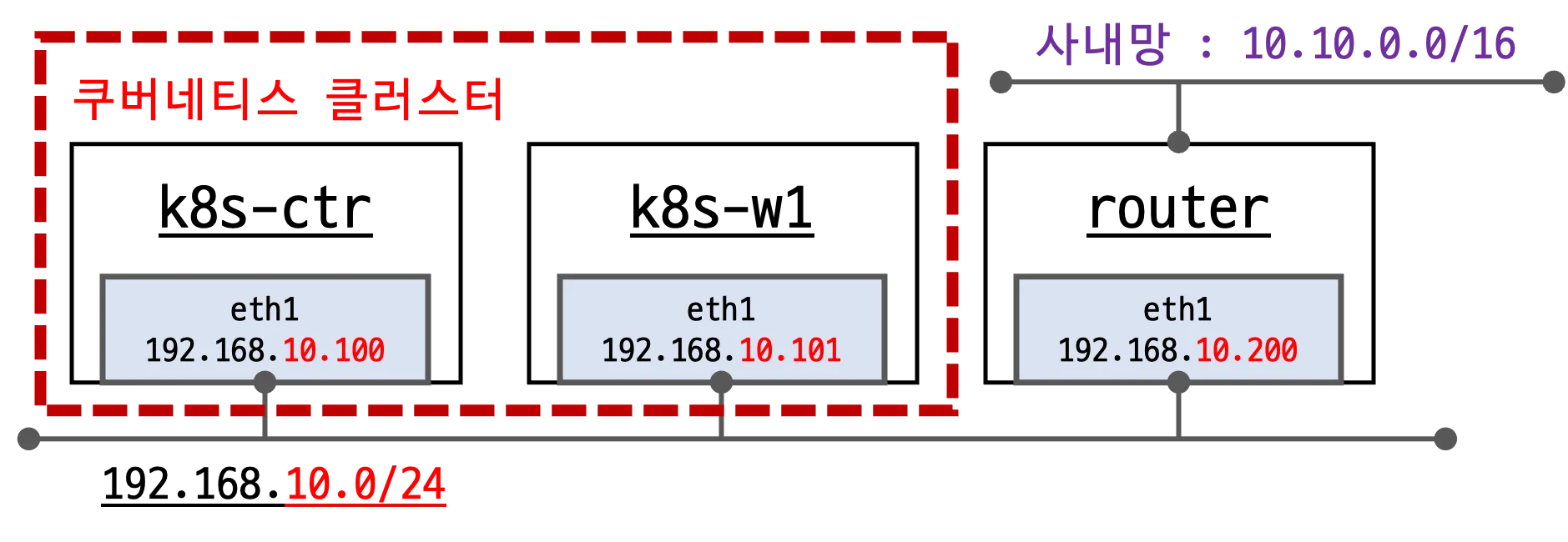

🔧 Lab Environment Setup

- Cluster nodes

  - Control plane: 192.168.10.100 (memory raised from 2 GB to 2.5 GB)

  - Worker node: 192.168.10.101 (1 node)

  - Network range: 192.168.10.0/24

  - The taint is removed from the control plane, so pods can be scheduled on it

  - kubeadm-init-ctr-config.yaml uses the version variable K8S_VERSION_PLACEHOLDER so the same config can be reused across versions

- Router

  - Address: 192.168.10.200

  - Relays traffic between the internal network (10.10.0.0/16) and the Kubernetes network (192.168.10.0/24)

  - Static route on the cluster nodes: to: 10.10.0.0/16 → via: 192.168.10.200

  - IP forwarding enabled

  - Two dummy interfaces: loop1: 10.10.1.200, loop2: 10.10.2.200
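The router and route settings listed above can be approximated by hand with iproute2. The sketch below is minimal and non-persistent, and it is not the lab's provisioning script, which is not shown here. The `to:`/`via:` wording suggests the node route is defined in netplan. Two things are assumptions: the /24 prefix on the dummy interfaces, and the helper names `run`, `setup_router` and `setup_node_route`. Real changes need root, so `run` only prints commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Hand-run approximation of the router and node routing settings (not the lab's own script).
# Assumptions: /24 prefixes on the dummy interfaces; changes are non-persistent;
# applying them for real needs root. run() only prints commands unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# On the router (192.168.10.200): enable forwarding and create loop1/loop2.
setup_router() {
  local pair name addr
  run sysctl -w net.ipv4.ip_forward=1
  for pair in loop1:10.10.1.200 loop2:10.10.2.200; do
    name=${pair%%:*}
    addr=${pair#*:}
    run ip link add "$name" type dummy
    run ip addr add "$addr/24" dev "$name"   # /24 is an assumption
    run ip link set "$name" up
  done
}

# On k8s-ctr and k8s-w1: reach the internal network 10.10.0.0/16 through the router.
setup_node_route() {
  run ip route add 10.10.0.0/16 via 192.168.10.200
}

# Print the plan only; use DRY_RUN=0 on the matching VM to apply it.
DRY_RUN=1 setup_router
DRY_RUN=1 setup_node_route
```

To apply the settings, run `DRY_RUN=0 setup_router` on the router and `DRY_RUN=0 setup_node_route` on each cluster node. These commands do not survive a reboot. That is why a persistent mechanism such as netplan is the better fit for a lab that is rebuilt often.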

🚀 Deploying the Lab Environment

1. Download the Vagrantfile and create the VMs

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/3w/Vagrantfile

vagrant up

✅ Output

Bringing machine 'k8s-ctr' up with 'virtualbox' provider...

Bringing machine 'k8s-w1' up with 'virtualbox' provider...

Bringing machine 'router' up with 'virtualbox' provider...

==> k8s-ctr: Preparing master VM for linked clones...

k8s-ctr: This is a one time operation. Once the master VM is prepared,

k8s-ctr: it will be used as a base for linked clones, making the creation

k8s-ctr: of new VMs take milliseconds on a modern system.

==> k8s-ctr: Importing base box 'bento/ubuntu-24.04'...

==> k8s-ctr: Cloning VM...

==> k8s-ctr: Matching MAC address for NAT networking...

==> k8s-ctr: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-ctr: Setting the name of the VM: k8s-ctr

==> k8s-ctr: Clearing any previously set network interfaces...

==> k8s-ctr: Preparing network interfaces based on configuration...

k8s-ctr: Adapter 1: nat

k8s-ctr: Adapter 2: hostonly

==> k8s-ctr: Forwarding ports...

k8s-ctr: 22 (guest) => 60000 (host) (adapter 1)

==> k8s-ctr: Running 'pre-boot' VM customizations...

==> k8s-ctr: Booting VM...

==> k8s-ctr: Waiting for machine to boot. This may take a few minutes...

k8s-ctr: SSH address: 127.0.0.1:60000

k8s-ctr: SSH username: vagrant

k8s-ctr: SSH auth method: private key

k8s-ctr:

k8s-ctr: Vagrant insecure key detected. Vagrant will automatically replace

k8s-ctr: this with a newly generated keypair for better security.

k8s-ctr:

k8s-ctr: Inserting generated public key within guest...

k8s-ctr: Removing insecure key from the guest if it's present...

k8s-ctr: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-ctr: Machine booted and ready!

==> k8s-ctr: Checking for guest additions in VM...

==> k8s-ctr: Setting hostname...

==> k8s-ctr: Configuring and enabling network interfaces...

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250730-27828-acul9.sh

k8s-ctr: >>>> Initial Config Start <<<<

k8s-ctr: [TASK 1] Setting Profile & Bashrc

k8s-ctr: [TASK 2] Disable AppArmor

k8s-ctr: [TASK 3] Disable and turn off SWAP

k8s-ctr: [TASK 4] Install Packages

k8s-ctr: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-ctr: [TASK 6] Install Packages & Helm

k8s-ctr: >>>> Initial Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250730-27828-zl78rn.sh

k8s-ctr: >>>> K8S Controlplane config Start <<<<

k8s-ctr: [TASK 1] Initial Kubernetes

k8s-ctr: [TASK 2] Setting kube config file

k8s-ctr: [TASK 3] Source the completion

k8s-ctr: [TASK 4] Alias kubectl to k

k8s-ctr: [TASK 5] Install Kubectx & Kubens

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: [TASK 7] Install Cilium CNI

k8s-ctr: [TASK 8] Install Cilium / Hubble CLI

k8s-ctr: cilium

k8s-ctr: hubble

k8s-ctr: [TASK 9] Remove node taint

k8s-ctr: node/k8s-ctr untainted

k8s-ctr: [TASK 10] local DNS with hosts file

k8s-ctr: [TASK 11] Install Prometheus & Grafana

k8s-ctr: [TASK 12] Dynamically provisioning persistent local storage with Kubernetes

k8s-ctr: >>>> K8S Controlplane Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250730-27828-7fwjno.sh

k8s-ctr: >>>> Route Add Config Start <<<<

k8s-ctr: >>>> Route Add Config End <<<<

==> k8s-w1: Cloning VM...

==> k8s-w1: Matching MAC address for NAT networking...

==> k8s-w1: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-w1: Setting the name of the VM: k8s-w1

==> k8s-w1: Clearing any previously set network interfaces...

==> k8s-w1: Preparing network interfaces based on configuration...

k8s-w1: Adapter 1: nat

k8s-w1: Adapter 2: hostonly

==> k8s-w1: Forwarding ports...

k8s-w1: 22 (guest) => 60001 (host) (adapter 1)

==> k8s-w1: Running 'pre-boot' VM customizations...

==> k8s-w1: Booting VM...

==> k8s-w1: Waiting for machine to boot. This may take a few minutes...

k8s-w1: SSH address: 127.0.0.1:60001

k8s-w1: SSH username: vagrant

k8s-w1: SSH auth method: private key

k8s-w1:

k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w1: this with a newly generated keypair for better security.

k8s-w1:

k8s-w1: Inserting generated public key within guest...

k8s-w1: Removing insecure key from the guest if it's present...

k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w1: Machine booted and ready!

==> k8s-w1: Checking for guest additions in VM...

==> k8s-w1: Setting hostname...

==> k8s-w1: Configuring and enabling network interfaces...

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250730-27828-km5kmk.sh

k8s-w1: >>>> Initial Config Start <<<<

k8s-w1: [TASK 1] Setting Profile & Bashrc

k8s-w1: [TASK 2] Disable AppArmor

k8s-w1: [TASK 3] Disable and turn off SWAP

k8s-w1: [TASK 4] Install Packages

k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w1: [TASK 6] Install Packages & Helm

k8s-w1: >>>> Initial Config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250730-27828-fmg78c.sh

k8s-w1: >>>> K8S Node config Start <<<<

k8s-w1: [TASK 1] K8S Controlplane Join

k8s-w1: >>>> K8S Node config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250730-27828-ila0lv.sh

k8s-w1: >>>> Route Add Config Start <<<<

k8s-w1: >>>> Route Add Config End <<<<

==> router: Cloning VM...

==> router: Matching MAC address for NAT networking...

==> router: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> router: Setting the name of the VM: router

==> router: Clearing any previously set network interfaces...

==> router: Preparing network interfaces based on configuration...

router: Adapter 1: nat

router: Adapter 2: hostonly

==> router: Forwarding ports...

router: 22 (guest) => 60009 (host) (adapter 1)

==> router: Running 'pre-boot' VM customizations...

==> router: Booting VM...

==> router: Waiting for machine to boot. This may take a few minutes...

router: SSH address: 127.0.0.1:60009

router: SSH username: vagrant

router: SSH auth method: private key

router: Warning: Connection reset. Retrying...

router:

router: Vagrant insecure key detected. Vagrant will automatically replace

router: this with a newly generated keypair for better security.

router:

router: Inserting generated public key within guest...

router: Removing insecure key from the guest if it's present...

router: Key inserted! Disconnecting and reconnecting using new SSH key...

==> router: Machine booted and ready!

==> router: Checking for guest additions in VM...

==> router: Setting hostname...

==> router: Configuring and enabling network interfaces...

==> router: Running provisioner: shell...

router: Running: /tmp/vagrant-shell20250730-27828-2x1jkp.sh

router: >>>> Initial Config Start <<<<

router: [TASK 1] Setting Profile & Bashrc

router: [TASK 2] Disable AppArmor

router: [TASK 3] Add Kernel setting - IP Forwarding

router: [TASK 4] Setting Dummy Interface

router: [TASK 5] Install Packages

router: [TASK 6] Install Apache

router: >>>> Initial Config End <<<<

2. Connect to the control plane node

vagrant ssh k8s-ctr

✅ Output

Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Wed Jul 30 02:46:22 PM KST 2025

System load: 0.28

Usage of /: 29.2% of 30.34GB

Memory usage: 51%

Swap usage: 0%

Processes: 217

Users logged in: 0

IPv4 address for eth0: 10.0.2.15

IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.

(⎈|HomeLab:N/A) root@k8s-ctr:~#

3. Verify SSH connectivity to the worker node

From the control plane, confirm that you can SSH into the worker node.

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w1 hostname

✅ Output

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

4. Check the cluster network CIDRs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

✅ Output

"--service-cluster-ip-range=10.96.0.0/16",

"--cluster-cidr=10.244.0.0/16",

5. Check node status and internal IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

✅ Output

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr Ready control-plane 7m38s v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w1 Ready <none> 5m38s v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

- Internal IPs: 192.168.10.100 (k8s-ctr) and 192.168.10.101 (k8s-w1)

6. Check Kubernetes IPAM and the pod network

(1) Pod CIDR per node

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

✅ Output

k8s-ctr 10.244.0.0/24

k8s-w1 10.244.1.0/24

This shows the Pod CIDR that kube-controller-manager assigned to each node.

(2) Pod CIDRs used by Cilium

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"10.244.0.0/24"

],

--

"podCIDRs": [

"10.244.1.0/24"

],

The CiliumNode resources show the Pod CIDR each node is using. These match the Kubernetes-assigned ranges above.

(3) Check the IPAM mode

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep ^ipam

✅ Output

ipam kubernetes

ipam-cilium-node-update-rate 15s

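In kubernetes mode, Kubernetes (kube-controller-manager) assigns each node's PodCIDR, and Cilium allocates pod IPs from within that range without changing it.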
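In kubernetes mode, Cilium should be using exactly the PodCIDRs that Kubernetes assigned, so the two listings above can be cross-checked. The sketch below assumes both inputs are `node<TAB>cidr` lines, like those produced by the jsonpath queries in the comments. `.spec.ipam.podCIDRs[0]` takes only the first CIDR of each CiliumNode. `compare_pod_cidrs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Join two "node<TAB>cidr" listings on the node name and flag any difference.
# $1: CIDRs from Kubernetes (.spec.podCIDR), $2: CIDRs from CiliumNode.
# Prints "<node> <k8s cidr> <cilium cidr> OK|MISMATCH" and returns 1 on any mismatch
# or when a node is missing from one side (shown as MISSING).
compare_pod_cidrs() {
  LC_ALL=C join -a1 -a2 -e MISSING -o 0,1.2,2.2 \
      <(LC_ALL=C sort -k1,1 "$1") <(LC_ALL=C sort -k1,1 "$2") |
    awk '{ status = ($2 == $3) ? "OK" : "MISMATCH"
           print $1, $2, $3, status
           if (status != "OK") bad = 1 }
         END { exit (bad ? 1 : 0) }'
}

# Usage on the control plane:
# compare_pod_cidrs \
#   <(kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}') \
#   <(kubectl get ciliumnodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.ipam.podCIDRs[0]}{"\n"}{end}')
```

In this lab both sides should report 10.244.0.0/24 for k8s-ctr and 10.244.1.0/24 for k8s-w1. A mismatch would suggest Cilium is not actually running in kubernetes mode.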

(4) Check Cilium endpoint IPs

Query the ciliumendpoints resources to see the actual IPs assigned to pods.

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

cilium-monitoring grafana-5c69859d9-wdb82 22795 ready 10.244.0.104

cilium-monitoring prometheus-6fc896bc5d-bxnd5 1213 ready 10.244.0.65

kube-system coredns-674b8bbfcf-9pxvx 28565 ready 10.244.0.199

kube-system coredns-674b8bbfcf-khjhq 28565 ready 10.244.0.59

kube-system hubble-relay-5dcd46f5c-5r79v 17061 ready 10.244.0.122

kube-system hubble-ui-76d4965bb6-xmdp8 2452 ready 10.244.0.80

local-path-storage local-path-provisioner-74f9666bc9-scg4s 56893 ready 10.244.0.253

- 10.244.0.x → control plane node (k8s-ctr)

- 10.244.1.x → worker node (k8s-w1)
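Mapping a pod IP to its node is a CIDR membership test against each node's PodCIDR. The minimal bash sketch below hard-codes the node/PodCIDR pairs from the earlier jsonpath output as sample data. `ip_to_int`, `ip_in_cidr` and `node_for_ip` are hypothetical helper names, not part of kubectl or Cilium.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IP ($1) falls inside CIDR ($2), e.g. 10.244.1.96 in 10.244.1.0/24.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Sample data: node -> podCIDR pairs from the `kubectl get nodes -o jsonpath=...` output above.
NODE_CIDRS="k8s-ctr 10.244.0.0/24
k8s-w1 10.244.1.0/24"

# Print the node whose podCIDR contains the given pod IP; fail if none does.
node_for_ip() {
  local node cidr
  while read -r node cidr; do
    if ip_in_cidr "$1" "$cidr"; then echo "$node"; return 0; fi
  done <<< "$NODE_CIDRS"
  return 1
}

for ip in 10.244.0.104 10.244.1.96; do
  echo "$ip -> $(node_for_ip "$ip")"
done
```

Running the sketch prints `10.244.0.104 -> k8s-ctr` and `10.244.1.96 -> k8s-w1`. On the cluster, NODE_CIDRS could be filled from the same jsonpath command instead of being hard-coded.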

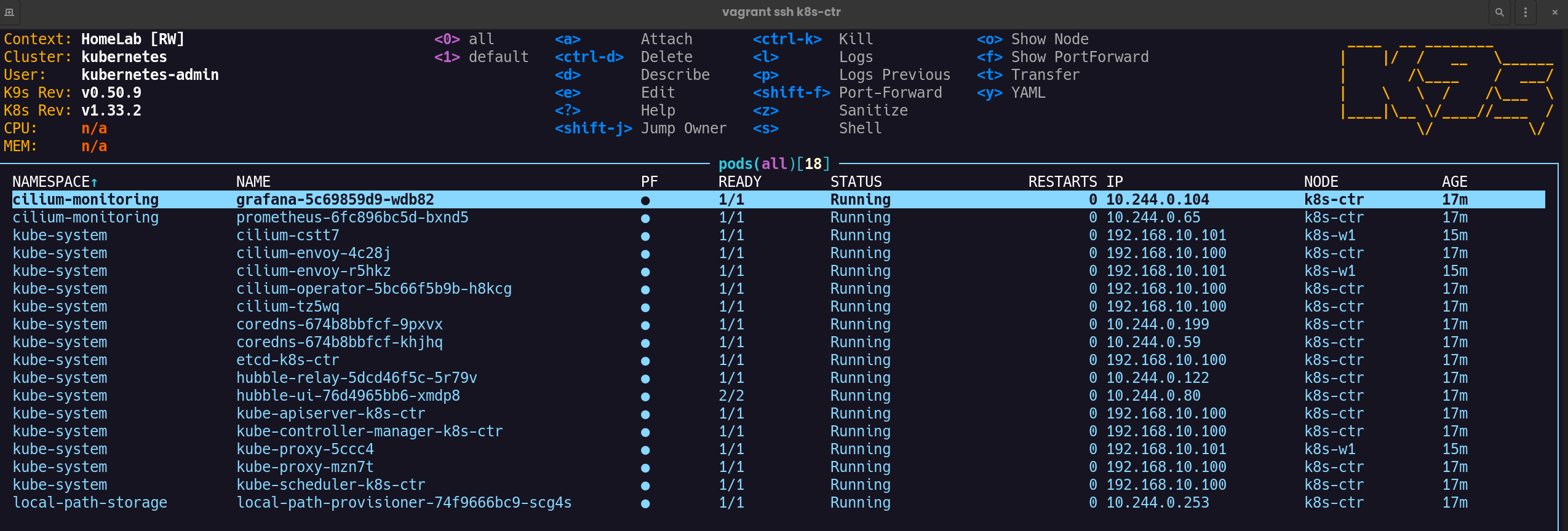

🐶 Installing and Running k9s

1. Install k9s

(⎈|HomeLab:N/A) root@k8s-ctr:~# wget https://github.com/derailed/k9s/releases/latest/download/k9s_linux_amd64.deb -O /tmp/k9s_linux_amd64.deb

apt install /tmp/k9s_linux_amd64.deb

✅ Output

--2025-07-30 14:55:17-- https://github.com/derailed/k9s/releases/latest/download/k9s_linux_amd64.deb

Resolving github.com (github.com)... 20.200.245.247

Connecting to github.com (github.com)|20.200.245.247|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github.com/derailed/k9s/releases/download/v0.50.9/k9s_linux_amd64.deb [following]

--2025-07-30 14:55:17-- https://github.com/derailed/k9s/releases/download/v0.50.9/k9s_linux_amd64.deb

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://release-assets.githubusercontent.com/github-production-release-asset/167596393/68b2cb87-c3c4-4c08-8ebe-b8aaa51894f5?sp=r&sv=2018-11-09&sr=b&spr=https&se=2025-07-30T06%3A41%3A09Z&rscd=attachment%3B+filename%3Dk9s_linux_amd64.deb&rsct=application%2Foctet-stream&skoid=96c2d410-5711-43a1-aedd-ab1947aa7ab0&sktid=398a6654-997b-47e9-b12b-9515b896b4de&skt=2025-07-30T05%3A40%3A14Z&ske=2025-07-30T06%3A41%3A09Z&sks=b&skv=2018-11-09&sig=JeO%2BpcQvqHA9Cn%2F9LNC%2FVbGkvi%2BA2WVntygiGkgYwwk%3D&jwt=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJnaXRodWIuY29tIiwiYXVkIjoicmVsZWFzZS1hc3NldHMuZ2l0aHVidXNlcmNvbnRlbnQuY29tIiwia2V5Ijoia2V5MSIsImV4cCI6MTc1Mzg1NTIyMywibmJmIjoxNzUzODU0OTIzLCJwYXRoIjoicmVsZWFzZWFzc2V0cHJvZHVjdGlvbi5ibG9iLmNvcmUud2luZG93cy5uZXQifQ.lj7UoO3dvLsG-a_0jHncvKP_C05qv3_v8-1Ne7RIpK0&response-content-disposition=attachment%3B%20filename%3Dk9s_linux_amd64.deb&response-content-type=application%2Foctet-stream [following]

--2025-07-30 14:55:18-- https://release-assets.githubusercontent.com/github-production-release-asset/167596393/68b2cb87-c3c4-4c08-8ebe-b8aaa51894f5?sp=r&sv=2018-11-09&sr=b&spr=https&se=2025-07-30T06%3A41%3A09Z&rscd=attachment%3B+filename%3Dk9s_linux_amd64.deb&rsct=application%2Foctet-stream&skoid=96c2d410-5711-43a1-aedd-ab1947aa7ab0&sktid=398a6654-997b-47e9-b12b-9515b896b4de&skt=2025-07-30T05%3A40%3A14Z&ske=2025-07-30T06%3A41%3A09Z&sks=b&skv=2018-11-09&sig=JeO%2BpcQvqHA9Cn%2F9LNC%2FVbGkvi%2BA2WVntygiGkgYwwk%3D&jwt=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJnaXRodWIuY29tIiwiYXVkIjoicmVsZWFzZS1hc3NldHMuZ2l0aHVidXNlcmNvbnRlbnQuY29tIiwia2V5Ijoia2V5MSIsImV4cCI6MTc1Mzg1NTIyMywibmJmIjoxNzUzODU0OTIzLCJwYXRoIjoicmVsZWFzZWFzc2V0cHJvZHVjdGlvbi5ibG9iLmNvcmUud2luZG93cy5uZXQifQ.lj7UoO3dvLsG-a_0jHncvKP_C05qv3_v8-1Ne7RIpK0&response-content-disposition=attachment%3B%20filename%3Dk9s_linux_amd64.deb&response-content-type=application%2Foctet-stream

Resolving release-assets.githubusercontent.com (release-assets.githubusercontent.com)... 185.199.110.133, 185.199.108.133, 185.199.109.133, ...

Connecting to release-assets.githubusercontent.com (release-assets.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 38258230 (36M) [application/octet-stream]

Saving to: ‘/tmp/k9s_linux_amd64.deb’

/tmp/k9s_linux_amd64.de 100%[==============================>] 36.49M 17.9MB/s in 2.0s

2025-07-30 14:55:20 (17.9 MB/s) - ‘/tmp/k9s_linux_amd64.deb’ saved [38258230/38258230]

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

Note, selecting 'k9s' instead of '/tmp/k9s_linux_amd64.deb'

The following NEW packages will be installed:

k9s

0 upgraded, 1 newly installed, 0 to remove and 175 not upgraded.

Need to get 0 B/38.3 MB of archives.

After this operation, 124 MB of additional disk space will be used.

Get:1 /tmp/k9s_linux_amd64.deb k9s amd64 0.50.9 [38.3 MB]

Selecting previously unselected package k9s.

(Reading database ... 51864 files and directories currently installed.)

Preparing to unpack /tmp/k9s_linux_amd64.deb ...

Unpacking k9s (0.50.9) ...

Setting up k9s (0.50.9) ...

Scanning processes...

Scanning linux images...

Running kernel seems to be up-to-date.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

2. Run k9s

(⎈|HomeLab:N/A) root@k8s-ctr:~# k9s

🌐 Cilium IPAM Lab

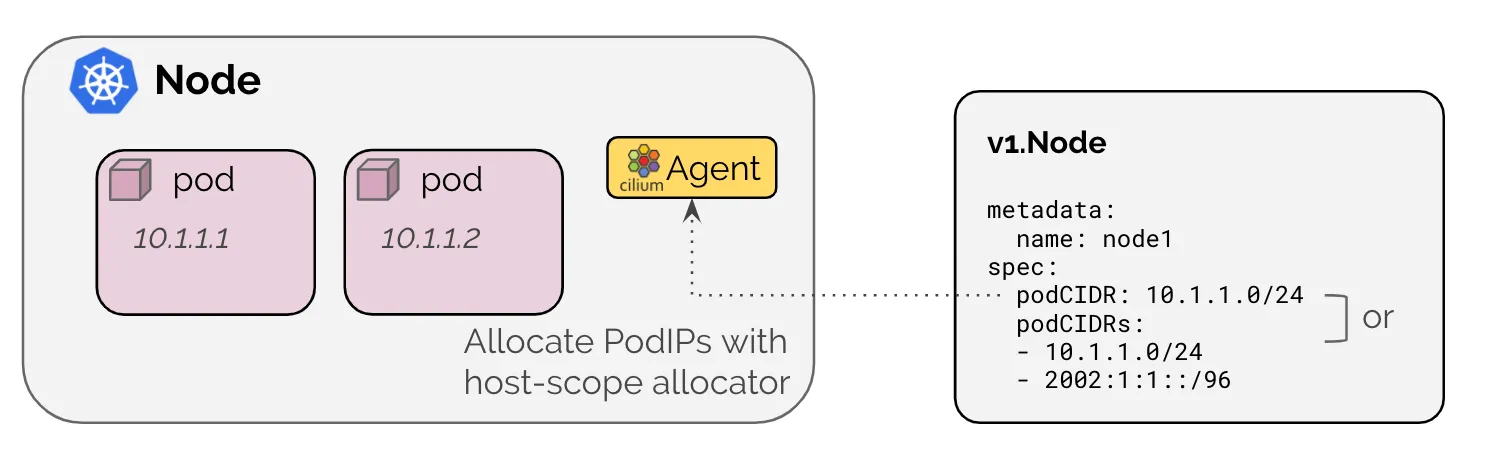

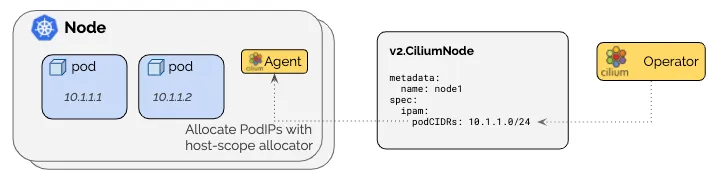

1. IPAM concepts and Cilium modes

Kubernetes Host Scope

- Each node uses a fixed PodCIDR

- kube-controller-manager allocates and manages the IP ranges

- Each node is assigned a predefined CIDR block

Cilium Cluster Scope

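- Cilium manages its own IP pool and hands out per-node PodCIDRs from it (the Cilium operator records them in CiliumNode resources)

- This mode (cluster-pool) is the default when no IPAM mode is configured

- Separately, Cilium offers IPAM modes that integrate with external or cloud IPAM, such as AWS ENI and Azure IPAM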

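2. Multiple CIDRs and Multi-pool limitations

Configuring multiple CIDRs in a cluster

- Cilium supports multiple CIDR blocks within a cluster

- Limitation: Multi-pool is not supported in tunnel-based routing modes such as vxlan and geneve

- Scalability: if pod demand on a particular node grows and its CIDR runs out, an additional CIDR can be assigned to that node alone, so capacity can be extended flexibly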

3. Check the Pod CIDR of each node

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

✅ Output

k8s-ctr 10.244.0.0/24

k8s-w1 10.244.1.0/24

- With Kubernetes Host Scope IPAM, each node is automatically assigned a /24 CIDR block. /24 is kube-controller-manager's default node mask size for IPv4.
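The numbers come from simple prefix arithmetic. A /16 cluster CIDR split into /24 node blocks gives 2^(24-16) = 256 blocks, each holding 2^(32-24) = 256 addresses. `ipam_capacity` below is a hypothetical helper that reports raw address counts. Slightly fewer addresses per block are usable for pods, because a few are reserved (for example, the router IP Cilium assigns to `cilium_host`).

```shell
#!/usr/bin/env bash
# Host-scope IPAM capacity: a cluster CIDR of /C carved into per-node /N blocks
# yields 2^(N-C) node blocks of 2^(32-N) addresses each (IPv4, raw address count).
ipam_capacity() {
  local cluster_prefix=$1 node_prefix=$2
  (( node_prefix >= cluster_prefix && node_prefix <= 32 )) || return 1
  echo "$(( 1 << (node_prefix - cluster_prefix) )) $(( 1 << (32 - node_prefix) ))"
}

# This lab: --cluster-cidr=10.244.0.0/16 split into /24 blocks per node.
read -r blocks per_node <<< "$(ipam_capacity 16 24)"
echo "node blocks: $blocks, addresses per node: $per_node"   # node blocks: 256, addresses per node: 256
```

This shows the trade-off in choosing the per-node mask. A larger node prefix such as /26 allows more nodes (1024) but fewer pod addresses per node (64).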

4. Check the kube-controller-manager settings

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe pod -n kube-system kube-controller-manager-k8s-ctr

✅ Output

Name: kube-controller-manager-k8s-ctr

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: k8s-ctr/192.168.10.100

Start Time: Wed, 30 Jul 2025 14:41:28 +0900

Labels: component=kube-controller-manager

tier=control-plane

Annotations: kubernetes.io/config.hash: 2da908bf08a691927af74a336851f6e1

kubernetes.io/config.mirror: 2da908bf08a691927af74a336851f6e1

kubernetes.io/config.seen: 2025-07-30T14:41:20.396308103+09:00

kubernetes.io/config.source: file

Status: Running

SeccompProfile: RuntimeDefault

IP: 192.168.10.100

IPs:

IP: 192.168.10.100

Controlled By: Node/k8s-ctr

Containers:

kube-controller-manager:

Container ID: containerd://fb984494600e1c9a3755783595ee377a07d82efade606d941f2c162a604eed32

Image: registry.k8s.io/kube-controller-manager:v1.33.2

Image ID: registry.k8s.io/kube-controller-manager@sha256:2236e72a4be5dcc9c04600353ff8849db1557f5364947c520ff05471ae719081

Port: <none>

Host Port: <none>

Command:

kube-controller-manager

--allocate-node-cidrs=true

--authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

--authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

--bind-address=127.0.0.1

--client-ca-file=/etc/kubernetes/pki/ca.crt

--cluster-cidr=10.244.0.0/16

--cluster-name=kubernetes

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt

--cluster-signing-key-file=/etc/kubernetes/pki/ca.key

--controllers=*,bootstrapsigner,tokencleaner

--kubeconfig=/etc/kubernetes/controller-manager.conf

--leader-elect=true

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

--root-ca-file=/etc/kubernetes/pki/ca.crt

--service-account-private-key-file=/etc/kubernetes/pki/sa.key

--service-cluster-ip-range=10.96.0.0/16

--use-service-account-credentials=true

State: Running

Started: Wed, 30 Jul 2025 14:41:24 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 200m

Liveness: http-get https://127.0.0.1:10257/healthz delay=10s timeout=15s period=10s #success=1 #failure=8

Startup: http-get https://127.0.0.1:10257/healthz delay=10s timeout=15s period=10s #success=1 #failure=24

Environment: <none>

Mounts:

/etc/ca-certificates from etc-ca-certificates (ro)

/etc/kubernetes/controller-manager.conf from kubeconfig (ro)

/etc/kubernetes/pki from k8s-certs (ro)

/etc/ssl/certs from ca-certs (ro)

/usr/libexec/kubernetes/kubelet-plugins/volume/exec from flexvolume-dir (rw)

/usr/local/share/ca-certificates from usr-local-share-ca-certificates (ro)

/usr/share/ca-certificates from usr-share-ca-certificates (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

ca-certs:

Type: HostPath (bare host directory volume)

Path: /etc/ssl/certs

HostPathType: DirectoryOrCreate

etc-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /etc/ca-certificates

HostPathType: DirectoryOrCreate

flexvolume-dir:

Type: HostPath (bare host directory volume)

Path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec

HostPathType: DirectoryOrCreate

k8s-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki

HostPathType: DirectoryOrCreate

kubeconfig:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/controller-manager.conf

HostPathType: FileOrCreate

usr-local-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/local/share/ca-certificates

HostPathType: DirectoryOrCreate

usr-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/share/ca-certificates

HostPathType: DirectoryOrCreate

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

Events: <none>

- --allocate-node-cidrs=true: enables automatic per-node CIDR allocation

- --cluster-cidr=10.244.0.0/16: Pod IP range for the whole cluster

- --service-cluster-ip-range=10.96.0.0/16: Service IP range
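To see only these IPAM-related flags without scrolling through the `describe` output, you can grep the static pod manifest that kubeadm writes under `/etc/kubernetes/manifests/`. `kcm_ipam_flags` below is a hypothetical helper. It reports a default of 24 for `--node-cidr-mask-size` when the flag is absent, which is the upstream IPv4 default and matches the /24 blocks seen above.

```shell
#!/usr/bin/env bash
# Print the IPAM-related flags from a kube-controller-manager static pod manifest.
# Default path is where kubeadm writes the manifest on the control plane node.
kcm_ipam_flags() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-controller-manager.yaml}
  grep -oE -- '--(allocate-node-cidrs|cluster-cidr|service-cluster-ip-range|node-cidr-mask-size)=[^[:space:]"]+' \
    "$manifest" || return 1
  # No explicit mask size: kube-controller-manager falls back to /24 for IPv4.
  grep -q -- '--node-cidr-mask-size' "$manifest" ||
    printf '%s\n' "--node-cidr-mask-size=24 (default, not set)"
}
```

Run `kcm_ipam_flags` as root on k8s-ctr. It should list the three flags above plus the default mask-size line.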

5. Check the Pod CIDRs recognized by Cilium

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"10.244.0.0/24"

],

--

"podCIDRs": [

"10.244.1.0/24"

],

- Control plane node: 10.244.0.0/24

- Worker node: 10.244.1.0/24

6. Check Cilium Endpoint IP allocation

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

cilium-monitoring grafana-5c69859d9-wdb82 22795 ready 10.244.0.104

cilium-monitoring prometheus-6fc896bc5d-bxnd5 1213 ready 10.244.0.65

kube-system coredns-674b8bbfcf-9pxvx 28565 ready 10.244.0.199

kube-system coredns-674b8bbfcf-khjhq 28565 ready 10.244.0.59

kube-system hubble-relay-5dcd46f5c-5r79v 17061 ready 10.244.0.122

kube-system hubble-ui-76d4965bb6-xmdp8 2452 ready 10.244.0.80

local-path-storage local-path-provisioner-74f9666bc9-scg4s 56893 ready 10.244.0.253

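- Every pod received an IP from the control plane node's CIDR range (10.244.0.0/24), because all of these pods are running on k8s-ctr

- IP allocation is working correctly, and every endpoint is in the ready state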
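Checking that every endpoint is ready is easier to automate than to eyeball. The sketch below reads `kubectl get ciliumendpoints -A` output on stdin. It assumes the default columns, where ENDPOINT STATE is the 4th whitespace-separated field in each data row. `summarize_endpoints` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Summarize `kubectl get ciliumendpoints -A` output read from stdin.
# Assumes default columns: NAMESPACE NAME IDENTITY STATE IPV4 per data row.
# Prints "<state> <count>" per state; returns 1 if any endpoint is not "ready".
summarize_endpoints() {
  awk 'NR > 1 && NF >= 4 { total++; state[$4]++ }
       END {
         for (s in state) print s, state[s]
         exit (state["ready"] == total ? 0 : 1)
       }'
}

# Sample input: the endpoint list captured above.
summarize_endpoints <<'EOF'
NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6
cilium-monitoring grafana-5c69859d9-wdb82 22795 ready 10.244.0.104
cilium-monitoring prometheus-6fc896bc5d-bxnd5 1213 ready 10.244.0.65
kube-system coredns-674b8bbfcf-9pxvx 28565 ready 10.244.0.199
kube-system coredns-674b8bbfcf-khjhq 28565 ready 10.244.0.59
kube-system hubble-relay-5dcd46f5c-5r79v 17061 ready 10.244.0.122
kube-system hubble-ui-76d4965bb6-xmdp8 2452 ready 10.244.0.80
local-path-storage local-path-provisioner-74f9666bc9-scg4s 56893 ready 10.244.0.253
EOF
```

For the sample input this prints `ready 7`. On the cluster you would pipe the output directly, e.g. `kubectl get ciliumendpoints -A | summarize_endpoints`.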

🦈 Deploying and Checking a Sample Application & Termshark

1. Deploy the sample application (webpod)

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webpod   # must match the pod label (app: webpod) so replicas spread across nodes
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# Result
deployment.apps/webpod created
service/webpod created

2. Deploy a pod for curl testing (curl-pod)

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Result
pod/curl-pod created

nodeName: k8s-ctr pins the pod explicitly to the control plane node.

3. Check the deployed resources

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep webpod -owide

✅ Output

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/webpod 2/2 2 2 97s webpod traefik/whoami app=webpod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/webpod ClusterIP 10.96.152.212 <none> 80/TCP 97s app=webpod

NAME ENDPOINTS AGE

endpoints/webpod 10.244.0.1:80,10.244.1.96:80 96s

- Deployment: both pods were created and are running

- Service: type ClusterIP, assigned 10.96.152.212

- Endpoints: 10.244.0.1:80 (pod on the control plane node) and 10.244.1.96:80 (pod on the worker node)

4. Query endpoint information

(1) Check the EndpointSlice

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get endpointslices -l app=webpod

✅ Output

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

webpod-2wrvt IPv4 80 10.244.0.1,10.244.1.96 118s

(2) Cilium Endpoint details

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium-dbg endpoint list

✅ Output

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

147 Disabled Disabled 28565 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 10.244.0.199 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

318 Disabled Disabled 5580 k8s:app=curl 10.244.0.27 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

853 Disabled Disabled 2452 k8s:app.kubernetes.io/name=hubble-ui 10.244.0.80 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

1009 Disabled Disabled 12497 k8s:app=webpod 10.244.0.1 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

1043 Disabled Disabled 56893 k8s:app=local-path-provisioner 10.244.0.253 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

1452 Disabled Disabled 28565 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 10.244.0.59 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

1680 Disabled Disabled 1213 k8s:app=prometheus 10.244.0.65 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s

k8s:io.kubernetes.pod.namespace=cilium-monitoring

1694 Disabled Disabled 17061 k8s:app.kubernetes.io/name=hubble-relay 10.244.0.122 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

2772 Disabled Disabled 22795 k8s:app=grafana 10.244.0.104 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=cilium-monitoring

3358 Disabled Disabled 1 k8s:node-role.kubernetes.io/control-plane ready

k8s:node.kubernetes.io/exclude-from-external-load-balancers

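reserved:host

- kubectl exec ds/cilium runs in a single agent pod (here the one on k8s-ctr). This list therefore shows only that node's local endpoints: curl-pod and the webpod replica at 10.244.0.1 appear, but the replica on k8s-w1 (10.244.1.96) does not.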

5. Service communication test

(1) Single request

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- curl webpod | grep Hostname

✅ Output

Hostname: webpod-697b545f57-bpzn9

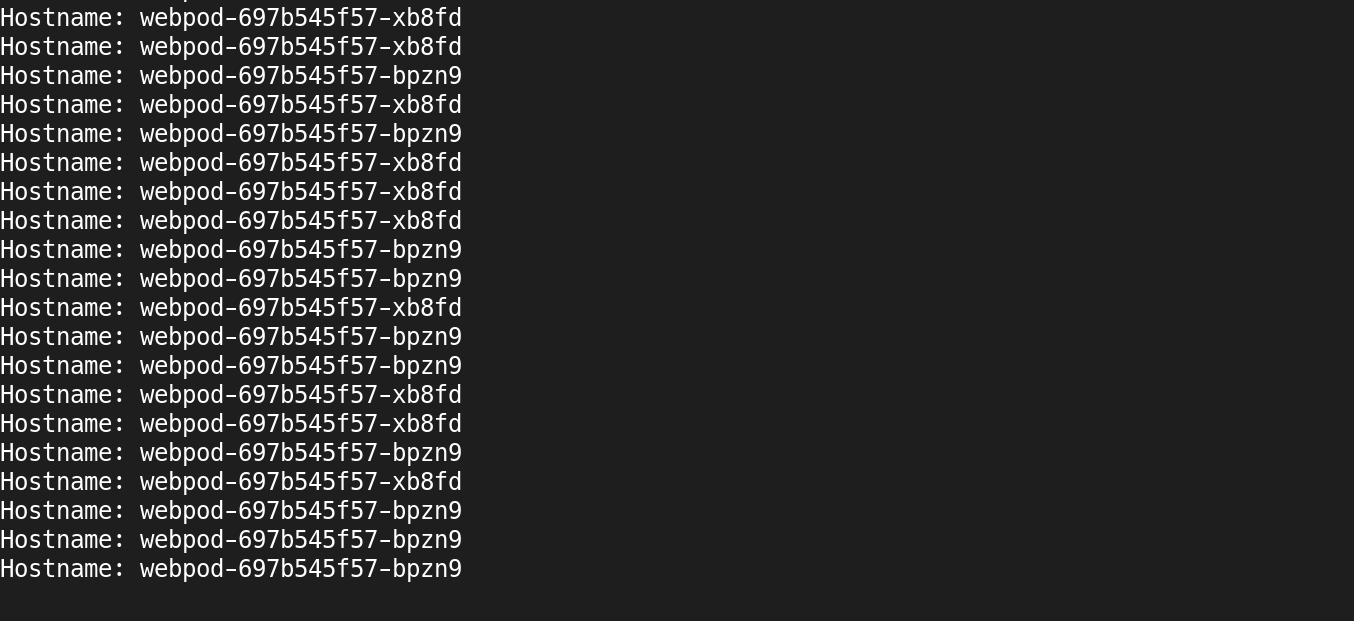

(2) Verify load balancing with repeated requests

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s webpod | grep Hostname; sleep 1; done'

✅ Output

Hostname: webpod-697b545f57-xb8fd

Hostname: webpod-697b545f57-xb8fd

Hostname: webpod-697b545f57-bpzn9

Hostname: webpod-697b545f57-bpzn9

Hostname: webpod-697b545f57-bpzn9

Hostname: webpod-697b545f57-bpzn9

Hostname: webpod-697b545f57-bpzn9

Hostname: webpod-697b545f57-xb8fd

...

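- Traffic is distributed across two different pods (bpzn9, xb8fd). The backend is chosen per connection, so the split is uneven rather than strictly alternating.

- DNS-based service discovery works: the pod reaches the service by its name, webpod.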
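Tallying the loop's output per backend shows the split more clearly than reading it line by line. `tally_backends` below is a hypothetical helper that counts the `Hostname:` lines. For the eight responses above it reports bpzn9 with 5 and xb8fd with 3.

```shell
#!/usr/bin/env bash
# Count how many responses each backend pod served, given the "Hostname: ..."
# lines printed by the curl loop. Reads loop output on stdin; prints "<pod> <count>".
tally_backends() {
  grep -o 'Hostname: [^[:space:]]*' |
    awk '{ count[$2]++ } END { for (pod in count) print pod, count[pod] }' |
    sort
}

# Sample input: the eight responses captured above.
# Prints:
#   webpod-697b545f57-bpzn9 5
#   webpod-697b545f57-xb8fd 3
tally_backends <<'EOF'
Hostname: webpod-697b545f57-xb8fd
Hostname: webpod-697b545f57-xb8fd
Hostname: webpod-697b545f57-bpzn9
Hostname: webpod-697b545f57-bpzn9
Hostname: webpod-697b545f57-bpzn9
Hostname: webpod-697b545f57-bpzn9
Hostname: webpod-697b545f57-bpzn9
Hostname: webpod-697b545f57-xb8fd
EOF
```

On the cluster, a larger sample gives a better picture, e.g. `kubectl exec curl-pod -- sh -c 'for i in $(seq 100); do curl -s webpod | grep Hostname; done' | tally_backends`. Drop `-it` here, since the output is piped.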

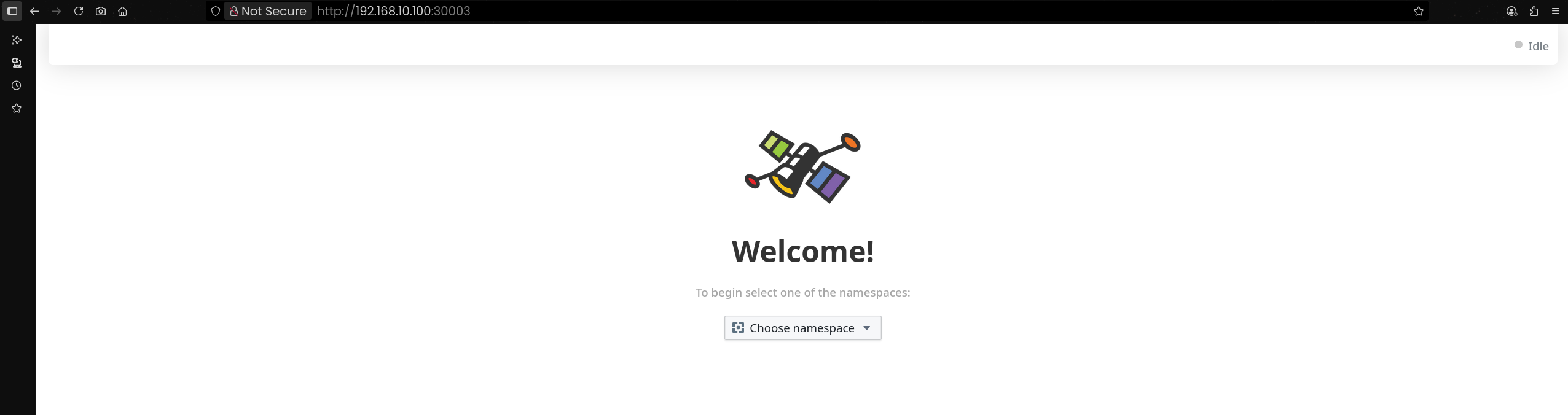

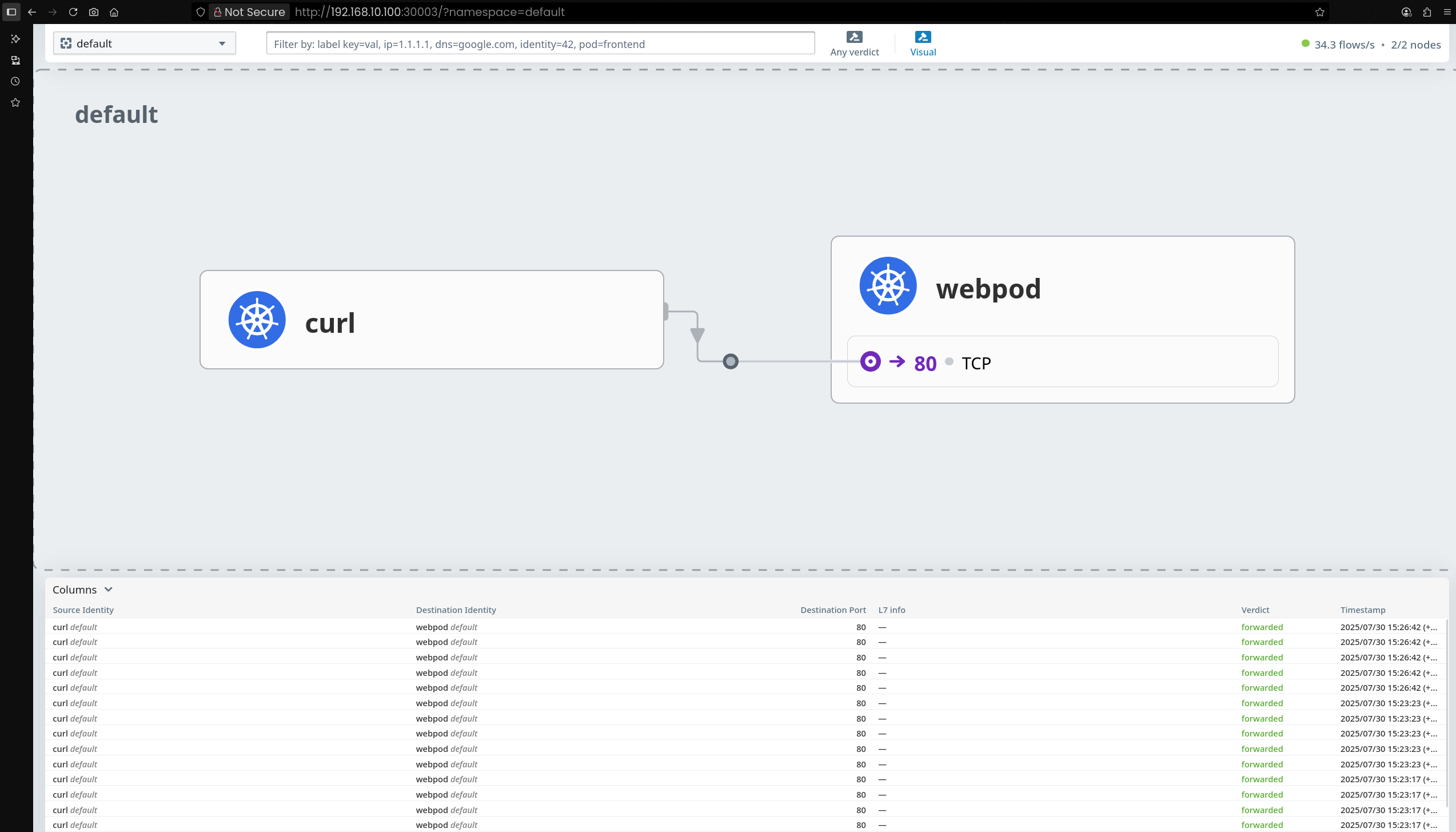

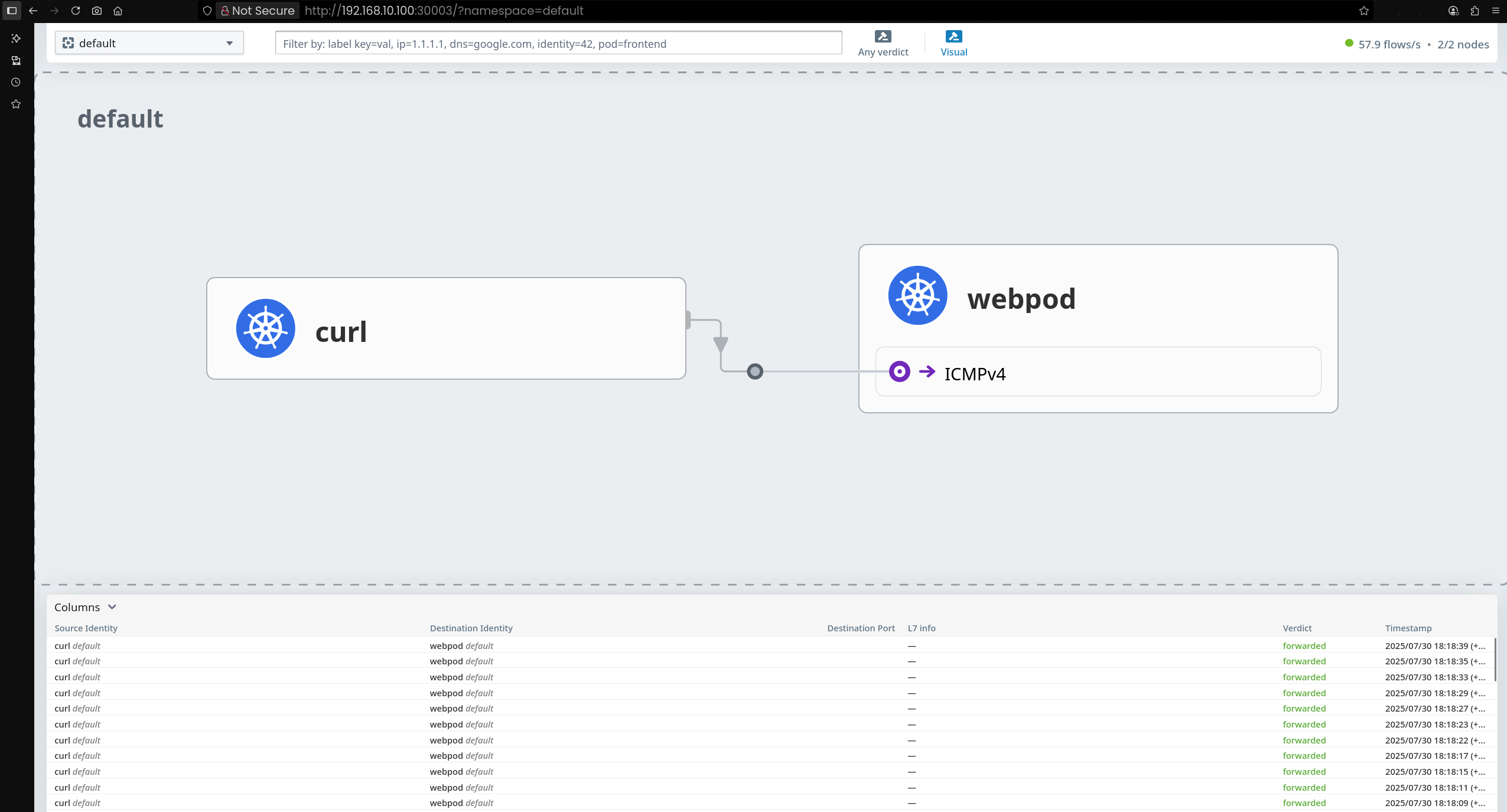

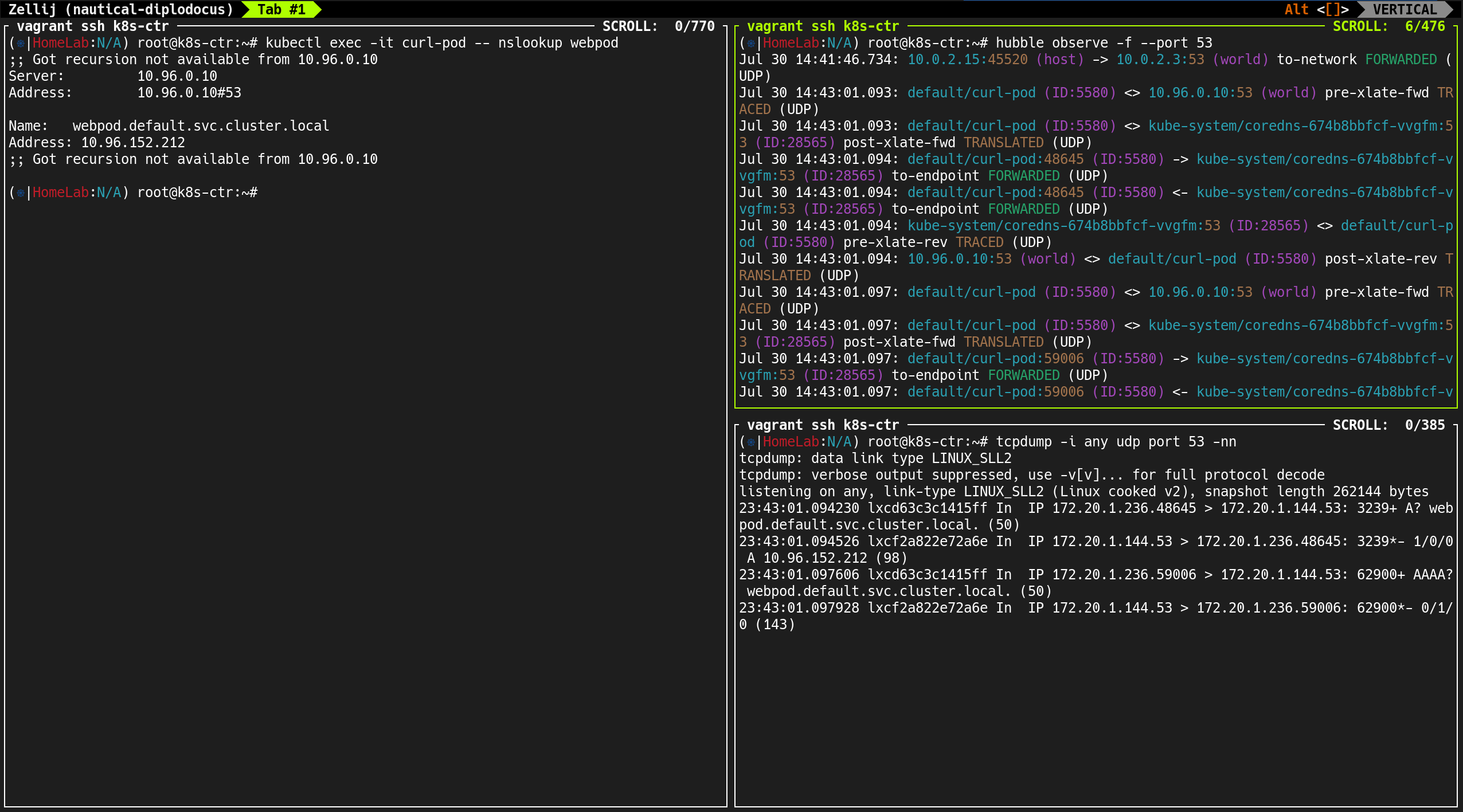

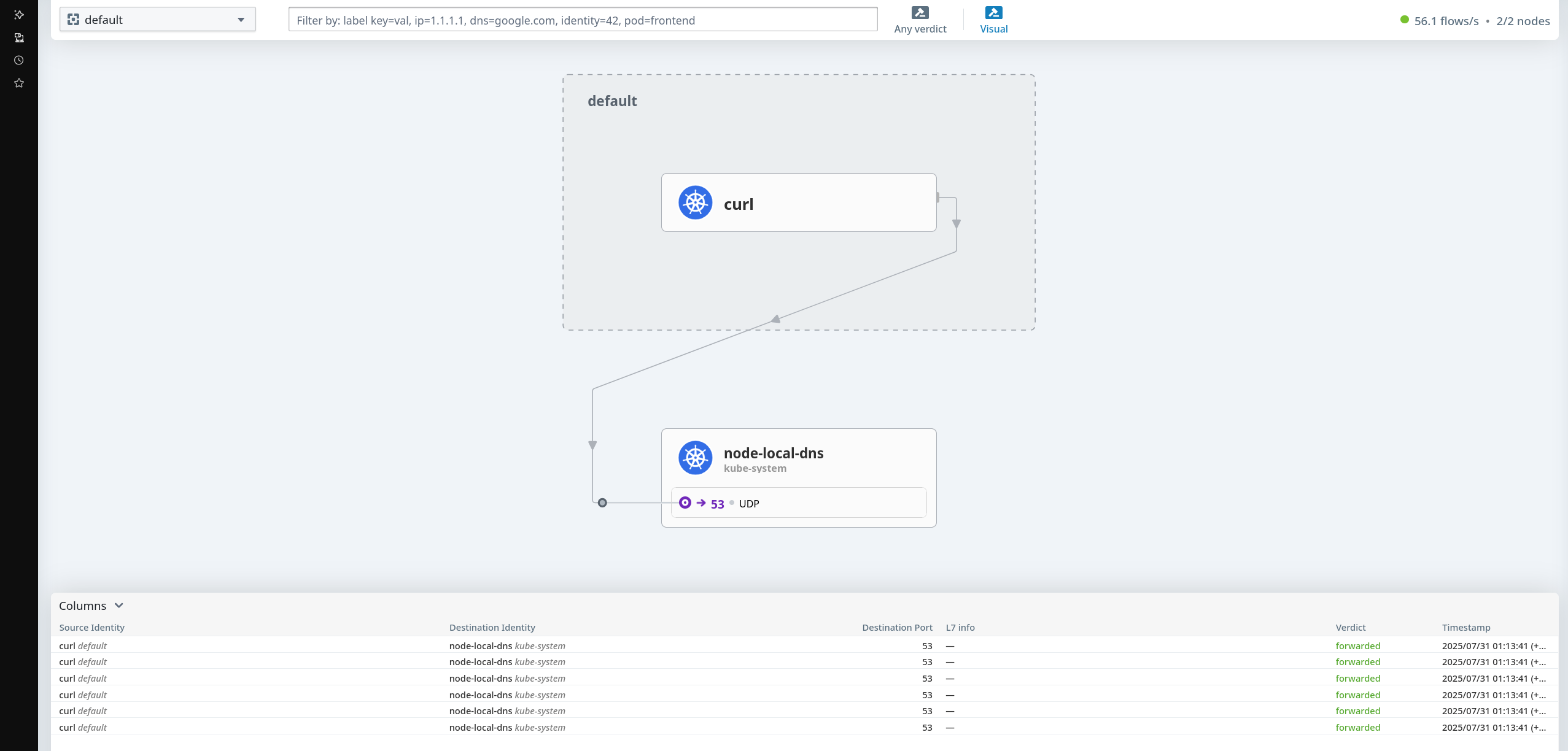

6. Tracing flows with Hubble

(1) Find the Hubble UI address

(⎈|HomeLab:N/A) root@k8s-ctr:~# NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

echo -e "http://$NODEIP:30003"

✅ Output

http://192.168.10.100:30003

(2) Generate continuous curl requests

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s webpod | grep Hostname; sleep 1; done'

- You can see curl-pod reaching the webpod service by name in the default namespace.

(3) Port-forward Hubble Relay

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

✅ Output

[1] 10026

ℹ️ Hubble Relay is available at 127.0.0.1:4245

- The Relay's gRPC API is reachable on localhost port 4245
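`cilium hubble port-forward&` runs in the background, so a `hubble observe` started right afterwards can race the tunnel. The sketch below waits until localhost:4245 accepts TCP connections. It relies on bash's `/dev/tcp` pseudo-device and coreutils `timeout`. `wait_for_port` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Wait until HOST:PORT accepts a TCP connection, trying about once per second.
# Uses bash's /dev/tcp pseudo-device plus coreutils `timeout`; returns 1 after MAX tries.
wait_for_port() {
  local host=$1 port=$2 max=${3:-10} i
  for (( i = 0; i < max; i++ )); do
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage on k8s-ctr:
#   cilium hubble port-forward &
#   wait_for_port 127.0.0.1 4245 15 && hubble observe -f --protocol tcp --pod curl-pod
```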

(4) Monitor network flows in real time

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --protocol tcp --pod curl-pod

✅ Output

Jul 30 06:30:30.990: default/curl-pod:53176 (ID:5580) <- default/webpod-697b545f57-xb8fd:80 (ID:12497) to-network FORWARDED (TCP Flags: ACK, FIN)

Jul 30 06:30:30.990: default/curl-pod:53176 (ID:5580) -> default/webpod-697b545f57-xb8fd:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:32.254: default/curl-pod (ID:5580) <> 10.96.152.212:80 (world) pre-xlate-fwd TRACED (TCP)

Jul 30 06:30:32.254: default/curl-pod (ID:5580) <> default/webpod-697b545f57-bpzn9:80 (ID:12497) post-xlate-fwd TRANSLATED (TCP)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: SYN, ACK)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:32.254: default/curl-pod:58930 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:32.255: default/curl-pod:58930 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 30 06:30:32.255: default/curl-pod:58930 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:32.256: default/curl-pod:58930 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:32.256: default/curl-pod:58930 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 30 06:30:32.257: default/curl-pod:58930 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 30 06:30:32.257: default/curl-pod:58930 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 30 06:30:32.257: default/curl-pod:58930 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:33.263: default/curl-pod (ID:5580) <> 10.96.152.212:80 (world) pre-xlate-fwd TRACED (TCP)

Jul 30 06:30:33.263: default/curl-pod (ID:5580) <> default/webpod-697b545f57-bpzn9:80 (ID:12497) post-xlate-fwd TRANSLATED (TCP)

Jul 30 06:30:33.263: default/curl-pod:58942 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 30 06:30:33.263: default/curl-pod:58942 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: SYN, ACK)

Jul 30 06:30:33.263: default/curl-pod:58942 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:33.264: default/curl-pod:58942 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:33.264: default/curl-pod:58942 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 30 06:30:33.264: default/curl-pod:58942 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:33.264: default/curl-pod:58942 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) <> default/webpod-697b545f57-bpzn9 (ID:12497) pre-xlate-rev TRACED (TCP)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) <- default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 30 06:30:33.265: default/curl-pod:58942 (ID:5580) -> default/webpod-697b545f57-bpzn9:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:34.018: default/curl-pod:53190 (ID:5580) -> default/webpod-697b545f57-xb8fd:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 30 06:30:34.018: default/curl-pod:53190 (ID:5580) <- default/webpod-697b545f57-xb8fd:80 (ID:12497) to-network FORWARDED (TCP Flags: SYN, ACK)

Jul 30 06:30:34.018: default/curl-pod:53190 (ID:5580) -> default/webpod-697b545f57-xb8fd:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 30 06:30:34.018: default/curl-pod:53190 (ID:5580) -> default/webpod-697b545f57-xb8fd:80 (ID:12497) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

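- 10.96.152.212:80: the ClusterIP Service address (labeled world in Hubble)

- pre-xlate-fwd: traced before translation, i.e. the Service IP the socket asked for, before the socket-level load balancer rewrites it

- post-xlate-fwd: traced after translation, now pointing at the actual backend Pod IP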
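The pre-xlate-fwd and post-xlate-fwd events make it possible to see which backend each Service connection was sent to. As a rough offline sketch, assuming the `hubble observe` text format shown above (on a post-xlate-fwd line, the field after `<>` is the backend Pod and port), the backends could be tallied like this. Only connections that produced a post-xlate-fwd event are counted, so treat it as a quick check rather than an exact load-balancing report; `count_backends` is a hypothetical helper.

```sh
# count_backends (hypothetical helper): tally the backend Pod chosen for each
# Service connection, using Hubble's "post-xlate-fwd TRANSLATED" lines.
# Assumes the text format of `hubble observe` shown above, read from stdin.
count_backends() {
  awk '/post-xlate-fwd TRANSLATED/ {
    for (i = 1; i < NF; i++)
      if ($i == "<>") {
        backend = $(i + 1)
        sub(/:[0-9]+$/, "", backend)   # drop the ":80" port suffix
        n[backend]++
        break
      }
  }
  END { for (b in n) print n[b], b }' | sort -k2
}
```

For example: `hubble observe --pod curl-pod --last 200 | count_backends`.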

TCP connection lifecycle

TCP Flags: SYN # connection request

TCP Flags: SYN, ACK # connection accepted

TCP Flags: ACK # handshake completed

TCP Flags: ACK, PSH # HTTP data transfer

TCP Flags: ACK, FIN # connection teardown

7. Check the Service information

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get svc

✅ Output

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 57m

webpod ClusterIP 10.96.152.212 <none> 80/TCP 19m

- The ClusterIP of the webpod Service, 10.96.152.212, matches the Service address in the Hubble log

- Cilium's socket-level load balancer translates the Service IP into a backend Pod IP automatically

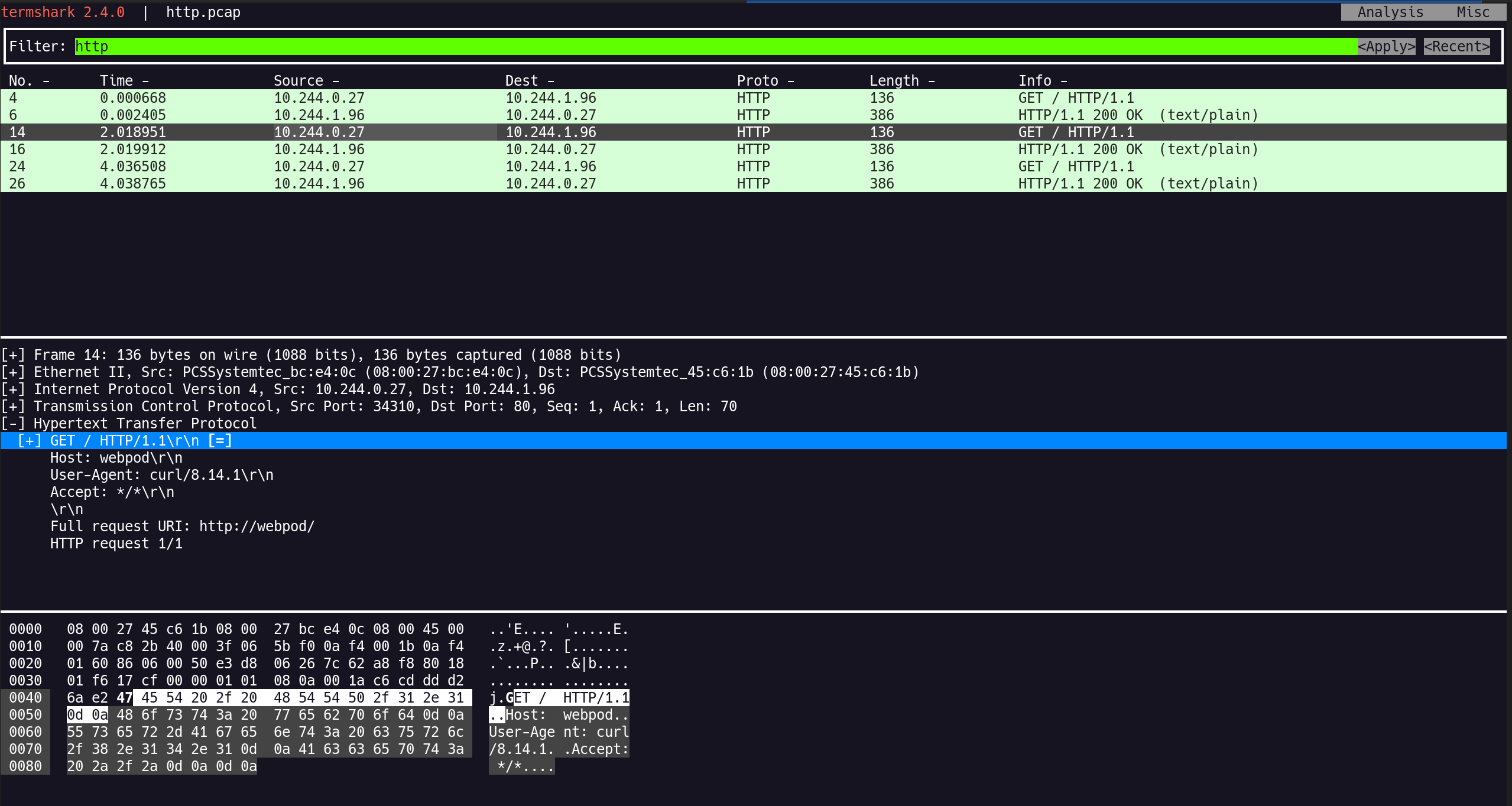

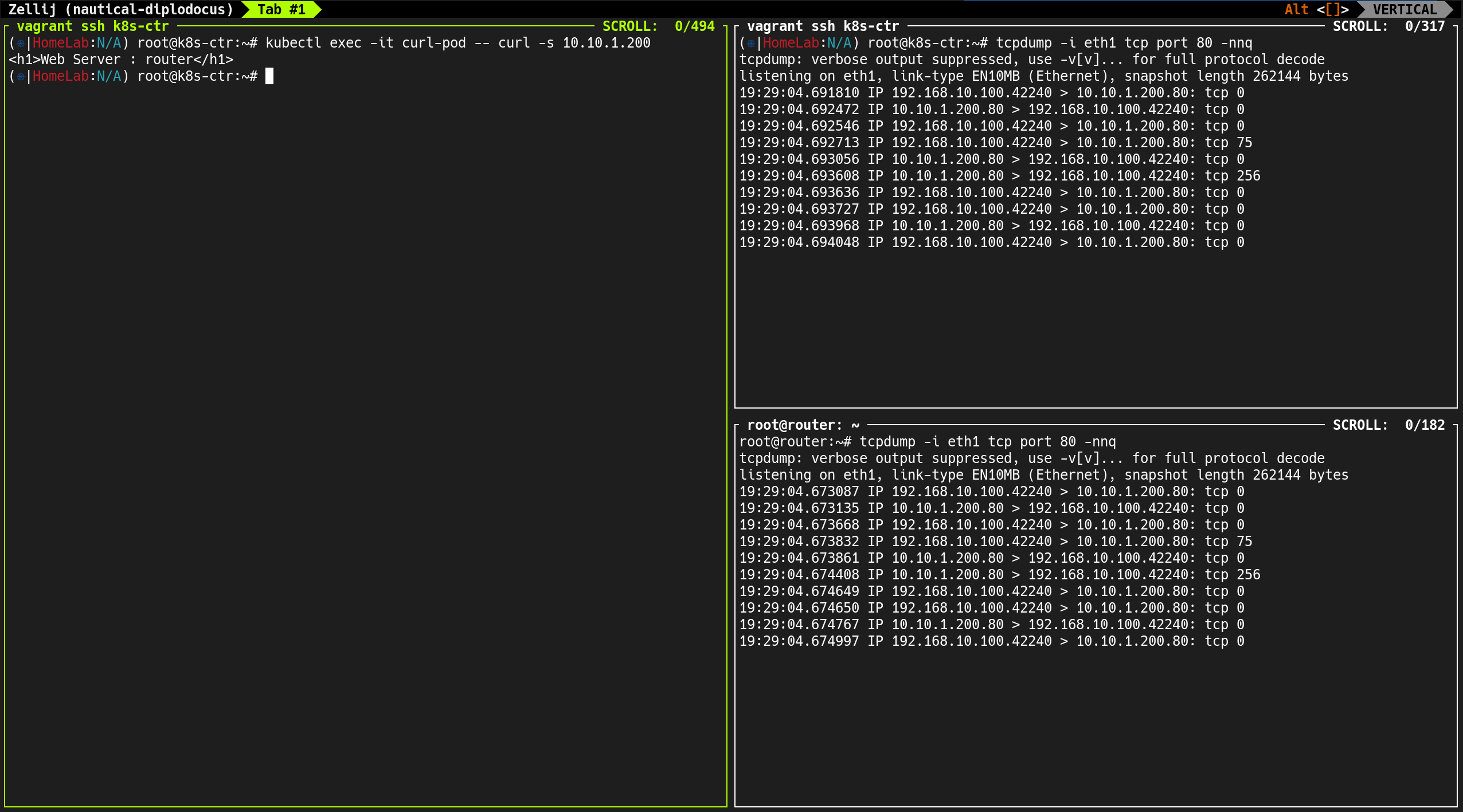

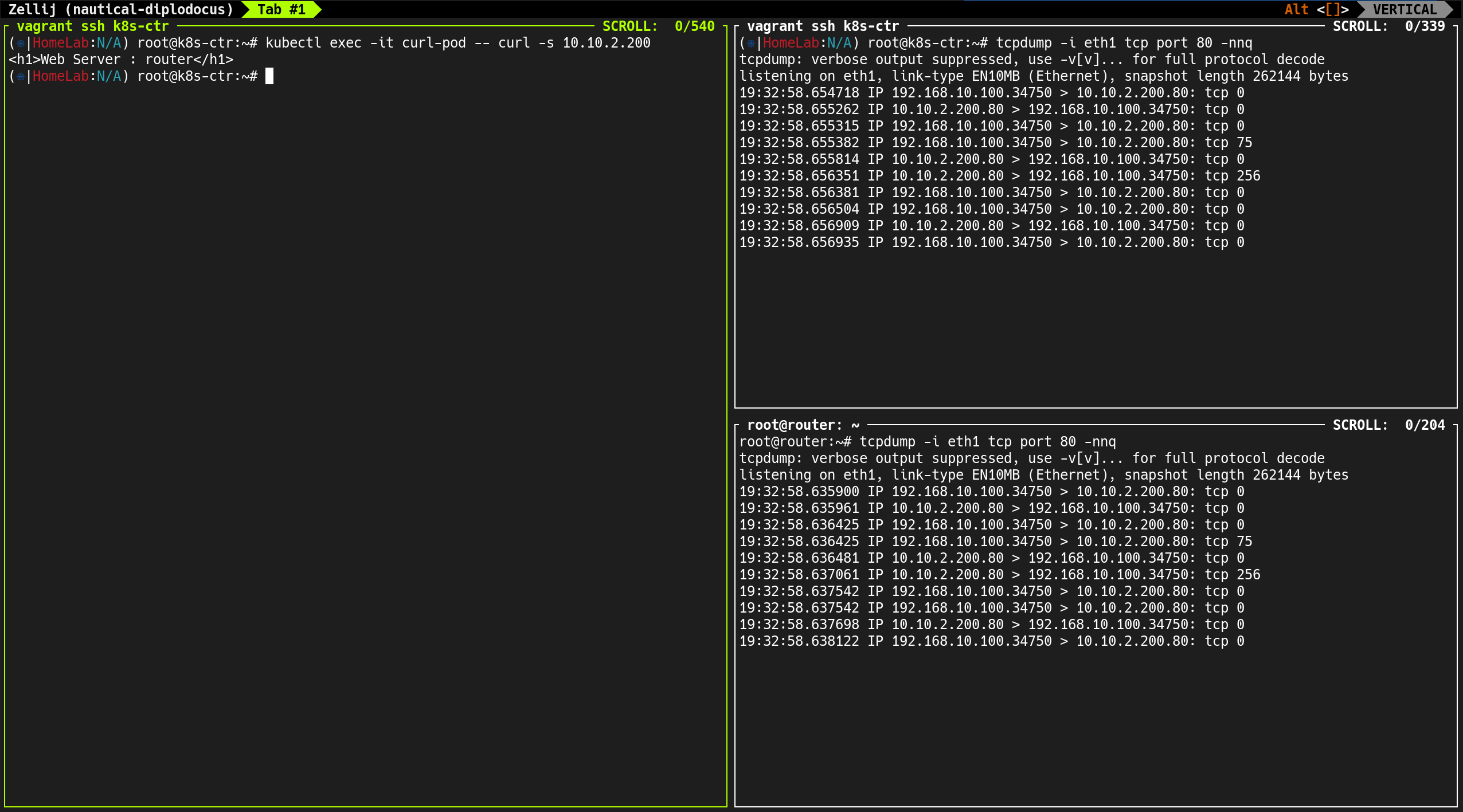

8. Analyze the network packet capture

(1) Real-time monitoring with tcpdump

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 tcp port 80 -nn

✅ Output

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

15:43:14.755578 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [S], seq 501953752, win 64240, options [mss 1460,sackOK,TS val 1594519 ecr 0,nop,wscale 7], length 0

15:43:14.756290 IP 10.244.1.96.80 > 10.244.0.27.41700: Flags [S.], seq 2849751208, ack 501953753, win 65160, options [mss 1460,sackOK,TS val 3721394349 ecr 1594519,nop,wscale 7], length 0

15:43:14.756381 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [.], ack 1, win 502, options [nop,nop,TS val 1594520 ecr 3721394349], length 0

15:43:14.756622 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [P.], seq 1:71, ack 1, win 502, options [nop,nop,TS val 1594521 ecr 3721394349], length 70: HTTP: GET / HTTP/1.1

15:43:14.757363 IP 10.244.1.96.80 > 10.244.0.27.41700: Flags [.], ack 71, win 509, options [nop,nop,TS val 3721394350 ecr 1594521], length 0

15:43:14.757855 IP 10.244.1.96.80 > 10.244.0.27.41700: Flags [P.], seq 1:321, ack 71, win 509, options [nop,nop,TS val 3721394351 ecr 1594521], length 320: HTTP: HTTP/1.1 200 OK

15:43:14.757884 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [.], ack 321, win 501, options [nop,nop,TS val 1594522 ecr 3721394351], length 0

15:43:14.758124 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [F.], seq 71, ack 321, win 501, options [nop,nop,TS val 1594522 ecr 3721394351], length 0

15:43:14.758448 IP 10.244.1.96.80 > 10.244.0.27.41700: Flags [F.], seq 321, ack 72, win 509, options [nop,nop,TS val 3721394352 ecr 1594522], length 0

15:43:14.758485 IP 10.244.0.27.41700 > 10.244.1.96.80: Flags [.], ack 322, win 501, options [nop,nop,TS val 1594522 ecr 3721394352], length 0

15:43:16.770376 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [S], seq 2173259033, win 64240, options [mss 1460,sackOK,TS val 1596534 ecr 0,nop,wscale 7], length 0

15:43:16.771075 IP 10.244.1.96.80 > 10.244.0.27.41702: Flags [S.], seq 1449700480, ack 2173259034, win 65160, options [mss 1460,sackOK,TS val 3721396364 ecr 1596534,nop,wscale 7], length 0

15:43:16.771133 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [.], ack 1, win 502, options [nop,nop,TS val 1596535 ecr 3721396364], length 0

15:43:16.771167 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [P.], seq 1:71, ack 1, win 502, options [nop,nop,TS val 1596535 ecr 3721396364], length 70: HTTP: GET / HTTP/1.1

15:43:16.771658 IP 10.244.1.96.80 > 10.244.0.27.41702: Flags [.], ack 71, win 509, options [nop,nop,TS val 3721396365 ecr 1596535], length 0

15:43:16.772436 IP 10.244.1.96.80 > 10.244.0.27.41702: Flags [P.], seq 1:321, ack 71, win 509, options [nop,nop,TS val 3721396366 ecr 1596535], length 320: HTTP: HTTP/1.1 200 OK

15:43:16.772479 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [.], ack 321, win 501, options [nop,nop,TS val 1596536 ecr 3721396366], length 0

15:43:16.772648 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [F.], seq 71, ack 321, win 501, options [nop,nop,TS val 1596537 ecr 3721396366], length 0

15:43:16.773058 IP 10.244.1.96.80 > 10.244.0.27.41702: Flags [F.], seq 321, ack 72, win 509, options [nop,nop,TS val 3721396366 ecr 1596537], length 0

15:43:16.773093 IP 10.244.0.27.41702 > 10.244.1.96.80: Flags [.], ack 322, win 501, options [nop,nop,TS val 1596537 ecr 3721396366], length 0

15:43:17.778477 IP 10.244.0.27.52802 > 10.244.1.96.80: Flags [S], seq 1698202645, win 64240, options [mss 1460,sackOK,TS val 1597542 ecr 0,nop,wscale 7], length 0

15:43:17.779167 IP 10.244.1.96.80 > 10.244.0.27.52802: Flags [S.], seq 4294649790, ack 1698202646, win 65160, options [mss 1460,sackOK,TS val 3721397372 ecr 1597542,nop,wscale 7], length 0

...

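- 10.244.0.27: the actual IP address of curl-pod

- 10.244.1.96: the actual IP address of the webpod on the worker node (k8s-w1)

- The Service IP (10.96.152.212) never appears at the packet level: Cilium's eBPF socket-level load balancer rewrites the destination when the socket connects, so packets are sent straight to the backend Pod IP

- Requests that go to the webpod on k8s-ctr itself (10.244.0.1) stay on the node and do not show up on eth1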
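Hubble prints TCP flags as names (`SYN, ACK`), while tcpdump abbreviates them inside brackets: `S` is SYN, `F` FIN, `P` PUSH, `R` RST, `U` URG, `W`/`E` the ECN flags, and `.` ACK, so `[S.]` is SYN+ACK and `[F.]` is FIN+ACK. To line the capture up with the Hubble log, a small hypothetical helper can do the translation; the output order is an assumption chosen to match the Hubble lines in this post.

```sh
# tcp_flags (hypothetical helper): translate tcpdump's TCP flag notation
# such as "[S.]" into names such as "SYN, ACK".
# Letter meanings follow the tcpdump man page ("." is ACK); the output
# order is chosen to match the Hubble lines in this post.
tcp_flags() {
  printf '%s\n' "$1" | awk '{
    n = split("S SYN . ACK F FIN P PSH R RST U URG E ECE W CWR", m, " ")
    out = ""
    for (i = 1; i < n; i += 2)
      if (index($0, m[i]) > 0)
        out = (out == "") ? m[i + 1] : (out ", " m[i + 1])
    print out
  }'
}
```

For example, `tcp_flags '[P.]'` gives the same wording as the Hubble line for the HTTP request.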

(2) Write the capture to a file

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 tcp port 80 -w /tmp/http.pcap

✅ Output

tcpdump: listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

^C30 packets captured

30 packets received by filter

0 packets dropped by kernel

(3) Analyze it with Termshark

(⎈|HomeLab:N/A) root@k8s-ctr:~# termshark -r /tmp/http.pcap

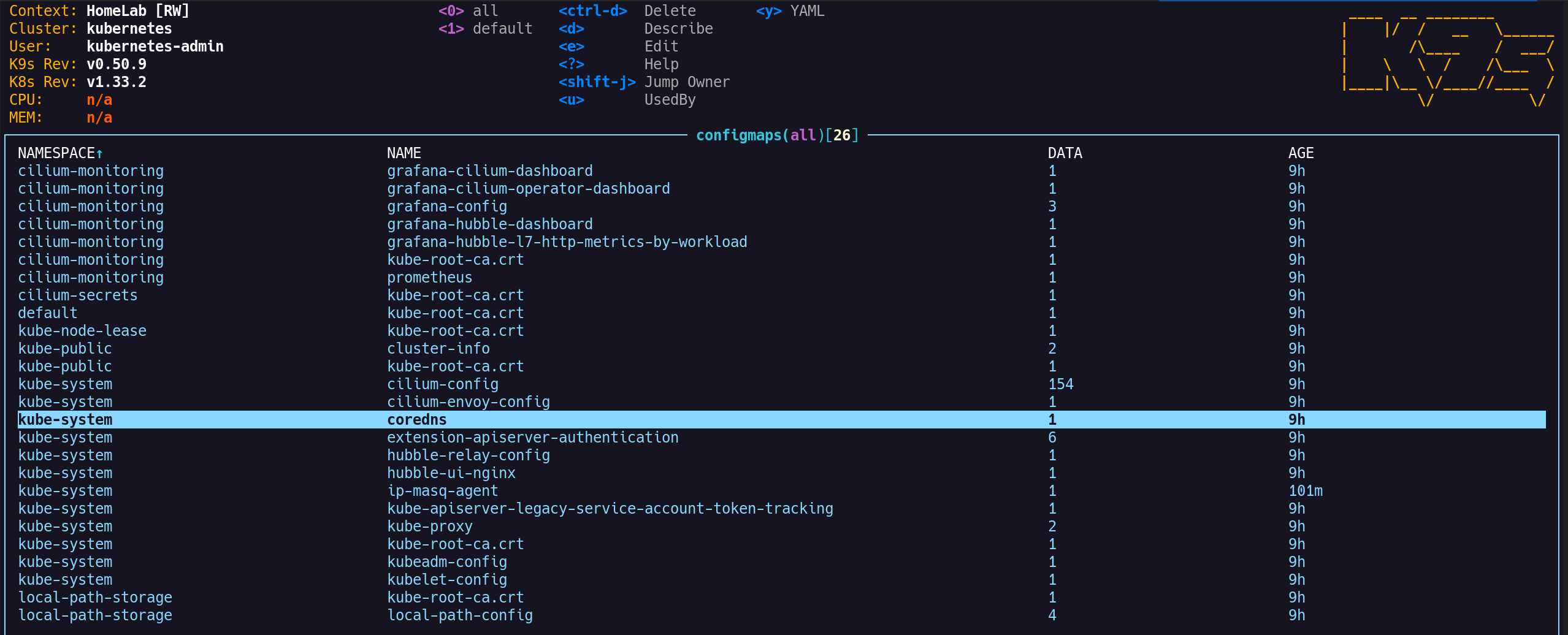

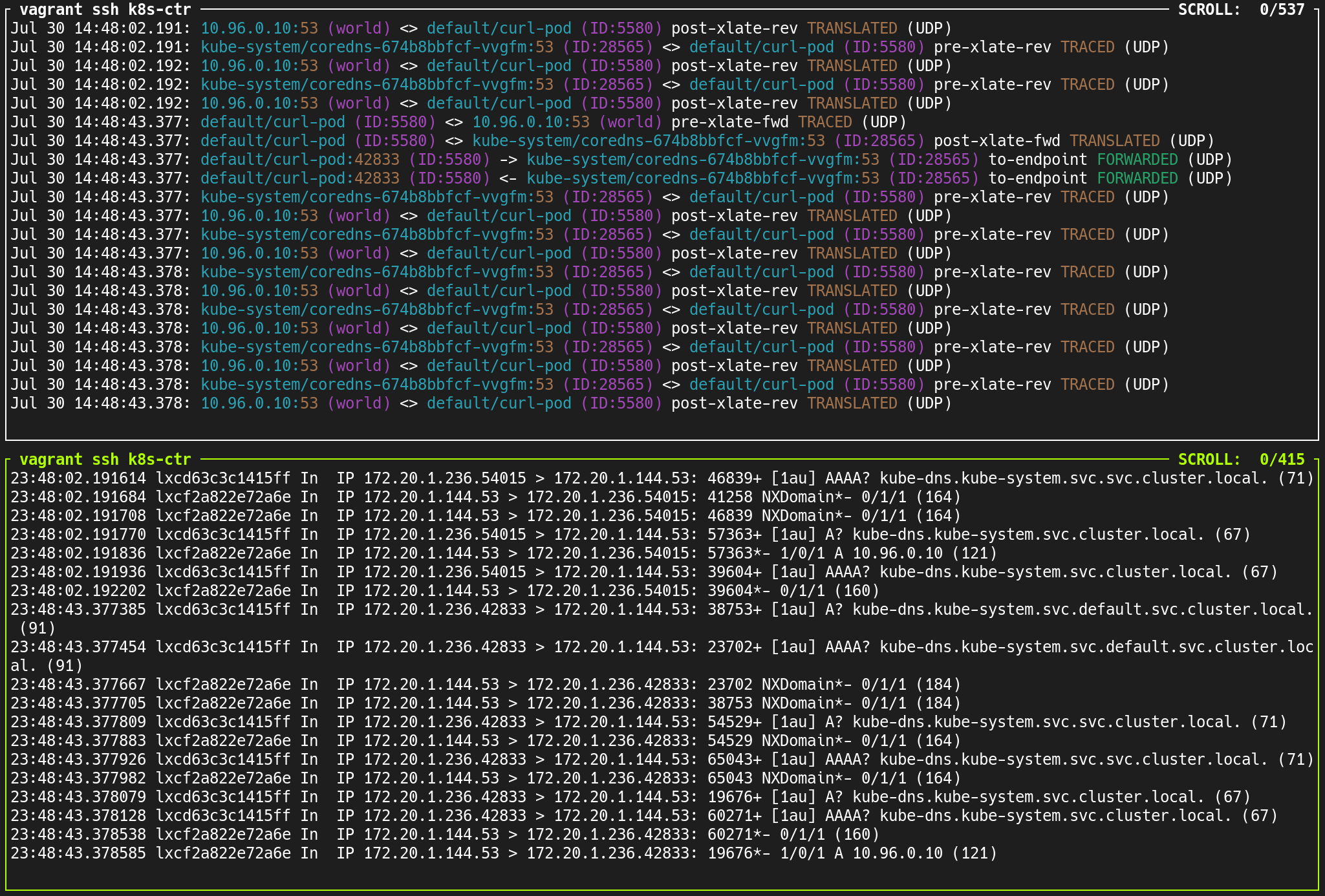

🐝 [Cilium] Cluster Scope & Migration Lab

- https://docs.cilium.io/en/stable/network/concepts/ipam/cluster-pool/

- https://docs.cilium.io/en/stable/network/kubernetes/ipam-cluster-pool/

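1. Overview

- Goal: migrate from Kubernetes Host Scope IPAM to Cilium Cluster Scope (cluster-pool) IPAM

- Pod IP range change: 10.244.0.0/16 → 172.20.0.0/16

- Pod CIDR allocation moves from kube-controller-manager to the Cilium Operator

Send requests in a loop to keep an eye on connectivity during the migration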

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s webpod | grep Hostname; sleep 1; done'

2. Change the IPAM mode

(1) First attempt (fails)

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values \
  --set ipam.mode="cluster-pool" --set 'ipam.operator.clusterPoolIPv4PodCIDRList={172.20.0.0/16}' --set ipv4NativeRoutingCIDR=172.20.0.0/16

✅ Output

Error: UPGRADE FAILED: template: cilium/templates/cilium-operator/deployment.yaml:145:26: executing "cilium/templates/cilium-operator/deployment.yaml" at <.Values.k8sServiceHostRef.name>: nil pointer evaluating interface {}.name

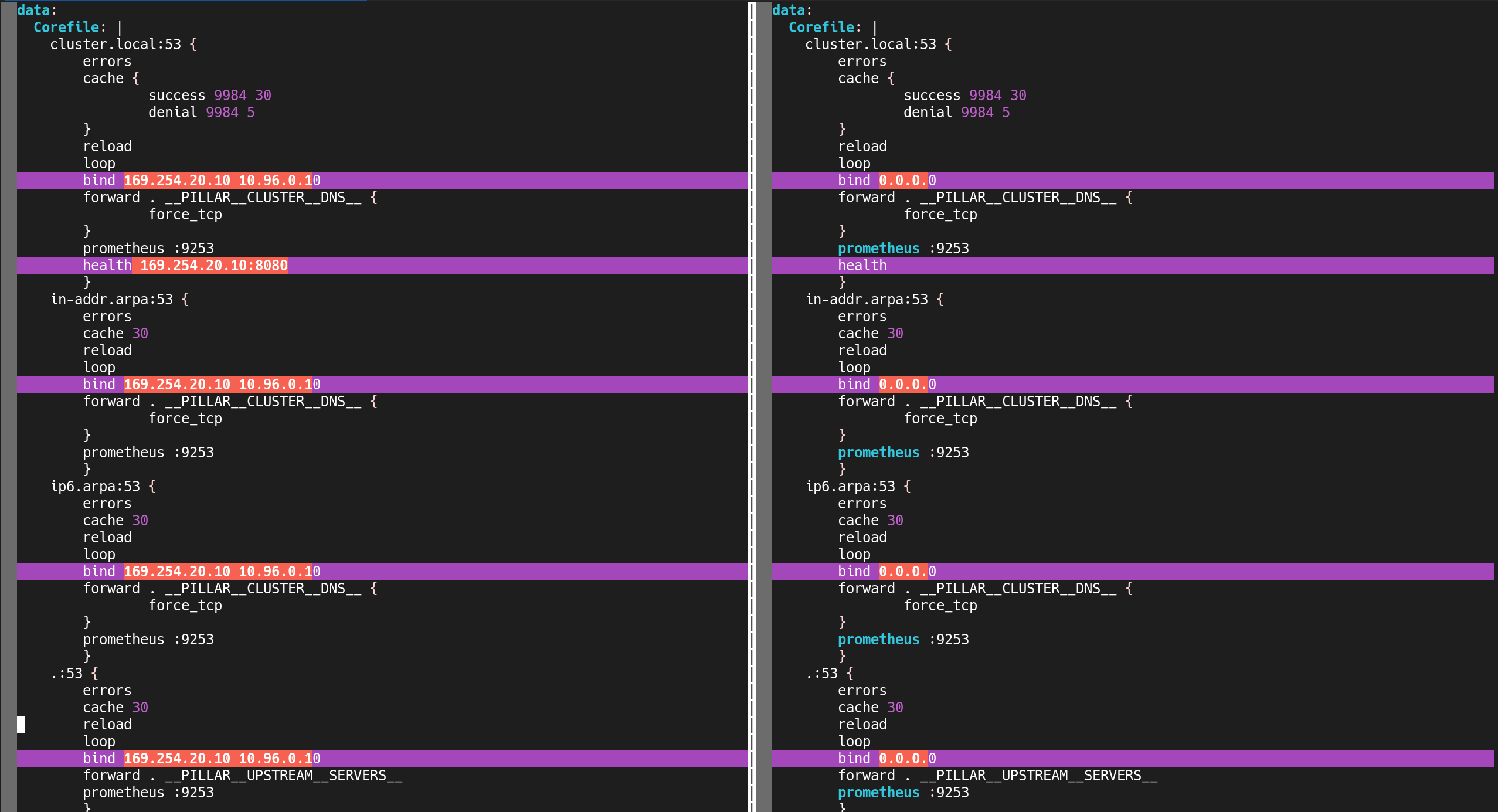

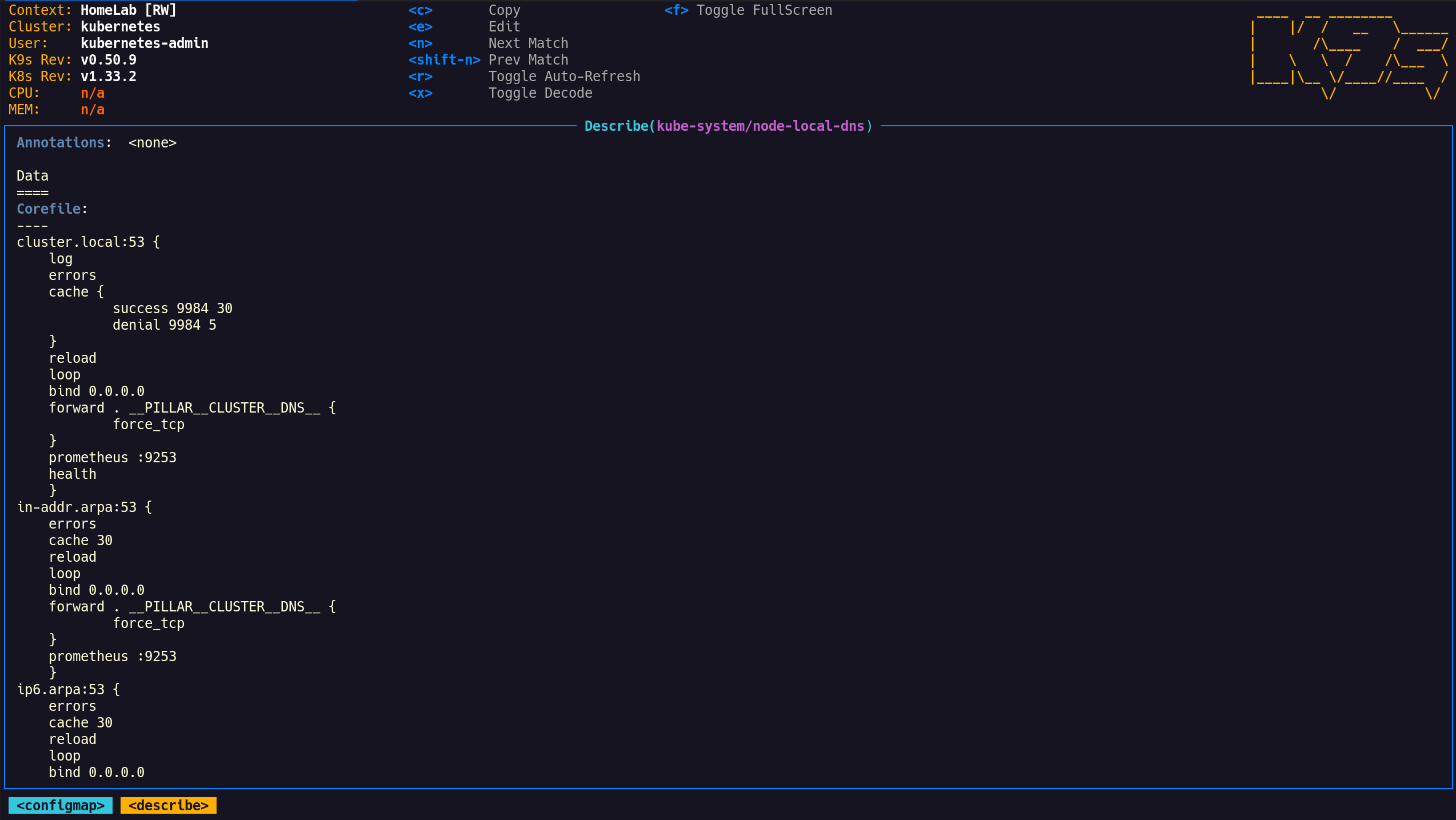

(2) Fix: clean up the values 💡

Save the release's current user-supplied values to clean-values.yaml as a starting point

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm get values cilium -n kube-system > clean-values.yaml

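From those values, write final-values.yaml without the entries that caused the error and with the new IPAM settings added, then upgrade with -f instead of --reuse-values. (The error most likely comes from --reuse-values, which carries over the previous release's values instead of the new chart's defaults, so a key that only exists in the newer chart, such as k8sServiceHostRef, is left undefined.)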

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat > final-values.yaml << EOF
autoDirectNodeRoutes: true
bpf:
  masquerade: true
debug:
  enabled: true
endpointHealthChecking:
  enabled: false
endpointRoutes:
  enabled: true
healthChecking: false
hubble:
  enabled: true
  metrics:
    enableOpenMetrics: true
    enabled:
    - dns
    - drop
    - tcp
    - flow
    - port-distribution
    - icmp
    - httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction
  relay:
    enabled: true
  ui:
    enabled: true
    service:
      nodePort: 30003
      type: NodePort
installNoConntrackIptablesRules: true
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4PodCIDRList:
    - "172.20.0.0/16"
ipv4NativeRoutingCIDR: 172.20.0.0/16
k8s:
  requireIPv4PodCIDR: true
k8sServiceHost: 192.168.10.100
k8sServicePort: 6443
kubeProxyReplacement: true
operator:
  prometheus:
    enabled: true
  replicas: 1
prometheus:
  enabled: true
routingMode: native
EOF

(3) Apply the values: IPAM is switched to cluster-pool

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system -f final-values.yaml

✅ Output

Release "cilium" has been upgraded. Happy Helming!

NAME: cilium

LAST DEPLOYED: Wed Jul 30 16:26:05 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.18.0.

For any further help, visit https://docs.cilium.io/en/v1.18/gettinghelp

3. Restart the Cilium components

(1) Restart the Cilium Operator

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart deploy/cilium-operator

# Result

deployment.apps/cilium-operator restarted

(2) Restart the Cilium DaemonSet

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart ds/cilium

# Result

daemonset.apps/cilium restarted

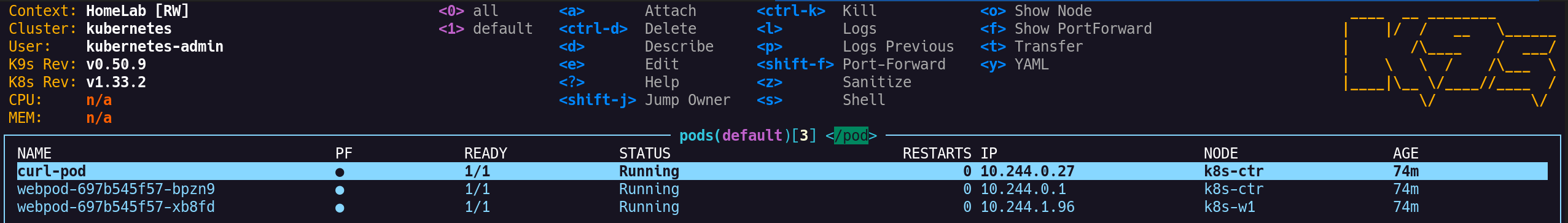

4. Check the result in k9s

(⎈|HomeLab:N/A) root@k8s-ctr:~# k9s

5. Verify the IPAM mode change

The IPAM mode is now cluster-pool

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep ^ipam

✅ Output

ipam cluster-pool

ipam-cilium-node-update-rate 15s

6. The new Pod CIDR is not applied yet

(1) The CiliumNodes still hold their old CIDRs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"10.244.0.0/24"

],

--

"podCIDRs": [

"10.244.1.0/24"

],

(2) Check the Pod IPs in the CiliumEndpoints

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

cilium-monitoring grafana-5c69859d9-wdb82 22795 ready 10.244.0.104

cilium-monitoring prometheus-6fc896bc5d-bxnd5 1213 ready 10.244.0.65

default curl-pod 5580 ready 10.244.0.27

default webpod-697b545f57-bpzn9 12497 ready 10.244.0.1

default webpod-697b545f57-xb8fd 12497 ready 10.244.1.96

kube-system coredns-674b8bbfcf-9pxvx 28565 ready 10.244.0.199

kube-system coredns-674b8bbfcf-khjhq 28565 ready 10.244.0.59

kube-system hubble-relay-5b48c999f9-cvjjc 17061 ready 10.244.1.67

kube-system hubble-ui-655f947f96-tcrrp 2452 ready 10.244.1.66

local-path-storage local-path-provisioner-74f9666bc9-scg4s 56893 ready 10.244.0.253

7. Find out why the IPAM change has not taken effect

(1) Check podCIDRs in the CiliumNodes

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"10.244.0.0/24"

],

--

"podCIDRs": [

"10.244.1.0/24"

],

(2) The existing CiliumNodes keep their old IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode

✅ Output

NAME CILIUMINTERNALIP INTERNALIP AGE

k8s-ctr 10.244.0.70 192.168.10.100 130m

k8s-w1 10.244.1.175 192.168.10.101 128m

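8. Delete the CiliumNode resources and restart

The CiliumNode objects still carry the podCIDRs they received under the previous IPAM mode, and restarting the agents did not replace them, so each CiliumNode is deleted and recreated to get a CIDR from the new pool.

(1) Delete the worker node's CiliumNode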

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl delete ciliumnode k8s-w1

# Result

ciliumnode.cilium.io "k8s-w1" deleted

(2) Restart the Cilium DaemonSet

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart ds/cilium

# Result

daemonset.apps/cilium restarted

(3) Check the updated Pod CIDRs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"10.244.0.0/24"

],

--

"podCIDRs": [

"172.20.0.0/24"

],

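9. Reset the CIDR on the control-plane node as well

k8s-w1 now has 172.20.0.0/24 from the new pool, while k8s-ctr still has 10.244.0.0/24. The endpoint list below no longer shows the pods running on k8s-w1.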

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

cilium-monitoring grafana-5c69859d9-wdb82 22795 ready 10.244.0.104

cilium-monitoring prometheus-6fc896bc5d-bxnd5 1213 ready 10.244.0.65

default curl-pod 5580 ready 10.244.0.27

default webpod-697b545f57-bpzn9 12497 ready 10.244.0.1

kube-system coredns-674b8bbfcf-9pxvx 28565 ready 10.244.0.199

kube-system coredns-674b8bbfcf-khjhq 28565 ready 10.244.0.59

local-path-storage local-path-provisioner-74f9666bc9-scg4s 56893 ready 10.244.0.253

(1) Delete the control-plane node's CiliumNode

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl delete ciliumnode k8s-ctr

# Result

ciliumnode.cilium.io "k8s-ctr" deleted

(2) Restart the DaemonSet

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart ds/cilium

# Result

daemonset.apps/cilium restarted

(3) Check the updated Pod CIDRs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

✅ Output

"podCIDRs": [

"172.20.1.0/24"

],

--

"podCIDRs": [

"172.20.0.0/24"

],
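In the output, k8s-w1 (whose CiliumNode was recreated first) received 172.20.0.0/24 and k8s-ctr then received 172.20.1.0/24: consecutive /24 blocks out of 172.20.0.0/16. The /24 size matches what I understand to be the chart's default `ipam.operator.clusterPoolIPv4MaskSize` of 24, which leaves room for 2^(24-16) = 256 node CIDRs in this pool. The arithmetic behind "the n-th /24 of the pool" can be sketched as below; it is an illustration only, not the operator's allocator, and it assumes the pool address is aligned to its prefix. `nth_subnet` is a hypothetical helper.

```sh
# nth_subnet (hypothetical helper): print the INDEX-th /MASK block of POOL,
# counting from 0. Illustrates per-node podCIDR carving; it is not the
# Cilium operator's allocator. Assumes POOL's address is aligned to its prefix.
#   usage: nth_subnet POOL_CIDR MASK INDEX
nth_subnet() {
  awk -v pool="$1" -v mask="$2" -v idx="$3" 'BEGIN {
    split(pool, p, "/"); split(p[1], o, ".")
    base = ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
    size = 2 ^ (32 - mask)                  # addresses per block
    if (mask + 0 < p[2] + 0 || (idx + 1) * size > 2 ^ (32 - p[2])) {
      print "nth_subnet: index out of range" > "/dev/stderr"
      exit 1
    }
    net = base + idx * size
    printf "%d.%d.%d.%d/%d\n", int(net / 16777216) % 256, int(net / 65536) % 256,
      int(net / 256) % 256, net % 256, mask
  }'
}
```

For example, `nth_subnet 172.20.0.0/16 24 1` prints 172.20.1.0/24, the block k8s-ctr received.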

10. Check the endpoints and routes

(1) Check the new endpoint IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

kube-system coredns-674b8bbfcf-gbnm8 28565 ready 172.20.0.186

kube-system coredns-674b8bbfcf-vvgfm 28565 ready 172.20.1.144

(2) Check the routes

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.0/24 via 192.168.10.101 dev eth1 proto kernel

172.20.1.144 dev lxcf2a822e72a6e proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100


(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w1 ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.186 dev lxc80130454cb70 proto kernel scope link

172.20.1.0/24 via 192.168.10.100 dev eth1 proto kernel

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

11. Existing Pods keep their old IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -A -owide | grep 10.244.

✅ Output

cilium-monitoring grafana-5c69859d9-wdb82 0/1 Running 0 143m 10.244.0.104 k8s-ctr <none> <none>

cilium-monitoring prometheus-6fc896bc5d-bxnd5 1/1 Running 0 143m 10.244.0.65 k8s-ctr <none> <none>

default curl-pod 1/1 Running 0 105m 10.244.0.27 k8s-ctr <none> <none>

default webpod-697b545f57-bpzn9 1/1 Running 0 106m 10.244.0.1 k8s-ctr <none> <none>

default webpod-697b545f57-xb8fd 1/1 Running 0 106m 10.244.1.96 k8s-w1 <none> <none>

kube-system hubble-relay-5b48c999f9-cvjjc 0/1 Running 5 (28s ago) 39m 10.244.1.67 k8s-w1 <none> <none>

kube-system hubble-ui-655f947f96-tcrrp 1/2 CrashLoopBackOff 6 (106s ago) 39m 10.244.1.66 k8s-w1 <none> <none>

local-path-storage local-path-provisioner-74f9666bc9-scg4s 1/1 Running 0 143m 10.244.0.253 k8s-ctr <none> <none>
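`grep 10.244.` is a plain text match (and its `.` matches any character). A slightly stricter check takes the first IPv4-looking column of `kubectl get pod -A -o wide` and keeps only pods whose IP lies inside a given CIDR; searching for the column also copes with RESTARTS values such as `5 (28s ago)` that shift the columns. `pods_in_cidr` is a hypothetical helper and assumes the default `-o wide` layout with NAMESPACE and NAME as the first two columns.

```sh
# pods_in_cidr (hypothetical helper): print "namespace/name ip" for every pod
# whose IP falls inside CIDR. Reads `kubectl get pod -A -o wide` on stdin;
# the header line has no IP column, so it is skipped naturally.
pods_in_cidr() {
  awk -v cidr="$1" '
    function ip2int(s,  o) { split(s, o, "."); return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4] }
    BEGIN { split(cidr, c, "/"); span = 2 ^ (32 - c[2]); net = int(ip2int(c[1]) / span) }
    {
      for (i = 3; i <= NF; i++)
        if ($i ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/) {   # first IPv4 column
          if (int(ip2int($i) / span) == net) print $1 "/" $2, $i
          break
        }
    }'
}
```

For example: `kubectl get pod -A -o wide | pods_in_cidr 10.244.0.0/16` lists the pods that still need to be recreated.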

12. Restart the Deployments

Restart the system and monitoring pods (and webpod) so they get IPs from the new pool

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl -n kube-system rollout restart deploy/hubble-relay deploy/hubble-ui

kubectl -n cilium-monitoring rollout restart deploy/prometheus deploy/grafana

kubectl rollout restart deploy/webpod

✅ Output

deployment.apps/hubble-relay restarted

deployment.apps/hubble-ui restarted

deployment.apps/prometheus restarted

deployment.apps/grafana restarted

deployment.apps/webpod restarted

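13. Delete and redeploy the manually created pod

curl-pod is a bare Pod without a controller, so it cannot be rollout-restarted; it has to be deleted and created again.

(1) Delete curl-pod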

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl delete pod curl-pod

# Output

pod "curl-pod" deleted

(2) Redeploy curl-pod

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Result

pod/curl-pod created

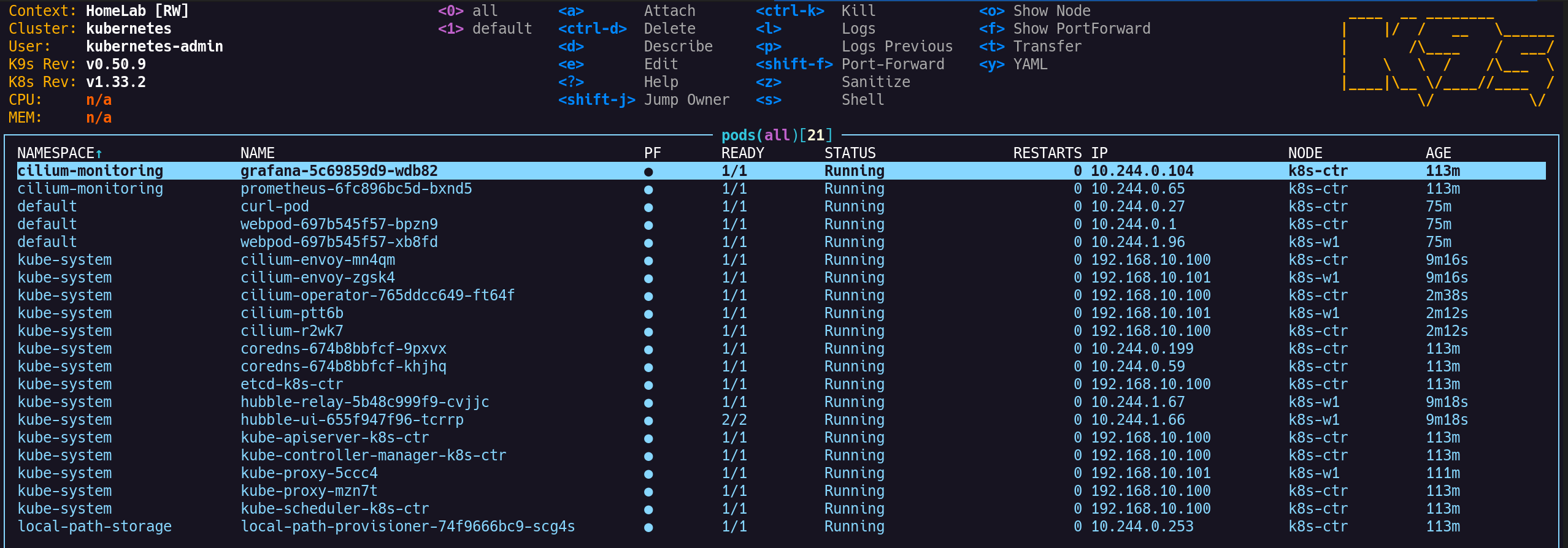

14. Check the new IP assignments

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

✅ Output

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

cilium-monitoring grafana-6bc98cff96-h74hv 22795 ready 172.20.0.67

cilium-monitoring prometheus-597ff4d4c5-hzrsx 1213 ready 172.20.0.17

default curl-pod 5580 ready 172.20.1.236

default webpod-556878d5d7-7p8bn 12497 ready 172.20.1.40

default webpod-556878d5d7-r4dmh 12497 ready 172.20.0.130

kube-system coredns-674b8bbfcf-gbnm8 28565 ready 172.20.0.186

kube-system coredns-674b8bbfcf-vvgfm 28565 ready 172.20.1.144

kube-system hubble-relay-c8db994db-5hc26 17061 ready 172.20.0.190

kube-system hubble-ui-5c5855f4bf-8dkrf 2452 ready 172.20.0.162


(⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -A

✅ Output

NAMESPACE NAME READY STATUS RESTARTS AGE

cilium-monitoring grafana-6bc98cff96-h74hv 1/1 Running 0 3m31s

cilium-monitoring prometheus-597ff4d4c5-hzrsx 1/1 Running 0 3m31s

default curl-pod 1/1 Running 0 110s

default webpod-556878d5d7-7p8bn 1/1 Running 0 3m4s

default webpod-556878d5d7-r4dmh 1/1 Running 0 3m30s

kube-system cilium-8nxg4 1/1 Running 0 8m42s

kube-system cilium-envoy-mn4qm 1/1 Running 0 44m

kube-system cilium-envoy-zgsk4 1/1 Running 0 44m

kube-system cilium-kl2mj 1/1 Running 0 8m42s

kube-system cilium-operator-765ddcc649-ft64f 1/1 Running 0 38m

kube-system coredns-674b8bbfcf-gbnm8 1/1 Running 0 8m19s

kube-system coredns-674b8bbfcf-vvgfm 1/1 Running 0 8m4s

kube-system etcd-k8s-ctr 1/1 Running 0 149m

kube-system hubble-relay-c8db994db-5hc26 1/1 Running 0 3m31s

kube-system hubble-ui-5c5855f4bf-8dkrf 2/2 Running 0 3m31s

kube-system kube-apiserver-k8s-ctr 1/1 Running 0 149m

kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 149m

kube-system kube-proxy-5ccc4 1/1 Running 0 147m

kube-system kube-proxy-mzn7t 1/1 Running 0 149m

kube-system kube-scheduler-k8s-ctr 1/1 Running 0 149m

local-path-storage local-path-provisioner-74f9666bc9-scg4s 1/1 Running 0 148m

15. Test connectivity from curl-pod

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s webpod | grep Hostname; sleep 1; done'

✅ Output

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-r4dmh

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-r4dmh

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-r4dmh

Hostname: webpod-556878d5d7-7p8bn

Hostname: webpod-556878d5d7-7p8bn

...
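With only ten samples, the split above (7 responses from webpod-556878d5d7-7p8bn, 3 from webpod-556878d5d7-r4dmh) says little about how evenly the Service spreads requests. A longer run can be tallied with a small hypothetical helper such as this one:

```sh
# count_hostnames (hypothetical helper): tally the "Hostname: ..." lines printed
# by the curl loop, one count per backend Pod, sorted by Pod name.
count_hostnames() {
  awk '{ sub(/\r$/, "") }                 # tolerate CRLF from `kubectl exec -t`
       $1 == "Hostname:" { n[$2]++ }
       END { for (h in n) print n[h], h }' | sort -k2
}
```

For example: `kubectl exec curl-pod -- sh -c 'for i in $(seq 100); do curl -s webpod | grep Hostname; done' | count_hostnames` (without `-it`, since no interactive terminal is needed).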

16. Resolve the Hubble port-forward conflict

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

✅ Output

[2] 34662

(⎈|HomeLab:N/A) root@k8s-ctr:~#

Error: Unable to port forward: failed to port forward: failed to port forward: unable to listen on any of the requested ports: [{4245 4245}]

Find the process still holding port 4245 and stop it

(⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep 4245

✅ Output

LISTEN 0 4096 127.0.0.1:4245 0.0.0.0:* users:(("cilium",pid=10026,fd=7))

LISTEN 0 4096 [::1]:4245 [::]:* users:(("cilium",pid=10026,fd=8))


(⎈|HomeLab:N/A) root@k8s-ctr:~# kill -9 10026

# Result

[1]+ Killed cilium hubble port-forward
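Rather than reading the PID off by eye, it can be extracted from `ss -tnlp`. A hypothetical helper, assuming the column layout shown above (local address in column 4, process in the `users:((...))` column), could look like this:

```sh
# pids_on_port (hypothetical helper): print the PID(s) that listen on a TCP
# port, parsed from `ss -tnlp` output on stdin.
# Assumes ss prints the local address in column 4 and the process as
# users:(("name",pid=N,fd=M)), as in the output above.
pids_on_port() {
  awk -v port="$1" '
    $4 ~ (":" port "$") {
      s = $0
      while (match(s, /pid=[0-9]+/)) {
        print substr(s, RSTART + 4, RLENGTH - 4)
        s = substr(s, RSTART + RLENGTH)
      }
    }' | sort -u
}
```

For example: `kill $(ss -tnlp | pids_on_port 4245)`. A plain `kill` (SIGTERM) is worth trying before `kill -9`.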

17. Restart the Hubble port-forward

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

✅ Output

[1] 34787

(⎈|HomeLab:N/A) root@k8s-ctr:~# ℹ️ Hubble Relay is available at 127.0.0.1:4245

Check the Hubble status

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble status

✅ Output

Healthcheck (via localhost:4245): Ok

Current/Max Flows: 8,190/8,190 (100.00%)

Flows/s: 38.00

Connected Nodes: 2/2

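🔧 Change the IPAM mode with care

Changing the IPAM mode carries far more risk than simply changing the Pod CIDR range: as seen above, existing CiliumNodes and Pods keep their old addresses until they are recreated. Decide on the IPAM mode carefully when you first design the cluster, and take future scale-out plans and the network design into account.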

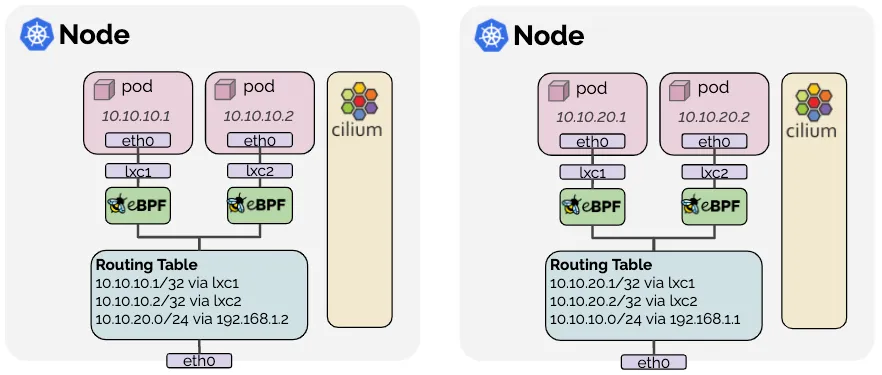

🧭 Routing

1. Check pod status and IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 57m 172.20.1.236 k8s-ctr <none> <none>

webpod-556878d5d7-7p8bn 1/1 Running 0 58m 172.20.1.40 k8s-ctr <none> <none>

webpod-556878d5d7-r4dmh 1/1 Running 0 59m 172.20.0.130 k8s-w1 <none> <none>

Store the IPs of webpod 1 (on k8s-ctr) and webpod 2 (on k8s-w1)

(⎈|HomeLab:N/A) root@k8s-ctr:~# export WEBPODIP1=$(kubectl get -l app=webpod pods --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].status.podIP}')

export WEBPODIP2=$(kubectl get -l app=webpod pods --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].status.podIP}')

echo $WEBPODIP1 $WEBPODIP2

✅ Output

172.20.1.40 172.20.0.130

2. Check pod-to-pod connectivity (ping)

Ping webpod-2 from curl-pod

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping $WEBPODIP2

✅ Output

PING 172.20.0.130 (172.20.0.130) 56(84) bytes of data.

64 bytes from 172.20.0.130: icmp_seq=1 ttl=62 time=0.433 ms

64 bytes from 172.20.0.130: icmp_seq=2 ttl=62 time=0.657 ms

64 bytes from 172.20.0.130: icmp_seq=3 ttl=62 time=0.554 ms

64 bytes from 172.20.0.130: icmp_seq=4 ttl=62 time=0.374 ms

64 bytes from 172.20.0.130: icmp_seq=5 ttl=62 time=0.990 ms

64 bytes from 172.20.0.130: icmp_seq=6 ttl=62 time=0.486 ms

64 bytes from 172.20.0.130: icmp_seq=7 ttl=62 time=0.446 ms

64 bytes from 172.20.0.130: icmp_seq=8 ttl=62 time=0.533 ms

...

- ICMP replies are received normally

- Pod-to-pod communication works (native routing is working as expected)

3. Check the routing table (k8s-ctr node)

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.0/24 via 192.168.10.101 dev eth1 proto kernel

172.20.1.40 dev lxc0895f39b5225 proto kernel scope link

172.20.1.144 dev lxcf2a822e72a6e proto kernel scope link

172.20.1.236 dev lxcd63c3c1415ff proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

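- 172.20.0.0/24 via 192.168.10.101 dev eth1 proto kernel: to reach the subnet of webpod-2, packets are forwarded to worker node 1's IP (192.168.10.101). Cilium installs these per-node routes because autoDirectNodeRoutes is enabled.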
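The kernel picks the most specific (longest-prefix) route that contains the destination. A simplified sketch of that lookup over `ip route` lines, ignoring metrics, route types and policy routing, shows why 172.20.0.130 leaves through eth1 towards 192.168.10.101 while curl-pod's own IP goes to its lxc device. On a real node, `ip route get 172.20.0.130` asks the kernel directly; `route_for` is a hypothetical helper for illustration only.

```sh
# route_for (hypothetical helper): return the `ip route` line with the longest
# prefix containing DST. Simplified: ignores metrics, route types, and policy
# routing. For the real answer, ask the kernel with `ip route get DST`.
route_for() {
  awk -v dst="$1" '
    function ip2int(s,  o) { split(s, o, "."); return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4] }
    BEGIN { d = ip2int(dst); best = -1 }
    NF == 0 { next }
    {
      pfx = $1
      if (pfx == "default") pfx = "0.0.0.0/0"   # default route = /0
      else if (pfx !~ /\//) pfx = pfx "/32"     # host route without a length
      split(pfx, p, "/")
      span = 2 ^ (32 - p[2])
      if (int(ip2int(p[1]) / span) == int(d / span) && p[2] + 0 > best) {
        best = p[2] + 0
        line = $0
      }
    }
    END { if (best >= 0) print line }'
}
```

For example: `ip -c route | route_for 172.20.0.130` (plain `ip route` works too) selects the 172.20.0.0/24 route via 192.168.10.101.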

4. Check the routing table (k8s-w1 node)

On k8s-w1, webpod-2 (172.20.0.130) has a host route pointing directly at its veth (lxc) interface

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@k8s-w1 ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.0.0/16 via 192.168.10.200 dev eth1 proto static

172.20.0.17 dev lxce960d096d8a4 proto kernel scope link

172.20.0.67 dev lxcd23f85153e89 proto kernel scope link

172.20.0.130 dev lxc097ff224d206 proto kernel scope link

172.20.0.162 dev lxc4fe9abccf909 proto kernel scope link

172.20.0.186 dev lxc80130454cb70 proto kernel scope link

172.20.0.190 dev lxcb2f1076877d3 proto kernel scope link

172.20.1.0/24 via 192.168.10.100 dev eth1 proto kernel

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

- 172.20.1.0/24 via 192.168.10.100 dev eth1 proto kernel: the route back to k8s-ctr, where curl-pod runs

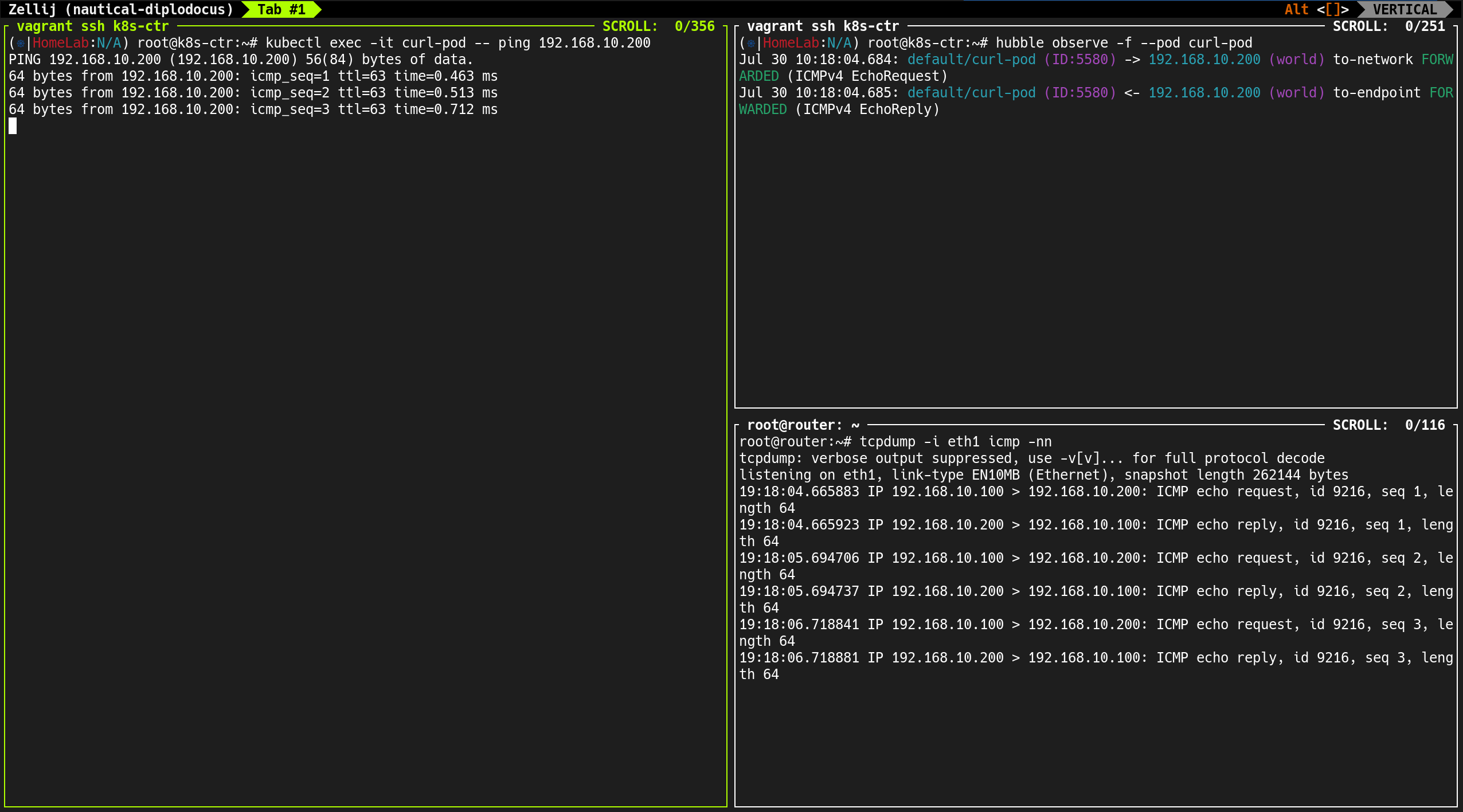

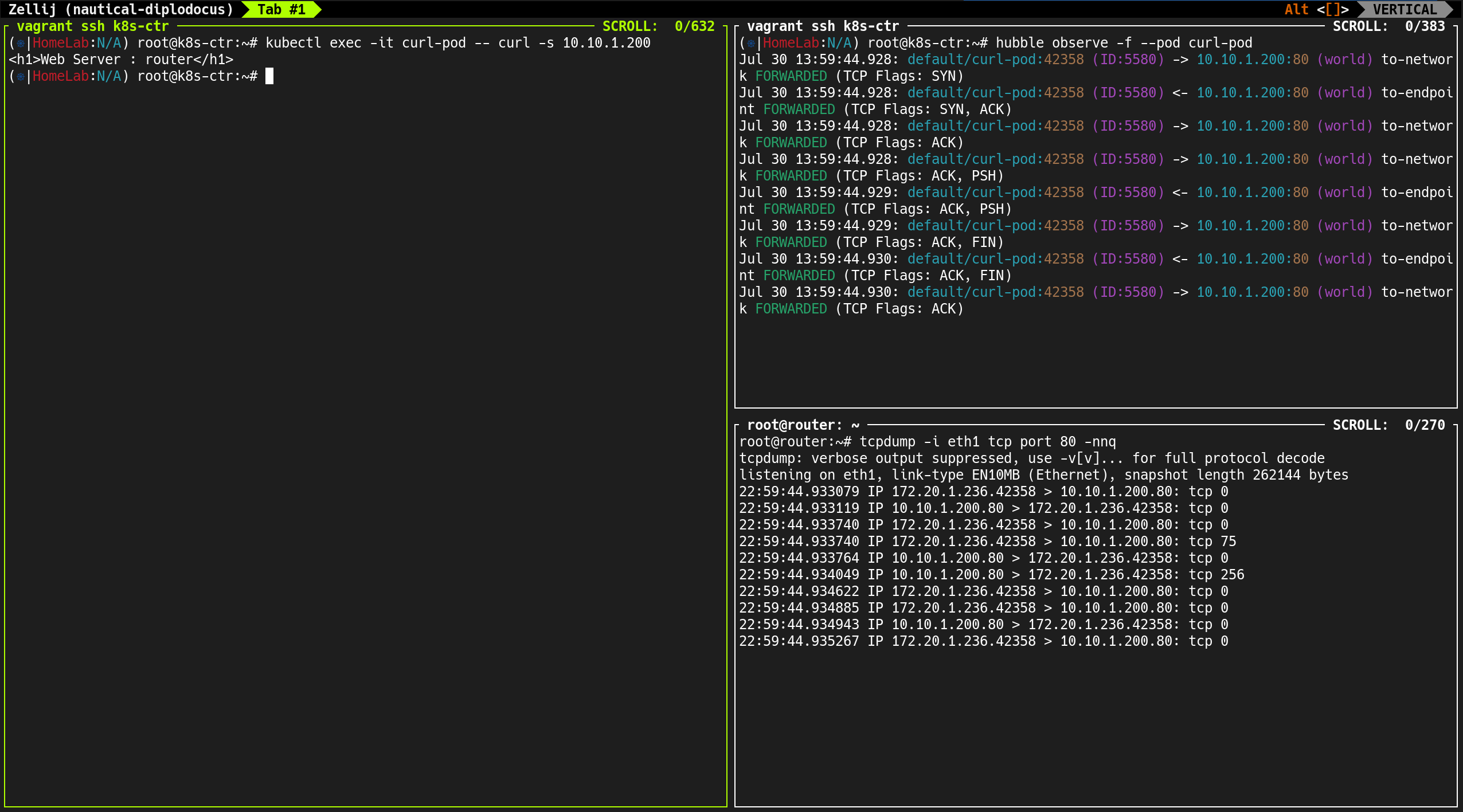

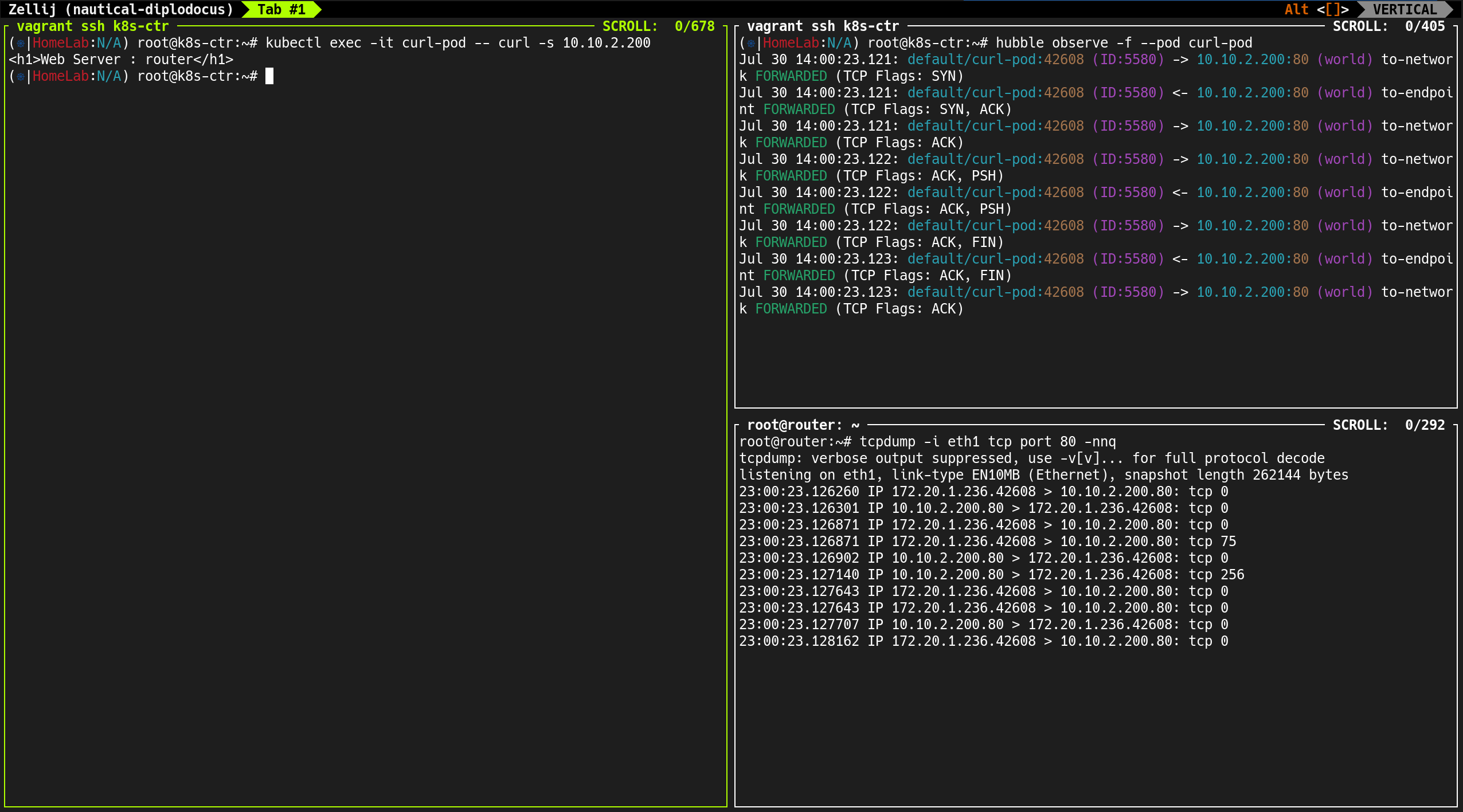

5. Check the traffic flow with the Hubble CLI

hubble observe -f --pod curl-pod

✅ Output

Jul 30 09:15:15.857: default/curl-pod (ID:5580) -> default/webpod-556878d5d7-r4dmh (ID:12497) to-network FORWARDED (ICMPv4 EchoRequest)

Jul 30 09:15:15.858: default/curl-pod (ID:5580) <- default/webpod-556878d5d7-r4dmh (ID:12497) to-endpoint FORWARDED (ICMPv4 EchoReply)

Jul 30 09:15:16.848: default/curl-pod (ID:5580) -> default/webpod-556878d5d7-r4dmh (ID:12497) to-endpoint FORWARDED (ICMPv4 EchoRequest)

Jul 30 09:15:16.848: default/curl-pod (ID:5580) <- default/webpod-556878d5d7-r4dmh (ID:12497) to-network FORWARDED (ICMPv4 EchoReply)

...

- ICMP EchoRequest / EchoReply traffic is logged in real time

- Source: curl-pod, destination: webpod-2

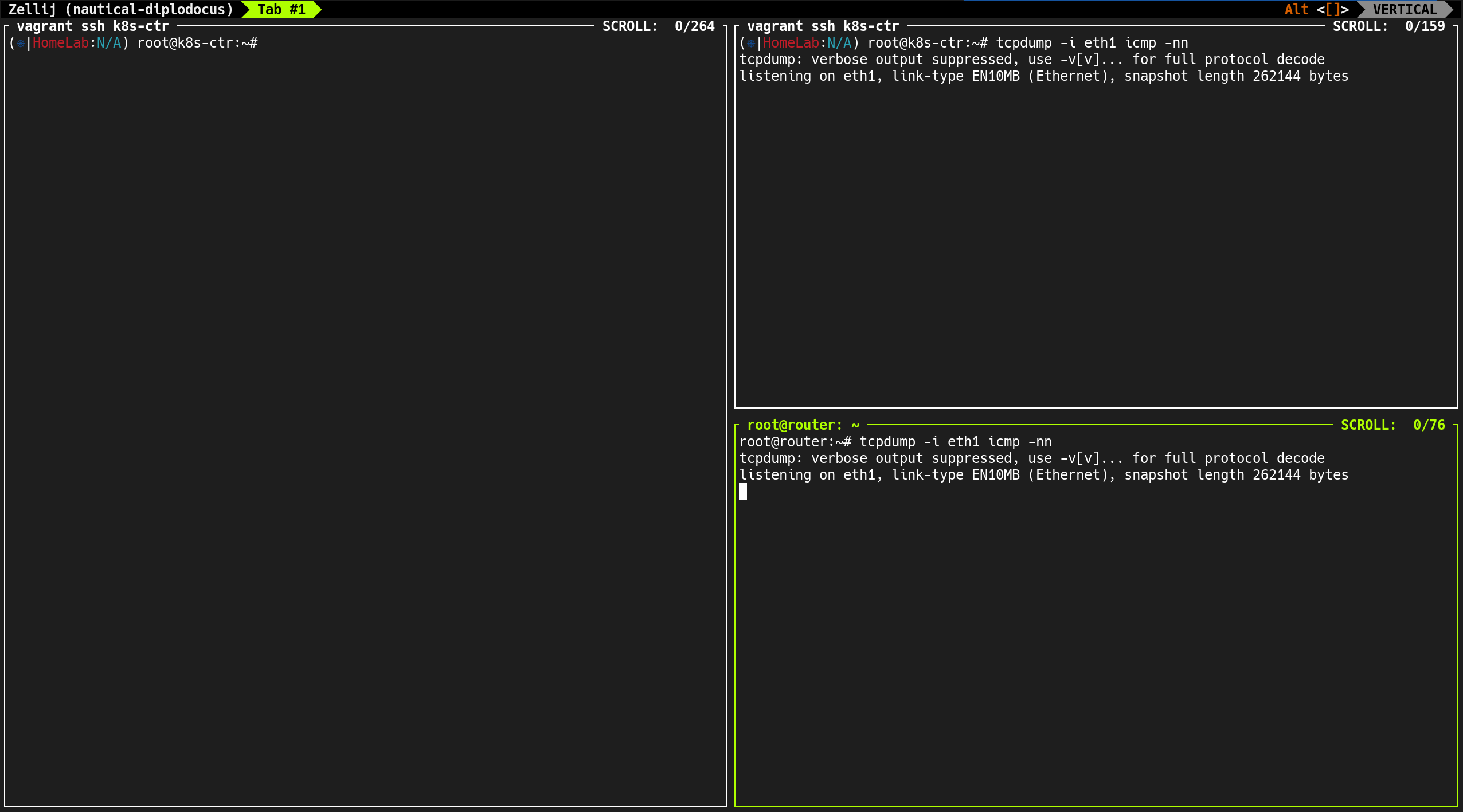

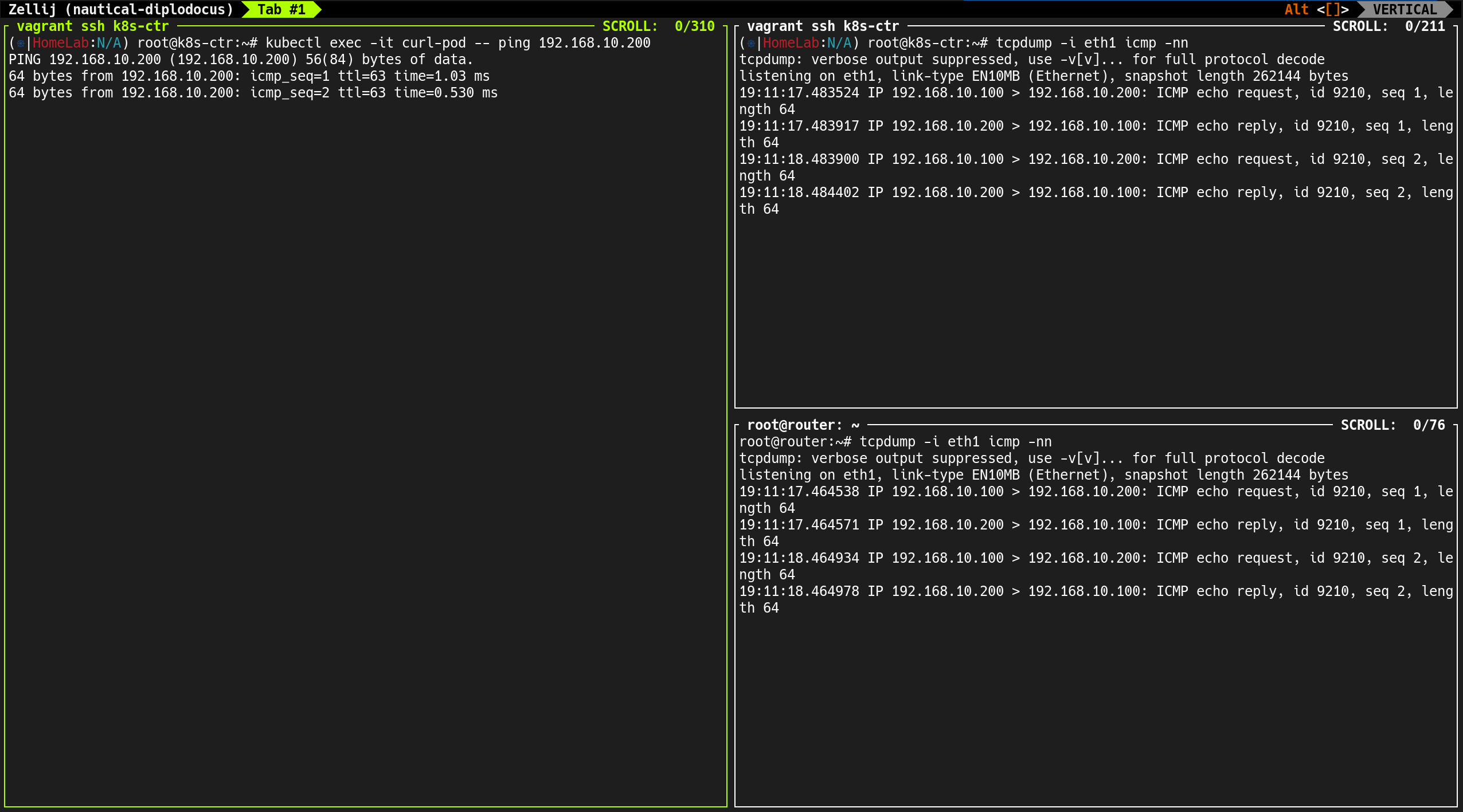

6. Capture packets with tcpdump

Watch ICMP traffic in real time with tcpdump -i eth1 icmp

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 icmp

✅ Output

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

18:20:34.129970 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 636, length 64

18:20:34.130563 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 636, length 64

18:20:35.153607 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 637, length 64

18:20:35.154045 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 637, length 64

18:20:36.178084 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 638, length 64

18:20:36.179263 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 638, length 64

18:20:37.179611 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 639, length 64

18:20:37.179994 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 639, length 64

18:20:38.225687 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 640, length 64

18:20:38.226119 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 640, length 64

...

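- curl-pod (172.20.1.236) → webpod-2 (172.20.0.130): the pods talk to each other directly

- Packets are sent with their native Pod IPs, without an overlay tunnel (VXLAN, Geneve). With an overlay, the outer header would be UDP between the node IPs, and the icmp capture filter would not match it at all.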

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 72m 172.20.1.236 k8s-ctr <none> <none>

webpod-556878d5d7-7p8bn 1/1 Running 0 73m 172.20.1.40 k8s-ctr <none> <none>

webpod-556878d5d7-r4dmh 1/1 Running 0 73m 172.20.0.130 k8s-w1 <none> <none>

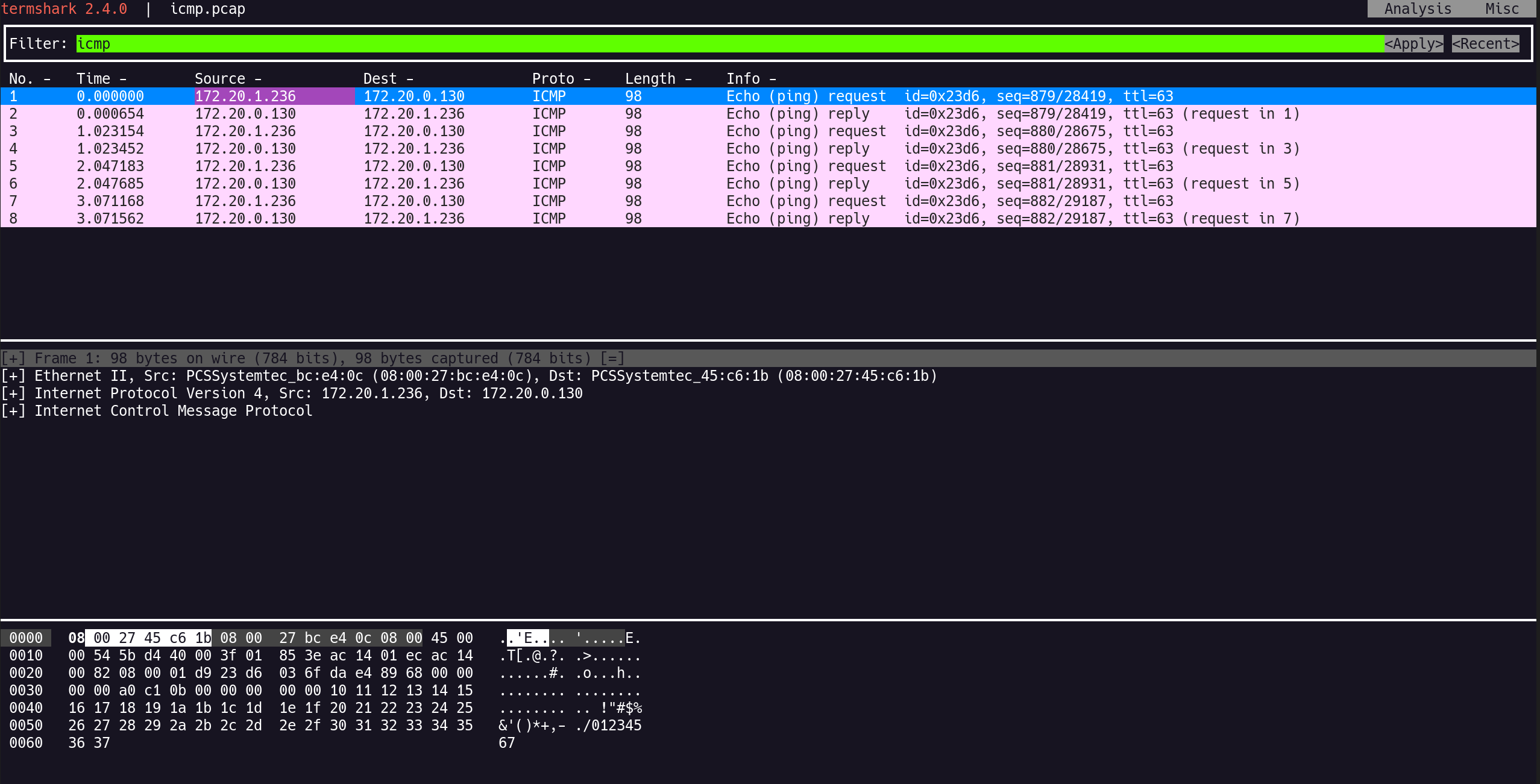

7. Save the tcpdump capture and analyze it with termshark

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 icmp -w /tmp/icmp.pcap

✅ Output

tcpdump: listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

^C8 packets captured

10 packets received by filter

0 packets dropped by kernel

In termshark, the source and destination IPs appear unchanged and there is no encapsulation header

(⎈|HomeLab:N/A) root@k8s-ctr:~# termshark -r /tmp/icmp.pcap

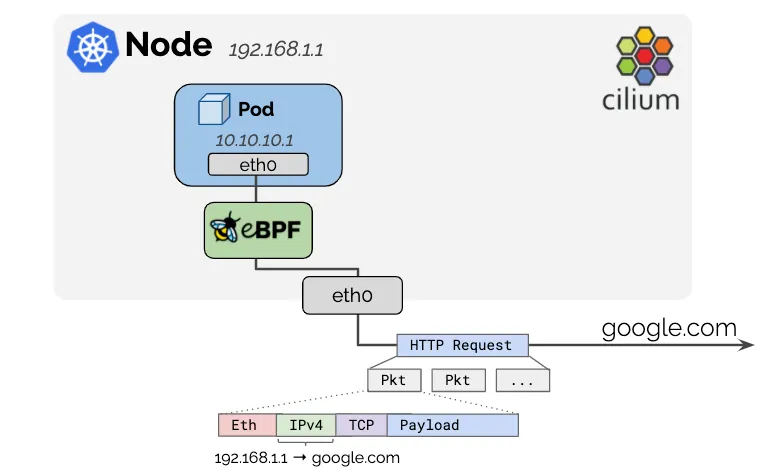

🔀 Masquerading

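Masquerading overview

- Devices with private IPs reach the outside through a home router by being NATed to its single public IP

- In the same way, Kubernetes Pods need to be masqueraded to the node IP when they talk to the internet

Masquerading in Kubernetes

- When a Pod goes out to the internet, its source IP is NATed (masqueraded) to the node IP

- Reason: Pod IPs usually come from private ranges that the outside network cannot route back to, whereas node IPs are already reachable on the network

- Only traffic leaving the cluster is masqueraded; traffic inside the cluster is not

How Cilium masquerades

- The source IP of all traffic leaving the cluster is translated to the node IP

- However, Pod-to-Pod traffic and traffic to the node IPs of the cluster are not masqueraded

- For example, when a Pod talks to a Pod on another node, its source IP must not be rewritten to the node IP, so such traffic needs an exception

Setting for the exception: ipv4-native-routing-cidr

- With a setting such as ipv4-native-routing-cidr: 10.0.0.0/8, traffic to IPs in that CIDR is not masqueraded

- It is usually set to the cluster's Pod CIDR range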
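The rules above boil down to a small decision. The sketch below is a simplified model of what this lab observes, assuming BPF masquerading, ipv4-native-routing-cidr 172.20.0.0/16 and node IPs 192.168.10.100 and 192.168.10.101; it is not Cilium's code and ignores details such as which devices masquerading is enabled on or ip-masq-agent settings. `would_masquerade` is a hypothetical helper.

```sh
# would_masquerade (hypothetical helper): simplified model of the IPv4 egress
# masquerading decision described above. Not Cilium's implementation.
#   usage: would_masquerade DST NATIVE_ROUTING_CIDR NODE_IP...
would_masquerade() (
  dst=$1 cidr=$2
  shift 2
  awk -v dst="$dst" -v cidr="$cidr" -v nodes="$*" '
    function ip2int(s,  o) { split(s, o, "."); return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4] }
    BEGIN {
      split(cidr, c, "/"); span = 2 ^ (32 - c[2])
      if (int(ip2int(dst) / span) == int(ip2int(c[1]) / span)) {
        print "no (inside ipv4-native-routing-cidr)"; exit
      }
      n = split(nodes, nd, " ")
      for (i = 1; i <= n; i++)
        if (nd[i] == dst) { print "no (cluster node IP)"; exit }
      print "yes (SNAT to node IP)"
    }'
)
```

With this lab's values, `would_masquerade 192.168.10.200 172.20.0.0/16 192.168.10.100 192.168.10.101` answers yes, matching the router test below, while a Pod IP or the other node's IP answers no.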

1. Check the masquerading status

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium status | grep Masquerading

✅ Output

Masquerading: BPF [eth0, eth1] 172.20.0.0/16 [IPv4: Enabled, IPv6: Disabled]

2. Check ipv4-native-routing-cidr

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep ipv4-native-routing-cidr

✅ Output

ipv4-native-routing-cidr 172.20.0.0/16

- Traffic to 172.20.0.0/16 is not masqueraded; with BPF masquerading, traffic to the node IPs of the same cluster is excluded as well

3. Check whether pod-to-pod traffic is masqueraded

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 icmp -nn

✅ Output

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

18:46:02.125979 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 2131, length 64

18:46:02.126385 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 2131, length 64

18:46:03.153938 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 2132, length 64

18:46:03.154695 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 2132, length 64

18:46:04.154704 IP 172.20.1.236 > 172.20.0.130: ICMP echo request, id 9174, seq 2133, length 64

18:46:04.155285 IP 172.20.0.130 > 172.20.1.236: ICMP echo reply, id 9174, seq 2133, length 64

...

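- tcpdump shows the ICMP requests and replies with the Pod source IP preserved

- Pod-to-pod traffic is not masqueraded

Next, ping the IP of node k8s-w1 (192.168.10.101) from curl-pod while capturing on eth1: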

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping 192.168.10.101

(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 icmp -nn

✅ Output

64 bytes from 192.168.10.101: icmp_seq=1 ttl=63 time=0.333 ms

64 bytes from 192.168.10.101: icmp_seq=2 ttl=63 time=0.535 ms

64 bytes from 192.168.10.101: icmp_seq=3 ttl=63 time=0.499 ms

...


tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

18:48:32.790099 IP 172.20.1.236 > 192.168.10.101: ICMP echo request, id 9180, seq 1, length 64

18:48:32.790388 IP 192.168.10.101 > 172.20.1.236: ICMP echo reply, id 9180, seq 1, length 64

18:48:33.809718 IP 172.20.1.236 > 192.168.10.101: ICMP echo request, id 9180, seq 2, length 64

18:48:33.810202 IP 192.168.10.101 > 172.20.1.236: ICMP echo reply, id 9180, seq 2, length 64

18:48:34.833711 IP 172.20.1.236 > 192.168.10.101: ICMP echo request, id 9180, seq 3, length 64

18:48:34.834176 IP 192.168.10.101 > 172.20.1.236: ICMP echo reply, id 9180, seq 3, length 64

...

- Had masquerading (NAT) been applied, the source IP would have changed to the node IP (e.g. 192.168.10.100)

- So traffic to another node in the cluster (192.168.10.101) is not masqueraded either

🧪 Masquerading Lab

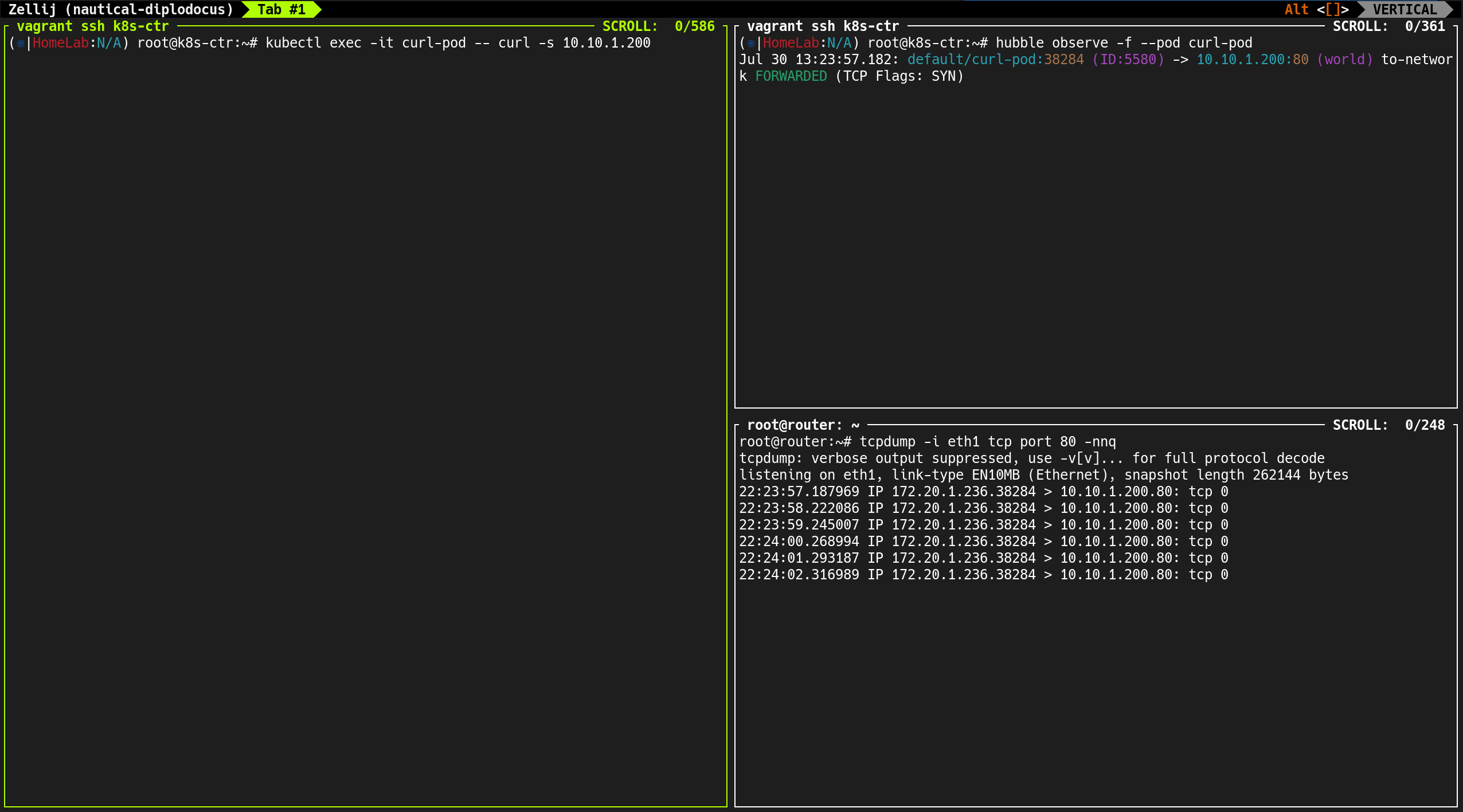

1. Masquerading lab overview

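- Goal: check whether masquerading is applied when a pod communicates with a server outside the cluster (router)

- Comparison

  - Traffic to nodes inside the cluster

  - Traffic to a server outside the cluster (router)

- Environment

  - curl-pod, webpod, and the external router server (192.168.10.200)

  - Same network range: 192.168.10.0/24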

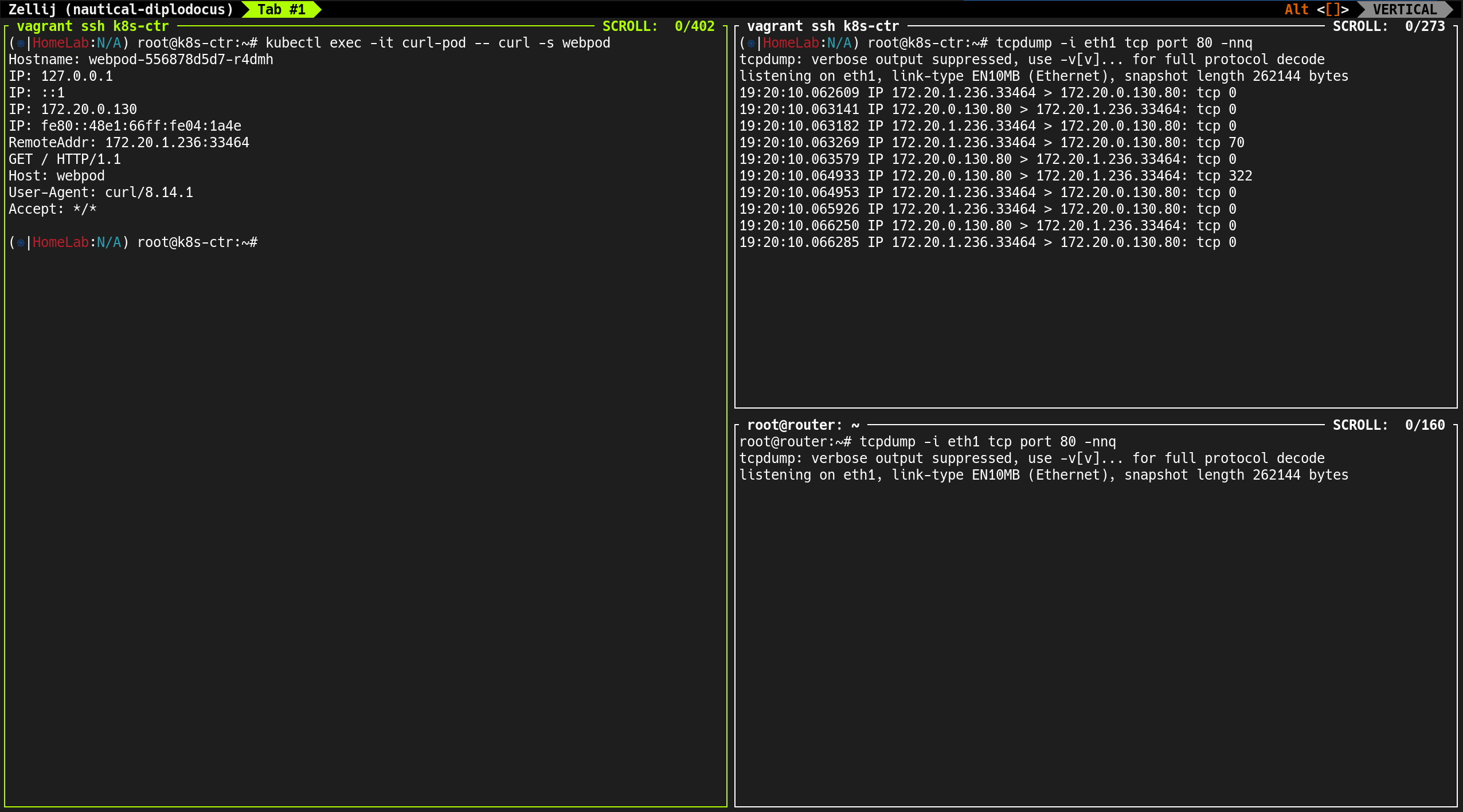

2. Intra-cluster traffic: not masqueraded

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- curl -s webpod | grep Hostname

Hostname: webpod-556878d5d7-r4dmh

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- curl -s webpod | grep Hostname

Hostname: webpod-556878d5d7-7p8bn


(⎈|HomeLab:N/A) root@k8s-ctr:~# tcpdump -i eth1 icmp -nn

root@router:~# tcpdump -i eth1 icmp -nn

The source IP remains the pod IP (172.20.1.236)

kubectl exec -it curl-pod -- ping 192.168.10.101


(⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 121m 172.20.1.236 k8s-ctr <none> <none>

webpod-556878d5d7-7p8bn 1/1 Running 0 122m 172.20.1.40 k8s-ctr <none> <none>

webpod-556878d5d7-r4dmh 1/1 Running 0 123m 172.20.0.130 k8s-w1 <none> <none>

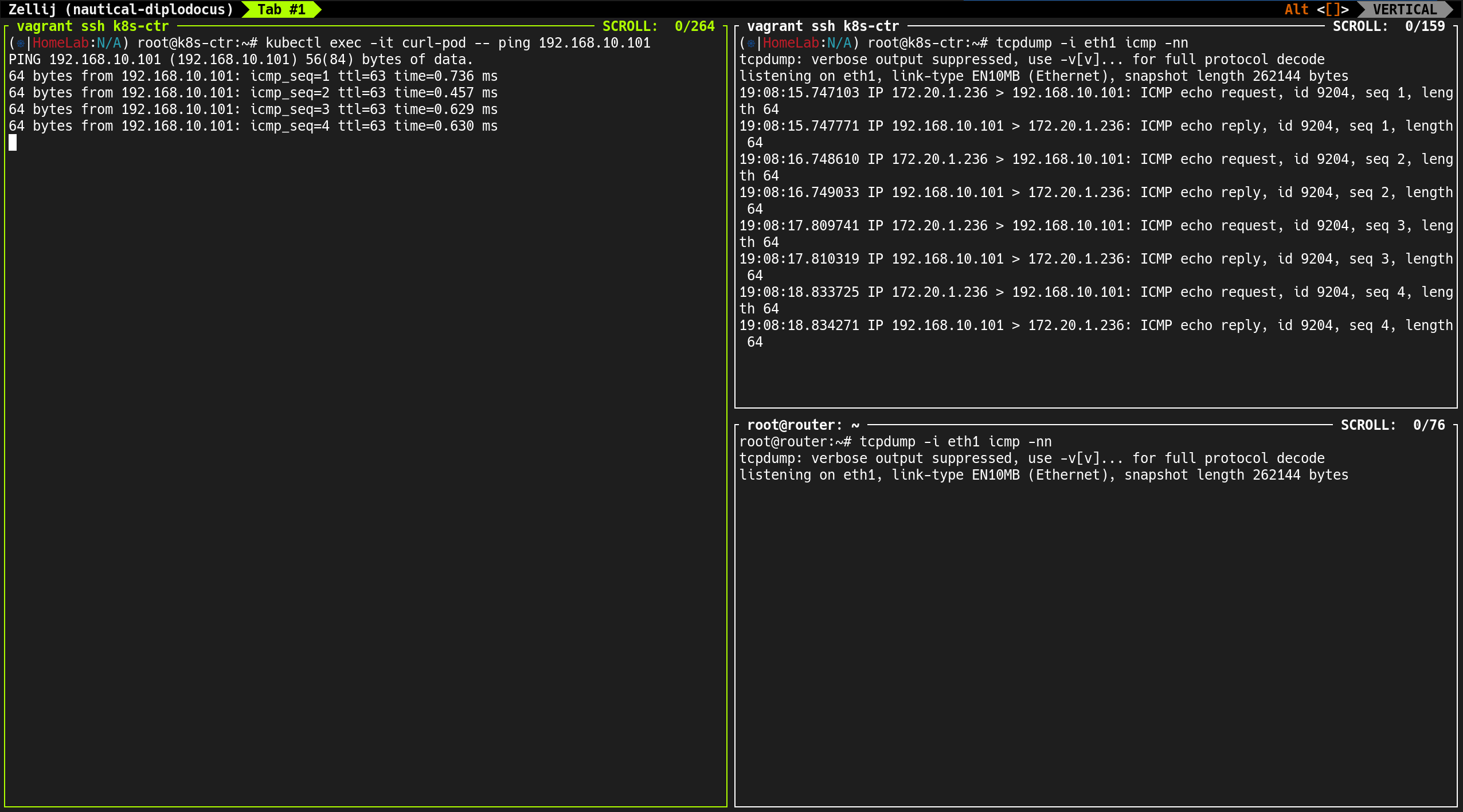

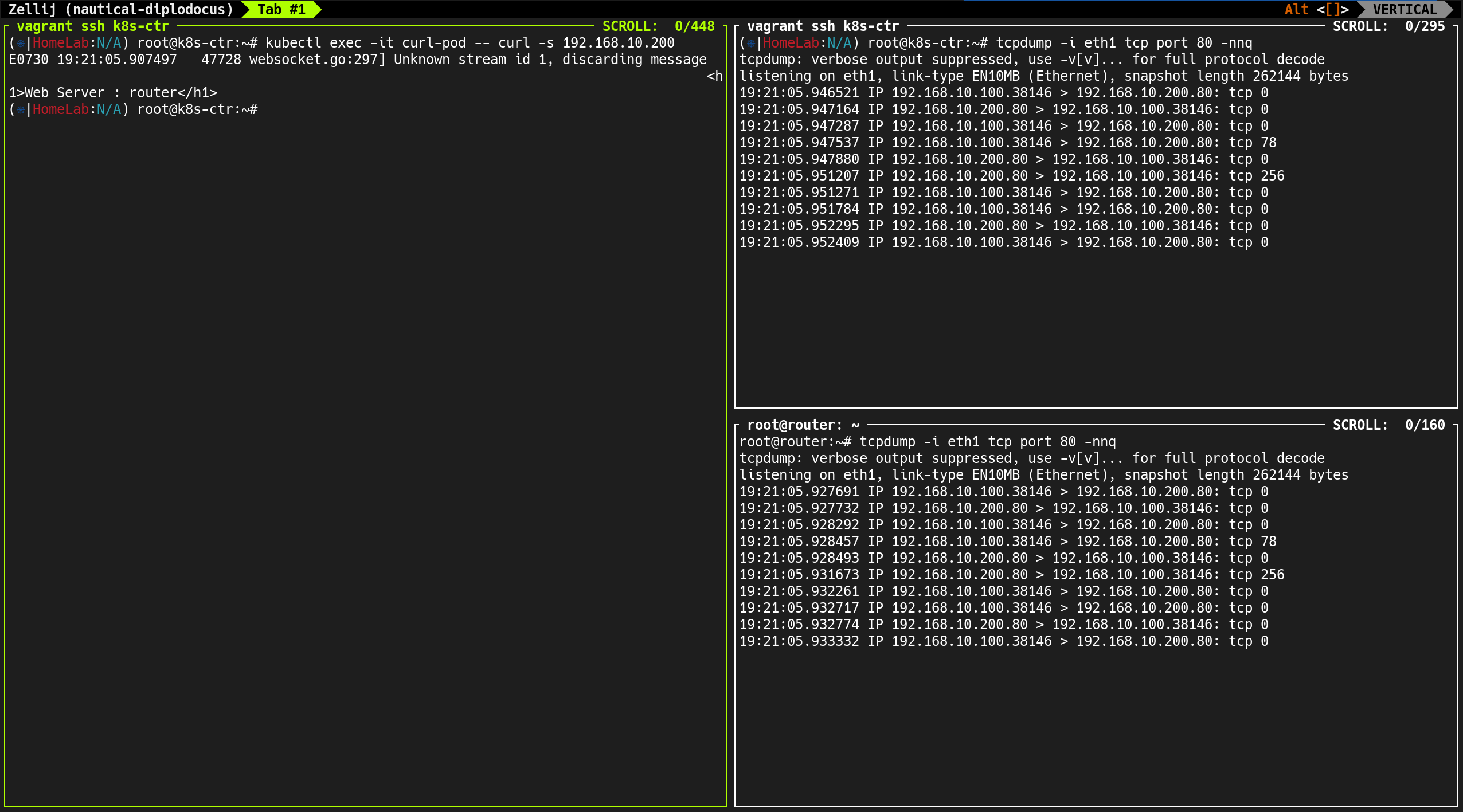

3. Traffic leaving the cluster (router): masqueraded

The source IP is NATed from the pod IP to the IP of the node hosting the pod (192.168.10.100)

kubectl exec -it curl-pod -- ping 192.168.10.200

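- Cilium automatically masquerades traffic leaving the cluster

- Even though the router is in the same network range, it is an external server rather than a cluster node, so NAT is applied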
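Because the SNAT source is the IP of the node the pod runs on, it can be predicted from the `kubectl get pod -owide` output shown earlier. This minimal sketch rests on two assumptions:

- It uses this lab's node-name to IP mapping (k8s-ctr → 192.168.10.100, k8s-w1 → 192.168.10.101).
- The RESTARTS column must be a bare number. A suffix such as `(5m ago)` would shift the awk field numbers.

`node_ip_for` and `expected_snat_src` are hypothetical helpers.

```shell
#!/bin/sh
# Predict the SNAT source for a pod: the IP of the node it runs on.
# Assumption: this lab's node-name -> IP mapping; edit it for other clusters.
node_ip_for() {
    case "$1" in
        k8s-ctr) echo 192.168.10.100 ;;
        k8s-w1)  echo 192.168.10.101 ;;
        *)       echo unknown ;;
    esac
}

# $1 = pod name, stdin = `kubectl get pod -owide` output.
# Field 7 is NODE as long as RESTARTS is a bare number.
expected_snat_src() {
    node=$(awk -v pod="$1" '$1 == pod { print $7 }')
    node_ip_for "$node"
}

# Table copied from the `k get pod -owide` output above; prints 192.168.10.100.
expected_snat_src curl-pod <<'EOF'
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
curl-pod 1/1 Running 0 121m 172.20.1.236 k8s-ctr <none> <none>
webpod-556878d5d7-7p8bn 1/1 Running 0 122m 172.20.1.40 k8s-ctr <none> <none>
webpod-556878d5d7-r4dmh 1/1 Running 0 123m 172.20.0.130 k8s-w1 <none> <none>
EOF
```

On a live cluster, `kubectl get pod curl-pod -o jsonpath='{.status.hostIP}'` reports the node IP that kubelet registered. If that matches the eth1 address, it avoids parsing the table.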

4. Watch traffic flows in real time with hubble observe

hubble observe -f --pod curl-pod

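- Shows the traffic flows originating from curl-pod in real time

- When calling the external server (router: 192.168.10.200) → the source IP is masqueraded to the node IP before the packet is sent

5. Verify masquerading over TCP port 80 as well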

kubectl exec -it curl-pod -- curl -s webpod


kubectl exec -it curl-pod -- curl -s 192.168.10.200

- External traffic (192.168.10.200) → the source IP is the node IP

6. Check the router's loopback (dummy) interfaces

The router (192.168.10.200) has two dummy interfaces (loop1, loop2)

root@router:~# ip -br -c -4 addr

✅ Output

lo UNKNOWN 127.0.0.1/8

eth0 UP 10.0.2.15/24 metric 100

eth1 UP 192.168.10.200/24

loop1 UNKNOWN 10.10.1.200/24

loop2 UNKNOWN 10.10.2.200/24


root@router:~# ip -c a

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:6b:69:c9 brd ff:ff:ff:ff:ff:ff

altname enp0s3

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 69510sec preferred_lft 69510sec

inet6 fd17:625c:f037:2:a00:27ff:fe6b:69c9/64 scope global dynamic mngtmpaddr noprefixroute

valid_lft 85902sec preferred_lft 13902sec

inet6 fe80::a00:27ff:fe6b:69c9/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:dc:00:69 brd ff:ff:ff:ff:ff:ff

altname enp0s8

inet 192.168.10.200/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever