🖥️ Lab Environment Setup

1. Download the Vagrantfile and provision the virtual machines

| curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/2w/Vagrantfile

vagrant up

|

✅ Output

| Bringing machine 'k8s-ctr' up with 'virtualbox' provider...

Bringing machine 'k8s-w1' up with 'virtualbox' provider...

Bringing machine 'k8s-w2' up with 'virtualbox' provider...

==> k8s-ctr: Preparing master VM for linked clones...

k8s-ctr: This is a one time operation. Once the master VM is prepared,

k8s-ctr: it will be used as a base for linked clones, making the creation

k8s-ctr: of new VMs take milliseconds on a modern system.

==> k8s-ctr: Importing base box 'bento/ubuntu-24.04'...

==> k8s-ctr: Cloning VM...

==> k8s-ctr: Matching MAC address for NAT networking...

==> k8s-ctr: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-ctr: Setting the name of the VM: k8s-ctr

==> k8s-ctr: Clearing any previously set network interfaces...

==> k8s-ctr: Preparing network interfaces based on configuration...

k8s-ctr: Adapter 1: nat

k8s-ctr: Adapter 2: hostonly

==> k8s-ctr: Forwarding ports...

k8s-ctr: 22 (guest) => 60000 (host) (adapter 1)

==> k8s-ctr: Running 'pre-boot' VM customizations...

==> k8s-ctr: Booting VM...

==> k8s-ctr: Waiting for machine to boot. This may take a few minutes...

k8s-ctr: SSH address: 127.0.0.1:60000

k8s-ctr: SSH username: vagrant

k8s-ctr: SSH auth method: private key

k8s-ctr:

k8s-ctr: Vagrant insecure key detected. Vagrant will automatically replace

k8s-ctr: this with a newly generated keypair for better security.

k8s-ctr:

k8s-ctr: Inserting generated public key within guest...

k8s-ctr: Removing insecure key from the guest if it's present...

k8s-ctr: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-ctr: Machine booted and ready!

==> k8s-ctr: Checking for guest additions in VM...

==> k8s-ctr: Setting hostname...

==> k8s-ctr: Configuring and enabling network interfaces...

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250725-4641-sd34li.sh

k8s-ctr: >>>> Initial Config Start <<<<

k8s-ctr: [TASK 1] Setting Profile & Bashrc

k8s-ctr: [TASK 2] Disable AppArmor

k8s-ctr: [TASK 3] Disable and turn off SWAP

k8s-ctr: [TASK 4] Install Packages

k8s-ctr: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-ctr: [TASK 6] Install Packages & Helm

k8s-ctr: >>>> Initial Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250725-4641-ffu2wg.sh

k8s-ctr: >>>> K8S Controlplane config Start <<<<

k8s-ctr: [TASK 1] Initial Kubernetes

k8s-ctr: [TASK 2] Setting kube config file

k8s-ctr: [TASK 3] Source the completion

k8s-ctr: [TASK 4] Alias kubectl to k

k8s-ctr: [TASK 5] Install Kubectx & Kubens

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: [TASK 7] Install Cilium CNI

k8s-ctr: [TASK 8] Install Cilium CLI

k8s-ctr: cilium

k8s-ctr: [TASK 9] local DNS with hosts file

k8s-ctr: >>>> K8S Controlplane Config End <<<<

==> k8s-w1: Cloning VM...

==> k8s-w1: Matching MAC address for NAT networking...

==> k8s-w1: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-w1: Setting the name of the VM: k8s-w1

==> k8s-w1: Clearing any previously set network interfaces...

==> k8s-w1: Preparing network interfaces based on configuration...

k8s-w1: Adapter 1: nat

k8s-w1: Adapter 2: hostonly

==> k8s-w1: Forwarding ports...

k8s-w1: 22 (guest) => 60001 (host) (adapter 1)

==> k8s-w1: Running 'pre-boot' VM customizations...

==> k8s-w1: Booting VM...

==> k8s-w1: Waiting for machine to boot. This may take a few minutes...

k8s-w1: SSH address: 127.0.0.1:60001

k8s-w1: SSH username: vagrant

k8s-w1: SSH auth method: private key

k8s-w1:

k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w1: this with a newly generated keypair for better security.

k8s-w1:

k8s-w1: Inserting generated public key within guest...

k8s-w1: Removing insecure key from the guest if it's present...

k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w1: Machine booted and ready!

==> k8s-w1: Checking for guest additions in VM...

==> k8s-w1: Setting hostname...

==> k8s-w1: Configuring and enabling network interfaces...

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250725-4641-wbk3an.sh

k8s-w1: >>>> Initial Config Start <<<<

k8s-w1: [TASK 1] Setting Profile & Bashrc

k8s-w1: [TASK 2] Disable AppArmor

k8s-w1: [TASK 3] Disable and turn off SWAP

k8s-w1: [TASK 4] Install Packages

k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w1: [TASK 6] Install Packages & Helm

k8s-w1: >>>> Initial Config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250725-4641-3fipel.sh

k8s-w1: >>>> K8S Node config Start <<<<

k8s-w1: [TASK 1] K8S Controlplane Join

k8s-w1: >>>> K8S Node config End <<<<

==> k8s-w2: Cloning VM...

==> k8s-w2: Matching MAC address for NAT networking...

==> k8s-w2: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-w2: Setting the name of the VM: k8s-w2

==> k8s-w2: Clearing any previously set network interfaces...

==> k8s-w2: Preparing network interfaces based on configuration...

k8s-w2: Adapter 1: nat

k8s-w2: Adapter 2: hostonly

==> k8s-w2: Forwarding ports...

k8s-w2: 22 (guest) => 60002 (host) (adapter 1)

==> k8s-w2: Running 'pre-boot' VM customizations...

==> k8s-w2: Booting VM...

==> k8s-w2: Waiting for machine to boot. This may take a few minutes...

k8s-w2: SSH address: 127.0.0.1:60002

k8s-w2: SSH username: vagrant

k8s-w2: SSH auth method: private key

k8s-w2:

k8s-w2: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w2: this with a newly generated keypair for better security.

k8s-w2:

k8s-w2: Inserting generated public key within guest...

k8s-w2: Removing insecure key from the guest if it's present...

k8s-w2: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w2: Machine booted and ready!

==> k8s-w2: Checking for guest additions in VM...

==> k8s-w2: Setting hostname...

==> k8s-w2: Configuring and enabling network interfaces...

==> k8s-w2: Running provisioner: shell...

k8s-w2: Running: /tmp/vagrant-shell20250725-4641-9v5rvk.sh

k8s-w2: >>>> Initial Config Start <<<<

k8s-w2: [TASK 1] Setting Profile & Bashrc

k8s-w2: [TASK 2] Disable AppArmor

k8s-w2: [TASK 3] Disable and turn off SWAP

k8s-w2: [TASK 4] Install Packages

k8s-w2: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w2: [TASK 6] Install Packages & Helm

k8s-w2: >>>> Initial Config End <<<<

==> k8s-w2: Running provisioner: shell...

k8s-w2: Running: /tmp/vagrant-shell20250725-4641-c0575o.sh

k8s-w2: >>>> K8S Node config Start <<<<

k8s-w2: [TASK 1] K8S Controlplane Join

k8s-w2: >>>> K8S Node config End <<<<

|

2. Connect to the VMs and check basic information

(1) Connect to k8s-ctr

✅ Output

| Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Fri Jul 25 08:33:03 PM KST 2025

System load: 0.57

Usage of /: 25.0% of 30.34GB

Memory usage: 50%

Swap usage: 0%

Processes: 171

Users logged in: 0

IPv4 address for eth0: 10.0.2.15

IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.

(⎈|HomeLab:N/A) root@k8s-ctr:~#

|

(2) Check the hostname-to-IP mappings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat /etc/hosts

|

✅ Output

| 127.0.0.1 localhost

127.0.1.1 vagrant

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.2.1 k8s-ctr k8s-ctr

192.168.10.100 k8s-ctr

|

(3) Use sshpass to connect to k8s-w1 and k8s-w2 remotely and check their hostnames

| (⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w1 hostname

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w2 hostname

Warning: Permanently added 'k8s-w2' (ED25519) to the list of known hosts.

k8s-w2

|

3. Check the kubeadm-config settings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe cm -n kube-system kubeadm-config

|

✅ Output

| Name: kubeadm-config

Namespace: kube-system

Labels: <none>

Annotations: <none>

Data

====

ClusterConfiguration:

----

apiServer: {}

apiVersion: kubeadm.k8s.io/v1beta4

caCertificateValidityPeriod: 87600h0m0s

certificateValidityPeriod: 8760h0m0s

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

encryptionAlgorithm: RSA-2048

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.k8s.io

kind: ClusterConfiguration

kubernetesVersion: v1.33.2

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/16

proxy:

disabled: true

scheduler: {}

BinaryData

====

Events: <none>

|

- Network settings: dnsDomain: cluster.local, podSubnet: 10.244.0.0/16, serviceSubnet: 10.96.0.0/16

4. Check the kubelet-config settings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe cm -n kube-system kubelet-config

|

✅ Output

| Name: kubelet-config

Namespace: kube-system

Labels: <none>

Annotations: kubeadm.kubernetes.io/component-config.hash: sha256:0ff07274ab31cc8c0f9d989e90179a90b6e9b633c8f3671993f44185a0791127

Data

====

kubelet:

----

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerRuntimeEndpoint: ""

cpuManagerReconcilePeriod: 0s

crashLoopBackOff: {}

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMaximumGCAge: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging:

flushFrequency: 0

options:

json:

infoBufferSize: "0"

text:

infoBufferSize: "0"

verbosity: 0

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

resolvConf: /run/systemd/resolve/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

BinaryData

====

Events: <none>

|

5. Check node status and IPs

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

|

✅ Output

| NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr Ready control-plane 12m v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w1 Ready <none> 9m38s v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w2 Ready <none> 7m40s v1.33.2 192.168.10.102 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

|

6. Check kubeadm-flags.env on each node

Node IPs: k8s-ctr: 192.168.10.100, k8s-w1: 192.168.10.101, k8s-w2: 192.168.10.102

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cat /var/lib/kubelet/kubeadm-flags.env

|

✅ Output

| KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --node-ip=192.168.10.100 --pod-infra-container-image=registry.k8s.io/pause:3.10"

|

| (⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i cat /var/lib/kubelet/kubeadm-flags.env ; echo; done

|

✅ Output

| >> node : k8s-w1 <<

KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --node-ip=192.168.10.101 --pod-infra-container-image=registry.k8s.io/pause:3.10"

>> node : k8s-w2 <<

KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --node-ip=192.168.10.102 --pod-infra-container-image=registry.k8s.io/pause:3.10"

|
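To compare each kubelet's `--node-ip` with the INTERNAL-IP column without reading the whole line, the flag can be pulled out with `sed`. This is a minimal sketch: `node_ip_from_flags` is a hypothetical helper name (not part of kubeadm), and it assumes the `--node-ip=<value>` form shown in the output above.

```shell
# Hypothetical helper: print the --node-ip value from kubeadm-flags.env content on stdin.
node_ip_from_flags() {
  # Capture everything after "--node-ip=" up to the next space or double quote.
  sed -n 's/.*--node-ip=\([^ "]*\).*/\1/p'
}

# Example using the k8s-w1 line from this lab. On a node you would pipe in
# /var/lib/kubelet/kubeadm-flags.env instead.
echo 'KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --node-ip=192.168.10.101 --pod-infra-container-image=registry.k8s.io/pause:3.10"' | node_ip_from_flags
```

The example prints `192.168.10.101`, which matches the INTERNAL-IP reported for k8s-w1.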

7. Check the Pod CIDRs

(1) Pod CIDRs assigned by kube-controller-manager

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

|

✅ Output

| k8s-ctr 10.244.0.0/24

k8s-w1 10.244.1.0/24

k8s-w2 10.244.2.0/24

|

(2) Pod CIDRs managed by Cilium

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumnode -o json | grep podCIDRs -A2

|

✅ Output

| "podCIDRs": [

"172.20.0.0/24"

],

--

"podCIDRs": [

"172.20.1.0/24"

],

--

"podCIDRs": [

"172.20.2.0/24"

],

|
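The two listings differ because this lab runs Cilium with `ipam cluster-pool`, where Cilium hands out per-node blocks from its own pool instead of using the node's `.spec.podCIDR`. The `cilium config view` output in this post reports `cluster-pool-ipv4-cidr 172.20.0.0/16` and `cluster-pool-ipv4-mask-size 24`. The arithmetic behind those two values can be sketched as follows; the function names are hypothetical and exist only for illustration.

```shell
# Number of per-node blocks a pool can be split into.
# $1 = pool prefix length, $2 = per-node mask size
pool_blocks() {
  echo $(( 1 << ($2 - $1) ))
}

# Number of IPv4 addresses in one block of the given prefix length.
block_addresses() {
  echo $(( 1 << (32 - $1) ))
}

pool_blocks 16 24      # 172.20.0.0/16 split into /24 blocks
block_addresses 24     # addresses in each /24 block
```

Both calls print 256: the /16 pool yields 256 per-node /24 blocks, and each block spans 256 addresses. The three nodes here use 172.20.0.0/24, 172.20.1.0/24, and 172.20.2.0/24.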

8. Check Cilium status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium status

|

✅ Output

| /¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: disabled

\__/ ClusterMesh: disabled

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

DaemonSet cilium-envoy Desired: 3, Ready: 3/3, Available: 3/3

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 3

cilium-envoy Running: 3

cilium-operator Running: 1

clustermesh-apiserver

hubble-relay

Cluster Pods: 2/2 managed by Cilium

Helm chart version: 1.17.6

Image versions cilium quay.io/cilium/cilium:v1.17.6@sha256:544de3d4fed7acba72758413812780a4972d47c39035f2a06d6145d8644a3353: 3

cilium-envoy quay.io/cilium/cilium-envoy:v1.33.4-1752151664-7c2edb0b44cf95f326d628b837fcdd845102ba68@sha256:318eff387835ca2717baab42a84f35a83a5f9e7d519253df87269f80b9ff0171: 3

cilium-operator quay.io/cilium/operator-generic:v1.17.6@sha256:91ac3bf7be7bed30e90218f219d4f3062a63377689ee7246062fa0cc3839d096: 1

|

9. Check detailed Cilium settings

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view

|

✅ Output

| agent-not-ready-taint-key node.cilium.io/agent-not-ready

arping-refresh-period 30s

auto-direct-node-routes true

bpf-distributed-lru false

bpf-events-drop-enabled true

bpf-events-policy-verdict-enabled true

bpf-events-trace-enabled true

bpf-lb-acceleration disabled

bpf-lb-algorithm-annotation false

bpf-lb-external-clusterip false

bpf-lb-map-max 65536

bpf-lb-mode-annotation false

bpf-lb-sock false

bpf-lb-source-range-all-types false

bpf-map-dynamic-size-ratio 0.0025

bpf-policy-map-max 16384

bpf-root /sys/fs/bpf

cgroup-root /run/cilium/cgroupv2

cilium-endpoint-gc-interval 5m0s

cluster-id 0

cluster-name default

cluster-pool-ipv4-cidr 172.20.0.0/16

cluster-pool-ipv4-mask-size 24

clustermesh-enable-endpoint-sync false

clustermesh-enable-mcs-api false

cni-exclusive true

cni-log-file /var/run/cilium/cilium-cni.log

custom-cni-conf false

datapath-mode veth

debug true

debug-verbose

default-lb-service-ipam lbipam

direct-routing-skip-unreachable false

dnsproxy-enable-transparent-mode true

dnsproxy-socket-linger-timeout 10

egress-gateway-reconciliation-trigger-interval 1s

enable-auto-protect-node-port-range true

enable-bpf-clock-probe false

enable-bpf-masquerade true

enable-endpoint-health-checking false

enable-endpoint-lockdown-on-policy-overflow false

enable-endpoint-routes true

enable-experimental-lb false

enable-health-check-loadbalancer-ip false

enable-health-check-nodeport true

enable-health-checking false

enable-hubble false

enable-internal-traffic-policy true

enable-ipv4 true

enable-ipv4-big-tcp false

enable-ipv4-masquerade true

enable-ipv6 false

enable-ipv6-big-tcp false

enable-ipv6-masquerade true

enable-k8s-networkpolicy true

enable-k8s-terminating-endpoint true

enable-l2-neigh-discovery true

enable-l7-proxy true

enable-lb-ipam true

enable-local-redirect-policy false

enable-masquerade-to-route-source false

enable-metrics true

enable-node-selector-labels false

enable-non-default-deny-policies true

enable-policy default

enable-policy-secrets-sync true

enable-runtime-device-detection true

enable-sctp false

enable-source-ip-verification true

enable-svc-source-range-check true

enable-tcx true

enable-vtep false

enable-well-known-identities false

enable-xt-socket-fallback true

envoy-access-log-buffer-size 4096

envoy-base-id 0

envoy-keep-cap-netbindservice false

external-envoy-proxy true

health-check-icmp-failure-threshold 3

http-retry-count 3

identity-allocation-mode crd

identity-gc-interval 15m0s

identity-heartbeat-timeout 30m0s

install-no-conntrack-iptables-rules true

ipam cluster-pool

ipam-cilium-node-update-rate 15s

iptables-random-fully false

ipv4-native-routing-cidr 172.20.0.0/16

k8s-require-ipv4-pod-cidr false

k8s-require-ipv6-pod-cidr false

kube-proxy-replacement true

kube-proxy-replacement-healthz-bind-address

max-connected-clusters 255

mesh-auth-enabled true

mesh-auth-gc-interval 5m0s

mesh-auth-queue-size 1024

mesh-auth-rotated-identities-queue-size 1024

monitor-aggregation medium

monitor-aggregation-flags all

monitor-aggregation-interval 5s

nat-map-stats-entries 32

nat-map-stats-interval 30s

node-port-bind-protection true

nodeport-addresses

nodes-gc-interval 5m0s

operator-api-serve-addr 127.0.0.1:9234

operator-prometheus-serve-addr :9963

policy-cidr-match-mode

policy-secrets-namespace cilium-secrets

policy-secrets-only-from-secrets-namespace true

preallocate-bpf-maps false

procfs /host/proc

proxy-connect-timeout 2

proxy-idle-timeout-seconds 60

proxy-initial-fetch-timeout 30

proxy-max-concurrent-retries 128

proxy-max-connection-duration-seconds 0

proxy-max-requests-per-connection 0

proxy-xff-num-trusted-hops-egress 0

proxy-xff-num-trusted-hops-ingress 0

remove-cilium-node-taints true

routing-mode native

service-no-backend-response reject

set-cilium-is-up-condition true

set-cilium-node-taints true

synchronize-k8s-nodes true

tofqdns-dns-reject-response-code refused

tofqdns-enable-dns-compression true

tofqdns-endpoint-max-ip-per-hostname 1000

tofqdns-idle-connection-grace-period 0s

tofqdns-max-deferred-connection-deletes 10000

tofqdns-proxy-response-max-delay 100ms

tunnel-protocol vxlan

tunnel-source-port-range 0-0

unmanaged-pod-watcher-interval 15

vtep-cidr

vtep-endpoint

vtep-mac

vtep-mask

write-cni-conf-when-ready /host/etc/cni/net.d/05-cilium.conflist

|

- Shows the current state of many settings, including CNI behavior, BPF policy, the L7 proxy, metrics, and the DNS proxy

10. Check the Cilium agent's runtime configuration

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg config

|

✅ Output

| ##### Read-write configurations #####

ConntrackAccounting : Disabled

ConntrackLocal : Disabled

Debug : Disabled

DebugLB : Disabled

DebugPolicy : Enabled

DropNotification : Enabled

MonitorAggregationLevel : Medium

PolicyAccounting : Enabled

PolicyAuditMode : Disabled

PolicyTracing : Disabled

PolicyVerdictNotification : Enabled

SourceIPVerification : Enabled

TraceNotification : Enabled

PolicyEnforcement : default

|

11. Check Cilium endpoint status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints -A

|

✅ Output

| NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

kube-system coredns-674b8bbfcf-8lcmh 3235 ready 172.20.0.21

kube-system coredns-674b8bbfcf-gjs6z 3235 ready 172.20.0.11

|

- IPv4 address of each endpoint: 172.20.0.21, 172.20.0.11
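Both endpoint addresses come from Cilium's cluster pool (172.20.0.0/16) rather than the kubeadm `podSubnet` (10.244.0.0/16). A small membership check makes that explicit. This is a sketch in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and only IPv4 dotted-quad input without leading zeros is assumed.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) when address $1 lies inside CIDR $2.
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for ip in 172.20.0.21 172.20.0.11; do
  if ip_in_cidr "$ip" 172.20.0.0/16; then
    echo "$ip is in the Cilium cluster pool"
  fi
  if ! ip_in_cidr "$ip" 10.244.0.0/16; then
    echo "$ip is outside the kubeadm podSubnet"
  fi
done
```

For both coredns endpoints the loop prints the "in the Cilium cluster pool" and "outside the kubeadm podSubnet" lines.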

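12. Monitoring strengths of Cilium and Hubble, and why Hubble was developed

- Cilium offers stronger monitoring support than other CNIs

- Because it is eBPF-based, it is hard to integrate with existing monitoring systems, and building a separate logging and monitoring stack is not easy

- To overcome this limitation, Hubble has been developed alongside Cilium from the early stages so that monitoring is easy to set up

13. Checks before installing Hubble

(1) Check whether Hubble is enabled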

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -i hubble

|

✅ Output

(2) Check whether Hubble-related Secrets exist

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get secret -n kube-system | grep -iE 'cilium-ca|hubble'

|

- Nothing is printed

- Hubble is not installed yet; the Secrets will be created when it is installed

(3) Check the currently listening TCP ports

| (⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep -iE 'cilium|hubble' | tee before.txt

|

✅ Output

| LISTEN 0 4096 127.0.0.1:46395 0.0.0.0:* users:(("cilium-agent",pid=5229,fd=42))

LISTEN 0 4096 127.0.0.1:9234 0.0.0.0:* users:(("cilium-operator",pid=4772,fd=9))

LISTEN 0 4096 0.0.0.0:9964 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=25))

LISTEN 0 4096 0.0.0.0:9964 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=24))

LISTEN 0 4096 127.0.0.1:9890 0.0.0.0:* users:(("cilium-agent",pid=5229,fd=6))

LISTEN 0 4096 127.0.0.1:9891 0.0.0.0:* users:(("cilium-operator",pid=4772,fd=6))

LISTEN 0 4096 127.0.0.1:9878 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=27))

LISTEN 0 4096 127.0.0.1:9878 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=26))

LISTEN 0 4096 127.0.0.1:9879 0.0.0.0:* users:(("cilium-agent",pid=5229,fd=61))

LISTEN 0 4096 *:9963 *:* users:(("cilium-operator",pid=4772,fd=7))

|

- The port state before enabling Hubble is saved to before.txt so it can be compared afterwards

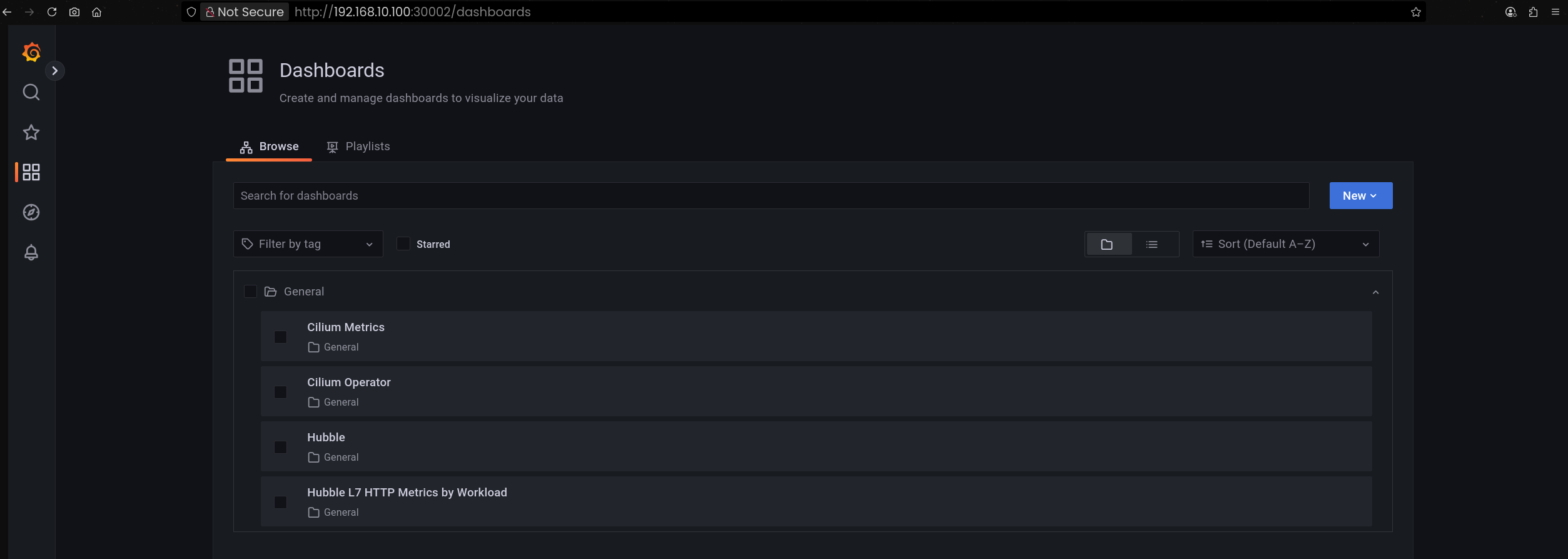

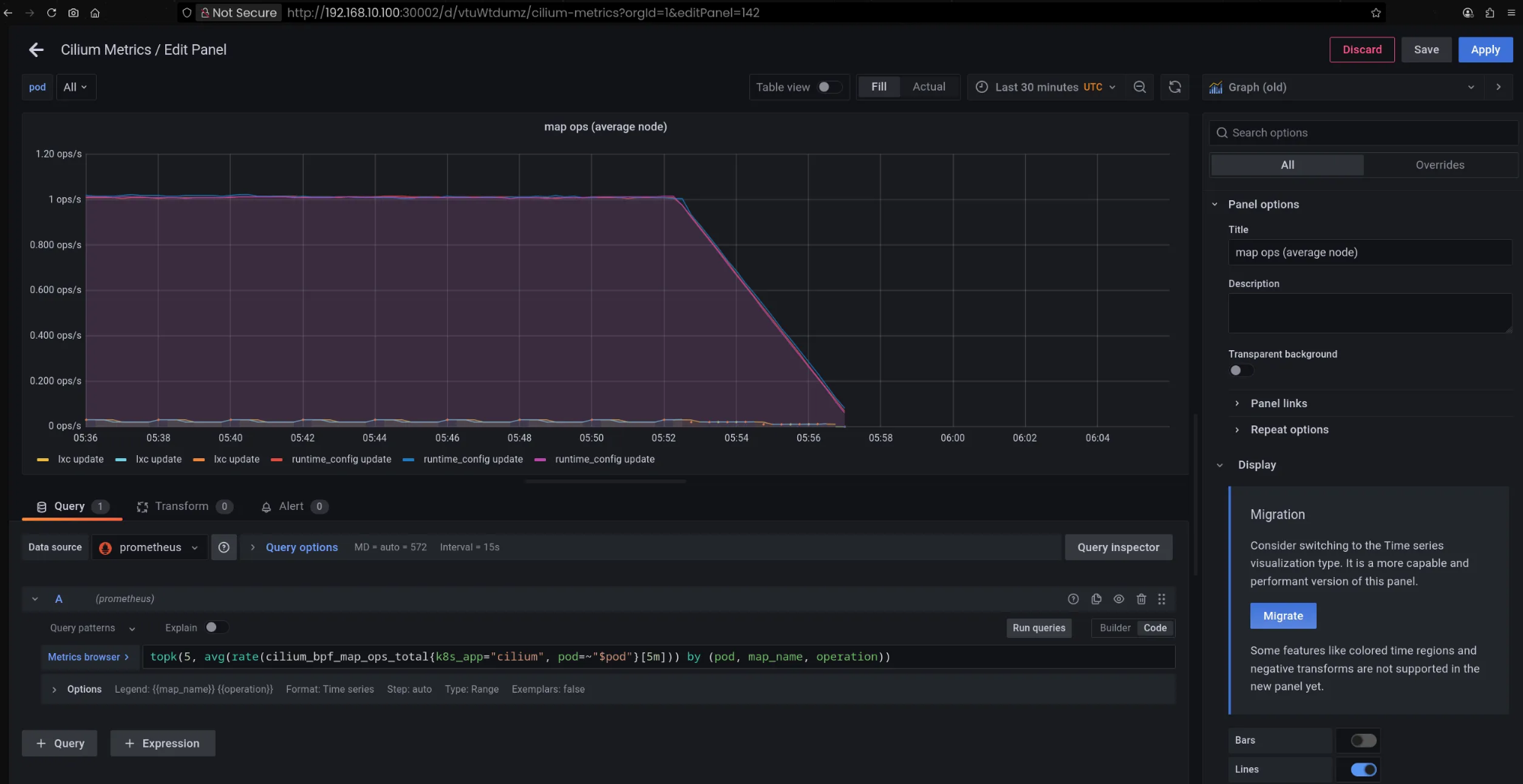

🔭 Installing and Configuring Hubble

1. Install Hubble (Helm upgrade)

| (⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values \

--set hubble.enabled=true \

--set hubble.relay.enabled=true \

--set hubble.ui.enabled=true \

--set hubble.ui.service.type=NodePort \

--set hubble.ui.service.nodePort=31234 \

--set hubble.export.static.enabled=true \

--set hubble.export.static.filePath=/var/run/cilium/hubble/events.log \

--set prometheus.enabled=true \

--set operator.prometheus.enabled=true \

--set hubble.metrics.enableOpenMetrics=true \

--set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"

|

✅ Output

| Release "cilium" has been upgraded. Happy Helming!

NAME: cilium

LAST DEPLOYED: Fri Jul 25 21:03:35 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.17.6.

For any further help, visit https://docs.cilium.io/en/v1.17/gettinghelp

|

- The UI service is exposed as NodePort 31234

- Prometheus and OpenMetrics are enabled

2. Check status after installation

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium status

|

✅ Output

| /¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: disabled

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

DaemonSet cilium-envoy Desired: 3, Ready: 3/3, Available: 3/3

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 3

cilium-envoy Running: 3

cilium-operator Running: 1

clustermesh-apiserver

hubble-relay Running: 1

hubble-ui Running: 1

Cluster Pods: 4/4 managed by Cilium

Helm chart version: 1.17.6

Image versions cilium quay.io/cilium/cilium:v1.17.6@sha256:544de3d4fed7acba72758413812780a4972d47c39035f2a06d6145d8644a3353: 3

cilium-envoy quay.io/cilium/cilium-envoy:v1.33.4-1752151664-7c2edb0b44cf95f326d628b837fcdd845102ba68@sha256:318eff387835ca2717baab42a84f35a83a5f9e7d519253df87269f80b9ff0171: 3

cilium-operator quay.io/cilium/operator-generic:v1.17.6@sha256:91ac3bf7be7bed30e90218f219d4f3062a63377689ee7246062fa0cc3839d096: 1

hubble-relay quay.io/cilium/hubble-relay:v1.17.6@sha256:7d17ec10b3d37341c18ca56165b2f29a715cb8ee81311fd07088d8bf68c01e60: 1

hubble-ui quay.io/cilium/hubble-ui-backend:v0.13.2@sha256:a034b7e98e6ea796ed26df8f4e71f83fc16465a19d166eff67a03b822c0bfa15: 1

hubble-ui quay.io/cilium/hubble-ui:v0.13.2@sha256:9e37c1296b802830834cc87342a9182ccbb71ffebb711971e849221bd9d59392: 1

|

- Hubble Relay has changed to OK

- The hubble-relay and hubble-ui pods have been deployed in addition

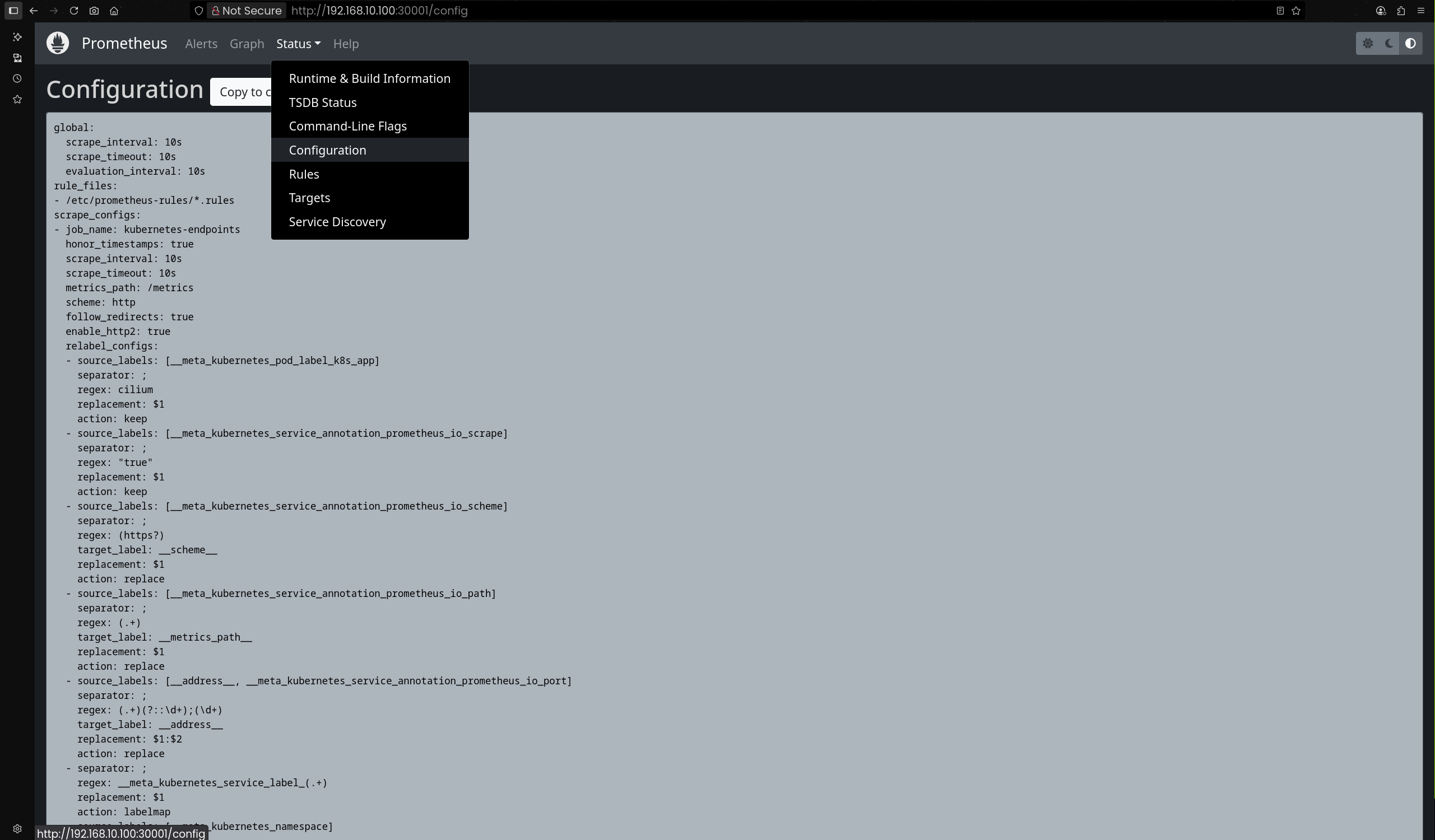

3. Check the configuration changes

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -i hubble

|

✅ Output

| enable-hubble true

enable-hubble-open-metrics true

hubble-disable-tls false

hubble-export-allowlist

hubble-export-denylist

hubble-export-fieldmask

hubble-export-file-max-backups 5

hubble-export-file-max-size-mb 10

hubble-export-file-path /var/run/cilium/hubble/events.log

hubble-listen-address :4244

hubble-metrics dns drop tcp flow port-distribution icmp httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction

hubble-metrics-server :9965

hubble-metrics-server-enable-tls false

hubble-socket-path /var/run/cilium/hubble.sock

hubble-tls-cert-file /var/lib/cilium/tls/hubble/server.crt

hubble-tls-client-ca-files /var/lib/cilium/tls/hubble/client-ca.crt

hubble-tls-key-file /var/lib/cilium/tls/hubble/server.key

|

- enable-hubble: true

- hubble-metrics-server: :9965

- hubble-listen-address: :4244

- hubble-export-file-path: /var/run/cilium/hubble/events.log

- TLS cert/key paths have been added
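The `hubble-metrics` value is a whitespace-separated list in which an entry may carry options after a colon, as `httpV2` does here. To see just the enabled metric names, the value can be split as in the sketch below; `metric_names` is a hypothetical helper, and the input string is the value from the output above.

```shell
# Hypothetical helper: print one metric name per line from a hubble-metrics value.
metric_names() {
  # Entries are separated by whitespace; options follow the first ':' of an entry.
  for entry in $1; do
    echo "${entry%%:*}"
  done
}

metric_names 'dns drop tcp flow port-distribution icmp httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction'
```

This lists the seven metrics passed to `hubble.metrics.enabled` in the Helm upgrade: dns, drop, tcp, flow, port-distribution, icmp, and httpV2.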

4. Verify that the Secrets were created

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get secret -n kube-system | grep -iE 'cilium-ca|hubble'

|

✅ Output

| cilium-ca Opaque 2 3m43s

hubble-relay-client-certs kubernetes.io/tls 3 3m43s

hubble-server-certs kubernetes.io/tls 3 3m43s

|

- Secrets that did not exist before the installation have been added

5. Check the port changes

| (⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep -iE 'cilium|hubble' | tee after.txt

|

✅ Output

| LISTEN 0 4096 127.0.0.1:46395 0.0.0.0:* users:(("cilium-agent",pid=7666,fd=53))

LISTEN 0 4096 127.0.0.1:9234 0.0.0.0:* users:(("cilium-operator",pid=4772,fd=9))

LISTEN 0 4096 0.0.0.0:9964 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=25))

LISTEN 0 4096 0.0.0.0:9964 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=24))

LISTEN 0 4096 127.0.0.1:9890 0.0.0.0:* users:(("cilium-agent",pid=7666,fd=6))

LISTEN 0 4096 127.0.0.1:9891 0.0.0.0:* users:(("cilium-operator",pid=4772,fd=6))

LISTEN 0 4096 127.0.0.1:9878 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=27))

LISTEN 0 4096 127.0.0.1:9878 0.0.0.0:* users:(("cilium-envoy",pid=4828,fd=26))

LISTEN 0 4096 127.0.0.1:9879 0.0.0.0:* users:(("cilium-agent",pid=7666,fd=58))

LISTEN 0 4096 *:4244 *:* users:(("cilium-agent",pid=7666,fd=49))

LISTEN 0 4096 *:9965 *:* users:(("cilium-agent",pid=7666,fd=31))

LISTEN 0 4096 *:9963 *:* users:(("cilium-operator",pid=4772,fd=7))

LISTEN 0 4096 *:9962 *:* users:(("cilium-agent",pid=7666,fd=7))

|

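- Ports added compared with before the installation: 4244, 9965, 9962

- 4244: the Hubble server inside each node's cilium-agent listens here

- 9965: Hubble metrics server

- 9962: cilium-agent Prometheus metrics endpoint (enabled by prometheus.enabled=true)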
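The same list of new ports can be derived from the two captures without reading them side by side. A minimal sketch, assuming the files hold raw `ss -tnlp` lines so that field 4 is the local `address:port`; `ports` and `new_ports` are hypothetical helper names.

```shell
# Print the unique listening ports found in an ss capture file.
ports() {
  # Field 4 is "Local Address:Port"; keep the text after the last colon.
  awk '{ n = split($4, a, ":"); print a[n] }' "$1" | LC_ALL=C sort -u
}

# Print ports present in the second capture ($2) but not in the first ($1).
new_ports() {
  before_sorted=$(mktemp)
  after_sorted=$(mktemp)
  ports "$1" > "$before_sorted"
  ports "$2" > "$after_sorted"
  # comm -13 keeps lines unique to the second file; both inputs are sorted in the C locale.
  LC_ALL=C comm -13 "$before_sorted" "$after_sorted" | sort -n
  rm -f "$before_sorted" "$after_sorted"
}
```

Running `new_ports before.txt after.txt` on the control-plane node reduces the two captures to the added ports. Process IDs and file descriptors are ignored, so the cilium-agent restart does not show up as a difference.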

| (⎈|HomeLab:N/A) root@k8s-ctr:~# vi -d before.txt after.txt

|

✅ Output

6. Check port 4244 on each node

| (⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo ss -tnlp |grep 4244 ; echo; done

|

✅ Output

| >> node : k8s-w1 <<

LISTEN 0 4096 *:4244 *:* users:(("cilium-agent",pid=6068,fd=52))

>> node : k8s-w2 <<

LISTEN 0 4096 *:4244 *:* users:(("cilium-agent",pid=5995,fd=52))

|

- Port 4244 is open on each node (k8s-w1, k8s-w2)

- This means Hubble collects data through the Hubble server added to each node's cilium-agent

7. Check that Hubble Relay is working

(1) hubble-relay pod status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -n kube-system -l k8s-app=hubble-relay

|

✅ Output

| NAME READY STATUS RESTARTS AGE

hubble-relay-5dcd46f5c-s4wqq 1/1 Running 0 9m3s

|

- The hubble-relay pod is deployed and in the Running state

- It exposes port 4245/TCP, through which the collected flow data is served

(2) hubble-relay pod details

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe pod -n kube-system -l k8s-app=hubble-relay

|

✅ Output

| Name: hubble-relay-5dcd46f5c-s4wqq

Namespace: kube-system

Priority: 0

Service Account: hubble-relay

Node: k8s-w2/192.168.10.102

Start Time: Fri, 25 Jul 2025 21:03:38 +0900

Labels: app.kubernetes.io/name=hubble-relay

app.kubernetes.io/part-of=cilium

k8s-app=hubble-relay

pod-template-hash=5dcd46f5c

Annotations: <none>

Status: Running

IP: 172.20.2.58

IPs:

IP: 172.20.2.58

Controlled By: ReplicaSet/hubble-relay-5dcd46f5c

Containers:

hubble-relay:

Container ID: containerd://d385feef54ef21ba44f32d93715f5c95124d84cd878a410bbcd11be2cc9bf62d

Image: quay.io/cilium/hubble-relay:v1.17.6@sha256:7d17ec10b3d37341c18ca56165b2f29a715cb8ee81311fd07088d8bf68c01e60

Image ID: quay.io/cilium/hubble-relay@sha256:7d17ec10b3d37341c18ca56165b2f29a715cb8ee81311fd07088d8bf68c01e60

Port: 4245/TCP

Host Port: 0/TCP

Command:

hubble-relay

Args:

serve

--debug

State: Running

Started: Fri, 25 Jul 2025 21:04:03 +0900

Ready: True

Restart Count: 0

Liveness: grpc <pod>:4222 delay=10s timeout=10s period=10s #success=1 #failure=12

Readiness: grpc <pod>:4222 delay=0s timeout=3s period=10s #success=1 #failure=3

Startup: grpc <pod>:4222 delay=10s timeout=1s period=3s #success=1 #failure=20

Environment: <none>

Mounts:

/etc/hubble-relay from config (ro)

/var/lib/hubble-relay/tls from tls (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: hubble-relay-config

Optional: false

tls:

Type: Projected (a volume that contains injected data from multiple sources)

SecretName: hubble-relay-client-certs

Optional: false

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 9m40s default-scheduler Successfully assigned kube-system/hubble-relay-5dcd46f5c-s4wqq to k8s-w2

Warning FailedMount 9m39s kubelet MountVolume.SetUp failed for volume "config" : failed to sync configmap cache: timed out waiting for the condition

Normal Pulling 9m24s kubelet Pulling image "quay.io/cilium/hubble-relay:v1.17.6@sha256:7d17ec10b3d37341c18ca56165b2f29a715cb8ee81311fd07088d8bf68c01e60"

Normal Pulled 9m15s kubelet Successfully pulled image "quay.io/cilium/hubble-relay:v1.17.6@sha256:7d17ec10b3d37341c18ca56165b2f29a715cb8ee81311fd07088d8bf68c01e60" in 8.579s (8.579s including waiting). Image size: 29149253 bytes.

Normal Created 9m15s kubelet Created container: hubble-relay

Normal Started 9m15s kubelet Started container hubble-relay

|

(3) Service and endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc get svc,ep -n kube-system hubble-relay

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hubble-relay ClusterIP 10.96.50.64 <none> 80/TCP 11m

NAME ENDPOINTS AGE

endpoints/hubble-relay 172.20.2.58:4245 11m

|

- service/hubble-relay is backed by endpoints/hubble-relay

- Traffic to ClusterIP 10.96.50.64 on 80/TCP is forwarded internally to port 4245 on the pod
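The service-to-endpoint mapping can be read straight off the `kubectl get svc,ep` text. The following is a minimal sketch that assumes the column layout shown in the output above; `svc_to_endpoint` is a hypothetical helper name, not part of kubectl.

```shell
# Hypothetical helper: reads the text of `kubectl get svc,ep` on stdin and
# prints "<ClusterIP>:<service port> -> <endpoint list>".
# Header and warning lines are skipped because they match neither pattern.
svc_to_endpoint() {
  awk '
    $1 ~ /^service\//   { split($5, p, "[:/]"); vip = $3 ":" p[1] }
    $1 ~ /^endpoints\// { print vip " -> " $2 }
  '
}

# Usage against a live cluster:
#   kubectl get svc,ep -n kube-system hubble-relay | svc_to_endpoint
```

For the output above this prints `10.96.50.64:80 -> 172.20.2.58:4245`.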

(4) How Hubble Relay works with Hubble Peer

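- hubble-relay collects data from port 4244 on each node through the hubble-peer service (ClusterIP:443)

- The Flow data comes from the Hubble process inside each node's cilium-agent

- As nodes are added, the hubble-peer endpoints grow automatically, which widens the collection scope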

8. List the ConfigMaps

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get cm -n kube-system

|

✅ Output

| NAME DATA AGE

cilium-config 158 51m

cilium-envoy-config 1 51m

coredns 1 51m

extension-apiserver-authentication 6 51m

hubble-relay-config 1 13m

hubble-ui-nginx 1 13m

kube-apiserver-legacy-service-account-token-tracking 1 51m

kube-root-ca.crt 1 51m

kubeadm-config 1 51m

kubelet-config 1 51m

|

The Hubble-related ConfigMaps, such as hubble-relay-config and hubble-ui-nginx, have been created

9. Check the Hubble Relay configuration

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe cm -n kube-system hubble-relay-config

|

✅ Output

| Name: hubble-relay-config

Namespace: kube-system

Labels: app.kubernetes.io/managed-by=Helm

Annotations: meta.helm.sh/release-name: cilium

meta.helm.sh/release-namespace: kube-system

Data

====

config.yaml:

----

cluster-name: default

peer-service: "hubble-peer.kube-system.svc.cluster.local.:443"

listen-address: :4245

gops: true

gops-port: "9893"

retry-timeout:

sort-buffer-len-max:

sort-buffer-drain-timeout:

tls-hubble-client-cert-file: /var/lib/hubble-relay/tls/client.crt

tls-hubble-client-key-file: /var/lib/hubble-relay/tls/client.key

tls-hubble-server-ca-files: /var/lib/hubble-relay/tls/hubble-server-ca.crt

disable-server-tls: true

BinaryData

====

Events: <none>

|

- peer-service points to hubble-peer.kube-system.svc.cluster.local.:443

- listen-address: :4245 makes Hubble Relay listen on port 4245

- Other details, such as the TLS certificate paths under /var/lib/hubble-relay/tls/, are also visible here
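To pull a single setting out of the relay configuration rather than reading the whole `describe` output, something like the following could be used. It is a sketch that assumes the flat `key: value` layout shown above; `relay_setting` is a hypothetical helper name.

```shell
# Hypothetical helper: prints the value of one top-level key from the
# relay config.yaml text read on stdin, with double quotes stripped.
relay_setting() {
  awk -F': ' -v key="$1" '$1 == key { gsub(/"/, "", $2); print $2 }'
}

# Usage against a live cluster:
#   kubectl get cm -n kube-system hubble-relay-config \
#     -o jsonpath='{.data.config\.yaml}' | relay_setting listen-address
```

With the configuration above, `relay_setting listen-address` prints `:4245`.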

10. Check the Hubble Peer service and endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system hubble-peer

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hubble-peer ClusterIP 10.96.99.7 <none> 443/TCP 15m

NAME ENDPOINTS AGE

endpoints/hubble-peer 192.168.10.100:4244,192.168.10.101:4244,192.168.10.102:4244 15m

|

- The hubble-peer service is created with ClusterIP 10.96.99.7

- Its endpoints use port 4244 on each node: 192.168.10.100:4244, 192.168.10.101:4244, 192.168.10.102:4244

- Each cilium-agent runs on the host network and exposes port 4244 directly
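A quick way to confirm that every node is represented is to count the hubble-peer endpoints and compare the result with the node count. This sketch works on the ENDPOINTS column value as printed above; `count_endpoints_on_port` is a hypothetical helper name. Note that kubectl abbreviates long endpoint lists, so this text-based count is only reliable for small clusters like this three-node lab.

```shell
# Hypothetical helper: counts the comma-separated entries of an ENDPOINTS
# column value (as printed by `kubectl get ep`) that use the given port.
count_endpoints_on_port() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -c ":$2\$"
}

# Usage against a live cluster:
#   eps=$(kubectl get ep -n kube-system hubble-peer --no-headers | awk '{print $2}')
#   count_endpoints_on_port "$eps" 4244
#   kubectl get nodes --no-headers | wc -l   # should report the same number
```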

11. Check the Hubble UI pod configuration

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe pod -n kube-system -l k8s-app=hubble-ui

|

✅ Output

| Name: hubble-ui-76d4965bb6-p4blh

Namespace: kube-system

Priority: 0

Service Account: hubble-ui

Node: k8s-w1/192.168.10.101

Start Time: Fri, 25 Jul 2025 21:03:38 +0900

Labels: app.kubernetes.io/name=hubble-ui

app.kubernetes.io/part-of=cilium

k8s-app=hubble-ui

pod-template-hash=76d4965bb6

Annotations: <none>

Status: Running

IP: 172.20.1.79

IPs:

IP: 172.20.1.79

Controlled By: ReplicaSet/hubble-ui-76d4965bb6

Containers:

frontend:

Container ID: containerd://e4f17c9e17383bd95bb54c77040bad5c4e0bbe619c99306f72edf3c29433bbd9

Image: quay.io/cilium/hubble-ui:v0.13.2@sha256:9e37c1296b802830834cc87342a9182ccbb71ffebb711971e849221bd9d59392

Image ID: quay.io/cilium/hubble-ui@sha256:9e37c1296b802830834cc87342a9182ccbb71ffebb711971e849221bd9d59392

Port: 8081/TCP

Host Port: 0/TCP

State: Running

Started: Fri, 25 Jul 2025 21:04:02 +0900

Ready: True

Restart Count: 0

Liveness: http-get http://:8081/healthz delay=0s timeout=1s period=10s #success=1 #failure=3

Readiness: http-get http://:8081/ delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/nginx/conf.d/default.conf from hubble-ui-nginx-conf (rw,path="nginx.conf")

/tmp from tmp-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-62g8t (ro)

backend:

Container ID: containerd://339fc85862a2589dfaced99bcc55e1abe29d23d59dd8e35e64566fca9f5cf65c

Image: quay.io/cilium/hubble-ui-backend:v0.13.2@sha256:a034b7e98e6ea796ed26df8f4e71f83fc16465a19d166eff67a03b822c0bfa15

Image ID: quay.io/cilium/hubble-ui-backend@sha256:a034b7e98e6ea796ed26df8f4e71f83fc16465a19d166eff67a03b822c0bfa15

Port: 8090/TCP

Host Port: 0/TCP

State: Running

Started: Fri, 25 Jul 2025 21:04:10 +0900

Ready: True

Restart Count: 0

Environment:

EVENTS_SERVER_PORT: 8090

FLOWS_API_ADDR: hubble-relay:80

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-62g8t (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

hubble-ui-nginx-conf:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: hubble-ui-nginx

Optional: false

tmp-dir:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-62g8t:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 20m default-scheduler Successfully assigned kube-system/hubble-ui-76d4965bb6-p4blh to k8s-w1

Normal Pulling 19m kubelet Pulling image "quay.io/cilium/hubble-ui:v0.13.2@sha256:9e37c1296b802830834cc87342a9182ccbb71ffebb711971e849221bd9d59392"

Normal Pulled 19m kubelet Successfully pulled image "quay.io/cilium/hubble-ui:v0.13.2@sha256:9e37c1296b802830834cc87342a9182ccbb71ffebb711971e849221bd9d59392" in 7.822s (7.822s including waiting). Image size: 11129482 bytes.

Normal Created 19m kubelet Created container: frontend

Normal Started 19m kubelet Started container frontend

Normal Pulling 19m kubelet Pulling image "quay.io/cilium/hubble-ui-backend:v0.13.2@sha256:a034b7e98e6ea796ed26df8f4e71f83fc16465a19d166eff67a03b822c0bfa15"

Normal Pulled 19m kubelet Successfully pulled image "quay.io/cilium/hubble-ui-backend:v0.13.2@sha256:a034b7e98e6ea796ed26df8f4e71f83fc16465a19d166eff67a03b822c0bfa15" in 7.109s (7.109s including waiting). Image size: 20317203 bytes.

Normal Created 19m kubelet Created container: backend

Normal Started 19m kubelet Started container backend

|

- The hubble-ui pod has two containers: frontend (8081/TCP) and backend (8090/TCP)

- frontend (Nginx) proxies to backend, which serves the Flow data received from Hubble Relay to the UI

- The backend container connects to Hubble Relay through the FLOWS_API_ADDR=hubble-relay:80 setting

12. Check the Hubble UI Nginx configuration

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe cm -n kube-system hubble-ui-nginx

|

✅ Output

| Name: hubble-ui-nginx

Namespace: kube-system

Labels: app.kubernetes.io/managed-by=Helm

Annotations: meta.helm.sh/release-name: cilium

meta.helm.sh/release-namespace: kube-system

Data

====

nginx.conf:

----

server {

listen 8081;

listen [::]:8081;

server_name localhost;

root /app;

index index.html;

client_max_body_size 1G;

location / {

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

location /api {

proxy_http_version 1.1;

proxy_pass_request_headers on;

proxy_pass http://127.0.0.1:8090;

}

location / {

# double `/index.html` is required here

try_files $uri $uri/ /index.html /index.html;

}

# Liveness probe

location /healthz {

access_log off;

add_header Content-Type text/plain;

return 200 'ok';

}

}

}

BinaryData

====

Events: <none>

|

- Nginx listens on port 8081

- /api requests are proxied to the backend (127.0.0.1:8090); everything else falls back to /index.html

- The /healthz endpoint supports health checks
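The routing in this nginx.conf can be summarized as a small decision function. This is a simplified model of the three `location` prefixes shown above, not a reimplementation of Nginx matching; `route_for` is a hypothetical name.

```shell
# Simplified model of the three location blocks in the ConfigMap above.
# Hypothetical helper: prints where a request path would be handled.
route_for() {
  case "$1" in
    /api*)     echo "backend 127.0.0.1:8090" ;;        # location /api
    /healthz*) echo "200 ok" ;;                        # location /healthz
    *)         echo "static file or /index.html" ;;    # location / (try_files)
  esac
}
```

For example, `route_for /healthz` prints `200 ok`, which is the response the frontend liveness probe relies on.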

13. Check the Hubble UI service and endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system hubble-ui

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hubble-ui NodePort 10.96.93.209 <none> 80:31234/TCP 22m

NAME ENDPOINTS AGE

endpoints/hubble-ui 172.20.1.79:8081 22m

|

- The hubble-ui service is created as a NodePort on 31234

- ClusterIP 10.96.93.209:80 maps to pod 172.20.1.79:8081

- From outside the cluster, the Hubble UI is reachable at <control-plane IP>:31234

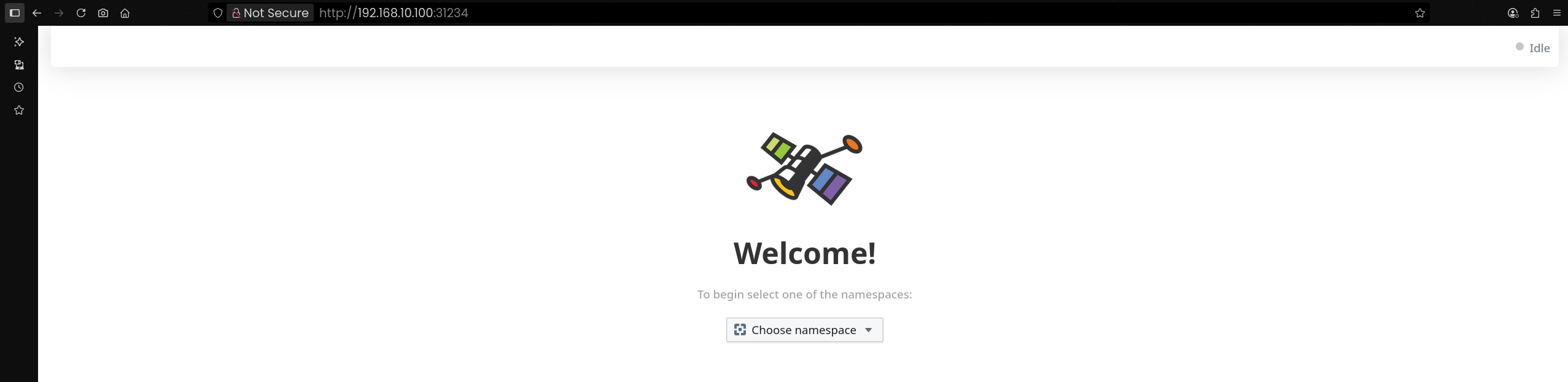

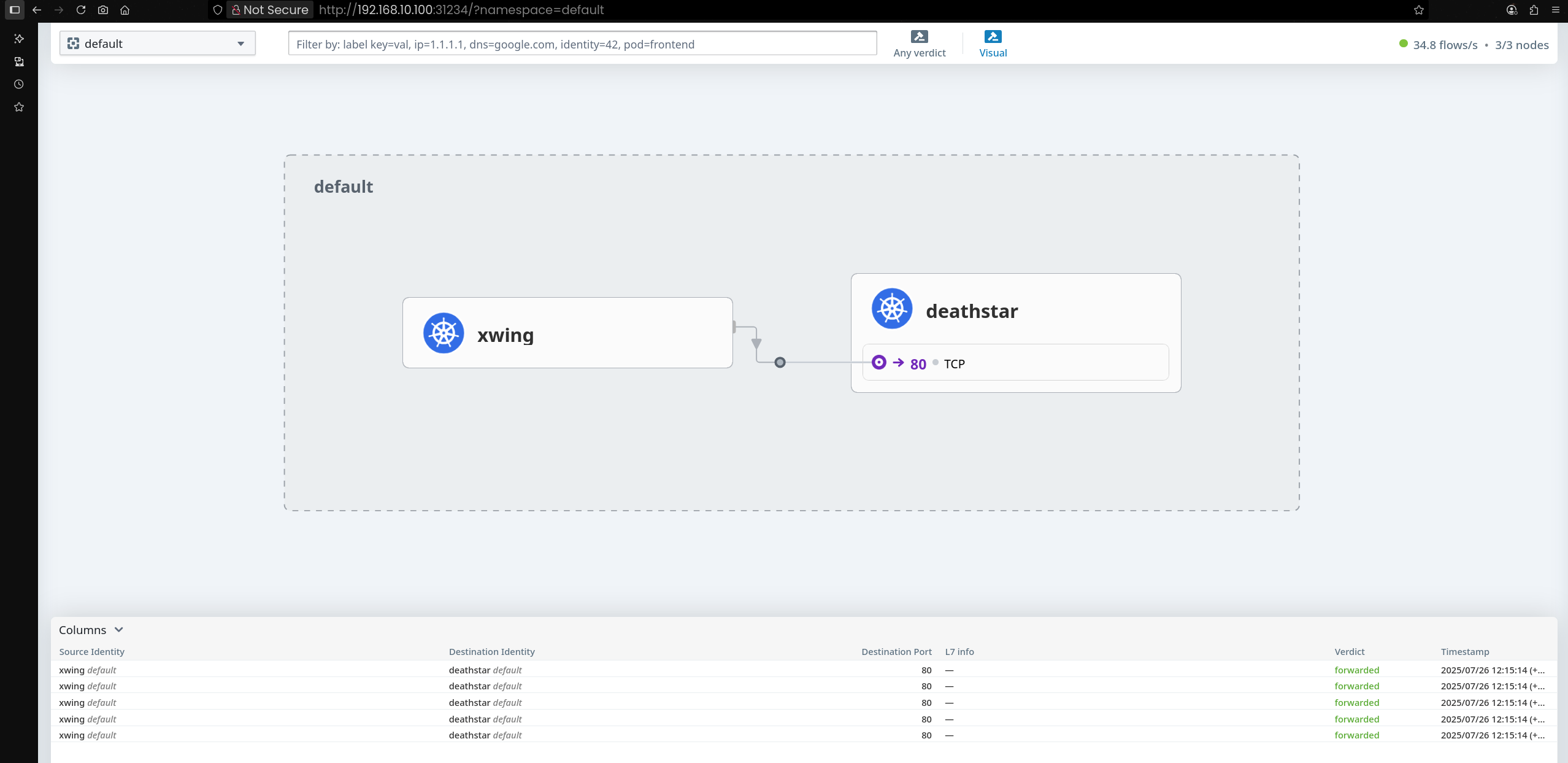

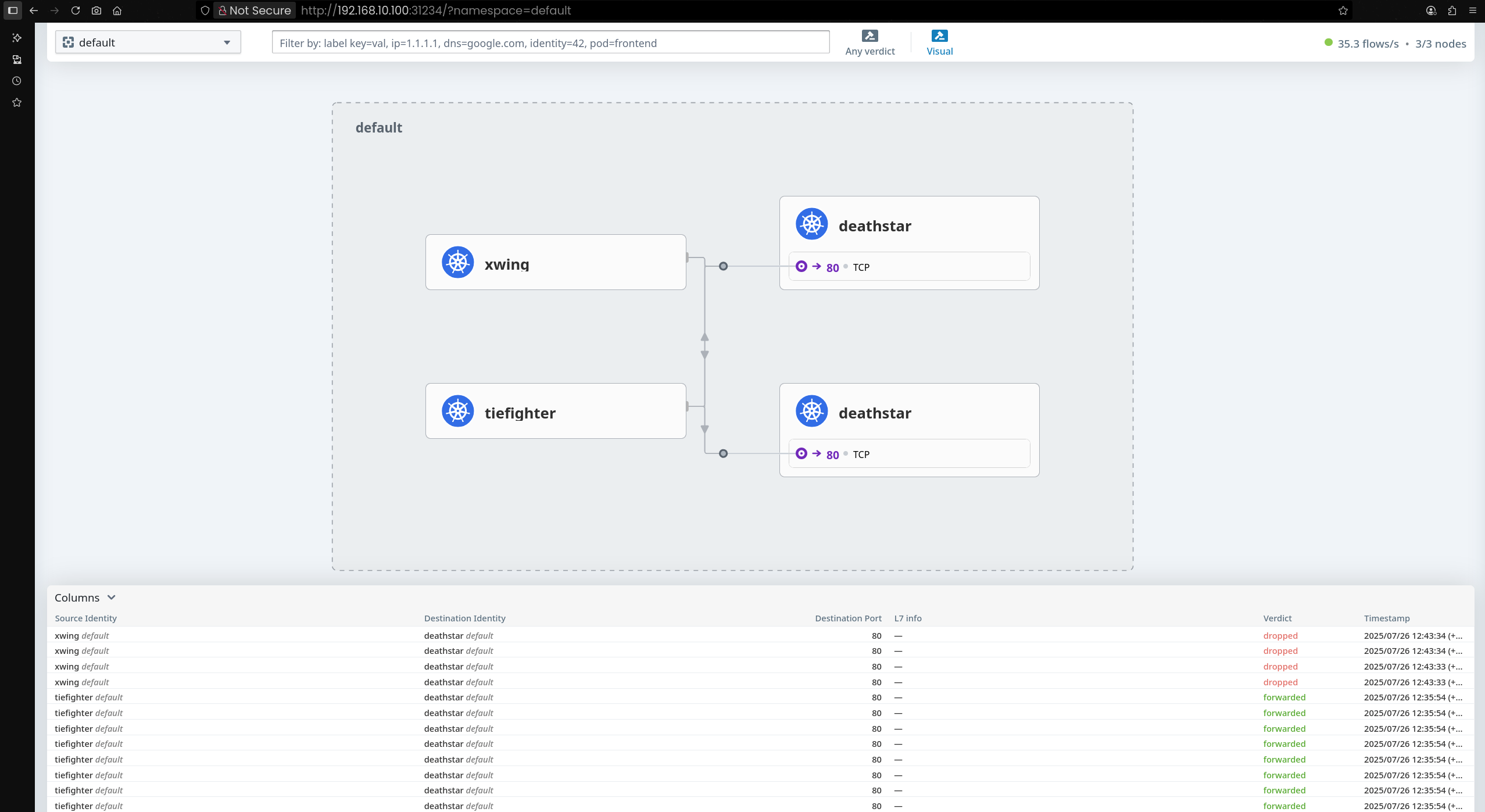

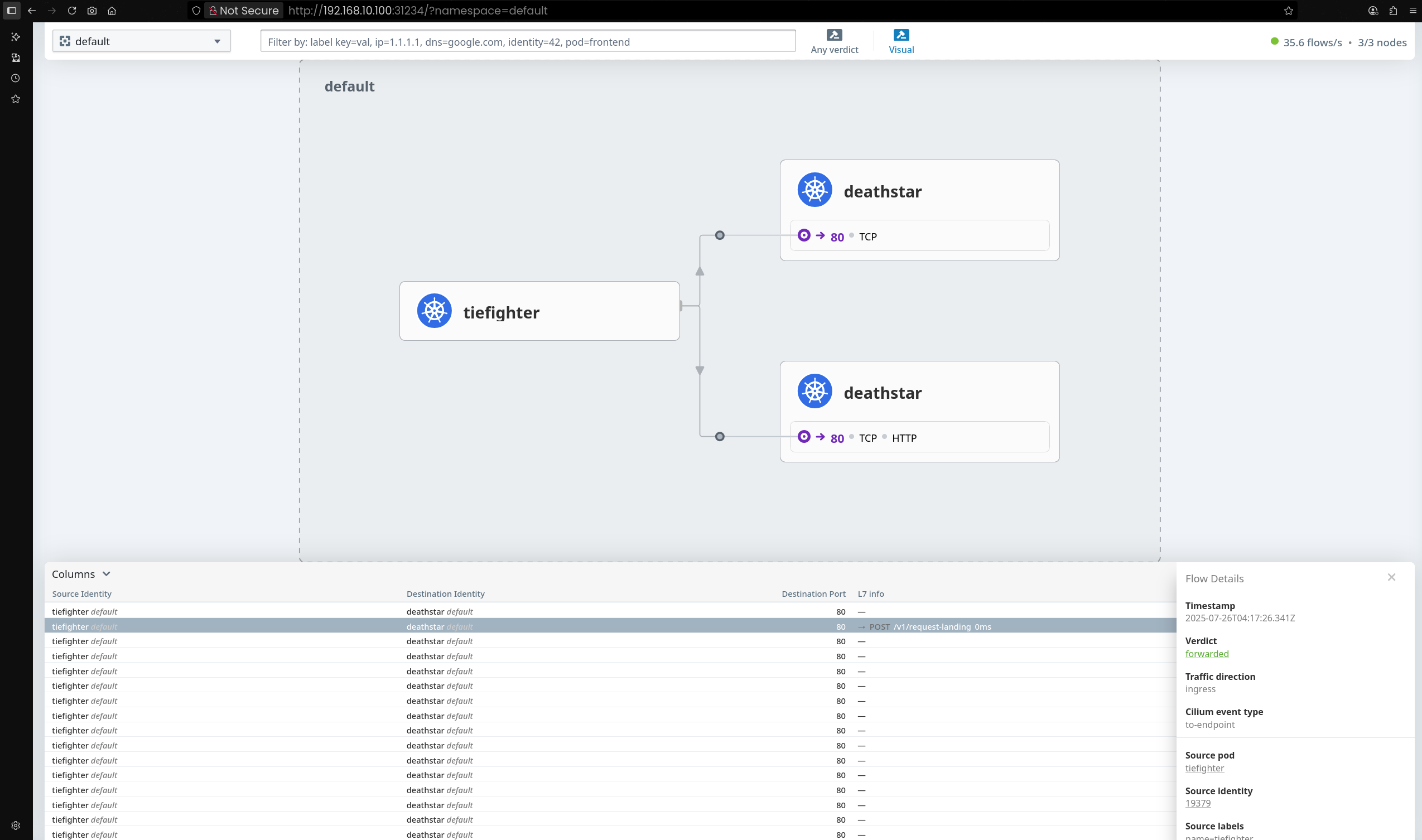

14. Open the Hubble UI in a browser

| (⎈|HomeLab:N/A) root@k8s-ctr:~# NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

echo -e "http://$NODEIP:31234"

|

✅ Output

| http://192.168.10.100:31234

|

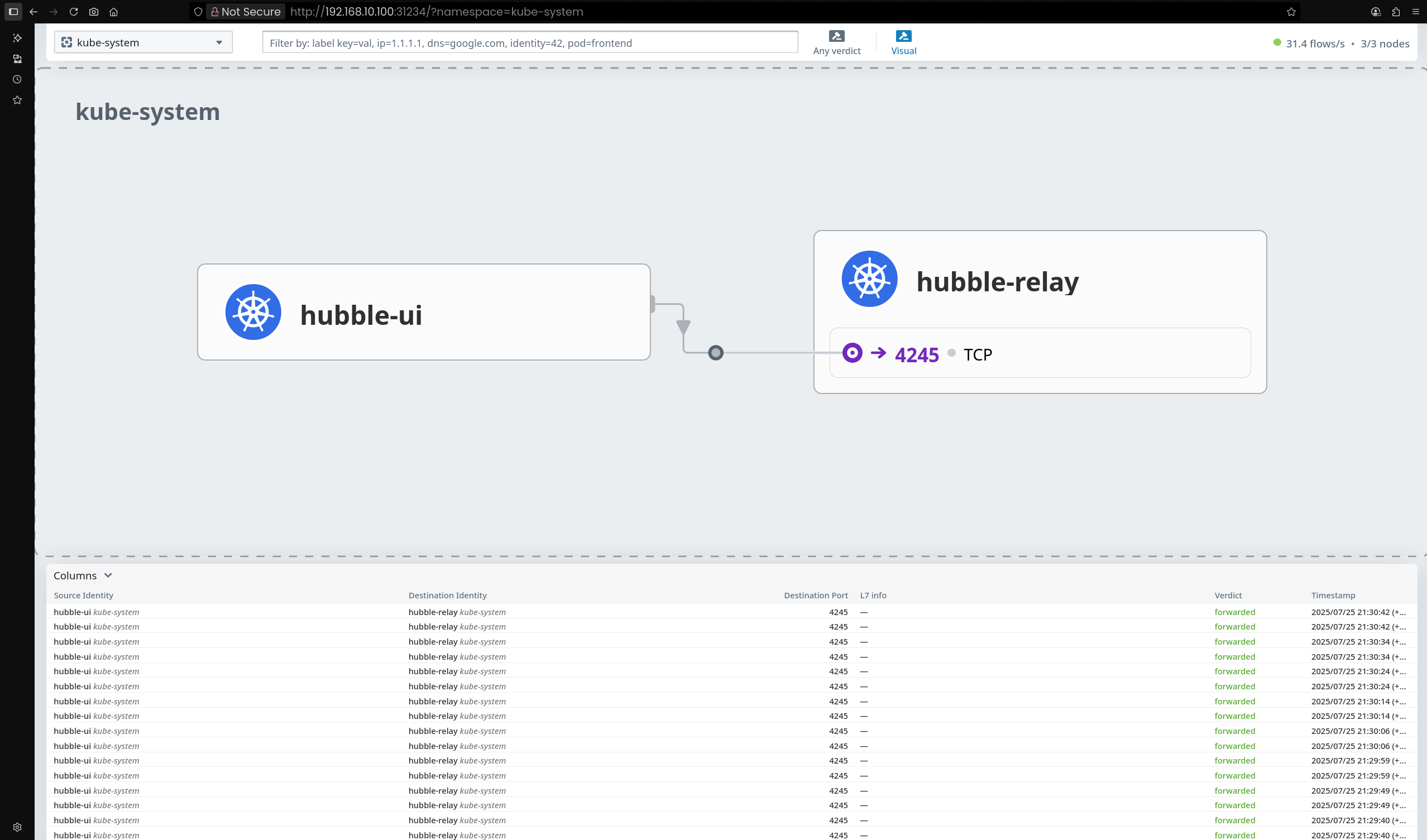

Switching to the kube-system namespace shows the Flow details collected through Hubble Relay

Clicking a Flow shows its communication details (source, destination, protocol, and so on)

💻 Install the Hubble Client

| (⎈|HomeLab:N/A) root@k8s-ctr:~# HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)

HUBBLE_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz{,.sha256sum}

sudo tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin

which hubble

|

✅ Output

| % Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 24.6M 100 24.6M 0 0 9606k 0 0:00:02 0:00:02 --:--:-- 12.6M

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 92 100 92 0 0 187 0 --:--:-- --:--:-- --:--:-- 187

hubble

/usr/local/bin/hubble

|

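The Hubble client needs the address of a Hubble Relay to connect to. Nothing is listening on the default address (127.0.0.1:4245) yet, so hubble status fails: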

| (⎈|HomeLab:N/A) root@k8s-ctr:~# hubble status

failed getting status: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:4245: connect: connection refused"

|

🔑 Verify Hubble API access

1. Start port forwarding for the Hubble API

| (⎈|HomeLab:N/A) root@k8s-ctr:~# cilium hubble port-forward&

[1] 8917

(⎈|HomeLab:N/A) root@k8s-ctr:~# ℹ️ Hubble Relay is available at 127.0.0.1:4245

|

- Starts port forwarding to Hubble Relay (port 4245) in the background

2. Check the forwarded Hubble API port

| (⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep 4245

|

✅ Output

| LISTEN 0 4096 127.0.0.1:4245 0.0.0.0:* users:(("cilium",pid=8917,fd=7))

LISTEN 0 4096 [::1]:4245 [::]:* users:(("cilium",pid=8917,fd=8))

|

Both 127.0.0.1:4245 and [::1]:4245 are now held open by the cilium process

3. Check the Hubble status

| (⎈|HomeLab:N/A) root@k8s-ctr:~# hubble status

|

✅ Output

| Healthcheck (via localhost:4245): Ok

Current/Max Flows: 12,285/12,285 (100.00%)

Flows/s: 35.77

Connected Nodes: 3/3

|

4. Check the Hubble CLI configuration

| (⎈|HomeLab:N/A) root@k8s-ctr:~# hubble config view

|

✅ Output

| basic-auth-password: ""

basic-auth-username: ""

config: /root/.config/hubble/config.yaml

debug: false

kube-context: ""

kube-namespace: kube-system

kubeconfig: ""

port-forward: false

port-forward-port: "4245"

request-timeout: 12s

server: localhost:4245

timeout: 5s

tls: false

tls-allow-insecure: false

tls-ca-cert-files: []

tls-client-cert-file: ""

tls-client-key-file: ""

tls-server-name: ""

|

5. Check the Hubble CLI server option

| (⎈|HomeLab:N/A) root@k8s-ctr:~# hubble help status | grep 'server string'

|

✅ Output

| --server string Address of a Hubble server. Ignored when --input-file or --port-forward is provided. (default "localhost:4245")

|

The --server string option sets the Hubble server address

6. Query the CiliumEndpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -n kube-system

|

✅ Output

| NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

coredns-674b8bbfcf-8lcmh 3235 ready 172.20.0.21

coredns-674b8bbfcf-gjs6z 3235 ready 172.20.0.11

hubble-relay-5dcd46f5c-s4wqq 40607 ready 172.20.2.58

hubble-ui-76d4965bb6-p4blh 14998 ready 172.20.1.79

|

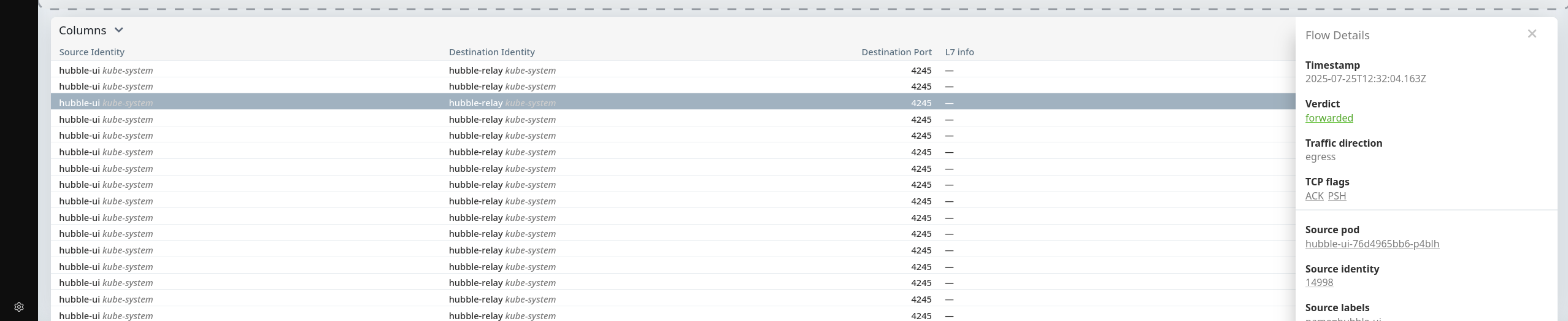

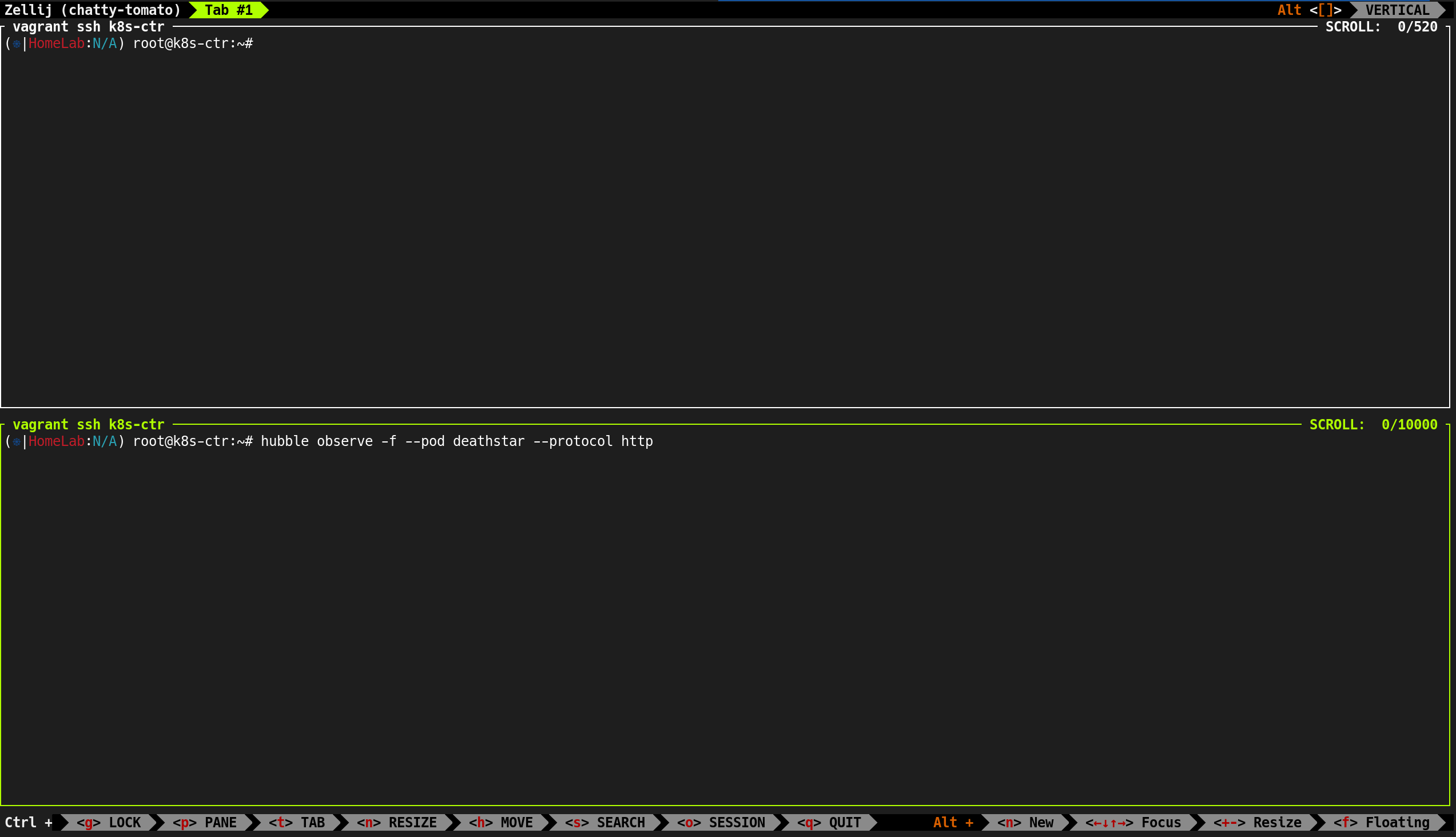

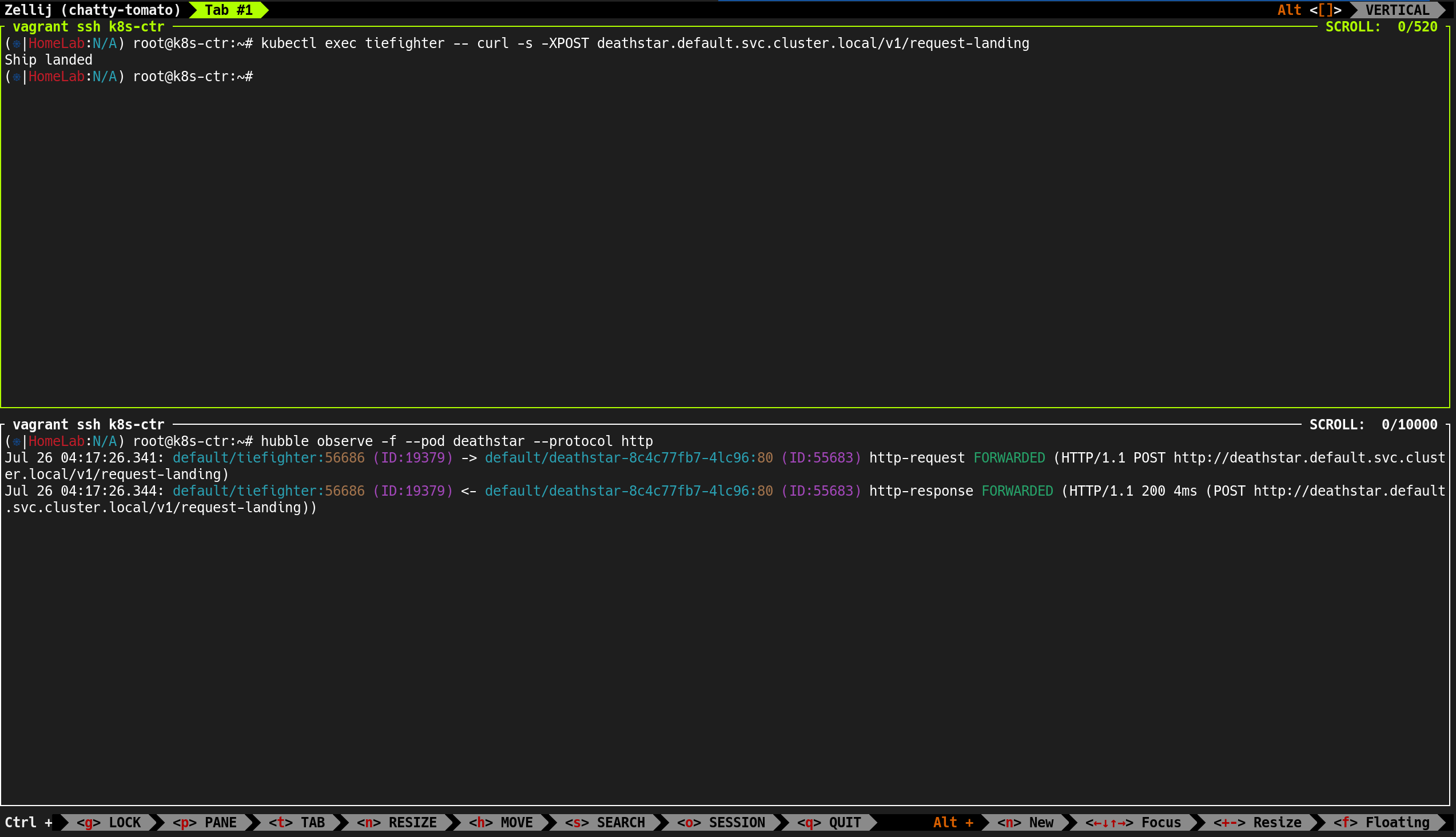

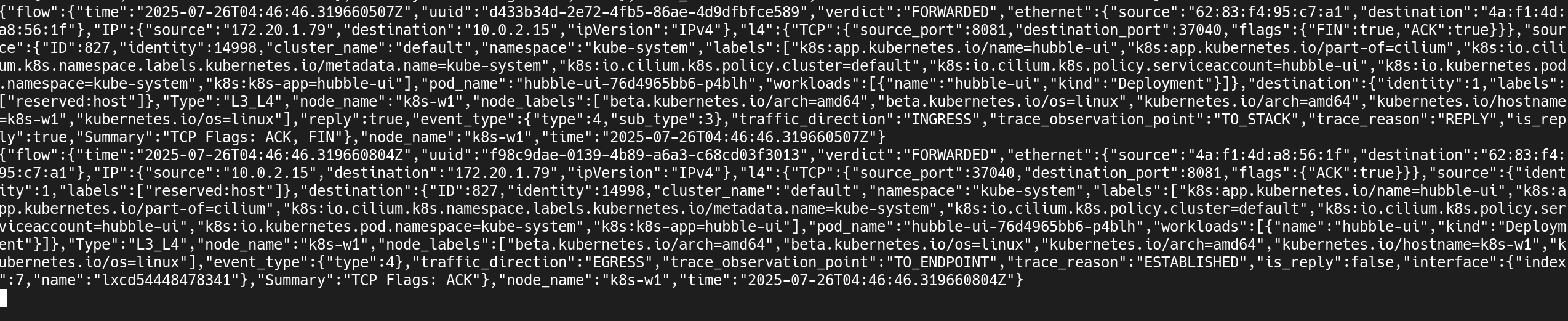

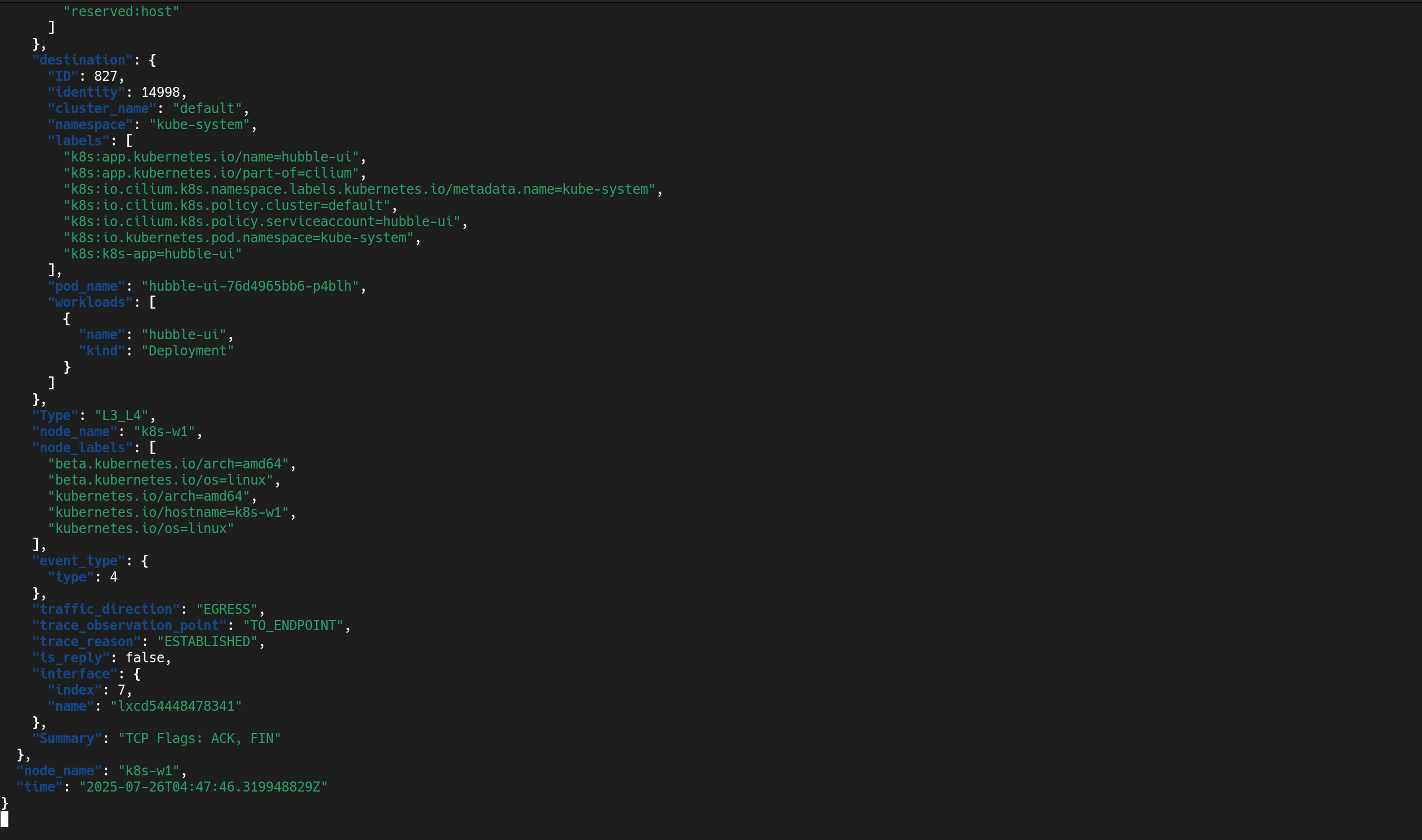

7. Watch live traffic with Hubble Observe

TCP/UDP flows to and from hubble-relay, hubble-ui, coredns, and others are recorded in real time

| (⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe

|

✅ Output

| Jul 26 01:38:52.399: kube-system/hubble-relay-5dcd46f5c-s4wqq:60070 (ID:40607) -> 192.168.10.102:4244 (host) to-stack FORWARDED (TCP Flags: ACK)

Jul 26 01:38:52.613: kube-system/hubble-relay-5dcd46f5c-s4wqq:53066 (ID:40607) <- 192.168.10.100:4244 (kube-apiserver) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) -> kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) <- kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-stack FORWARDED (TCP Flags: SYN, ACK)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) -> kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) <> kube-system/hubble-relay-5dcd46f5c-s4wqq (ID:40607) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) <> kube-system/hubble-relay-5dcd46f5c-s4wqq (ID:40607) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) -> kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) <> kube-system/hubble-relay-5dcd46f5c-s4wqq (ID:40607) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:53.990: 10.0.2.15:41170 (host) <- kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-stack FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:53.991: 10.0.2.15:41170 (host) <> kube-system/hubble-relay-5dcd46f5c-s4wqq (ID:40607) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:53.991: 10.0.2.15:41170 (host) <> kube-system/hubble-relay-5dcd46f5c-s4wqq (ID:40607) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:53.991: 10.0.2.15:41170 (host) -> kube-system/hubble-relay-5dcd46f5c-s4wqq:4222 (ID:40607) to-endpoint FORWARDED (TCP Flags: ACK, RST)

Jul 26 01:38:54.690: 10.0.2.15:44558 (host) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:54.690: 10.0.2.15:44558 (host) -> kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:54.690: 10.0.2.15:44558 (host) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:54.690: 10.0.2.15:44558 (host) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:54.691: 10.0.2.15:44558 (host) <- kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-stack FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:54.691: 10.0.2.15:44558 (host) <- kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-stack FORWARDED (TCP Flags: ACK, FIN)

Jul 26 01:38:54.691: 10.0.2.15:44558 (host) -> kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 26 01:38:54.836: 127.0.0.1:32922 (world) <> 192.168.10.102 (host) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:54.943: 127.0.0.1:54908 (world) <> 192.168.10.101 (host) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.466: 192.168.10.100:54270 (kube-apiserver) -> kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 26 01:38:55.467: 127.0.0.1:8090 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.467: 127.0.0.1:8090 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.467: 127.0.0.1:39880 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.467: 127.0.0.1:39880 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.467: 127.0.0.1:39880 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.468: 127.0.0.1:8090 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.468: 127.0.0.1:8090 (world) <> kube-system/hubble-ui-76d4965bb6-p4blh (ID:14998) pre-xlate-rev TRACED (TCP)

Jul 26 01:38:55.468: 192.168.10.100:54270 (kube-apiserver) <- kube-system/hubble-ui-76d4965bb6-p4blh:8081 (ID:14998) to-network FORWARDED (TCP Flags: ACK, PSH)

...

|
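With this many lines scrolling past, a per-verdict tally is often easier to read than the raw stream. The sketch below assumes the compact text format shown above and counts only the verdict words FORWARDED, DROPPED, and TRACED; `verdict_counts` is a hypothetical helper name.

```shell
# Hypothetical helper: reads compact `hubble observe` text on stdin and
# prints one "<VERDICT> <count>" line per verdict seen.
verdict_counts() {
  awk '
    { for (i = 1; i <= NF; i++) if ($i ~ /^(FORWARDED|DROPPED|TRACED)$/) c[$i]++ }
    END { for (v in c) print v, c[v] }
  ' | LC_ALL=C sort
}

# Usage against a live relay (requires the port-forward from step 1):
#   hubble observe --last 100 | verdict_counts
```

To narrow the stream at the source instead, `hubble observe --verdict DROPPED` limits the output to dropped flows.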

8. Define Cilium Agent shortcuts

| (⎈|HomeLab:N/A) root@k8s-ctr:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD1=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD2=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w2 -o jsonpath='{.items[0].metadata.name}')

echo $CILIUMPOD0 $CILIUMPOD1 $CILIUMPOD2

alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"

alias c1="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- cilium"

alias c2="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- cilium"

alias c0bpf="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- bpftool"

alias c1bpf="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- bpftool"

alias c2bpf="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- bpftool"

|

✅ Output

| cilium-kk8gc cilium-x4kvl cilium-w4fhn

|

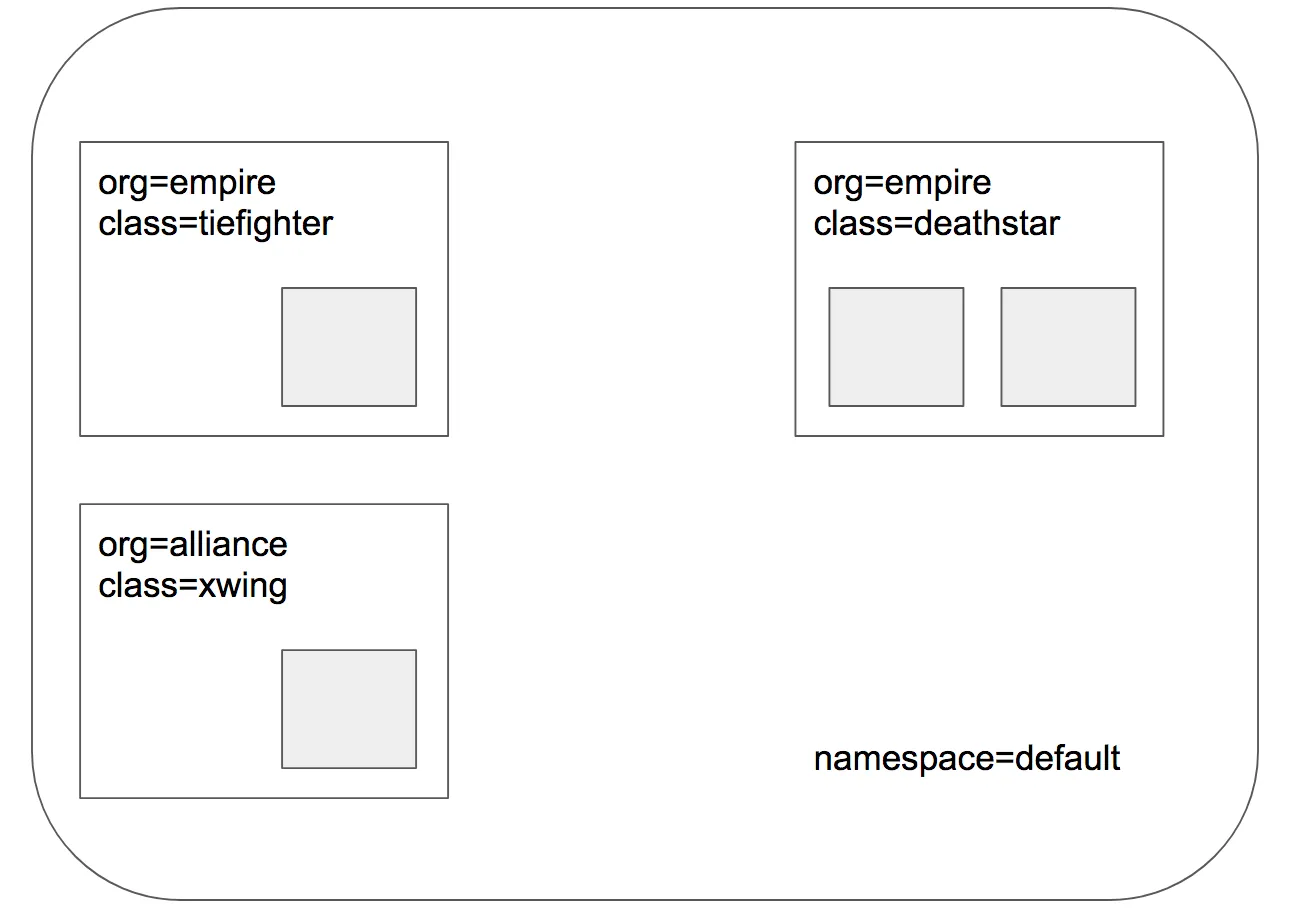

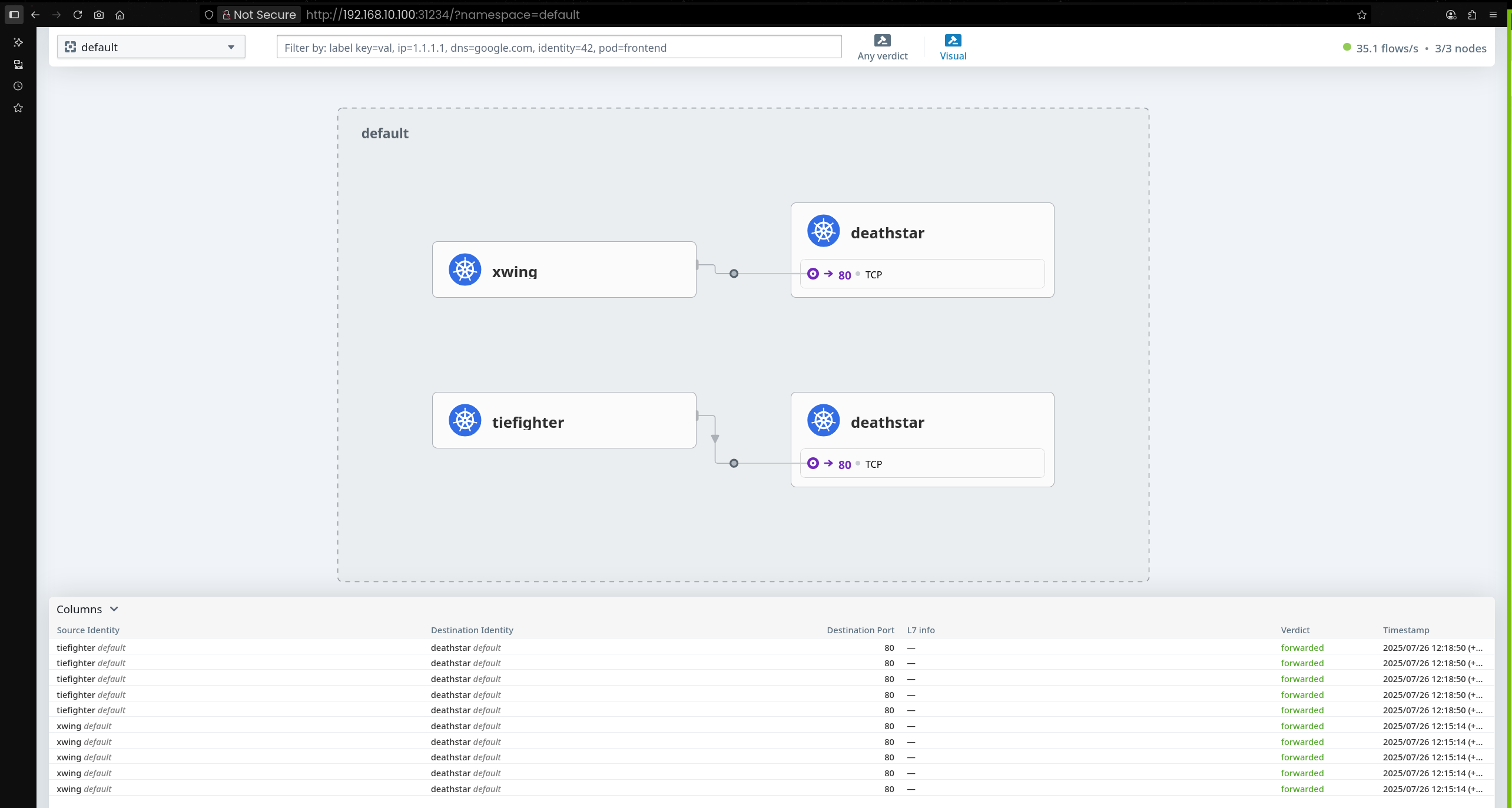

🎮 Getting started with the Star Wars demo & Hubble/UI

Deploy the Demo Application

1. Deploy the Star Wars demo application

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/minikube/http-sw-app.yaml

# Result

service/deathstar created

deployment.apps/deathstar created

pod/tiefighter created

pod/xwing created

|

2. Check the pod labels

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod --show-labels

|

✅ Output

| NAME READY STATUS RESTARTS AGE LABELS

deathstar-8c4c77fb7-4lc96 1/1 Running 0 29s app.kubernetes.io/name=deathstar,class=deathstar,org=empire,pod-template-hash=8c4c77fb7

deathstar-8c4c77fb7-dwnlx 1/1 Running 0 29s app.kubernetes.io/name=deathstar,class=deathstar,org=empire,pod-template-hash=8c4c77fb7

tiefighter 1/1 Running 0 29s app.kubernetes.io/name=tiefighter,class=tiefighter,org=empire

xwing 1/1 Running 0 29s app.kubernetes.io/name=xwing,class=xwing,org=alliance

|

3. Check the deployment, service, and endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep deathstar

|

✅ Output

| Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deathstar 2/2 2 2 96s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/deathstar ClusterIP 10.96.192.29 <none> 80/TCP 96s

NAME ENDPOINTS AGE

endpoints/deathstar 172.20.1.188:80,172.20.2.9:80 96s

|

4. Query all CiliumEndpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints.cilium.io -A

|

✅ Output

|

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

default deathstar-8c4c77fb7-4lc96 55683 ready 172.20.2.9

default deathstar-8c4c77fb7-dwnlx 37697 ready 172.20.1.188

default tiefighter 19379 ready 172.20.2.116

default xwing 37711 ready 172.20.1.61

kube-system coredns-674b8bbfcf-8lcmh 3235 ready 172.20.0.21

kube-system coredns-674b8bbfcf-gjs6z 3235 ready 172.20.0.11

kube-system hubble-relay-5dcd46f5c-s4wqq 40607 ready 172.20.2.58

kube-system hubble-ui-76d4965bb6-p4blh 14998 ready 172.20.1.79

|
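Grouping the rows by the SECURITY IDENTITY column makes it easy to see which pods share an identity. In the output above, for instance, the two coredns pods both carry identity 3235. This is a sketch over the `-A` (all namespaces) text layout shown above; `pods_by_identity` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `kubectl get ciliumendpoints.cilium.io -A` text
# on stdin and prints "<identity>: <pod> <pod> ..." sorted by identity.
pods_by_identity() {
  awk '
    $3 ~ /^[0-9]+$/ { pods[$3] = pods[$3] " " $2 }   # header row is skipped
    END { for (id in pods) print id ":" pods[id] }
  ' | sort -n
}

# Usage against a live cluster:
#   kubectl get ciliumendpoints.cilium.io -A | pods_by_identity
```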

5. Query the CiliumIdentities

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumidentities.cilium.io

|

✅ Output

| NAME NAMESPACE AGE

14998 kube-system 13h

19379 default 2m47s

3235 kube-system 14h

37697 default 2m47s

37711 default 2m47s

40607 kube-system 13h

55683 default 2m47s

|

6. List endpoints from a Cilium Agent

| (⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium endpoint list

|

✅ Output

|

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

184 Disabled Disabled 37697 k8s:app.kubernetes.io/name=deathstar 172.20.1.188 ready

k8s:class=deathstar

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

827 Disabled Disabled 14998 k8s:app.kubernetes.io/name=hubble-ui 172.20.1.79 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

2272 Disabled Disabled 37711 k8s:app.kubernetes.io/name=xwing 172.20.1.61 ready

k8s:class=xwing

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=alliance

2439 Disabled Disabled 1 reserved:host ready

|

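- Lists the endpoints on a single node: ds/cilium resolves to one agent pod, and this output matches the k8s-w1 listing (c1) in the next step

- Shows the deathstar, hubble-ui, xwing, and host endpoints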

7. List the Cilium endpoints on each node

| (⎈|HomeLab:N/A) root@k8s-ctr:~# c0 endpoint list

|

✅ Output

|

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

1083 Disabled Disabled 3235 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.21 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

1119 Disabled Disabled 1 k8s:node-role.kubernetes.io/control-plane ready

k8s:node.kubernetes.io/exclude-from-external-load-balancers

reserved:host

1122 Disabled Disabled 3235 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.11 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

|

- Shows the kube-system endpoints (the two coredns pods) along with the host endpoint

| (⎈|HomeLab:N/A) root@k8s-ctr:~# c1 endpoint list

|

✅ Output

| ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

184 Disabled Disabled 37697 k8s:app.kubernetes.io/name=deathstar 172.20.1.188 ready

k8s:class=deathstar

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

827 Disabled Disabled 14998 k8s:app.kubernetes.io/name=hubble-ui 172.20.1.79 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

2272 Disabled Disabled 37711 k8s:app.kubernetes.io/name=xwing 172.20.1.61 ready

k8s:class=xwing

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=alliance

2439 Disabled Disabled 1 reserved:host ready

|

Shows the deathstar, hubble-ui, and xwing endpoints

| (⎈|HomeLab:N/A) root@k8s-ctr:~# c2 endpoint list

|

✅ Output

|

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

61 Disabled Disabled 40607 k8s:app.kubernetes.io/name=hubble-relay 172.20.2.58 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

115 Disabled Disabled 55683 k8s:app.kubernetes.io/name=deathstar 172.20.2.9 ready

k8s:class=deathstar

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

451 Disabled Disabled 1 reserved:host ready

2643 Disabled Disabled 19379 k8s:app.kubernetes.io/name=tiefighter 172.20.2.116 ready

k8s:class=tiefighter

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

|

- Shows the hubble-relay, deathstar, and tiefighter endpoints

- The labels each endpoint carries matter a great deal for policy decisions

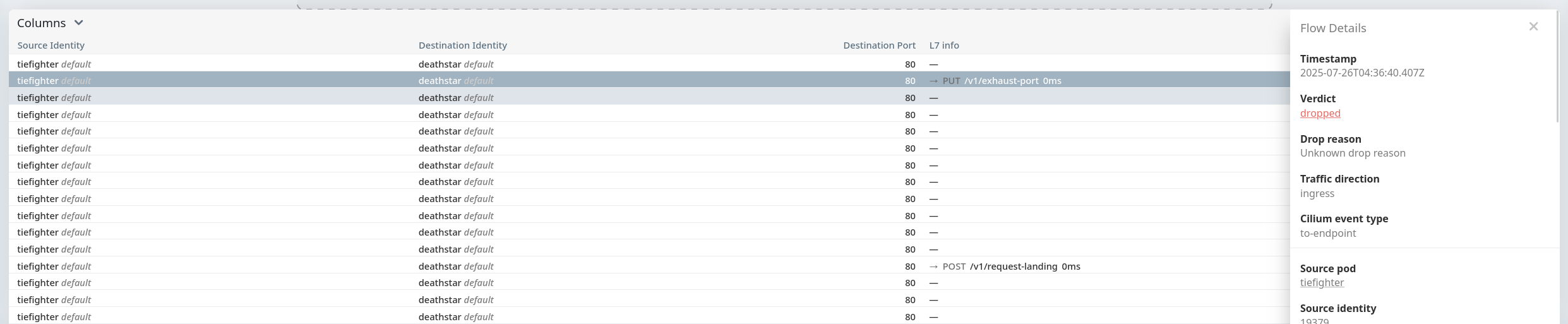

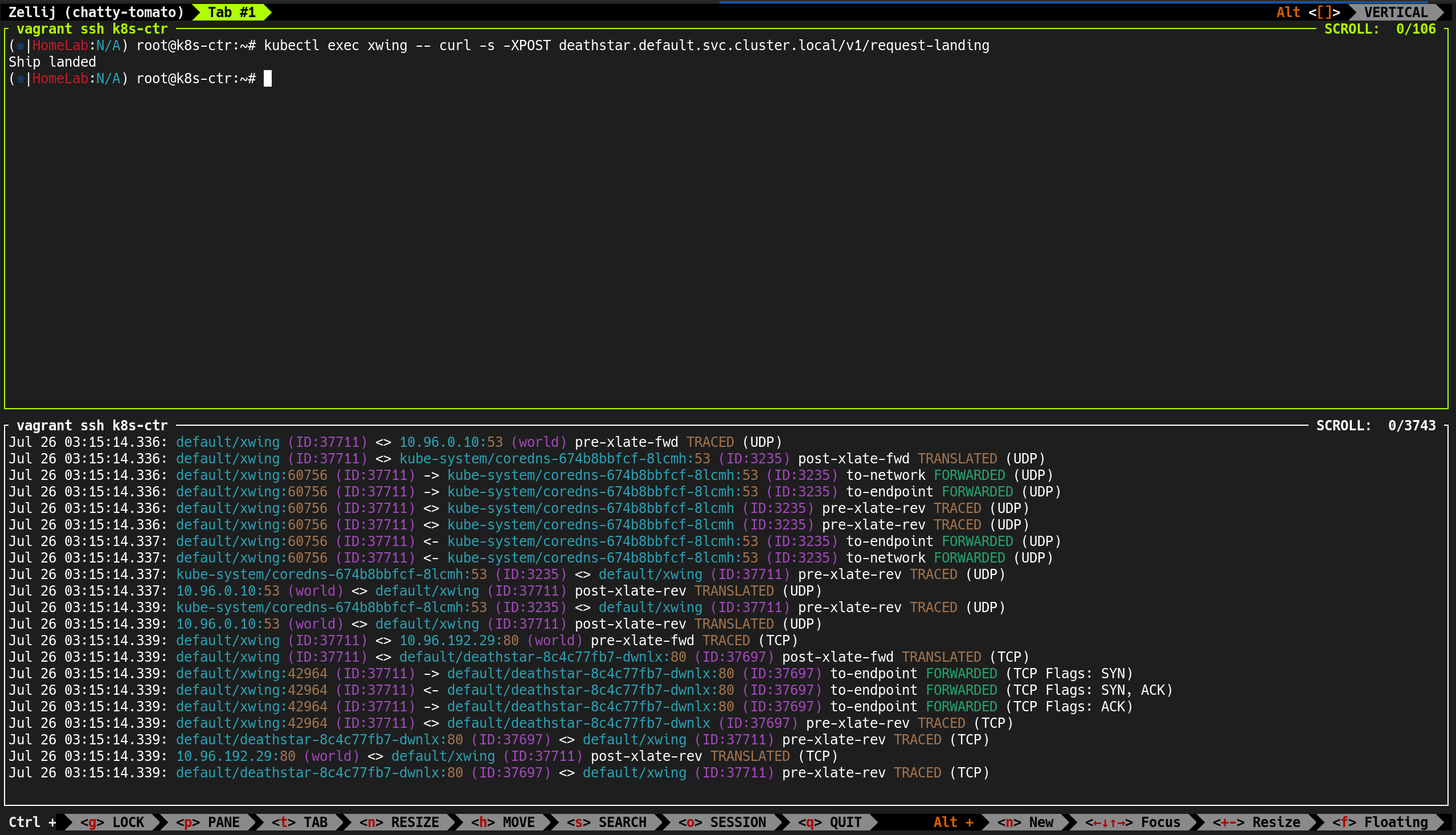

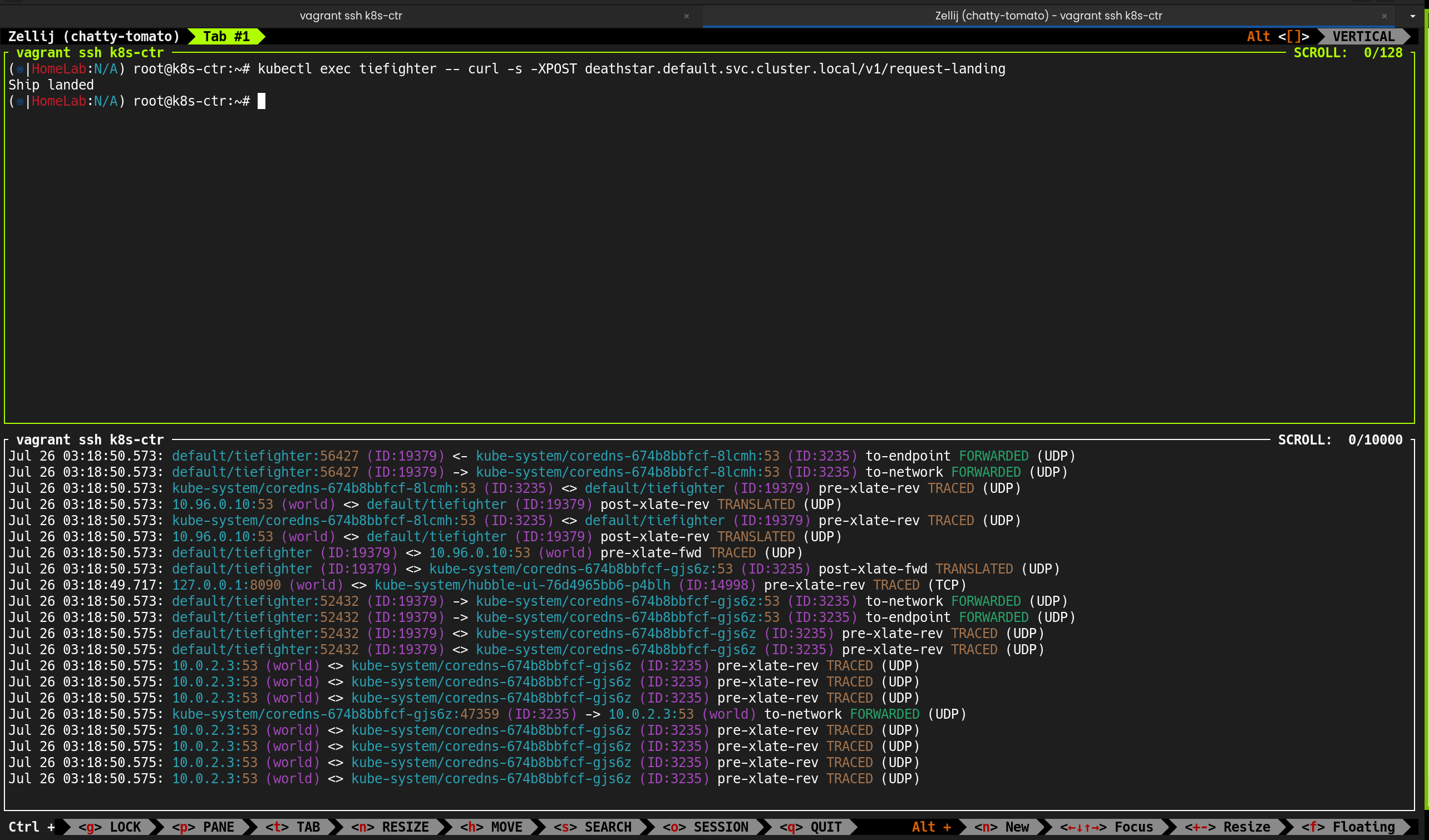

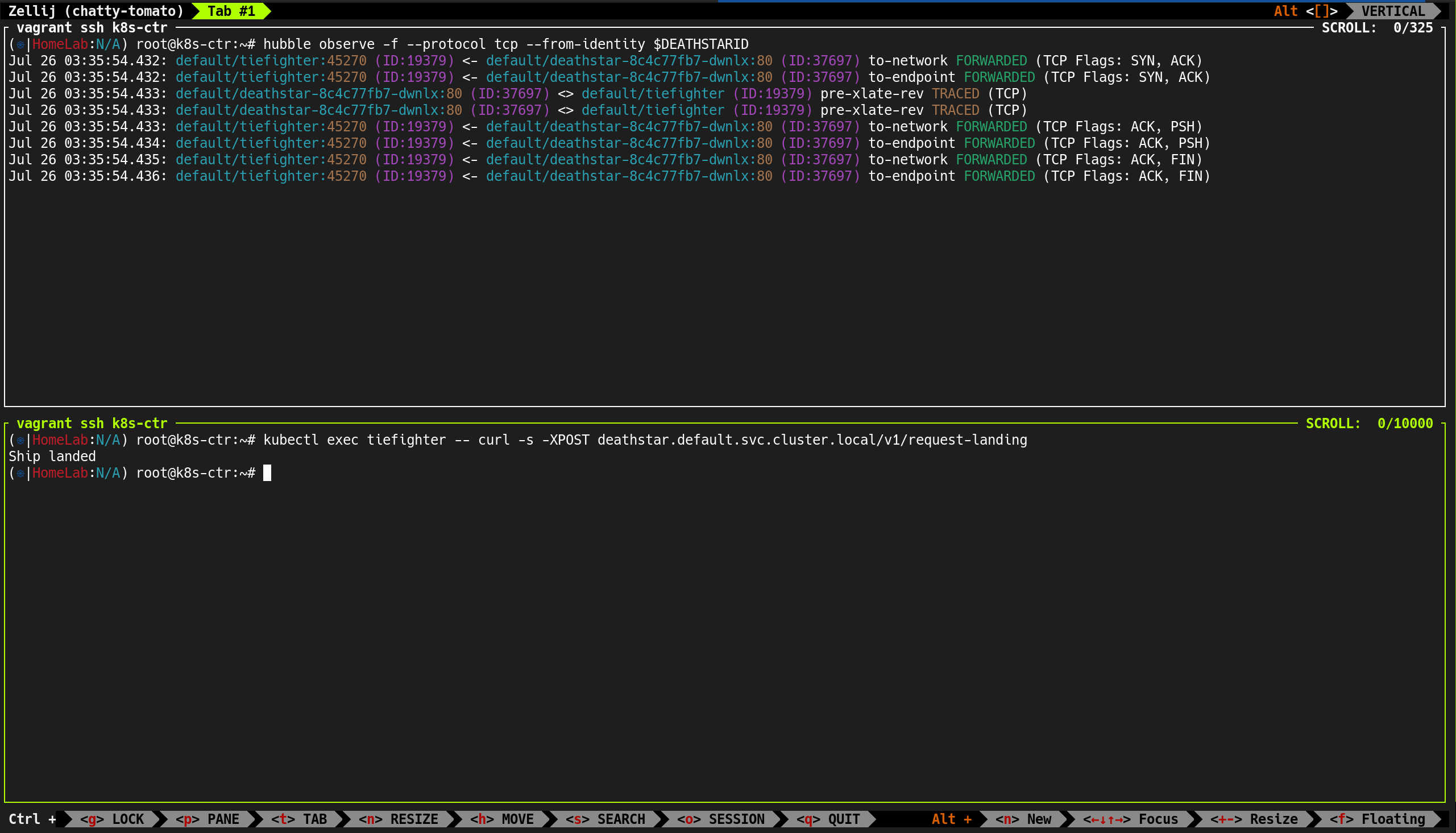

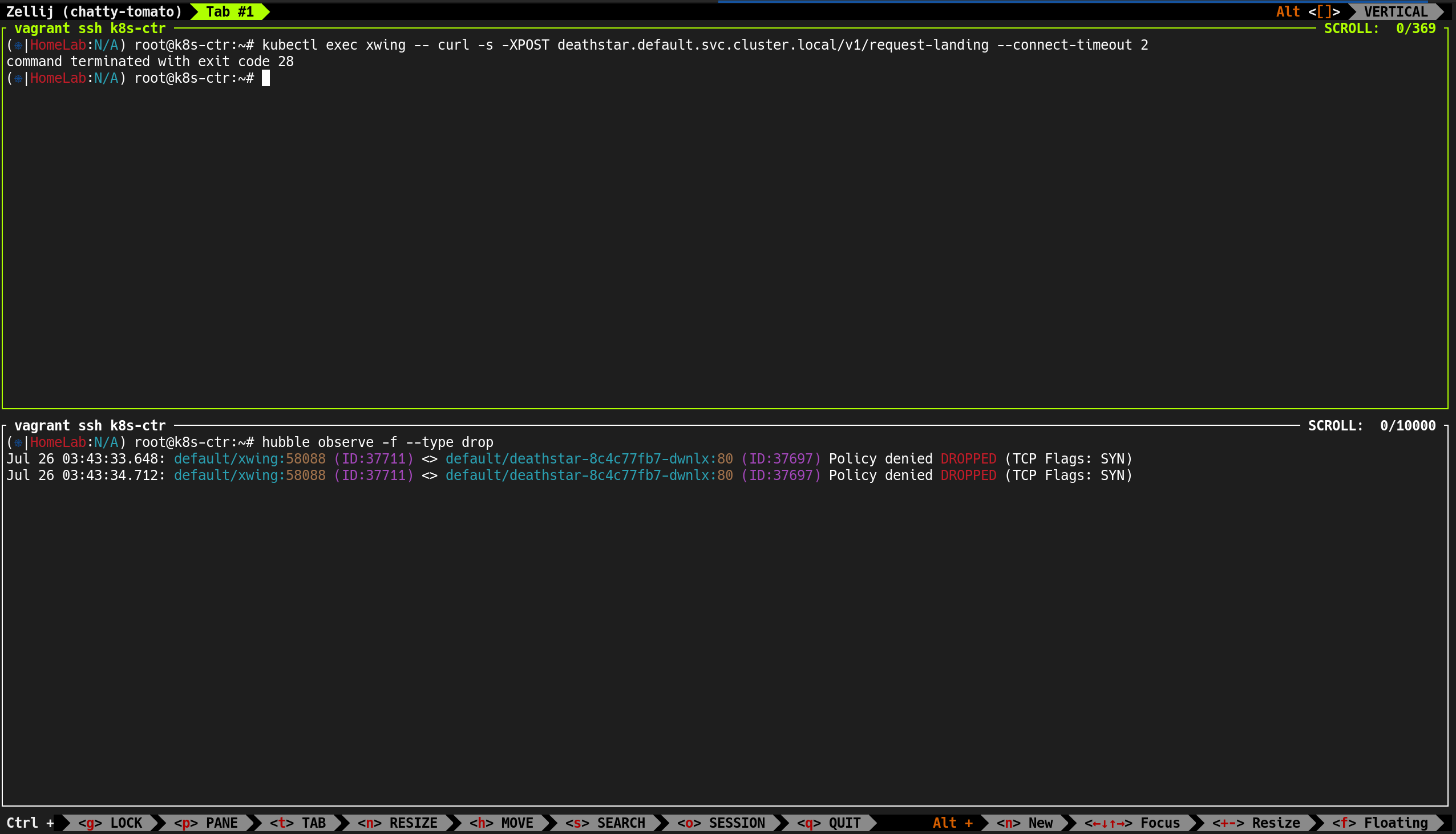

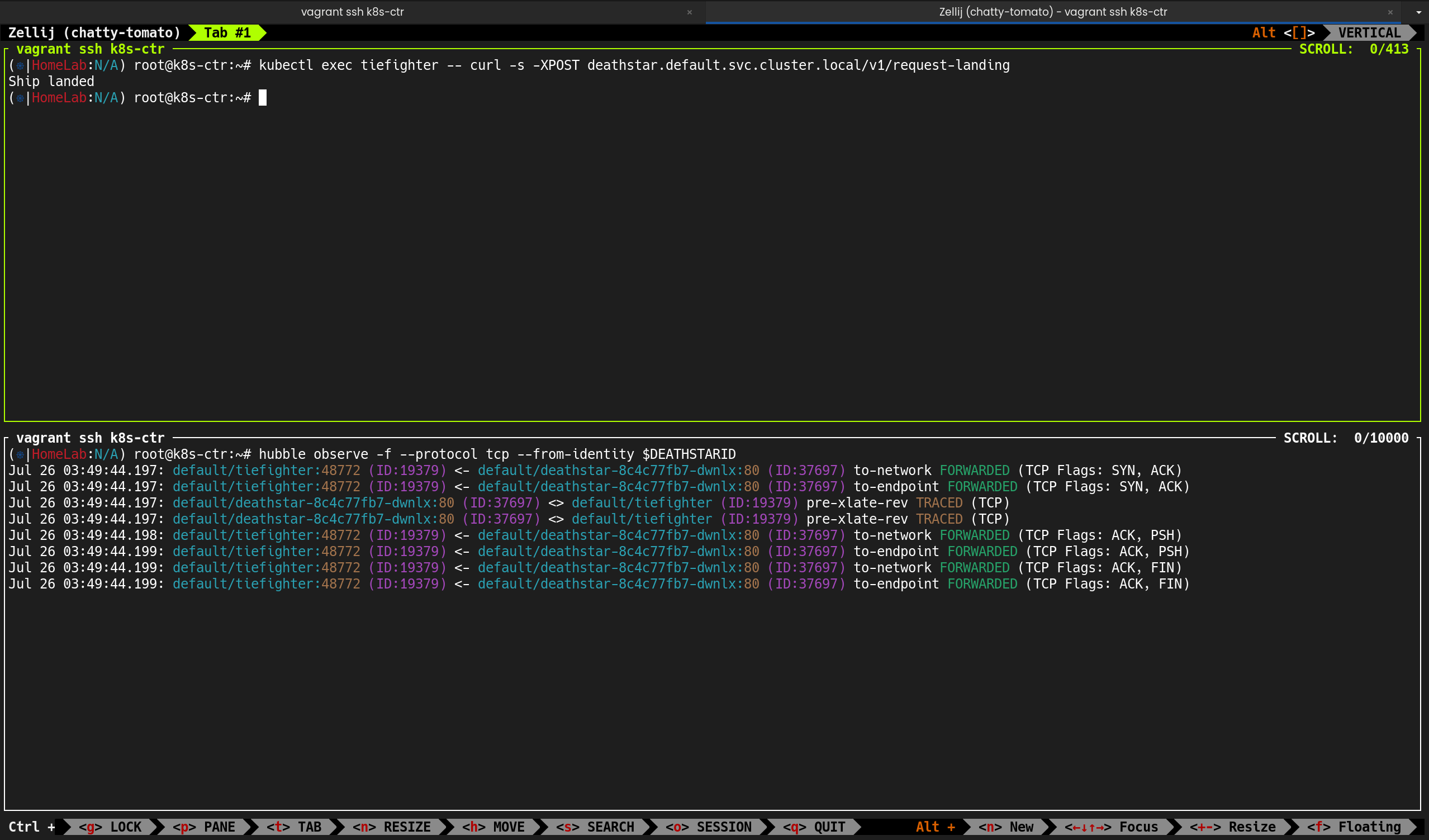

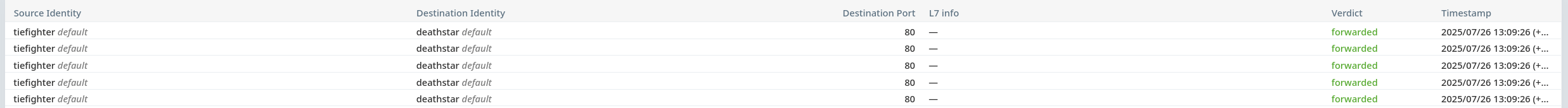

👀 Check the current access state

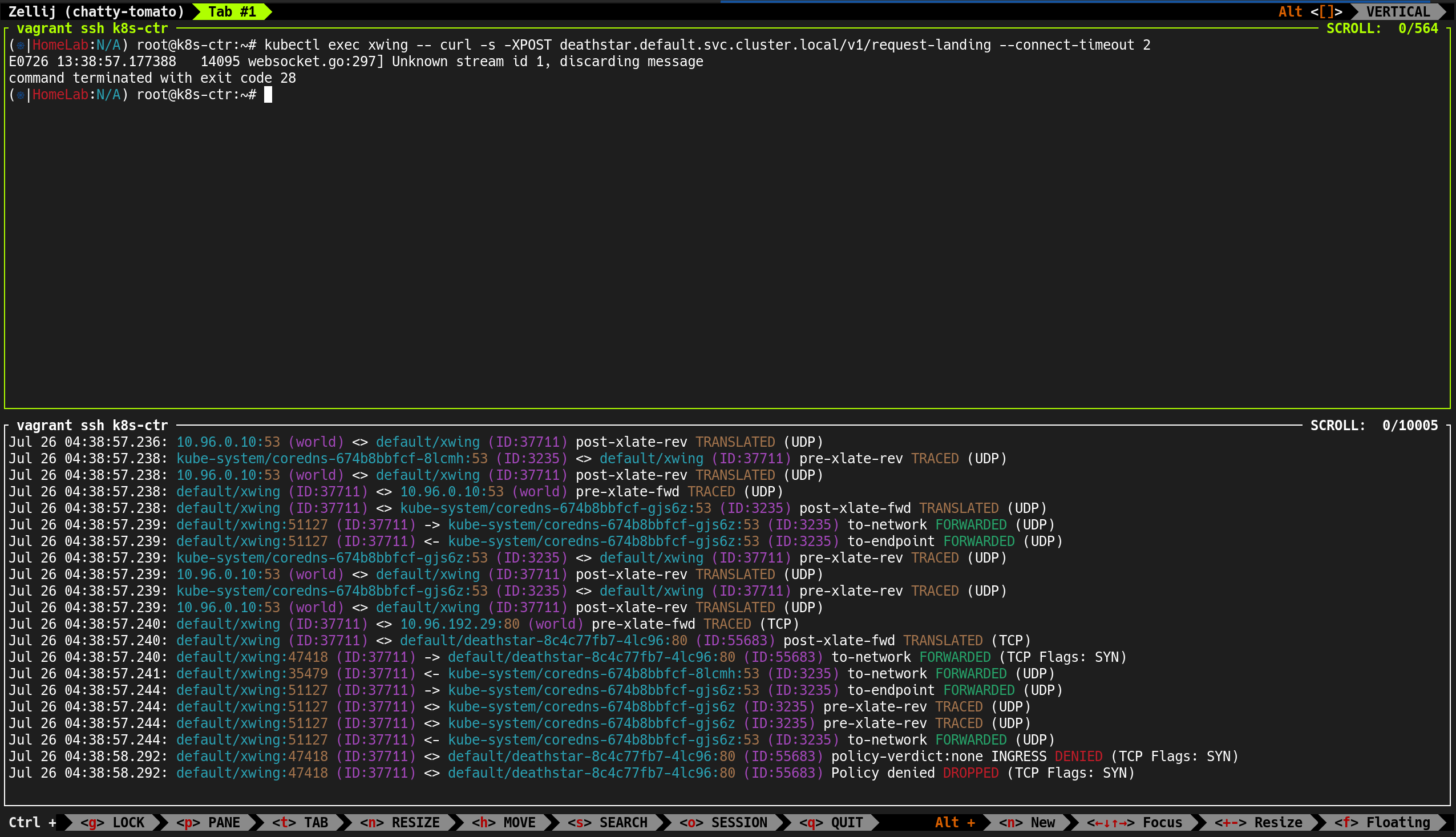

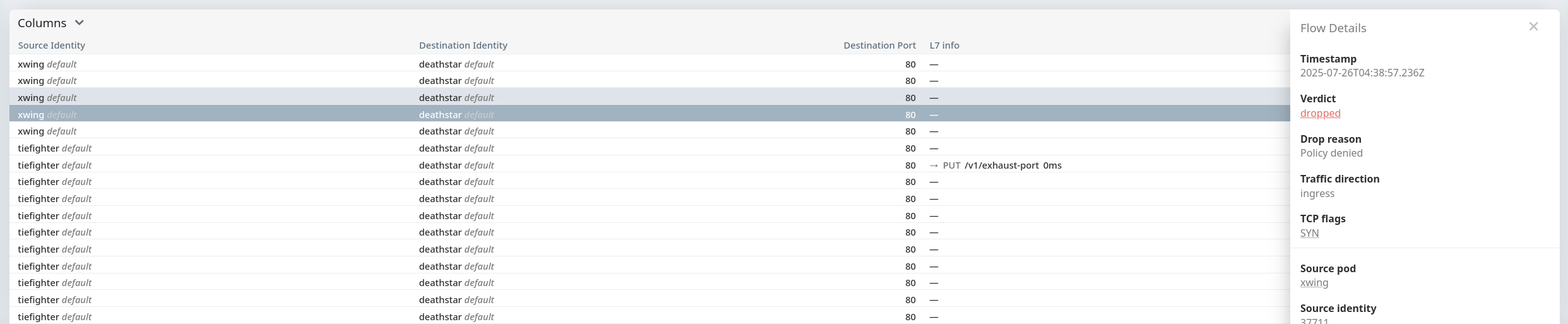

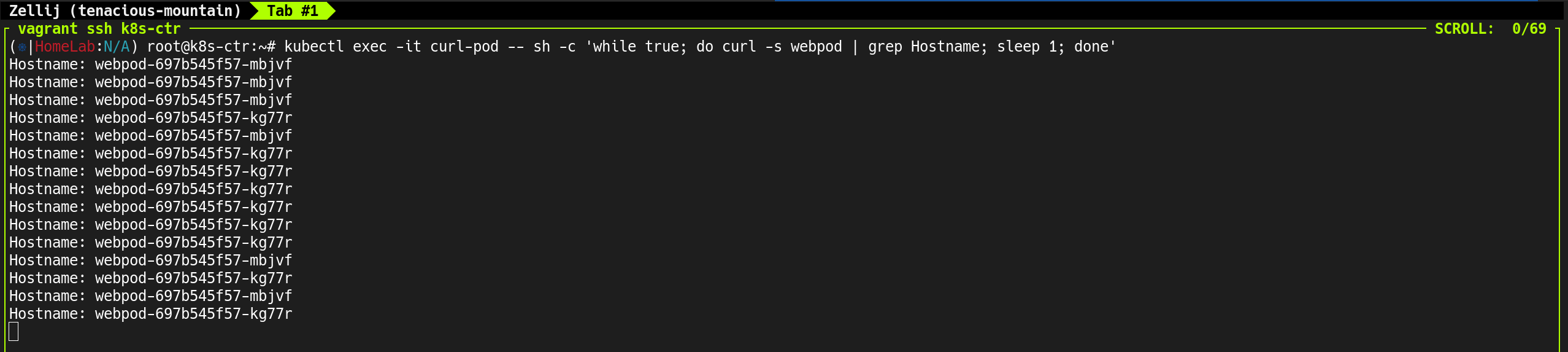

1. Check whether deathstar is currently reachable

| (⎈|HomeLab:N/A) root@k8s-ctr:~# c1 endpoint list | grep -iE 'xwing|tiefighter|deathstar'

|

✅ Output

| 184 Disabled Disabled 37697 k8s:app.kubernetes.io/name=deathstar 172.20.1.188 ready

k8s:class=deathstar

2272 Disabled Disabled 37711 k8s:app.kubernetes.io/name=xwing 172.20.1.61 ready

k8s:class=xwing

|


| (⎈|HomeLab:N/A) root@k8s-ctr:~# c2 endpoint list | grep -iE 'xwing|tiefighter|deathstar'

|

✅ Output

| 115 Disabled Disabled 55683 k8s:app.kubernetes.io/name=deathstar 172.20.2.9 ready

k8s:class=deathstar

2643 Disabled Disabled 19379 k8s:app.kubernetes.io/name=tiefighter 172.20.2.116 ready

k8s:class=tiefighter

|

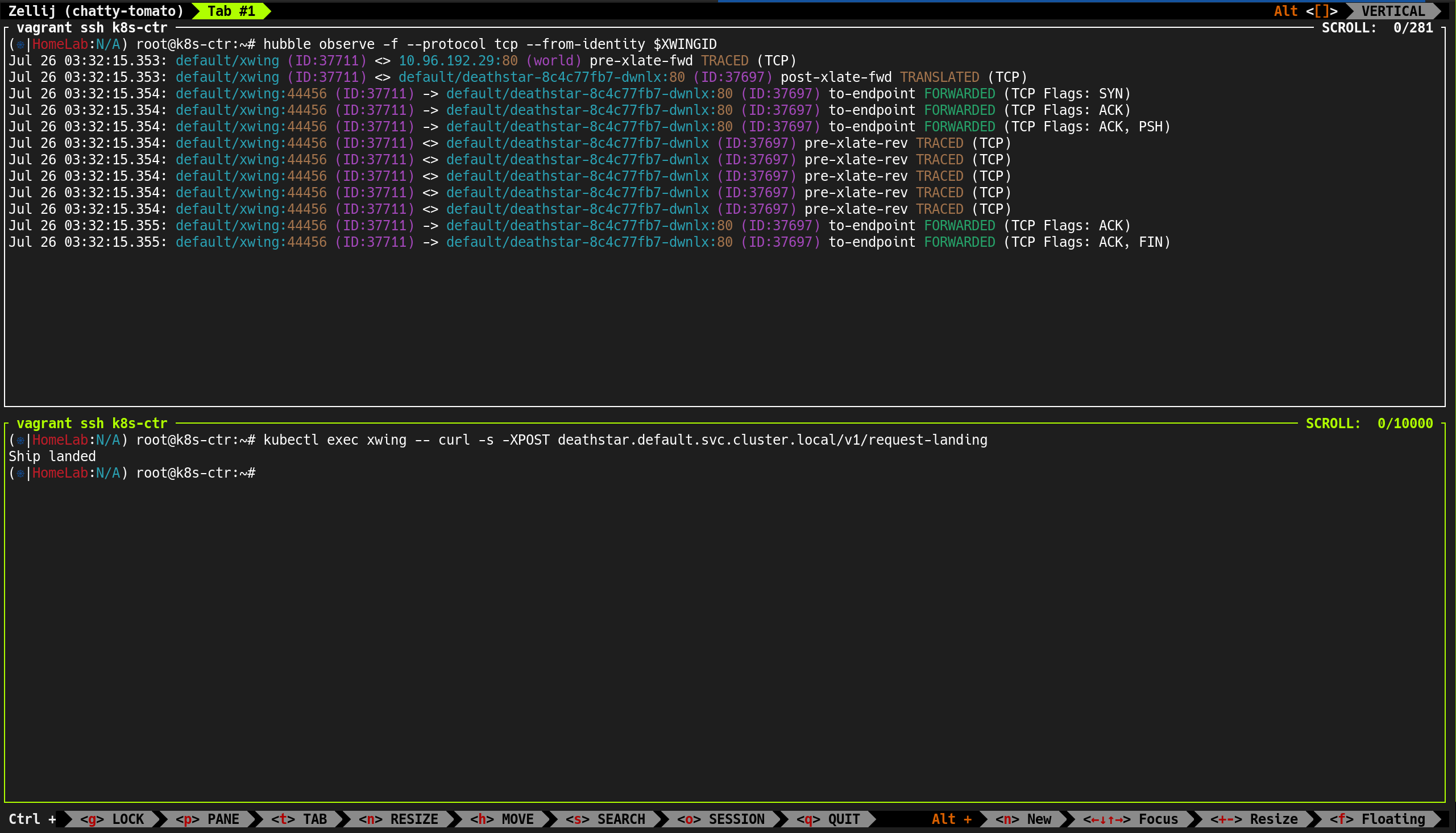

- The Deathstar service is meant to accept only clients labeled org=empire

- No network policy (CNP) has been applied yet, so both xwing and tiefighter can reach it

- The Deathstar, Xwing, and Tiefighter pods are all in the ready state on their nodes
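The "no policy yet" state is visible in the two POLICY ENFORCEMENT columns, which read Disabled for every endpoint in the listings above. The sketch below picks out those rows from `cilium endpoint list` text; `unenforced_endpoints` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `cilium endpoint list` text on stdin and prints
# "<endpoint id> <identity>" for rows where both ingress and egress
# enforcement are Disabled. Label continuation lines are skipped because
# their first field is not numeric.
unenforced_endpoints() {
  awk '$1 ~ /^[0-9]+$/ && $2 == "Disabled" && $3 == "Disabled" { print $1, $4 }'
}

# Usage from the interactive shell where the c1 alias is defined:
#   c1 endpoint list | unenforced_endpoints
```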

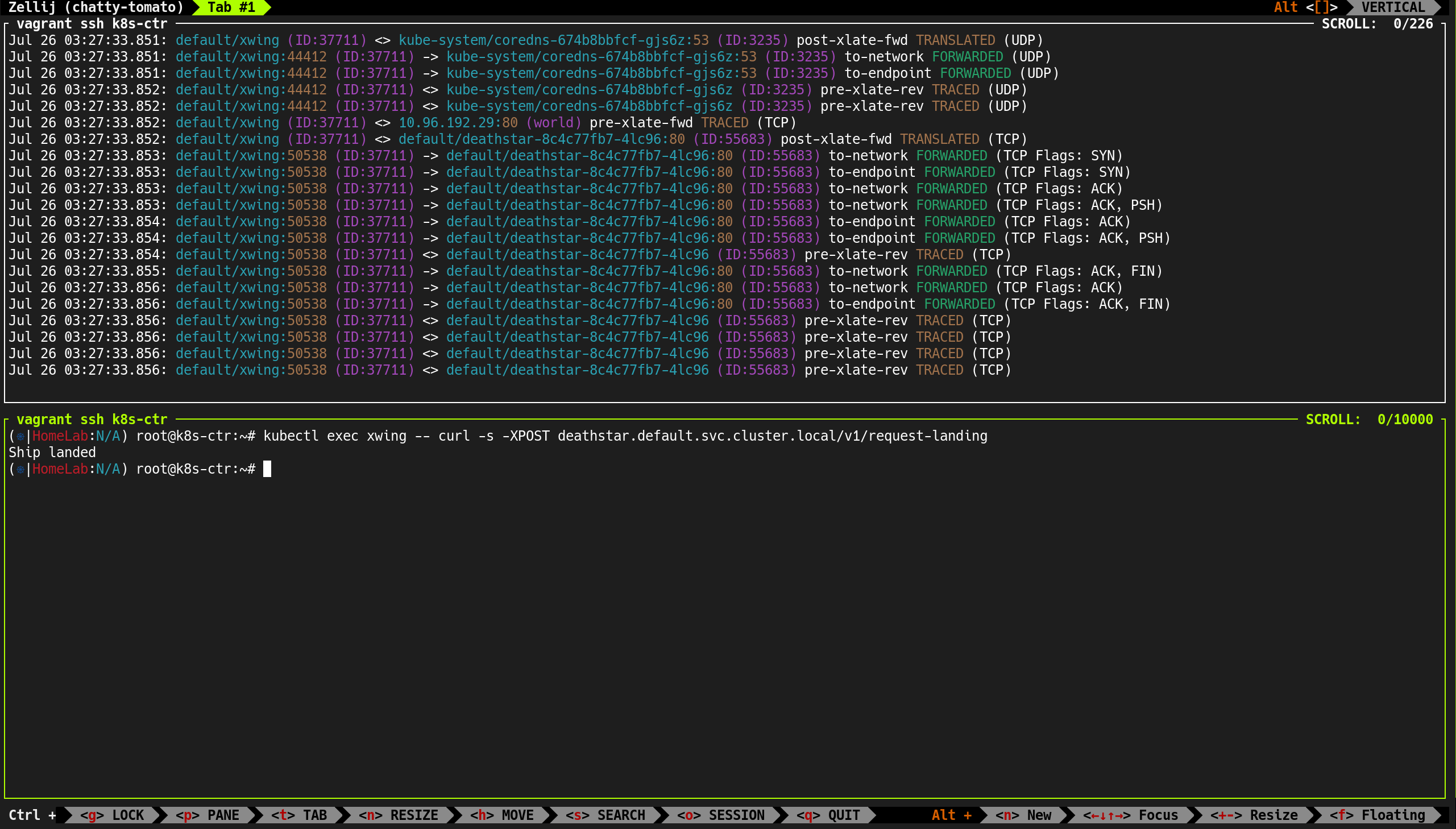

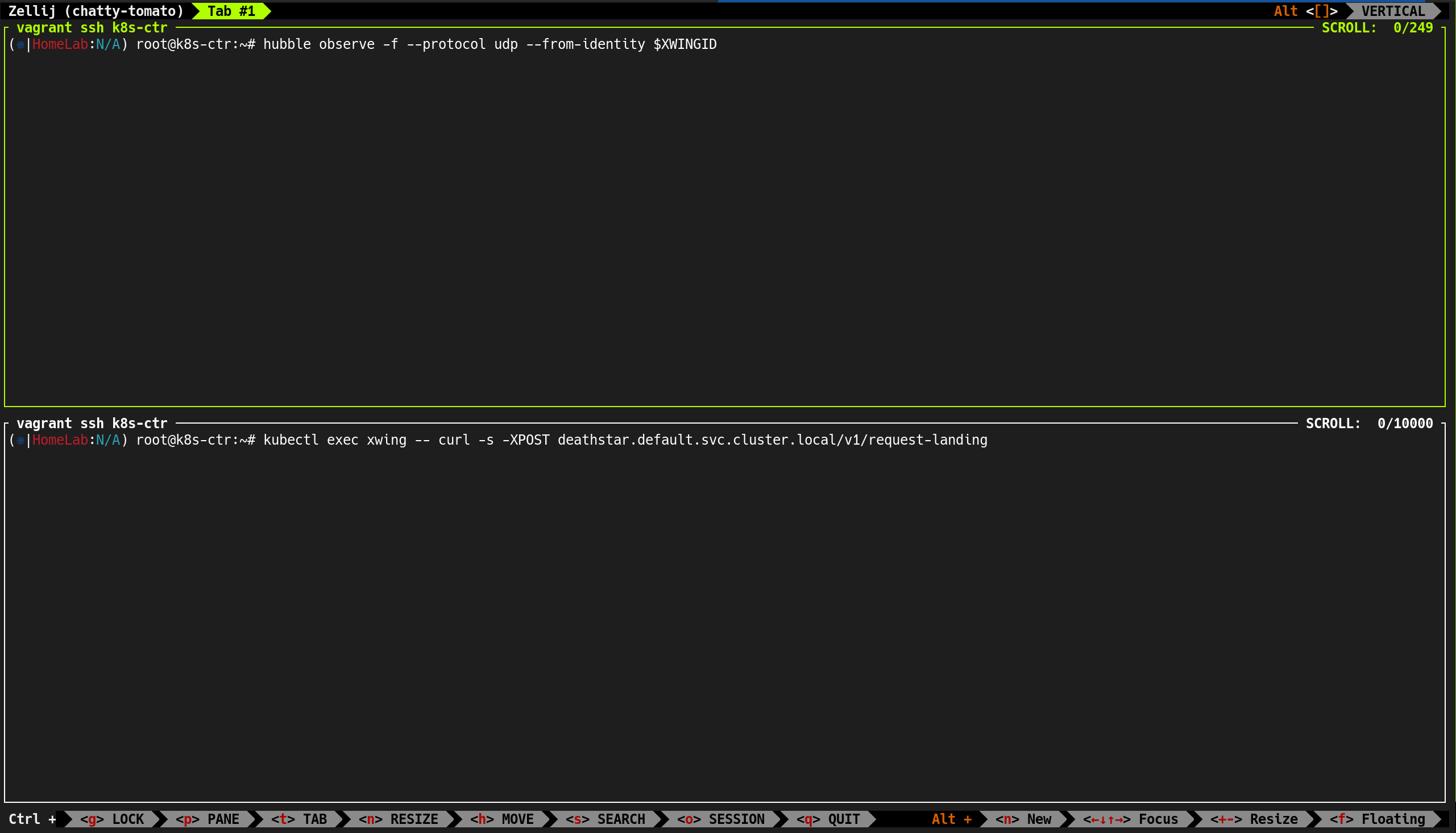

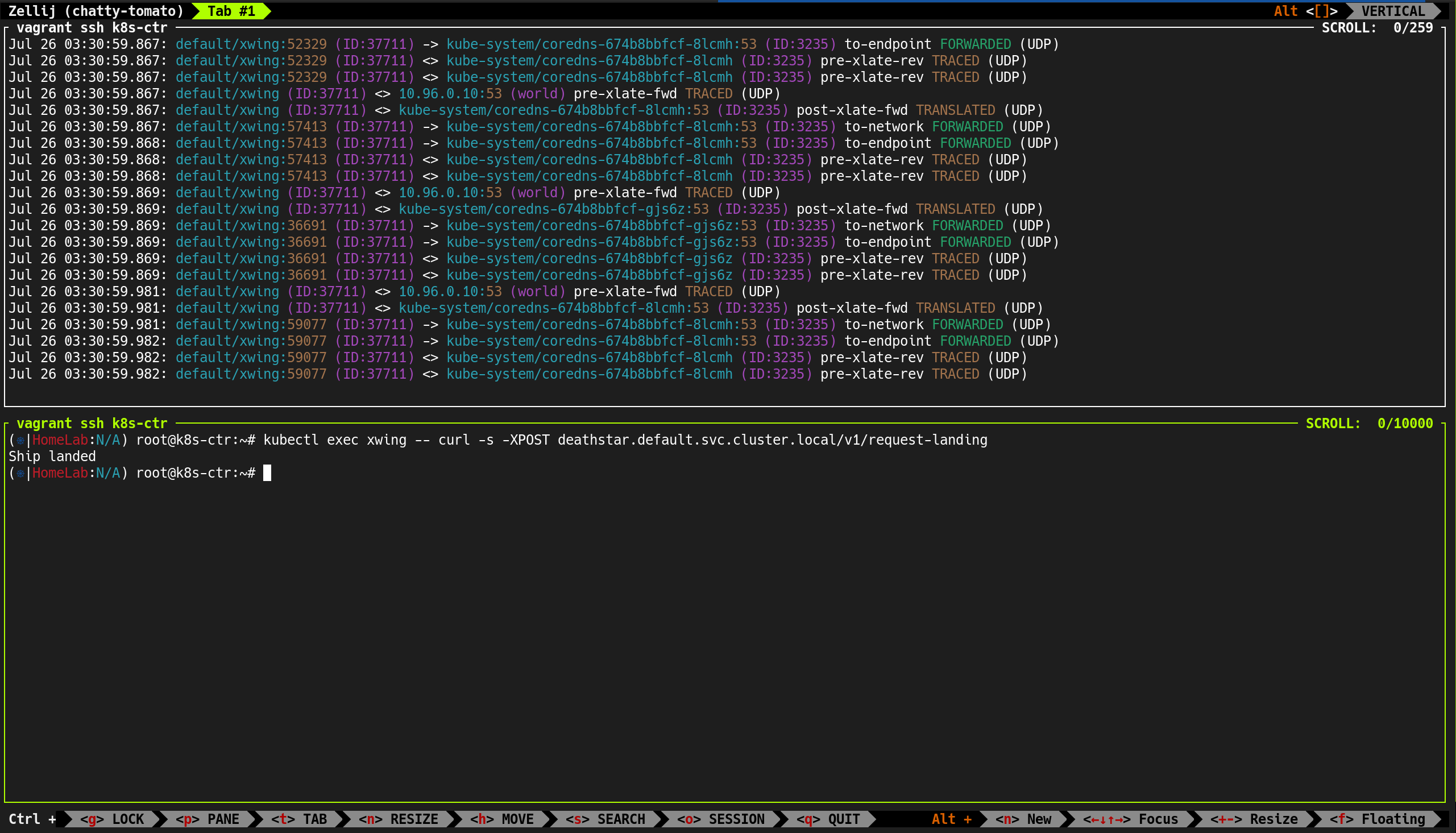

2. Monitor each node (C0/C1/C2)

The Cilium monitor command shows traffic flows in real time

| (⎈|HomeLab:N/A) root@k8s-ctr:~# c0 monitor -v -v

|

✅ Output

| Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

------------------------------------------------------------------------------

time="2025-07-26T01:56:34.86612445Z" level=info msg="Initializing dissection cache..." subsys=monitor

Ethernet {Contents=[..14..] Payload=[..78..] SrcMAC=08:00:27:cf:db:ea DstMAC=08:00:27:36:0a:32 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..56..] Version=4 IHL=5 TOS=0 Length=76 Id=13401 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=28728 SrcIP=192.168.10.100 DstIP=192.168.10.102 Options=[] Padding=[]}

TCP {Contents=[..32..] Payload=[..24..] SrcPort=56870 DstPort=10250 Seq=1360120777 Ack=634515328 DataOffset=8 FIN=false SYN=false RST=false PSH=true ACK=true URG=false ECE=false CWR=false NS=false Window=991 Checksum=38489 Urgent=0 Options=[TCPOption(NOP:), TCPOption(NOP:), TCPOption(Timestamps:3450183246/4076572814 0xcda59e4ef2fb908e)] Padding=[] Multipath=false}

CPU 01: MARK 0xe50d4628 FROM 1119 to-network: 90 bytes (90 captured), state established, interface eth1, , identity host->unknown, orig-ip 192.168.10.100

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..62..] SrcMAC=f6:8a:5d:17:4b:ae DstMAC=fa:3a:73:16:b2:e8 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=1306 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=32116 SrcIP=10.0.2.15 DstIP=172.20.0.11 Options=[] Padding=[]}

TCP {Contents=[..40..] Payload=[] SrcPort=46488 DstPort=8181(intermapper) Seq=3425383573 Ack=0 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=47196 Urgent=0 Options=[..5..] Padding=[] Multipath=false}

CPU 01: MARK 0x7bc3aa86 FROM 1122 to-endpoint: 74 bytes (74 captured), state new, interface lxc13029d3e0d35, , identity host->3235, orig-ip 10.0.2.15, to endpoint 1122

------------------------------------------------------------------------------

...

|
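Each verbose record ends with a `CPU ...` summary line that names the identity pair, such as `identity host->3235`. Tallying those pairs gives a quick picture of who is talking to whom. This sketch assumes the summary format shown above and works on a saved capture, since the monitor streams until it is interrupted; `monitor_identities` and the file name `monitor.log` are hypothetical.

```shell
# Hypothetical helper: reads saved `cilium monitor -v -v` text on stdin and
# prints "<count> <src>-><dst>" for each identity pair found.
monitor_identities() {
  grep -o 'identity [^,]*' \
    | awk '{ n[$2]++ } END { for (p in n) print n[p], p }' \
    | LC_ALL=C sort -k2
}

# Usage on a saved capture:
#   monitor_identities < monitor.log
```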


| (⎈|HomeLab:N/A) root@k8s-ctr:~# c1 monitor -v -v

|

✅ Output

| Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

------------------------------------------------------------------------------

time="2025-07-26T01:56:44.690724179Z" level=info msg="Initializing dissection cache..." subsys=monitor

Ethernet {Contents=[..14..] Payload=[..62..] SrcMAC=4a:f1:4d:a8:56:1f DstMAC=62:83:f4:95:c7:a1 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=47457 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=51176 SrcIP=10.0.2.15 DstIP=172.20.1.79 Options=[] Padding=[]}

TCP {Contents=[..40..] Payload=[] SrcPort=49718 DstPort=8081(sunproxyadmin) Seq=2450151608 Ack=0 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=47520 Urgent=0 Options=[..5..] Padding=[] Multipath=false}

CPU 01: MARK 0xfe7f56b8 FROM 827 to-endpoint: 74 bytes (74 captured), state new, interface lxcd54448478341, , identity host->14998, orig-ip 10.0.2.15, to endpoint 827

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..62..] SrcMAC=62:83:f4:95:c7:a1 DstMAC=4a:f1:4d:a8:56:1f EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=0 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=33098 SrcIP=172.20.1.79 DstIP=10.0.2.15 Options=[] Padding=[]}

TCP {Contents=[..40..] Payload=[] SrcPort=8081(sunproxyadmin) DstPort=49718 Seq=1872207516 Ack=2450151609 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=65160 Checksum=47520 Urgent=0 Options=[..5..] Padding=[] Multipath=false}

CPU 01: MARK 0x2bf841f5 FROM 827 to-stack: 74 bytes (74 captured), state reply, , identity 14998->host, orig-ip 0.0.0.0

------------------------------------------------------------------------------

...

|


| (⎈|HomeLab:N/A) root@k8s-ctr:~# c2 monitor -v -v

|

✅ Output

| Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

------------------------------------------------------------------------------

time="2025-07-26T01:56:56.589423599Z" level=info msg="Initializing dissection cache..." subsys=monitor

Ethernet {Contents=[..14..] Payload=[..54..] SrcMAC=08:00:27:36:0a:32 DstMAC=08:00:27:cf:db:ea EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=5472 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=36681 SrcIP=192.168.10.102 DstIP=192.168.10.100 Options=[] Padding=[]}

TCP {Contents=[..32..] Payload=[] SrcPort=47870 DstPort=6443(sun-sr-https) Seq=2993297840 Ack=594274701 DataOffset=8 FIN=false SYN=false RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=6125 Checksum=38465 Urgent=0 Options=[TCPOption(NOP:), TCPOption(NOP:), TCPOption(Timestamps:4076599536/3450204970 0xf2fbf8f0cda5f32a)] Padding=[] Multipath=false}

CPU 01: MARK 0xd11396d8 FROM 451 to-network: 66 bytes (66 captured), state established, interface eth1, , identity host->unknown, orig-ip 192.168.10.102

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..62..] SrcMAC=b2:be:eb:e9:e7:5a DstMAC=5a:0d:e7:cf:c6:f4 EthernetType=IPv4 Length=0}