Cilium Week 1 Summary

🐧 Lab Environment Setup (Arch Linux)

1. Install and Configure VirtualBox

(1) Install the required packages

sudo pacman -S virtualbox virtualbox-host-modules-arch linux-headers

(2) Check the VirtualBox version

VBoxManage --version

✅ Output

7.1.10r169112

(3) Load the kernel modules

sudo modprobe vboxdrv

sudo modprobe vboxnetflt

sudo modprobe vboxnetadp

(4) Load the vboxdrv module automatically at boot

sudo bash -c 'echo vboxdrv > /etc/modules-load.d/virtualbox.conf'
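
Step (3) loads three modules by hand, but the file written above lists only vboxdrv. If vboxnetflt and vboxnetadp should also load at boot, a sketch like the following writes all three names, one per line. It assumes nothing else on the host (for example a file shipped by the VirtualBox package) already loads them. The helper name write_modules_conf is made up for this example, and the demo writes to a scratch file rather than /etc.

```shell
#!/bin/sh
# Write one module name per line, the format modules-load.d files use.
# $1 is the target file; the remaining arguments are module names.
write_modules_conf() {
    target=$1
    shift
    printf '%s\n' "$@" > "$target"
}

# Demo against a scratch file. On the real host the target would be
# /etc/modules-load.d/virtualbox.conf, written as root.
conf=$(mktemp)
write_modules_conf "$conf" vboxdrv vboxnetflt vboxnetadp
cat "$conf"
rm -f "$conf"
```

The demo prints the three module names in the order they were passed.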

2. Install and Initialize Vagrant

(1) Install Vagrant

sudo pacman -S vagrant

(2) Check the Vagrant version

vagrant --version

✅ Output

Vagrant 2.4.7

(3) Create a working directory, download the Vagrantfile, and start the VMs

mkdir -p cilium-lab/1w && cd cilium-lab/1w

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/1w/Vagrantfile

vagrant up

3. 네트워크 설정 파일 수정

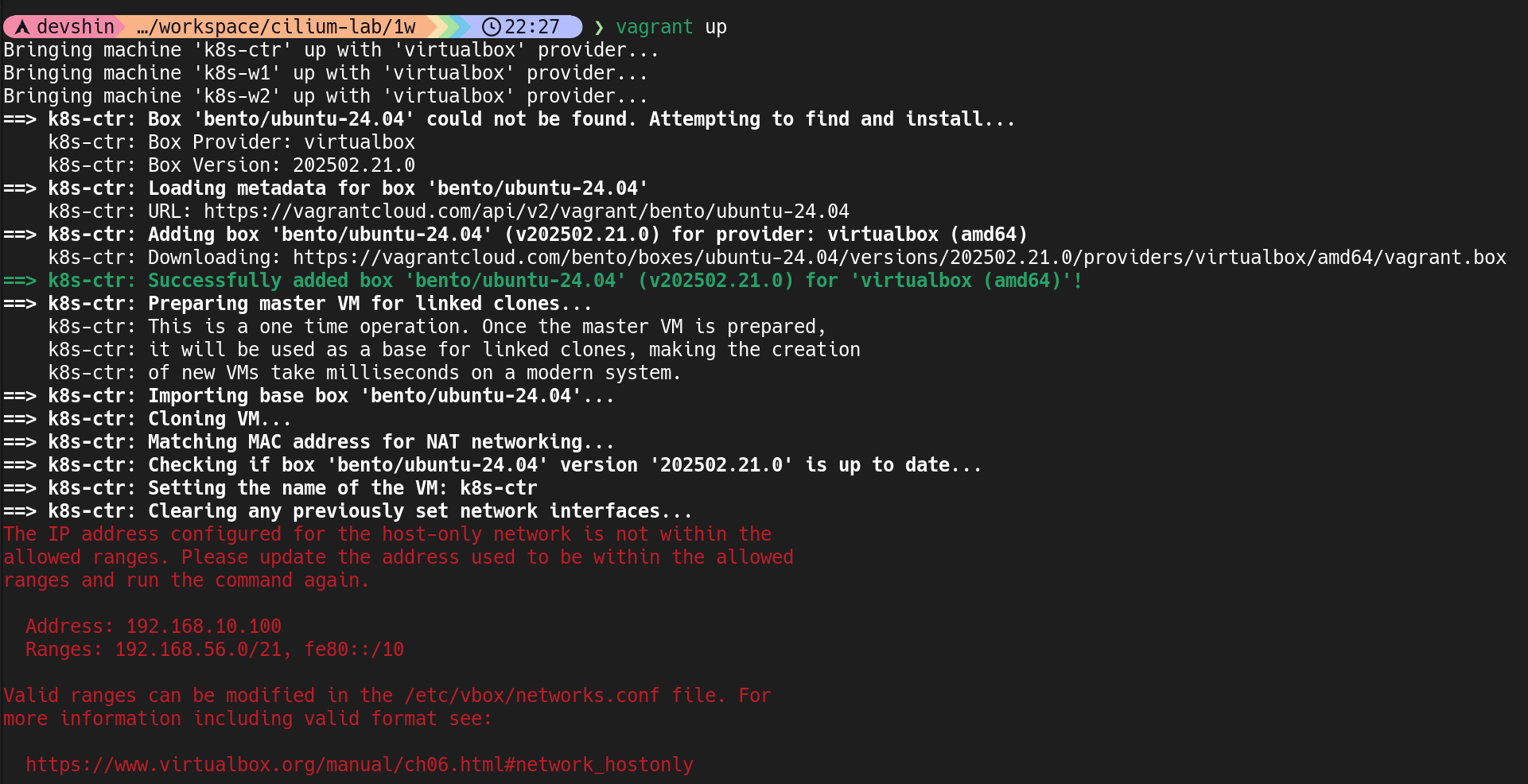

The IP address configured for the host-only network is not within the allowed ranges 오류 발생

VirtualBox 6.1 이상부터는 Host-Only 네트워크에 허용된 IP 대역만 사용가능

현재 허용된 대역

1

192.168.56.0/21

IP address specified in the Vagrantfile

192.168.10.100 ❌ (outside the allowed range)
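
To see why this address is rejected, the membership test can be worked out by hand: 192.168.56.0/21 covers 192.168.56.0 through 192.168.63.255, and 192.168.10.100 falls outside it. The following is a minimal sketch of that check in plain shell arithmetic. The helper names ip_to_int and in_cidr are made up for this example and are not part of VirtualBox or Vagrant.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 when address $1 lies inside CIDR block $2, 1 otherwise.
in_cidr() (
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
)

# Compare an address inside the default range with the Vagrantfile address.
for ip in 192.168.56.10 192.168.10.100; do
    if in_cidr "$ip" 192.168.56.0/21; then
        echo "$ip is inside 192.168.56.0/21"
    else
        echo "$ip is outside 192.168.56.0/21"
    fi
done
```

The same function can confirm that a range such as 192.168.10.0/24 does cover the Vagrantfile address: `in_cidr 192.168.10.100 192.168.10.0/24` returns success.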

Add the IP range to the VirtualBox configuration

sudo vim /etc/vbox/networks.conf

Add the following line

* 192.168.10.0/24
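
As an alternative to editing the file by hand, the line can be appended from a script in a way that is safe to repeat. This is only a sketch: ensure_line is a hypothetical helper, and the demo runs against a scratch file instead of /etc/vbox/networks.conf, which would need root privileges and an existing /etc/vbox directory.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
# Prints "added" or "present" so the caller can see what happened.
ensure_line() {
    file=$1
    line=$2
    if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
        echo "present"
    else
        printf '%s\n' "$line" >> "$file"
        echo "added"
    fi
}

# Demo against a scratch file: the second call must not add a duplicate.
conf=$(mktemp)
ensure_line "$conf" '* 192.168.10.0/24'
ensure_line "$conf" '* 192.168.10.0/24'
cat "$conf"
rm -f "$conf"
```

grep is called with -F so that the leading asterisk is matched literally, and with -x so that only a whole-line match counts.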

Destroy the existing VMs and start again

vagrant destroy -f

vagrant up

✅ Output

Bringing machine 'k8s-ctr' up with 'virtualbox' provider...

Bringing machine 'k8s-w1' up with 'virtualbox' provider...

Bringing machine 'k8s-w2' up with 'virtualbox' provider...

==> k8s-ctr: Cloning VM...

==> k8s-ctr: Matching MAC address for NAT networking...

==> k8s-ctr: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-ctr: Setting the name of the VM: k8s-ctr

==> k8s-ctr: Clearing any previously set network interfaces...

==> k8s-ctr: Preparing network interfaces based on configuration...

k8s-ctr: Adapter 1: nat

k8s-ctr: Adapter 2: hostonly

==> k8s-ctr: Forwarding ports...

k8s-ctr: 22 (guest) => 60000 (host) (adapter 1)

==> k8s-ctr: Running 'pre-boot' VM customizations...

==> k8s-ctr: Booting VM...

==> k8s-ctr: Waiting for machine to boot. This may take a few minutes...

k8s-ctr: SSH address: 127.0.0.1:60000

k8s-ctr: SSH username: vagrant

k8s-ctr: SSH auth method: private key

k8s-ctr:

k8s-ctr: Vagrant insecure key detected. Vagrant will automatically replace

k8s-ctr: this with a newly generated keypair for better security.

k8s-ctr:

k8s-ctr: Inserting generated public key within guest...

k8s-ctr: Removing insecure key from the guest if it's present...

k8s-ctr: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-ctr: Machine booted and ready!

==> k8s-ctr: Checking for guest additions in VM...

==> k8s-ctr: Setting hostname...

==> k8s-ctr: Configuring and enabling network interfaces...

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250714-112889-1m78z0.sh

k8s-ctr: >>>> Initial Config Start <<<<

k8s-ctr: [TASK 1] Setting Profile & Change Timezone

k8s-ctr: [TASK 2] Disable AppArmor

k8s-ctr: [TASK 3] Disable and turn off SWAP

k8s-ctr: [TASK 4] Install Packages

k8s-ctr: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-ctr: [TASK 6] Install Packages & Helm

k8s-ctr: >>>> Initial Config End <<<<

==> k8s-ctr: Running provisioner: shell...

k8s-ctr: Running: /tmp/vagrant-shell20250714-112889-mpo3zf.sh

k8s-ctr: >>>> K8S Controlplane config Start <<<<

k8s-ctr: [TASK 1] Initial Kubernetes

k8s-ctr: [TASK 2] Setting kube config file

k8s-ctr: [TASK 3] Source the completion

k8s-ctr: [TASK 4] Alias kubectl to k

k8s-ctr: [TASK 5] Install Kubectx & Kubens

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: [TASK 6] Install Kubeps & Setting PS1

k8s-ctr: >>>> K8S Controlplane Config End <<<<

==> k8s-w1: Cloning VM...

==> k8s-w1: Matching MAC address for NAT networking...

==> k8s-w1: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-w1: Setting the name of the VM: k8s-w1

==> k8s-w1: Clearing any previously set network interfaces...

==> k8s-w1: Preparing network interfaces based on configuration...

k8s-w1: Adapter 1: nat

k8s-w1: Adapter 2: hostonly

==> k8s-w1: Forwarding ports...

k8s-w1: 22 (guest) => 60001 (host) (adapter 1)

==> k8s-w1: Running 'pre-boot' VM customizations...

==> k8s-w1: Booting VM...

==> k8s-w1: Waiting for machine to boot. This may take a few minutes...

k8s-w1: SSH address: 127.0.0.1:60001

k8s-w1: SSH username: vagrant

k8s-w1: SSH auth method: private key

k8s-w1:

k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w1: this with a newly generated keypair for better security.

k8s-w1:

k8s-w1: Inserting generated public key within guest...

k8s-w1: Removing insecure key from the guest if it's present...

k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w1: Machine booted and ready!

==> k8s-w1: Checking for guest additions in VM...

==> k8s-w1: Setting hostname...

==> k8s-w1: Configuring and enabling network interfaces...

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250714-112889-tnj10t.sh

k8s-w1: >>>> Initial Config Start <<<<

k8s-w1: [TASK 1] Setting Profile & Change Timezone

k8s-w1: [TASK 2] Disable AppArmor

k8s-w1: [TASK 3] Disable and turn off SWAP

k8s-w1: [TASK 4] Install Packages

k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w1: [TASK 6] Install Packages & Helm

k8s-w1: >>>> Initial Config End <<<<

==> k8s-w1: Running provisioner: shell...

k8s-w1: Running: /tmp/vagrant-shell20250714-112889-gsjiv7.sh

k8s-w1: >>>> K8S Node config Start <<<<

k8s-w1: [TASK 1] K8S Controlplane Join

k8s-w1: >>>> K8S Node config End <<<<

==> k8s-w2: Cloning VM...

==> k8s-w2: Matching MAC address for NAT networking...

==> k8s-w2: Checking if box 'bento/ubuntu-24.04' version '202502.21.0' is up to date...

==> k8s-w2: Setting the name of the VM: k8s-w2

==> k8s-w2: Clearing any previously set network interfaces...

==> k8s-w2: Preparing network interfaces based on configuration...

k8s-w2: Adapter 1: nat

k8s-w2: Adapter 2: hostonly

==> k8s-w2: Forwarding ports...

k8s-w2: 22 (guest) => 60002 (host) (adapter 1)

==> k8s-w2: Running 'pre-boot' VM customizations...

==> k8s-w2: Booting VM...

==> k8s-w2: Waiting for machine to boot. This may take a few minutes...

k8s-w2: SSH address: 127.0.0.1:60002

k8s-w2: SSH username: vagrant

k8s-w2: SSH auth method: private key

k8s-w2:

k8s-w2: Vagrant insecure key detected. Vagrant will automatically replace

k8s-w2: this with a newly generated keypair for better security.

k8s-w2:

k8s-w2: Inserting generated public key within guest...

k8s-w2: Removing insecure key from the guest if it's present...

k8s-w2: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-w2: Machine booted and ready!

==> k8s-w2: Checking for guest additions in VM...

==> k8s-w2: Setting hostname...

==> k8s-w2: Configuring and enabling network interfaces...

==> k8s-w2: Running provisioner: shell...

k8s-w2: Running: /tmp/vagrant-shell20250714-112889-cd8mcd.sh

k8s-w2: >>>> Initial Config Start <<<<

k8s-w2: [TASK 1] Setting Profile & Change Timezone

k8s-w2: [TASK 2] Disable AppArmor

k8s-w2: [TASK 3] Disable and turn off SWAP

k8s-w2: [TASK 4] Install Packages

k8s-w2: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)

k8s-w2: [TASK 6] Install Packages & Helm

k8s-w2: >>>> Initial Config End <<<<

==> k8s-w2: Running provisioner: shell...

k8s-w2: Running: /tmp/vagrant-shell20250714-112889-ncfxlg.sh

k8s-w2: >>>> K8S Node config Start <<<<

k8s-w2: [TASK 1] K8S Controlplane Join

k8s-w2: >>>> K8S Node config End <<<<

4. Check the Virtual Machine Status

vagrant status

✅ Output

Current machine states:

k8s-ctr running (virtualbox)

k8s-w1 running (virtualbox)

k8s-w2 running (virtualbox)

This environment represents multiple VMs. The VMs are all listed

above with their current state. For more information about a specific

VM, run `vagrant status NAME`.
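
When the lab is started from a script, the same check can be automated by scanning the status text for any VM that is not running. The sketch below assumes the line layout shown above (name, state, provider in parentheses). not_running is a made-up helper name, and the sample text deliberately includes one stopped VM.

```shell
#!/bin/sh
# Read `vagrant status` text on stdin and print every VM whose state is not
# "running". The exit status is 0 only when all listed VMs are running.
not_running() {
    awk '/\(virtualbox\)$/ {
            # VM lines look like: "<name> <state...> (virtualbox)"
            state = $2
            for (i = 3; i < NF; i++) state = state " " $i
            if (state != "running") { print $1 ": " state; bad = 1 }
         }
         END { exit bad }'
}

# Sample text in the same shape as the output above, with one VM stopped.
sample='Current machine states:

k8s-ctr running (virtualbox)
k8s-w1 poweroff (virtualbox)
k8s-w2 running (virtualbox)'

printf '%s\n' "$sample" | not_running || echo "some VMs are not running"
```

Against the real environment the call would be `vagrant status | not_running`.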

5. Connect over SSH After Deployment and Check the Node Network

Check the IP address of the eth0 interface on each node

for i in ctr w1 w2 ; do echo ">> node : k8s-$i <<"; vagrant ssh k8s-$i -c 'ip -c -4 addr show dev eth0'; echo; done

✅ Output

>> node : k8s-ctr <<

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s3

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 85640sec preferred_lft 85640sec

>> node : k8s-w1 <<

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s3

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 85784sec preferred_lft 85784sec

>> node : k8s-w2 <<

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s3

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 85941sec preferred_lft 85941sec

6. Connect to k8s-ctr and Check Basic Information

(1) Connect to k8s-ctr

vagrant ssh k8s-ctr

✅ Output

Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Mon Jul 14 10:50:38 PM KST 2025

System load: 0.27

Usage of /: 19.4% of 30.34GB

Memory usage: 31%

Swap usage: 0%

Processes: 161

Users logged in: 0

IPv4 address for eth0: 10.0.2.15

IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.

(⎈|HomeLab:N/A) root@k8s-ctr:~#

(2) Run basic commands inside k8s-ctr

(⎈|HomeLab:N/A) root@k8s-ctr:~# whoami

root

(⎈|HomeLab:N/A) root@k8s-ctr:~# pwd

/root

(⎈|HomeLab:N/A) root@k8s-ctr:~# hostnamectl

Static hostname: k8s-ctr

Icon name: computer-vm

Chassis: vm 🖴

Machine ID: 4f9fb3fa939a46d788144548529797c4

Boot ID: b47345bdfb114c0f99ef542366fb0ebc

Virtualization: oracle

Operating System: Ubuntu 24.04.2 LTS

Kernel: Linux 6.8.0-53-generic

Architecture: x86-64

Hardware Vendor: innotek GmbH

Hardware Model: VirtualBox

Firmware Version: VirtualBox

Firmware Date: Fri 2006-12-01

Firmware Age: 18y 7month 1w 6d

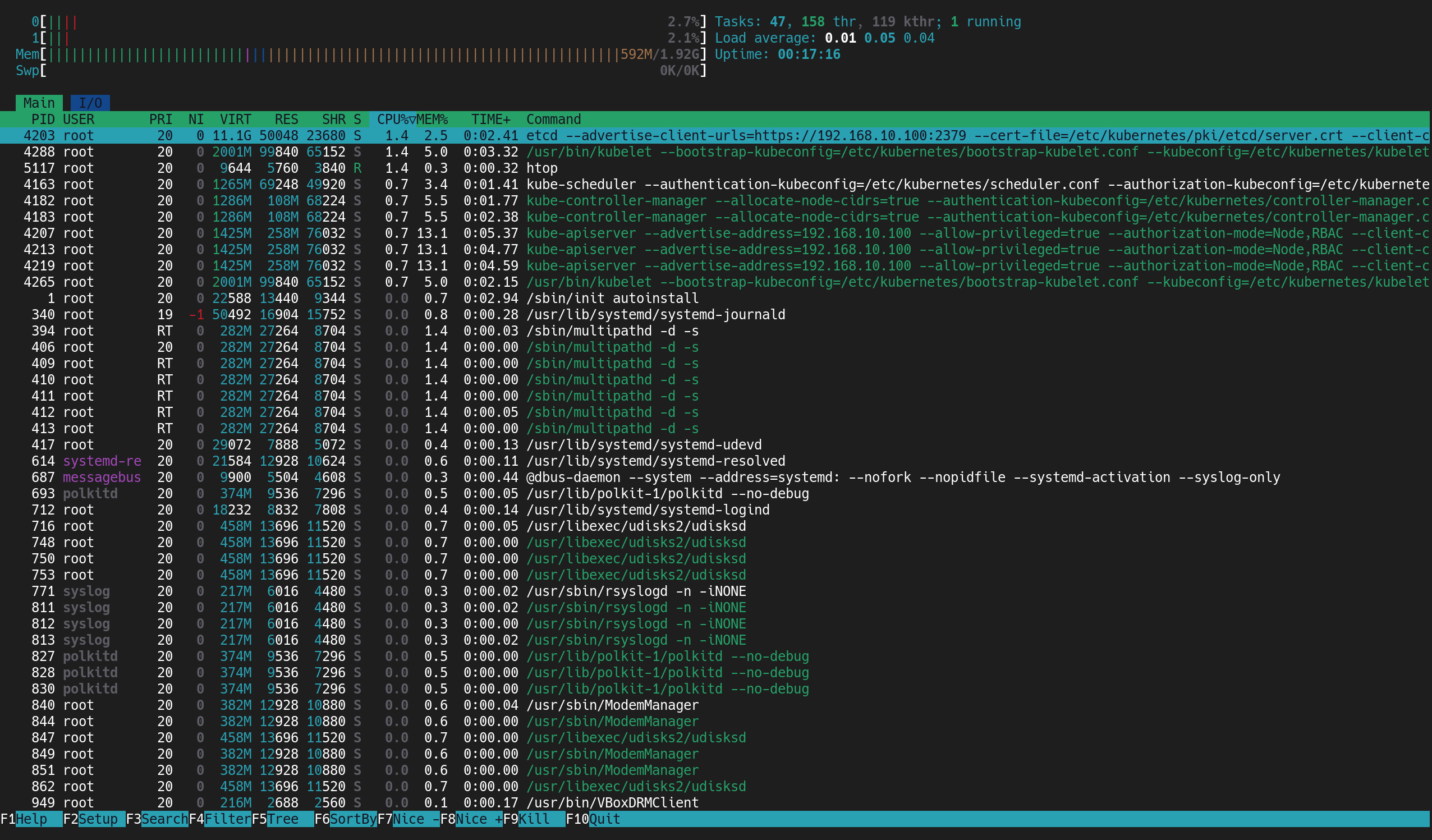

(3) Monitor system resources

(⎈|HomeLab:N/A) root@k8s-ctr:~# htop

(4) Check the /etc/hosts file

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat /etc/hosts

✅ Output

127.0.0.1 localhost

127.0.1.1 vagrant

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.2.1 k8s-ctr k8s-ctr

192.168.10.100 k8s-ctr

192.168.10.101 k8s-w1

192.168.10.102 k8s-w2
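
To check which address a node name maps to in a hosts-format file, a small lookup can be scripted. This is a plain first-match scan and only approximates what the system resolver does. lookup_host is a hypothetical helper, and the sample entries are copied from the output above into a scratch file.

```shell
#!/bin/sh
# Print the first address in a hosts-format file ($1) that maps to the given
# name ($2), ignoring comments. Exit status is 1 when the name is not listed.
lookup_host() {
    awk -v name="$2" '
        { sub(/#.*/, "") }                 # strip comments
        { for (i = 2; i <= NF; i++)
              if ($i == name) { print $1; found = 1; exit } }
        END { exit !found }' "$1"
}

# Sample entries copied from the output above.
hosts=$(mktemp)
cat > "$hosts" <<'EOF'
127.0.0.1 localhost
127.0.2.1 k8s-ctr k8s-ctr
192.168.10.100 k8s-ctr
192.168.10.101 k8s-w1
192.168.10.102 k8s-w2
EOF

lookup_host "$hosts" k8s-w1
lookup_host "$hosts" k8s-ctr
rm -f "$hosts"
```

With this sample, k8s-w1 maps to 192.168.10.101, while the first line that mentions k8s-ctr is the 127.0.2.1 entry rather than 192.168.10.100.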

(5) Check connectivity between nodes

(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 k8s-w1

✅ Output

PING k8s-w1 (192.168.10.101) 56(84) bytes of data.

64 bytes from k8s-w1 (192.168.10.101): icmp_seq=1 ttl=64 time=0.421 ms

--- k8s-w1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.421/0.421/0.421/0.000 ms

(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 k8s-w2

✅ Output

PING k8s-w2 (192.168.10.102) 56(84) bytes of data.

64 bytes from k8s-w2 (192.168.10.102): icmp_seq=1 ttl=64 time=0.433 ms

--- k8s-w2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.433/0.433/0.433/0.000 ms

- Both k8s-w1 and k8s-w2 respond normally with 0% packet loss

(6) Run remote commands on the worker nodes over SSH from the control plane

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w1 hostname

✅ Output

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w2 hostname

✅ Output

Warning: Permanently added 'k8s-w2' (ED25519) to the list of known hosts.

k8s-w2

- Both k8s-w1 and k8s-w2 return their hostnames as expected

(7) Check why SSH access is possible

- Check the sshd connections in the NATed VirtualBox environment

- The SSH session arrives from 10.0.2.2 (the VirtualBox NAT gateway) at 10.0.2.15

(⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnp |grep sshd

✅ Output

ESTAB 0 0 [::ffff:10.0.2.15]:22 [::ffff:10.0.2.2]:48922 users:(("sshd",pid=4947,fd=4),("sshd",pid=4902,fd=4))

(8) Check the network interfaces

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c addr

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:6b:69:c9 brd ff:ff:ff:ff:ff:ff

altname enp0s3

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 84849sec preferred_lft 84849sec

inet6 fd17:625c:f037:2:a00:27ff:fe6b:69c9/64 scope global dynamic mngtmpaddr noprefixroute

valid_lft 86357sec preferred_lft 14357sec

inet6 fe80::a00:27ff:fe6b:69c9/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:80:23:b9 brd ff:ff:ff:ff:ff:ff

altname enp0s8

inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe80:23b9/64 scope link

valid_lft forever preferred_lft forever

- eth0: 10.0.2.15/24 (NAT network)

- eth1: 192.168.10.100/24 (host-only network)

(9) Check the routing table

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

✅ Output

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

(10) Check the DNS configuration

(⎈|HomeLab:N/A) root@k8s-ctr:~# resolvectl

✅ Output

Global

Protocols: -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

Link 2 (eth0)

Current Scopes: DNS

Protocols: +DefaultRoute -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 10.0.2.3

DNS Servers: 10.0.2.3

DNS Domain: Davolink

Link 3 (eth1)

Current Scopes: none

Protocols: -DefaultRoute -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

7. Check Kubernetes Information on k8s-ctr

(1) Check cluster information

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl cluster-info

✅ Output

Kubernetes control plane is running at https://192.168.10.100:6443

CoreDNS is running at https://192.168.10.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

- Kubernetes control plane: https://192.168.10.100:6443

- CoreDNS: https://192.168.10.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

(2) Check the node status and INTERNAL-IP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

✅ Output

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr NotReady control-plane 30m v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w1 NotReady <none> 28m v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w2 NotReady <none> 26m v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

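⚠ Problem

- The INTERNAL-IP of both worker nodes is the NAT address (10.0.2.15), so the two workers report the same IP

- To meet the CNI's requirements, the INTERNAL-IP must be on the network actually used for Kubernetes API traffic (192.168.10.x)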
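
The duplicate address is easy to miss by eye on larger clusters. The sketch below scans node listing text for any INTERNAL-IP that more than one node reports. It assumes the default column order of `kubectl get node -owide`, where INTERNAL-IP is the sixth column. duplicate_internal_ips is a made-up helper name, and the sample is a trimmed copy of the output above.

```shell
#!/bin/sh
# Read `kubectl get node -owide` text on stdin and print every INTERNAL-IP
# (6th column) that is reported by more than one node, with the node names.
duplicate_internal_ips() {
    awk 'NR > 1 { nodes[$6] = nodes[$6] " " $1; count[$6]++ }
         END { for (ip in count) if (count[ip] > 1) print ip ":" nodes[ip] }'
}

# Trimmed copy of the output above (columns after INTERNAL-IP removed).
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION INTERNAL-IP' \
  'k8s-ctr NotReady control-plane 30m v1.33.2 192.168.10.100' \
  'k8s-w1 NotReady <none> 28m v1.33.2 10.0.2.15' \
  'k8s-w2 NotReady <none> 26m v1.33.2 10.0.2.15' |
  duplicate_internal_ips
```

For this sample the function reports 10.0.2.15 together with k8s-w1 and k8s-w2. On the control plane node the call would be `kubectl get node -owide | duplicate_internal_ips`.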

(3) Check pod status and IPs

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -A -owide

✅ Output

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-674b8bbfcf-7gx6f 0/1 Pending 0 34m <none> <none> <none> <none>

kube-system coredns-674b8bbfcf-mjnst 0/1 Pending 0 34m <none> <none> <none> <none>

kube-system etcd-k8s-ctr 1/1 Running 0 35m 10.0.2.15 k8s-ctr <none> <none>

kube-system kube-apiserver-k8s-ctr 1/1 Running 0 35m 10.0.2.15 k8s-ctr <none> <none>

kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 35m 10.0.2.15 k8s-ctr <none> <none>

kube-system kube-proxy-b6zgw 1/1 Running 0 32m 10.0.2.15 k8s-w1 <none> <none>

kube-system kube-proxy-grfn2 1/1 Running 0 34m 10.0.2.15 k8s-ctr <none> <none>

kube-system kube-proxy-p678s 1/1 Running 0 30m 10.0.2.15 k8s-w2 <none> <none>

kube-system kube-scheduler-k8s-ctr 1/1 Running 0 35m 10.0.2.15 k8s-ctr <none> <none>

- CoreDNS pods: Pending (no IP assigned, because no CNI is installed yet)

- etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy: all using 10.0.2.15

- These host-network addresses also need to move to the 192.168.10.x range

(4) Check the detailed status of the CoreDNS pods

(⎈|HomeLab:N/A) root@k8s-ctr:~# k describe pod -n kube-system -l k8s-app=kube-dns

✅ Output

Name: coredns-674b8bbfcf-7gx6f

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Service Account: coredns

Node: <none>

Labels: k8s-app=kube-dns

pod-template-hash=674b8bbfcf

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/coredns-674b8bbfcf

Containers:

coredns:

Image: registry.k8s.io/coredns/coredns:v1.12.0

Ports: 53/UDP, 53/TCP, 9153/TCP

Host Ports: 0/UDP, 0/TCP, 0/TCP

Args:

-conf

/etc/coredns/Corefile

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5

Readiness: http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/coredns from config-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-f9fss (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns

Optional: false

kube-api-access-f9fss:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly op=Exists

node-role.kubernetes.io/control-plane:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 37m default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.

Warning FailedScheduling 118s (x7 over 32m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

Name: coredns-674b8bbfcf-mjnst

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Service Account: coredns

Node: <none>

Labels: k8s-app=kube-dns

pod-template-hash=674b8bbfcf

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/coredns-674b8bbfcf

Containers:

coredns:

Image: registry.k8s.io/coredns/coredns:v1.12.0

Ports: 53/UDP, 53/TCP, 9153/TCP

Host Ports: 0/UDP, 0/TCP, 0/TCP

Args:

-conf

/etc/coredns/Corefile

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5

Readiness: http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/coredns from config-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-55887 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns

Optional: false

kube-api-access-55887:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly op=Exists

node-role.kubernetes.io/control-plane:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 37m default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.

Warning FailedScheduling 2m28s (x7 over 32m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

- Both CoreDNS pods are Pending

- Scheduler event: 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }

- In other words, every node is NotReady, so the pods cannot be scheduled
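
When the describe output is long, the blocking taint can be pulled out of the event text directly. The following is a small sketch that extracts the taint key from FailedScheduling messages. untolerated_taints is a hypothetical helper, and the sample line is copied from the events above.

```shell
#!/bin/sh
# Read `kubectl describe pod` text on stdin and print each distinct taint key
# that the scheduler reported as untolerated.
untolerated_taints() {
    sed -n 's/.*untolerated taint {\([^:}]*\).*/\1/p' | sort -u
}

# One event line copied from the output above.
event='Warning FailedScheduling 118s (x7 over 32m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.'

printf '%s\n' "$event" | untolerated_taints
```

On the cluster the input would come from `kubectl describe pod -n kube-system -l k8s-app=kube-dns | untolerated_taints`.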

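8. Set the INTERNAL-IP on k8s-ctr

(1) Why the change is needed

- During the initial kubeadm setup, the control plane's API server IP is registered as the INTERNAL-IP

- By default the eth0 (NAT) address (10.0.2.x) is picked up, which does not match what the CNI and the Pod network expect

- The INTERNAL-IP must be pinned to the host-only network (eth1) range, 192.168.10.x

(2) Check the current kubelet configuration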

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat /var/lib/kubelet/kubeadm-flags.env

✅ Output

KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

(3) Check the eth1 IP and store it in a variable

(⎈|HomeLab:N/A) root@k8s-ctr:~# NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

(⎈|HomeLab:N/A) root@k8s-ctr:~# echo $NODEIP

✅ Output

192.168.10.100
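
The command above depends on grep -P (PCRE lookbehind), which not every grep build supports. A possible alternative, sketched here with awk, reads the same `ip -4 addr show` text and prints the first address without its prefix length. first_ipv4 is a made-up helper name, and the sample text follows the shape of the eth1 entry shown earlier.

```shell
#!/bin/sh
# Read `ip -4 addr show <dev>` text on stdin and print the first IPv4 address
# without its prefix length.
first_ipv4() {
    awk '$1 == "inet" { sub(/\/.*/, "", $2); print $2; exit }'
}

# Sample text in the shape of the eth1 entry shown earlier.
sample='3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1
       valid_lft forever preferred_lft forever'

printf '%s\n' "$sample" | first_ipv4
```

On a node this would be used as `NODEIP=$(ip -4 addr show eth1 | first_ipv4)`.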

(4) Add node-ip to the kubelet configuration file

(⎈|HomeLab:N/A) root@k8s-ctr:~# sed -i "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${NODEIP} /" /var/lib/kubelet/kubeadm-flags.env

systemctl daemon-reexec && systemctl restart kubelet

(5) Verify after applying

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat /var/lib/kubelet/kubeadm-flags.env

✅ Output

KUBELET_KUBEADM_ARGS="--node-ip=192.168.10.100 --container-runtime-endpoint=unix:///run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

The --node-ip=192.168.10.100 option has been added
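
One thing to watch with the sed command in step (4): running it a second time would prepend another --node-ip flag. A guarded variant might look like the sketch below, which skips the edit when the flag is already present. add_node_ip is a hypothetical helper, and the demo works on a scratch copy of the file contents rather than /var/lib/kubelet/kubeadm-flags.env.

```shell
#!/bin/sh
# Insert --node-ip=<ip> at the front of KUBELET_KUBEADM_ARGS in file $1 unless
# the file already carries a --node-ip flag. Prints "added" or "unchanged".
add_node_ip() {
    file=$1
    ip=$2
    if grep -q -- '--node-ip=' "$file"; then
        echo "unchanged"
        return 0
    fi
    tmp=$(mktemp) || return 1
    # Same substitution as in step (4), written to a temp file first and
    # copied back so the original file keeps its owner and permissions.
    sed "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${ip} /" "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rm -f "$tmp"
    echo "added"
}

# Demo against a scratch copy of the file contents shown above.
env_file=$(mktemp)
echo 'KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"' > "$env_file"
add_node_ip "$env_file" 192.168.10.100
add_node_ip "$env_file" 192.168.10.100
cat "$env_file"
rm -f "$env_file"
```

The second call leaves the file untouched, so the flag appears only once. The kubelet still has to be restarted after a real change.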

9. Set the INTERNAL-IP on the Worker Nodes (k8s-w1/w2)

(1) Change k8s-w1

vagrant ssh k8s-w1

Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Mon Jul 14 11:28:56 PM KST 2025

System load: 0.0

Usage of /: 17.0% of 30.34GB

Memory usage: 22%

Swap usage: 0%

Processes: 147

Users logged in: 0

IPv4 address for eth0: 10.0.2.15

IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.

Last login: Mon Jul 14 22:52:44 2025 from 10.0.2.2

root@k8s-w1:~# NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

sed -i "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${NODEIP} /" /var/lib/kubelet/kubeadm-flags.env

systemctl daemon-reexec && systemctl restart kubelet

root@k8s-w1:~# cat /var/lib/kubelet/kubeadm-flags.env

KUBELET_KUBEADM_ARGS="--node-ip=192.168.10.101 --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

(2) Change k8s-w2

vagrant ssh k8s-w2

Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Mon Jul 14 11:29:17 PM KST 2025

System load: 0.0

Usage of /: 17.0% of 30.34GB

Memory usage: 21%

Swap usage: 0%

Processes: 147

Users logged in: 0

IPv4 address for eth0: 10.0.2.15

IPv6 address for eth0: fd17:625c:f037:2:a00:27ff:fe6b:69c9

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Use of this system is acceptance of the OS vendor EULA and License Agreements.

Last login: Mon Jul 14 22:52:45 2025 from 10.0.2.2

root@k8s-w2:~# NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

sed -i "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${NODEIP} /" /var/lib/kubelet/kubeadm-flags.env

systemctl daemon-reexec && systemctl restart kubelet

root@k8s-w2:~# cat /var/lib/kubelet/kubeadm-flags.env

KUBELET_KUBEADM_ARGS="--node-ip=192.168.10.102 --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

(3) Verify after the change

Check that the node IPs were applied correctly

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

✅ Output

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr NotReady control-plane 50m v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w1 NotReady <none> 47m v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

k8s-w2 NotReady <none> 46m v1.33.2 192.168.10.102 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

(4) Check that the static Pod IPs changed

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -A -owide

✅ Output

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-674b8bbfcf-7gx6f 0/1 Pending 0 52m <none> <none> <none> <none>

kube-system coredns-674b8bbfcf-mjnst 0/1 Pending 0 52m <none> <none> <none> <none>

kube-system etcd-k8s-ctr 1/1 Running 0 52m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-apiserver-k8s-ctr 1/1 Running 0 52m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 52m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-proxy-b6zgw 1/1 Running 0 49m 192.168.10.101 k8s-w1 <none> <none>

kube-system kube-proxy-grfn2 1/1 Running 0 52m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-proxy-p678s 1/1 Running 0 48m 192.168.10.102 k8s-w2 <none> <none>

kube-system kube-scheduler-k8s-ctr 1/1 Running 0 52m 192.168.10.100 k8s-ctr <none> <none>

🌐 Flannel CNI Installation and Verification

1. Check the Cluster State Before Installation

(1) Check the Pod CIDR and Service CIDR specified at kubeadm init

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

✅ Output

"--service-cluster-ip-range=10.96.0.0/16",

"--cluster-cidr=10.244.0.0/16",

(2) Check the CoreDNS Pod status

Before a CNI is installed, the Pod network is not configured, so the Pods do not receive an IP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

✅ Output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-674b8bbfcf-7gx6f 0/1 Pending 0 59m <none> <none> <none> <none>

coredns-674b8bbfcf-mjnst 0/1 Pending 0 59m <none> <none> <none> <none>
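
Before installing the CNI, it can also be worth confirming that the Pod and Service CIDRs from step (1) do not collide with each other or with the node networks seen earlier (192.168.10.0/24 and 10.0.2.0/24). The following is a minimal sketch of such an overlap check in plain shell arithmetic. The helper names ip_to_int, cidr_range, and cidrs_overlap are made up for this example.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print "first last" (as integers) for a CIDR block.
cidr_range() (
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - $bits) ))
    first=$(( base / size * size ))
    echo "$first $(( first + size - 1 ))"
)

# Return 0 when the two CIDR blocks share at least one address.
cidrs_overlap() (
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Service CIDR vs Pod CIDR, then Pod CIDR vs each node network.
for pair in "10.96.0.0/16 10.244.0.0/16" \
            "10.244.0.0/16 192.168.10.0/24" \
            "10.244.0.0/16 10.0.2.0/24"; do
    set -- $pair
    if cidrs_overlap "$1" "$2"; then
        echo "$1 and $2 overlap"
    else
        echo "$1 and $2 do not overlap"
    fi
done
```

None of the three pairs overlap in this lab. A per-node subnet such as 10.244.1.0/24, by contrast, would be reported as overlapping with 10.244.0.0/16, since it sits inside the Pod CIDR.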

(3) Check the basic network state

Check the eth0 and eth1 interfaces

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c link

ip -c route

brctl show

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:6b:69:c9 brd ff:ff:ff:ff:ff:ff

altname enp0s3

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:80:23:b9 brd ff:ff:ff:ff:ff:ff

altname enp0s8

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

- eth0 is the NAT network (10.0.2.x); eth1 is the host-only network (192.168.10.x)

(4) Check the iptables state

(⎈|HomeLab:N/A) root@k8s-ctr:~# iptables-save

✅ Output

# Generated by iptables-save v1.8.10 (nf_tables) on Mon Jul 14 23:44:12 2025

*mangle

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

:KUBE-IPTABLES-HINT - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-PROXY-CANARY - [0:0]

COMMIT

# Completed on Mon Jul 14 23:44:12 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Mon Jul 14 23:44:12 2025

*filter

:INPUT ACCEPT [651613:132029811]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [644732:121246327]

:KUBE-EXTERNAL-SERVICES - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-PROXY-FIREWALL - [0:0]

:KUBE-SERVICES - [0:0]

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp has no endpoints" -m tcp --dport 53 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics has no endpoints" -m tcp --dport 9153 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-SERVICES -d 10.96.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns has no endpoints" -m udp --dport 53 -j REJECT --reject-with icmp-port-unreachable

COMMIT

# Completed on Mon Jul 14 23:44:12 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Mon Jul 14 23:44:12 2025

*nat

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-SEP-ETI7FUQQE3BS2IXE - [0:0]

:KUBE-SERVICES - [0:0]

:KUBE-SVC-NPX46M4PTMTKRN6Y - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-ETI7FUQQE3BS2IXE -s 192.168.10.100/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-ETI7FUQQE3BS2IXE -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.10.100:6443

-A KUBE-SERVICES -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.244.0.0/16 -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.10.100:6443" -j KUBE-SEP-ETI7FUQQE3BS2IXE

COMMIT

# Completed on Mon Jul 14 23:44:12 2025

Because there is no Pod network yet, the CoreDNS Service (kube-dns) has no endpoints, so kube-proxy installs REJECT rules for it:


-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp has no endpoints" -m tcp --dport 53 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics has no endpoints" -m tcp --dport 9153 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-SERVICES -d 10.96.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns has no endpoints" -m udp --dport 53 -j REJECT --reject-with icmp-port-unreachable
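The REJECT rules above can be summarized mechanically. As a minimal sketch (the helper name `no_endpoint_services` is hypothetical and not part of kube-proxy or iptables), the following filters iptables-save style text for the `has no endpoints` comments attached to such rules. The sample lines are copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list Service ports that are currently rejected
# because they have no endpoints. Reads iptables-save style rules on stdin.
no_endpoint_services() {
  grep -o '"[^"]* has no endpoints"' \
    | sed -e 's/^"//' -e 's/ has no endpoints"$//' \
    | LC_ALL=C sort -u
}

# Sample rules taken from the filter table shown above
sample_filter='-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp has no endpoints" -m tcp --dport 53 -j REJECT --reject-with icmp-port-unreachable
-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics has no endpoints" -m tcp --dport 9153 -j REJECT --reject-with icmp-port-unreachable
-A KUBE-SERVICES -d 10.96.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns has no endpoints" -m udp --dport 53 -j REJECT --reject-with icmp-port-unreachable'

printf '%s\n' "$sample_filter" | no_endpoint_services
```

On the node, the same function could be fed with `iptables-save | no_endpoint_services`. Once a CNI is installed and the CoreDNS Pods become ready, the list should be empty.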


(⎈|HomeLab:N/A) root@k8s-ctr:~# iptables -t nat -S

✅ Output

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-ETI7FUQQE3BS2IXE

-N KUBE-SERVICES

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-ETI7FUQQE3BS2IXE -s 192.168.10.100/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-ETI7FUQQE3BS2IXE -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.10.100:6443

-A KUBE-SERVICES -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.244.0.0/16 -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.10.100:6443" -j KUBE-SEP-ETI7FUQQE3BS2IXE
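Reading the nat rules above, traffic to the ClusterIP 10.96.0.1:443 jumps from KUBE-SERVICES to KUBE-SVC-NPX46M4PTMTKRN6Y, then to KUBE-SEP-ETI7FUQQE3BS2IXE, where it is DNATed to 192.168.10.100:6443. A small sketch (the helper name `dnat_targets` is hypothetical) that pulls those final DNAT targets out of `iptables -t nat -S` style text:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<chain> <backend ip:port>" for every DNAT rule.
# Reads `iptables -t nat -S` style rules on stdin.
dnat_targets() {
  awk '/-j DNAT/ {
    for (i = 1; i <= NF; i++)
      if ($i == "--to-destination") print $2, $(i + 1)
  }'
}

# Sample rules copied from the nat table output above
sample_nat='-A KUBE-SERVICES -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y
-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.10.100:6443" -j KUBE-SEP-ETI7FUQQE3BS2IXE
-A KUBE-SEP-ETI7FUQQE3BS2IXE -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.10.100:6443'

printf '%s\n' "$sample_nat" | dnat_targets
```

With only the `default/kubernetes` Service present, a single KUBE-SEP chain is expected. More chains should appear as Services gain endpoints.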

(5) Check the CNI configuration directory → empty (no CNI config yet)

(⎈|HomeLab:N/A) root@k8s-ctr:~# tree /etc/cni/net.d/

✅ Output

/etc/cni/net.d/

0 directories, 0 files

2. Installing the Flannel CNI

(1) Prepare the Flannel namespace and Helm repository

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl create ns kube-flannel

kubectl label --overwrite ns kube-flannel pod-security.kubernetes.io/enforce=privileged

helm repo add flannel https://flannel-io.github.io/flannel/

helm repo list

helm search repo flannel

helm show values flannel/flannel

✅ Output

namespace/kube-flannel created

namespace/kube-flannel labeled

"flannel" has been added to your repositories

NAME URL

flannel https://flannel-io.github.io/flannel/

NAME CHART VERSION APP VERSION DESCRIPTION

flannel/flannel v0.27.1 v0.27.1 Install Flannel Network Plugin.

---

global:

imagePullSecrets:

# - name: "a-secret-name"

# The IPv4 cidr pool to create on startup if none exists. Pod IPs will be

# chosen from this range.

podCidr: "10.244.0.0/16"

podCidrv6: ""

flannel:

# kube-flannel image

image:

repository: ghcr.io/flannel-io/flannel

tag: v0.27.1

image_cni:

repository: ghcr.io/flannel-io/flannel-cni-plugin

tag: v1.7.1-flannel1

# cniBinDir is the directory to which the flannel CNI binary is installed.

cniBinDir: "/opt/cni/bin"

# cniConfDir is the directory where the CNI configuration is located.

cniConfDir: "/etc/cni/net.d"

# skipCNIConfigInstallation skips the installation of the flannel CNI config. This is useful when the CNI config is

# provided externally.

skipCNIConfigInstallation: false

# flannel command arguments

enableNFTables: false

args:

- "--ip-masq"

- "--kube-subnet-mgr"

# Backend for kube-flannel. Backend should not be changed

# at runtime. (vxlan, host-gw, wireguard, udp)

# Documentation at https://github.com/flannel-io/flannel/blob/master/Documentation/backends.md

backend: "vxlan"

# Port used by the backend 0 means default value (VXLAN: 8472, Wireguard: 51821, UDP: 8285)

#backendPort: 0

# MTU to use for outgoing packets (VXLAN and Wiregurad) if not defined the MTU of the external interface is used.

# mtu: 1500

#

# VXLAN Configs:

#

# VXLAN Identifier to be used. On Linux default is 1.

#vni: 1

# Enable VXLAN Group Based Policy (Default false)

# GBP: false

# Enable direct routes (default is false)

# directRouting: false

# MAC prefix to be used on Windows. (Defaults is 0E-2A)

# macPrefix: "0E-2A"

#

# Wireguard Configs:

#

# UDP listen port used with IPv6

# backendPortv6: 51821

# Pre shared key to use

# psk: 0

# IP version to use on Wireguard

# tunnelMode: "separate"

# Persistent keep interval to use

# keepaliveInterval: 0

#

cniConf: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

#

# General daemonset configs

#

resources:

requests:

cpu: 100m

memory: 50Mi

tolerations:

- effect: NoExecute

operator: Exists

- effect: NoSchedule

operator: Exists

nodeSelector: {}

netpol:

enabled: false

args:

- "--hostname-override=$(MY_NODE_NAME)"

- "--v=2"

image:

repository: registry.k8s.io/networking/kube-network-policies

tag: v0.7.0

(2) Specify the network interface

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat << EOF > flannel-values.yaml

podCidr: "10.244.0.0/16"

flannel:

  args:

  - "--ip-masq"

  - "--kube-subnet-mgr"

  - "--iface=eth1"

EOF
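The notes do not say why `--iface=eth1` is added. A likely reason, stated here as an assumption, is that in Vagrant/VirtualBox labs eth0 is usually the NAT interface, while eth1 carries the 192.168.10.0/24 node addresses, so flannel should build its VXLAN tunnel on eth1. A minimal sketch for confirming which interface holds the node IP follows. The helper name `iface_for_ip` is hypothetical, and the sample `ip -o -4 addr show` lines are illustrative: the eth0 address is an assumed VirtualBox NAT default, not a value captured from this lab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the interface that owns a given IPv4 address.
# $1 = IPv4 address; stdin = output of `ip -o -4 addr show`.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Illustrative sample (assumed values, see the note above)
sample_addr='2: eth0    inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0\       valid_lft 80000sec preferred_lft 80000sec
3: eth1    inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1\       valid_lft forever preferred_lft forever'

printf '%s\n' "$sample_addr" | iface_for_ip 192.168.10.100
```

On a node, this could be run as `ip -o -4 addr show | iface_for_ip 192.168.10.100` before writing the value into `flannel-values.yaml`.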

(3) Install Flannel with Helm

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm install flannel --namespace kube-flannel flannel/flannel -f flannel-values.yaml

✅ Output

NAME: flannel

LAST DEPLOYED: Mon Jul 14 23:49:17 2025

NAMESPACE: kube-flannel

STATUS: deployed

REVISION: 1

TEST SUITE: None

(4) Verify the Pods are running

(⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe pod -n kube-flannel -l app=flannel

✅ Output

Name: kube-flannel-ds-9qdxf

Namespace: kube-flannel

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: flannel

Node: k8s-ctr/192.168.10.100

Start Time: Mon, 14 Jul 2025 23:49:17 +0900

Labels: app=flannel

controller-revision-hash=66c5c78475

pod-template-generation=1

tier=node

Annotations: <none>

Status: Running

IP: 192.168.10.100

IPs:

IP: 192.168.10.100

Controlled By: DaemonSet/kube-flannel-ds

Init Containers:

install-cni-plugin:

Container ID: containerd://7cbb5ee284a7eb7bb13995fd1c656f2d0776973ae1e7cdd3f616fd528270fdcd

Image: ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1

Image ID: ghcr.io/flannel-io/flannel-cni-plugin@sha256:cb3176a2c9eae5fa0acd7f45397e706eacb4577dac33cad89f93b775ff5611df

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/flannel

/opt/cni/bin/flannel

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:22 +0900

Finished: Mon, 14 Jul 2025 23:49:22 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/opt/cni/bin from cni-plugin (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-46mlr (ro)

install-cni:

Container ID: containerd://cfc3ee53844f9139d8ca75f024746fec9163e629f431b97966d10923612a60eb

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/etc/kube-flannel/cni-conf.json

/etc/cni/net.d/10-flannel.conflist

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:30 +0900

Finished: Mon, 14 Jul 2025 23:49:30 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/etc/cni/net.d from cni (rw)

/etc/kube-flannel/ from flannel-cfg (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-46mlr (ro)

Containers:

kube-flannel:

Container ID: containerd://1fda477f80ac40a4e82bd18368b78b7e391bce1436d69a746ecef65773525920

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

--ip-masq

--kube-subnet-mgr

--iface=eth1

State: Running

Started: Mon, 14 Jul 2025 23:49:31 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_NAME: kube-flannel-ds-9qdxf (v1:metadata.name)

POD_NAMESPACE: kube-flannel (v1:metadata.namespace)

EVENT_QUEUE_DEPTH: 5000

CONT_WHEN_CACHE_NOT_READY: false

Mounts:

/etc/kube-flannel/ from flannel-cfg (rw)

/run/flannel from run (rw)

/run/xtables.lock from xtables-lock (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-46mlr (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

run:

Type: HostPath (bare host directory volume)

Path: /run/flannel

HostPathType:

cni-plugin:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

flannel-cfg:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-flannel-cfg

Optional: false

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

kube-api-access-46mlr:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

:NoSchedule op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 44s default-scheduler Successfully assigned kube-flannel/kube-flannel-ds-9qdxf to k8s-ctr

Normal Pulling 44s kubelet Pulling image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1"

Normal Pulled 39s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1" in 4.137s (4.137s including waiting). Image size: 4878976 bytes.

Normal Created 39s kubelet Created container: install-cni-plugin

Normal Started 39s kubelet Started container install-cni-plugin

Normal Pulling 38s kubelet Pulling image "ghcr.io/flannel-io/flannel:v0.27.1"

Normal Pulled 31s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel:v0.27.1" in 7.208s (7.208s including waiting). Image size: 32389164 bytes.

Normal Created 31s kubelet Created container: install-cni

Normal Started 31s kubelet Started container install-cni

Normal Pulled 30s kubelet Container image "ghcr.io/flannel-io/flannel:v0.27.1" already present on machine

Normal Created 30s kubelet Created container: kube-flannel

Normal Started 30s kubelet Started container kube-flannel

Name: kube-flannel-ds-c4rxb

Namespace: kube-flannel

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: flannel

Node: k8s-w1/192.168.10.101

Start Time: Mon, 14 Jul 2025 23:49:17 +0900

Labels: app=flannel

controller-revision-hash=66c5c78475

pod-template-generation=1

tier=node

Annotations: <none>

Status: Running

IP: 192.168.10.101

IPs:

IP: 192.168.10.101

Controlled By: DaemonSet/kube-flannel-ds

Init Containers:

install-cni-plugin:

Container ID: containerd://cf6e39697e580d20418e0d1c4efa454479d173ed666612bd752e3f596a44a9bc

Image: ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1

Image ID: ghcr.io/flannel-io/flannel-cni-plugin@sha256:cb3176a2c9eae5fa0acd7f45397e706eacb4577dac33cad89f93b775ff5611df

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/flannel

/opt/cni/bin/flannel

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:22 +0900

Finished: Mon, 14 Jul 2025 23:49:22 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/opt/cni/bin from cni-plugin (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-nppgp (ro)

install-cni:

Container ID: containerd://3a06f8216980b01adfbcd870361c1ae41371a7be206e5456ad98c0c01e8f90f3

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/etc/kube-flannel/cni-conf.json

/etc/cni/net.d/10-flannel.conflist

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:30 +0900

Finished: Mon, 14 Jul 2025 23:49:30 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/etc/cni/net.d from cni (rw)

/etc/kube-flannel/ from flannel-cfg (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-nppgp (ro)

Containers:

kube-flannel:

Container ID: containerd://f49e28157b71d776ec0526e2b87423fda60439a3e5f9dab351a6e465de53ebdb

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

--ip-masq

--kube-subnet-mgr

--iface=eth1

State: Running

Started: Mon, 14 Jul 2025 23:49:31 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_NAME: kube-flannel-ds-c4rxb (v1:metadata.name)

POD_NAMESPACE: kube-flannel (v1:metadata.namespace)

EVENT_QUEUE_DEPTH: 5000

CONT_WHEN_CACHE_NOT_READY: false

Mounts:

/etc/kube-flannel/ from flannel-cfg (rw)

/run/flannel from run (rw)

/run/xtables.lock from xtables-lock (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-nppgp (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

run:

Type: HostPath (bare host directory volume)

Path: /run/flannel

HostPathType:

cni-plugin:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

flannel-cfg:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-flannel-cfg

Optional: false

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

kube-api-access-nppgp:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

:NoSchedule op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 44s default-scheduler Successfully assigned kube-flannel/kube-flannel-ds-c4rxb to k8s-w1

Normal Pulling 43s kubelet Pulling image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1"

Normal Pulled 39s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1" in 4.101s (4.101s including waiting). Image size: 4878976 bytes.

Normal Created 39s kubelet Created container: install-cni-plugin

Normal Started 39s kubelet Started container install-cni-plugin

Normal Pulling 38s kubelet Pulling image "ghcr.io/flannel-io/flannel:v0.27.1"

Normal Pulled 31s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel:v0.27.1" in 6.891s (6.891s including waiting). Image size: 32389164 bytes.

Normal Created 31s kubelet Created container: install-cni

Normal Started 31s kubelet Started container install-cni

Normal Pulled 30s kubelet Container image "ghcr.io/flannel-io/flannel:v0.27.1" already present on machine

Normal Created 30s kubelet Created container: kube-flannel

Normal Started 30s kubelet Started container kube-flannel

Name: kube-flannel-ds-q4chw

Namespace: kube-flannel

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: flannel

Node: k8s-w2/192.168.10.102

Start Time: Mon, 14 Jul 2025 23:49:17 +0900

Labels: app=flannel

controller-revision-hash=66c5c78475

pod-template-generation=1

tier=node

Annotations: <none>

Status: Running

IP: 192.168.10.102

IPs:

IP: 192.168.10.102

Controlled By: DaemonSet/kube-flannel-ds

Init Containers:

install-cni-plugin:

Container ID: containerd://8b174a979471fdd203c4572b14db14c8931fdde14b2935e707790c8b913882ce

Image: ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1

Image ID: ghcr.io/flannel-io/flannel-cni-plugin@sha256:cb3176a2c9eae5fa0acd7f45397e706eacb4577dac33cad89f93b775ff5611df

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/flannel

/opt/cni/bin/flannel

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:22 +0900

Finished: Mon, 14 Jul 2025 23:49:22 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/opt/cni/bin from cni-plugin (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-w8bh8 (ro)

install-cni:

Container ID: containerd://d40ae3545353faf7911afb92146557a2996d7bfecd1e47ff9edf8e6d0a23c918

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/etc/kube-flannel/cni-conf.json

/etc/cni/net.d/10-flannel.conflist

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 14 Jul 2025 23:49:29 +0900

Finished: Mon, 14 Jul 2025 23:49:29 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/etc/cni/net.d from cni (rw)

/etc/kube-flannel/ from flannel-cfg (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-w8bh8 (ro)

Containers:

kube-flannel:

Container ID: containerd://5305910686764c1ea1a0231fc39a38dd718eeafbb20746d44e52c37e4b17ba72

Image: ghcr.io/flannel-io/flannel:v0.27.1

Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

--ip-masq

--kube-subnet-mgr

--iface=eth1

State: Running

Started: Mon, 14 Jul 2025 23:49:30 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_NAME: kube-flannel-ds-q4chw (v1:metadata.name)

POD_NAMESPACE: kube-flannel (v1:metadata.namespace)

EVENT_QUEUE_DEPTH: 5000

CONT_WHEN_CACHE_NOT_READY: false

Mounts:

/etc/kube-flannel/ from flannel-cfg (rw)

/run/flannel from run (rw)

/run/xtables.lock from xtables-lock (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-w8bh8 (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

run:

Type: HostPath (bare host directory volume)

Path: /run/flannel

HostPathType:

cni-plugin:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

flannel-cfg:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-flannel-cfg

Optional: false

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

kube-api-access-w8bh8:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

Optional: false

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

:NoSchedule op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 44s default-scheduler Successfully assigned kube-flannel/kube-flannel-ds-q4chw to k8s-w2

Normal Pulling 43s kubelet Pulling image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1"

Normal Pulled 39s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1" in 4.081s (4.081s including waiting). Image size: 4878976 bytes.

Normal Created 39s kubelet Created container: install-cni-plugin

Normal Started 39s kubelet Started container install-cni-plugin

Normal Pulling 39s kubelet Pulling image "ghcr.io/flannel-io/flannel:v0.27.1"

Normal Pulled 32s kubelet Successfully pulled image "ghcr.io/flannel-io/flannel:v0.27.1" in 6.97s (6.97s including waiting). Image size: 32389164 bytes.

Normal Created 32s kubelet Created container: install-cni

Normal Started 32s kubelet Started container install-cni

Normal Pulled 31s kubelet Container image "ghcr.io/flannel-io/flannel:v0.27.1" already present on machine

Normal Created 31s kubelet Created container: kube-flannel

Normal Started 31s kubelet Started container kube-flannel

install-cni-plugin: installs the flannel binary (/opt/cni/bin/flannel)

install-cni: applies the CNI configuration (/etc/cni/net.d/10-flannel.conflist)
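The result of the two init containers can be checked with a short script. This is a sketch under assumptions: the function name `check_flannel_cni` and its optional root-prefix argument are hypothetical, and the demo runs against a throwaway directory tree rather than a real node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the two files the init containers copy into place.
# $1 = optional filesystem root prefix (empty string means the real "/").
check_flannel_cni() {
  local root="${1:-}"
  local rc=0
  if [ ! -x "$root/opt/cni/bin/flannel" ]; then
    echo "missing or not executable: $root/opt/cni/bin/flannel"
    rc=1
  fi
  if [ ! -s "$root/etc/cni/net.d/10-flannel.conflist" ]; then
    echo "missing or empty: $root/etc/cni/net.d/10-flannel.conflist"
    rc=1
  fi
  return "$rc"
}

# Demo against a temporary tree that mimics the node layout
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/opt/cni/bin" "$demo_root/etc/cni/net.d"
printf '#!/bin/sh\n' > "$demo_root/opt/cni/bin/flannel"
chmod +x "$demo_root/opt/cni/bin/flannel"
printf '{"name":"cbr0"}\n' > "$demo_root/etc/cni/net.d/10-flannel.conflist"

check_flannel_cni "$demo_root" && echo "flannel CNI files present"
```

On a node, calling `check_flannel_cni ""` checks the real paths named above.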

(5) Verify the CNI binary installation

(⎈|HomeLab:N/A) root@k8s-ctr:~# tree /opt/cni/bin/

✅ Output

/opt/cni/bin/

├── bandwidth

├── bridge

├── dhcp

├── dummy

├── firewall

├── flannel

├── host-device

├── host-local

├── ipvlan

├── LICENSE

├── loopback

├── macvlan

├── portmap

├── ptp

├── README.md

├── sbr

├── static

├── tap

├── tuning

├── vlan

└── vrf

1 directory, 21 files

(6) Check the CNI configuration file

(⎈|HomeLab:N/A) root@k8s-ctr:~# tree /etc/cni/net.d/

/etc/cni/net.d/

└── 10-flannel.conflist

1 directory, 1 file

Inspect 10-flannel.conflist

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat /etc/cni/net.d/10-flannel.conflist | jq

✅ Output

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}
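The conflist above is a plugin chain: the `flannel` plugin runs first (by default it delegates to the bridge plugin, here with hairpin mode and default-gateway settings), and `portmap` runs afterwards to handle port mappings. As a minimal sketch, the chain order can be listed without extra tooling. The helper name `cni_plugin_types` is hypothetical, and the grep-based parsing is a simplification that assumes one `"type"` key per plugin entry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the plugin types of a CNI conflist in chain order.
# Reads the conflist JSON on stdin.
cni_plugin_types() {
  grep -o '"type": *"[^"]*"' | sed -e 's/^"type": *"//' -e 's/"$//'
}

# Sample copied from 10-flannel.conflist shown above
sample_conflist='{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}'

printf '%s\n' "$sample_conflist" | cni_plugin_types
```

Since the notes already use jq, `jq -r '.plugins[].type' /etc/cni/net.d/10-flannel.conflist` is a sturdier alternative on the node.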

(7) Check the ConfigMap settings

Pod network CIDR: 10.244.0.0/16 / Backend: vxlan

(⎈|HomeLab:N/A) root@k8s-ctr:~# kc describe cm -n kube-flannel kube-flannel-cfg

✅ Output

Name: kube-flannel-cfg

Namespace: kube-flannel

Labels: app=flannel

app.kubernetes.io/managed-by=Helm

tier=node

Annotations: meta.helm.sh/release-name: flannel

meta.helm.sh/release-namespace: kube-flannel

Data

====

cni-conf.json:

----

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json:

----

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

BinaryData

====

Events: <none>
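flannel carves the `Network` range from net-conf.json into one subnet per node. A rough sketch of the arithmetic follows. The function name `node_subnet_count` is hypothetical, and the per-node prefix length of 24 is an assumption that matches the /24 routes observed in this lab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: how many per-node subnets fit into the cluster Pod CIDR.
# $1 = cluster prefix length, $2 = per-node prefix length (assumed 24).
node_subnet_count() {
  local cluster_len="$1"
  local node_len="${2:-24}"
  echo $(( 1 << (node_len - cluster_len) ))
}

# 10.244.0.0/16 split into /24 node subnets
node_subnet_count 16
```

For this lab, that gives 256 possible node subnets, of which three (10.244.0.0/24, 10.244.1.0/24, 10.244.2.0/24) are in use.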

(8) Check changes to the network interfaces

After installation, the flannel.1, cni0, and veth* interfaces have been added.

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c link

✅ Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:6b:69:c9 brd ff:ff:ff:ff:ff:ff

altname enp0s3

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:80:23:b9 brd ff:ff:ff:ff:ff:ff

altname enp0s8

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/ether e6:0f:9b:40:c3:ec brd ff:ff:ff:ff:ff:ff

5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether f6:af:58:af:44:e3 brd ff:ff:ff:ff:ff:ff

6: vethe4603105@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

link/ether fa:28:80:b2:a3:a2 brd ff:ff:ff:ff:ff:ff link-netns cni-05426de7-dd90-2656-df69-64505867d5df

7: veth470cf46f@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

link/ether c6:8d:d9:1c:52:0e brd ff:ff:ff:ff:ff:ff link-netns cni-1d343493-f993-e0d5-e30c-163d1baf2a6f
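In the output above, flannel.1, cni0, and the veth interfaces show mtu 1450, while eth0 and eth1 show 1500. The 50-byte gap is consistent with VXLAN-over-IPv4 encapsulation overhead. A minimal sketch of that arithmetic (the function name `vxlan_mtu` is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: MTU left for Pod traffic after VXLAN encapsulation.
# $1 = MTU of the underlay interface (eth1 in this lab).
vxlan_mtu() {
  local underlay_mtu="$1"
  local outer_ipv4=20   # outer IPv4 header
  local outer_udp=8     # outer UDP header
  local vxlan_hdr=8     # VXLAN header
  local inner_eth=14    # inner Ethernet header carried inside the tunnel
  echo $(( underlay_mtu - outer_ipv4 - outer_udp - vxlan_hdr - inner_eth ))
}

vxlan_mtu 1500
```

With a 1500-byte underlay, this yields 1450, which matches the value reported by `ip -c link`.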

(9) Check changes to the routing table

Routes observed on the control plane node

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route | grep 10.244.

✅ Output

10.244.0.0/24 dev cni0 proto kernel scope link src 10.244.0.1

10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

10.244.1.0/24 → sent to flannel.1 on worker node 1

10.244.2.0/24 → sent to flannel.1 on worker node 2
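To make the route selection concrete, here is a small sketch that picks the route a given Pod IP would match. The helper name `route_for_pod_ip` is hypothetical, it assumes every node subnet is a /24 (as in the routes above), and the Pod IPs in the example calls are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the route line whose /24 prefix covers a Pod IP.
# $1 = Pod IPv4 address; stdin = `ip route` output.
route_for_pod_ip() {
  local prefix="${1%.*}.0/24"   # e.g. 10.244.1.5 -> 10.244.1.0/24
  awk -v p="$prefix" '$1 == p { print }'
}

# Routes copied from the control plane output above
sample_routes='10.244.0.0/24 dev cni0 proto kernel scope link src 10.244.0.1
10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink
10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink'

printf '%s\n' "$sample_routes" | route_for_pod_ip 10.244.1.5
printf '%s\n' "$sample_routes" | route_for_pod_ip 10.244.0.7
```

A Pod IP in 10.244.1.0/24 resolves to the flannel.1 route (VXLAN to the other node), while one in 10.244.0.0/24 stays on the local cni0 bridge.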

(10) Ping test

(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 10.244.1.0

✅ Output

PING 10.244.1.0 (10.244.1.0) 56(84) bytes of data.

64 bytes from 10.244.1.0: icmp_seq=1 ttl=64 time=0.391 ms

--- 10.244.1.0 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.391/0.391/0.391/0.000 ms


(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 10.244.2.0

✅ Output