🔧 Deploying the kind mgmt cluster + ingress-nginx + Argo CD

1. Create the kind mgmt cluster

| kind create cluster --name mgmt --image kindest/node:v1.32.8 --config - <<EOF

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  labels:
    ingress-ready: true
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
  - containerPort: 30000
    hostPort: 30000

EOF

Creating cluster "mgmt" ...

✓ Ensuring node image (kindest/node:v1.32.8) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-mgmt"

You can now use your cluster with:

kubectl cluster-info --context kind-mgmt

Have a nice day! 👋

|

2. Deploy the NGINX Ingress Controller

| kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/kind/deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

|

3. Enable the ingress SSL passthrough option

| kubectl get deployment ingress-nginx-controller -n ingress-nginx -o yaml \
  | sed '/- --publish-status-address=localhost/a\
        - --enable-ssl-passthrough' | kubectl apply -f -

deployment.apps/ingress-nginx-controller configured

|
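The sed command in this step appends one argument line after `--publish-status-address=localhost`, and the new line has to carry the same indentation as its neighbours for the YAML to stay valid. As a rough sketch of that transformation, the helper below does the same append with awk and copies the indentation from the matched line. The function name `add_passthrough_flag` and the sample argument list are assumptions made for illustration, not part of the original setup.

```shell
# Append "- --enable-ssl-passthrough" right after the publish-status-address
# argument, reusing that line's indentation so the YAML list stays aligned.
add_passthrough_flag() {
  awk '
    { print }
    /- --publish-status-address=localhost/ {
      indent = $0
      sub(/-.*/, "", indent)            # strip from the first "-" onward
      print indent "- --enable-ssl-passthrough"
    }
  '
}

# Hypothetical excerpt of the controller args, for illustration only.
sample_args='        - --election-id=ingress-nginx-leader
        - --publish-status-address=localhost
        - --watch-ingress-without-class=true'

printf '%s\n' "$sample_args" | add_passthrough_flag
```

With a live cluster, the function could take the place of the sed stage between `kubectl get ... -o yaml` and `kubectl apply -f -`.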

4. Generate a self-signed certificate for Argo CD

| openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -keyout argocd.example.com.key \
    -out argocd.example.com.crt \
    -subj "/CN=argocd.example.com/O=argocd"

|

5. Create the Argo CD namespace and TLS Secret

| kubectl create ns argocd

namespace/argocd created

|

| kubectl -n argocd create secret tls argocd-server-tls \
    --cert=argocd.example.com.crt \
    --key=argocd.example.com.key

secret/argocd-server-tls created

|

6. Install Argo CD with Helm

| cat <<EOF > argocd-values.yaml

global:
  domain: argocd.example.com

server:
  ingress:
    enabled: true
    ingressClassName: nginx
    annotations:
      nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
      nginx.ingress.kubernetes.io/ssl-passthrough: "true"
    tls: true

EOF

|

| helm repo add argo https://argoproj.github.io/argo-helm

helm install argocd argo/argo-cd --version 9.0.5 -f argocd-values.yaml --namespace argocd

NAME: argocd

LAST DEPLOYED: Mon Nov 17 20:00:25 2025

NAMESPACE: argocd

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

In order to access the server UI you have the following options:

1. kubectl port-forward service/argocd-server -n argocd 8080:443

and then open the browser on http://localhost:8080 and accept the certificate

2. enable ingress in the values file `server.ingress.enabled` and either

- Add the annotation for ssl passthrough: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-1-ssl-passthrough

- Set the `configs.params."server.insecure"` in the values file and terminate SSL at your ingress: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-2-multiple-ingress-objects-and-hosts

After reaching the UI the first time you can login with username: admin and the random password generated during the installation. You can find the password by running:

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

(You should delete the initial secret afterwards as suggested by the Getting Started Guide: https://argo-cd.readthedocs.io/en/stable/getting_started/#4-login-using-the-cli)

|

7. Register the Argo CD domain in /etc/hosts

| echo "127.0.0.1 argocd.example.com" | sudo tee -a /etc/hosts

cat /etc/hosts

...

127.0.0.1 argocd.example.com

|

- Register argocd.example.com in /etc/hosts so that it resolves to 127.0.0.1 on the local machine.
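Running the `tee -a` command more than once leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent variant follows. The helper name `add_hosts_entry` is an assumption, and it takes the file path as a parameter so it can be tried on a scratch file before touching the real one.

```shell
# Append "<ip> <name>" to a hosts-format file only when that mapping is absent.
# The file path is a parameter so the function can be tried on a scratch file;
# writing to the real /etc/hosts needs root (for example, run the script with sudo).
add_hosts_entry() {
  hosts_file=$1 ip=$2 name=$3
  if awk -v ip="$ip" -v name="$name" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }
     ' "$hosts_file"; then
    return 0                       # mapping already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

scratch=$(mktemp)
add_hosts_entry "$scratch" 127.0.0.1 argocd.example.com
add_hosts_entry "$scratch" 127.0.0.1 argocd.example.com   # second call is a no-op
cat "$scratch"
```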

8. Verify HTTPS access to Argo CD with curl

| curl -vk https://argocd.example.com/

* Host argocd.example.com:443 was resolved.

* IPv6: (none)

* IPv4: 127.0.0.1

* Trying 127.0.0.1:443...

* ALPN: curl offers h2,http/1.1

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* SSL Trust: peer verification disabled

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.3 (IN), TLS change cipher, Change cipher spec (1):

* TLSv1.3 (IN), TLS handshake, Encrypted Extensions (8):

* TLSv1.3 (IN), TLS handshake, Certificate (11):

* TLSv1.3 (IN), TLS handshake, CERT verify (15):

* TLSv1.3 (IN), TLS handshake, Finished (20):

* TLSv1.3 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.3 (OUT), TLS handshake, Finished (20):

* SSL connection using TLSv1.3 / TLS_AES_128_GCM_SHA256 / X25519MLKEM768 / RSASSA-PSS

...

|

9. Look up the initial Argo CD admin password and store it in a variable

| kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d ;echo

r0PAK1j7tcDasX7Q

|

| ARGOPW=<initial password>

ARGOPW=r0PAK1j7tcDasX7Q

|
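Copying the password by hand can be avoided by capturing the decoded value directly. The sketch below shows the decoding step on its own. `decode_secret_value` is a hypothetical helper name, the password is made up, and the example encodes it locally so it runs without a cluster.

```shell
# Kubernetes stores Secret values base64-encoded; this helper decodes one
# value read from stdin (GNU coreutils `base64 -d` assumed).
decode_secret_value() {
  base64 -d
}

# Stand-in for the kubectl output: encode a made-up password locally so the
# example runs without a cluster. With a cluster, the pipeline would be
#   kubectl -n argocd get secret argocd-initial-admin-secret \
#     -o jsonpath="{.data.password}" | decode_secret_value
encoded=$(printf '%s' 'example-password' | base64)
ARGOPW=$(printf '%s' "$encoded" | decode_secret_value)
echo "$ARGOPW"
```

Command substitution strips the trailing newline, so the variable holds only the password itself.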

10. Log in with the Argo CD CLI

| argocd login argocd.example.com --insecure --username admin --password $ARGOPW

'admin:login' logged in successfully

Context 'argocd.example.com' updated

|

11. Change the admin account password

| argocd account update-password --current-password $ARGOPW --new-password qwe12345

Password updated

Context 'argocd.example.com' updated

|

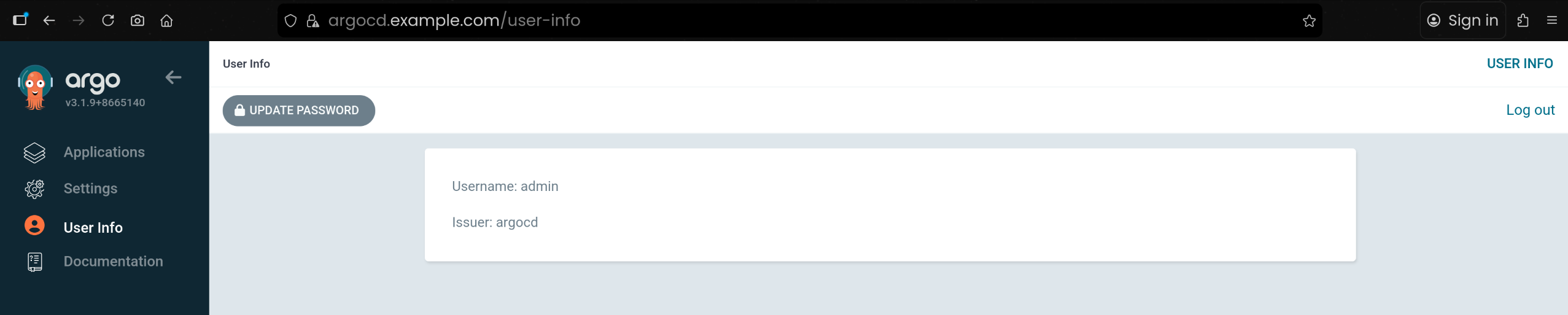

12. Argo CD web console address

| https://argocd.example.com

|

🌱 Deploying the kind dev/prd clusters & editing the k8s credentials

1. Check the kubectl contexts before installation

| kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kind-mgmt kind-mgmt kind-mgmt

|

- At this point only the kind-mgmt cluster exists, and kind-mgmt is the current context.

2. Check the kind Docker network

| docker network ls

NETWORK ID NAME DRIVER SCOPE

f5ad53882464 bridge bridge local

bec308f23ee5 host host local

1da18f85ffec kind bridge local

225e867f21f9 none null local

|

3. Check the mgmt-control-plane container IP

| docker network inspect kind | jq

[

{

"Name": "kind",

"Id": "1da18f85ffecfc8a2170a57b9369bf4387586703d6c83f3accf900e9145b7772",

"Created": "2025-10-18T15:06:51.081819223+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv4": true,

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

},

{

"Subnet": "fc00:f853:ccd:e793::/64",

"Gateway": "fc00:f853:ccd:e793::1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"4466ac122129f82aef62ae613cf1b1fa9025f61e3337f49bd9f810c5090054b3": {

"Name": "mgmt-control-plane",

"EndpointID": "b831183bd631a8adfdfe4f5659ebdc5192fa74b0c5e9ddada9b2e4b638190565",

"MacAddress": "0e:cf:0f:10:0d:55",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": "fc00:f853:ccd:e793::2/64"

}

},

"Options": {

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

|

- The IPv4 address of the mgmt-control-plane container is 172.18.0.2.
- It serves as a reference later, when communicating with the dev/prd clusters and editing the kubeconfig.

4. Create the kind dev/prd clusters

| kind create cluster --name dev --image kindest/node:v1.32.8 --config - <<EOF

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 31000
    hostPort: 31000

EOF

Creating cluster "dev" ...

✓ Ensuring node image (kindest/node:v1.32.8) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-dev"

You can now use your cluster with:

kubectl cluster-info --context kind-dev

Have a nice day! 👋

|

| kind create cluster --name prd --image kindest/node:v1.32.8 --config - <<EOF

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 32000
    hostPort: 32000

EOF

Creating cluster "prd" ...

✓ Ensuring node image (kindest/node:v1.32.8) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-prd"

You can now use your cluster with:

kubectl cluster-info --context kind-prd

Have a nice day! 👋

|
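The dev and prd configs differ only in the cluster name and the mapped port, so the config can be generated instead of typed twice. The following is a minimal sketch under that assumption. `kind_config` is a hypothetical helper name, and it only prints YAML, so it runs without kind installed.

```shell
# Print a single-node kind config that maps one port from the node container
# to the same port on the host.
kind_config() {
  port=$1
  cat <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: ${port}
    hostPort: ${port}
EOF
}

kind_config 31000   # dev
kind_config 32000   # prd
# To create a cluster, the output could be piped to kind, for example:
#   kind_config 31000 | kind create cluster --name dev --image kindest/node:v1.32.8 --config -
```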

5. List the contexts after creating the dev/prd clusters

| kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

kind-dev kind-dev kind-dev

kind-mgmt kind-mgmt kind-mgmt

* kind-prd kind-prd kind-prd

|

- After the clusters are created, the kind-dev and kind-prd contexts are added to the kubeconfig.
- The current context is kind-prd, the most recently created cluster.

6. Switch the context to the mgmt cluster

| kubectl config use-context kind-mgmt

Switched to context "kind-mgmt".

|

| kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

kind-dev kind-dev kind-dev

* kind-mgmt kind-mgmt kind-mgmt

kind-prd kind-prd kind-prd

|

7. Check node status on each cluster (mgmt/dev/prd)

| kubectl get node -v=6 --context kind-mgmt

kubectl get node -v=6 --context kind-dev

kubectl get node -v=6 --context kind-prd

I1117 20:13:19.217800 121966 cmd.go:527] kubectl command headers turned on

I1117 20:13:19.224307 121966 loader.go:402] Config loaded from file: /home/devshin/.kube/config

I1117 20:13:19.225823 121966 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I1117 20:13:19.225838 121966 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true

I1117 20:13:19.225843 121966 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I1117 20:13:19.225847 121966 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I1117 20:13:19.225852 121966 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I1117 20:13:19.237944 121966 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:42415/api/v1/nodes?limit=500" status="200 OK" milliseconds=7

NAME STATUS ROLES AGE VERSION

mgmt-control-plane Ready control-plane 17m v1.32.8

I1117 20:13:19.286485 121989 cmd.go:527] kubectl command headers turned on

I1117 20:13:19.293572 121989 loader.go:402] Config loaded from file: /home/devshin/.kube/config

I1117 20:13:19.295187 121989 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true

I1117 20:13:19.295222 121989 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I1117 20:13:19.295233 121989 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I1117 20:13:19.295243 121989 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I1117 20:13:19.295253 121989 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I1117 20:13:19.305632 121989 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:35871/api?timeout=32s" status="200 OK" milliseconds=10

I1117 20:13:19.307735 121989 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:35871/apis?timeout=32s" status="200 OK" milliseconds=1

I1117 20:13:19.315440 121989 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:35871/api/v1/nodes?limit=500" status="200 OK" milliseconds=2

NAME STATUS ROLES AGE VERSION

dev-control-plane Ready control-plane 2m21s v1.32.8

I1117 20:13:19.363680 122008 cmd.go:527] kubectl command headers turned on

I1117 20:13:19.369643 122008 loader.go:402] Config loaded from file: /home/devshin/.kube/config

I1117 20:13:19.370592 122008 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I1117 20:13:19.370614 122008 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I1117 20:13:19.370620 122008 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true

I1117 20:13:19.370625 122008 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I1117 20:13:19.370631 122008 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I1117 20:13:19.378161 122008 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:34469/api?timeout=32s" status="200 OK" milliseconds=7

I1117 20:13:19.380831 122008 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:34469/apis?timeout=32s" status="200 OK" milliseconds=1

I1117 20:13:19.388897 122008 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:34469/api/v1/nodes?limit=500" status="200 OK" milliseconds=2

NAME STATUS ROLES AGE VERSION

prd-control-plane Ready control-plane 106s v1.32.8

|

8. Check Pod status on each cluster

| kubectl get pod -A --context kind-mgmt

kubectl get pod -A --context kind-dev

kubectl get pod -A --context kind-prd

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd argocd-application-controller-0 1/1 Running 0 13m

argocd argocd-applicationset-controller-bbff79c6f-9qcf8 1/1 Running 0 13m

argocd argocd-dex-server-6877ddf4f8-fvfll 1/1 Running 0 13m

argocd argocd-notifications-controller-7b5658fc47-26p24 1/1 Running 0 13m

argocd argocd-redis-7d948674-xnl9k 1/1 Running 0 13m

argocd argocd-repo-server-7679dc55f5-swj2g 1/1 Running 0 13m

argocd argocd-server-7d769b6f48-2ts94 1/1 Running 0 13m

ingress-nginx ingress-nginx-controller-5b89cb54f9-5gvfh 1/1 Running 0 16m

kube-system coredns-668d6bf9bc-d5bn7 1/1 Running 0 17m

kube-system coredns-668d6bf9bc-vb4p7 1/1 Running 0 17m

kube-system etcd-mgmt-control-plane 1/1 Running 0 18m

kube-system kindnet-jtm8t 1/1 Running 0 17m

kube-system kube-apiserver-mgmt-control-plane 1/1 Running 0 18m

kube-system kube-controller-manager-mgmt-control-plane 1/1 Running 0 18m

kube-system kube-proxy-b9pmh 1/1 Running 0 17m

kube-system kube-scheduler-mgmt-control-plane 1/1 Running 0 18m

local-path-storage local-path-provisioner-7dc846544d-wltkn 1/1 Running 0 17m

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-668d6bf9bc-lddkn 1/1 Running 0 3m17s

kube-system coredns-668d6bf9bc-ltg98 1/1 Running 0 3m17s

kube-system etcd-dev-control-plane 1/1 Running 0 3m23s

kube-system kindnet-2gks8 1/1 Running 0 3m18s

kube-system kube-apiserver-dev-control-plane 1/1 Running 0 3m23s

kube-system kube-controller-manager-dev-control-plane 1/1 Running 0 3m23s

kube-system kube-proxy-zmdnk 1/1 Running 0 3m18s

kube-system kube-scheduler-dev-control-plane 1/1 Running 0 3m23s

local-path-storage local-path-provisioner-7dc846544d-qtmxj 1/1 Running 0 3m17s

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-668d6bf9bc-2hm7g 1/1 Running 0 2m42s

kube-system coredns-668d6bf9bc-7kbbg 1/1 Running 0 2m42s

kube-system etcd-prd-control-plane 1/1 Running 0 2m48s

kube-system kindnet-lqc7k 1/1 Running 0 2m42s

kube-system kube-apiserver-prd-control-plane 1/1 Running 0 2m48s

kube-system kube-controller-manager-prd-control-plane 1/1 Running 0 2m48s

kube-system kube-proxy-kkhxb 1/1 Running 0 2m42s

kube-system kube-scheduler-prd-control-plane 1/1 Running 0 2m49s

local-path-storage local-path-provisioner-7dc846544d-dj54f 1/1 Running 0 2m42s

|

9. Set up kubectl aliases

| alias k8s1='kubectl --context kind-mgmt'

alias k8s2='kubectl --context kind-dev'

alias k8s3='kubectl --context kind-prd'

|
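Aliases work in an interactive shell, but bash does not expand them in non-interactive scripts by default. If the same shortcuts are needed inside a script, shell functions are one option. The sketch below assumes the same three context names. The `KUBECTL` override is an addition for dry runs and is not part of the original setup. If the aliases are already defined in the current shell, `unalias` them before defining functions with the same names.

```shell
# KUBECTL can be overridden (for a dry run, say); it defaults to kubectl.
KUBECTL=${KUBECTL:-kubectl}

k8s1() { "$KUBECTL" --context kind-mgmt "$@"; }
k8s2() { "$KUBECTL" --context kind-dev "$@"; }
k8s3() { "$KUBECTL" --context kind-prd "$@"; }

# Dry run: swap in echo to see the arguments that would be passed to kubectl.
( KUBECTL=echo; k8s2 get node -owide )
```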

| # Quick look at each node

k8s1 get node -owide

k8s2 get node -owide

k8s3 get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

mgmt-control-plane Ready control-plane 19m v1.32.8 172.18.0.2 <none> Debian GNU/Linux 12 (bookworm) 6.17.8-arch1-1 containerd://2.1.3

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

dev-control-plane Ready control-plane 4m16s v1.32.8 172.18.0.3 <none> Debian GNU/Linux 12 (bookworm) 6.17.8-arch1-1 containerd://2.1.3

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

prd-control-plane Ready control-plane 3m41s v1.32.8 172.18.0.4 <none> Debian GNU/Linux 12 (bookworm) 6.17.8-arch1-1 containerd://2.1.3

|

10. Re-check each control-plane IP on the Docker network

| docker network inspect kind | grep -E 'Name|IPv4Address'

"Name": "kind",

"Name": "prd-control-plane",

"IPv4Address": "172.18.0.4/16",

"Name": "mgmt-control-plane",

"IPv4Address": "172.18.0.2/16",

"Name": "dev-control-plane",

"IPv4Address": "172.18.0.3/16",

|

11. Verify connectivity by hostname

| docker exec -it mgmt-control-plane curl -sk https://dev-control-plane:6443/version

{

"major": "1",

"minor": "32",

"gitVersion": "v1.32.8",

"gitCommit": "2e83bc4bf31e88b7de81d5341939d5ce2460f46f",

"gitTreeState": "clean",

"buildDate": "2025-08-13T14:21:22Z",

"goVersion": "go1.23.11",

"compiler": "gc",

"platform": "linux/amd64"

}

|

| docker exec -it mgmt-control-plane curl -sk https://prd-control-plane:6443/version

{

"major": "1",

"minor": "32",

"gitVersion": "v1.32.8",

"gitCommit": "2e83bc4bf31e88b7de81d5341939d5ce2460f46f",

"gitTreeState": "clean",

"buildDate": "2025-08-13T14:21:22Z",

"goVersion": "go1.23.11",

"compiler": "gc",

"platform": "linux/amd64"

}

|

| docker exec -it dev-control-plane curl -sk https://prd-control-plane:6443/version

{

"major": "1",

"minor": "32",

"gitVersion": "v1.32.8",

"gitCommit": "2e83bc4bf31e88b7de81d5341939d5ce2460f46f",

"gitTreeState": "clean",

"buildDate": "2025-08-13T14:21:22Z",

"goVersion": "go1.23.11",

"compiler": "gc",

"platform": "linux/amd64"

}

|

- From mgmt-control-plane, call the /version endpoint of the dev-control-plane and prd-control-plane API servers with curl.
- From dev-control-plane, call the /version endpoint of the prd-control-plane API server the same way.

12. Ping the kind network IPs from the host

| ping -c 1 172.18.0.2

PING 172.18.0.2 (172.18.0.2) 56(84) bytes of data.

64 bytes from 172.18.0.2: icmp_seq=1 ttl=64 time=0.129 ms

--- 172.18.0.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.129/0.129/0.129/0.000 ms

|

| ping -c 1 172.18.0.3

PING 172.18.0.3 (172.18.0.3) 56(84) bytes of data.

64 bytes from 172.18.0.3: icmp_seq=1 ttl=64 time=0.079 ms

--- 172.18.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.079/0.079/0.079/0.000 ms

|

| ping -c 1 172.18.0.4

PING 172.18.0.4 (172.18.0.4) 56(84) bytes of data.

64 bytes from 172.18.0.4: icmp_seq=1 ttl=64 time=0.172 ms

--- 172.18.0.4 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.172/0.172/0.172/0.000 ms

|

- This confirms that the host and the control-plane containers can reach each other.

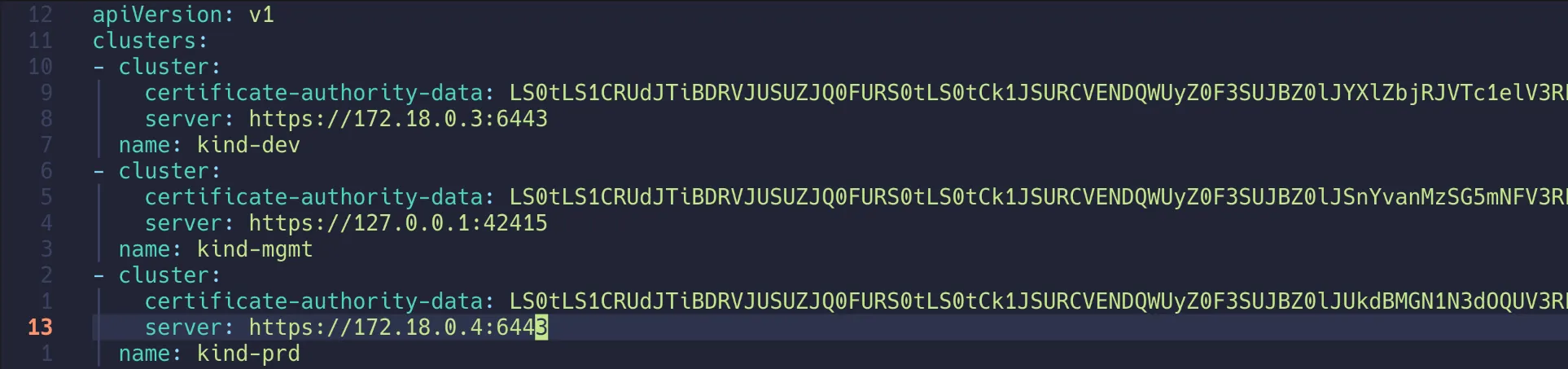

13. Change the API server addresses of the dev/prd kubeconfig entries to the container IPs

| vi ~/.kube/config

...

server: https://172.18.0.3:6443

name: kind-dev

...

server: https://172.18.0.4:6443

name: kind-prd

...

|
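The manual edit in vi can also be scripted. kubectl has `kubectl config set-cluster <name> --server=<url>` for this. The sketch below instead shows the rewrite itself in awk so that it can be tried on a copy of the file. `set_cluster_server` is a hypothetical helper, and the sample kubeconfig is a trimmed, made-up stand-in without certificates or users.

```shell
# Print a copy of a kubeconfig with the server URL of one cluster entry replaced.
# Relies on the usual layout where "server:" precedes "name:" within an entry.
set_cluster_server() {
  kubeconfig=$1 cluster=$2 url=$3
  awk -v target="$cluster" -v url="$url" '
    { line[NR] = $0 }
    /^[[:space:]]*server:/ { last_server = NR }
    $1 == "name:" {
      if ($2 == target && last_server) { sub(/server:.*/, "server: " url, line[last_server]) }
      last_server = 0              # context and user names must not match
    }
    END { for (i = 1; i <= NR; i++) print line[i] }
  ' "$kubeconfig"
}

# Hypothetical, trimmed kubeconfig used only to exercise the function.
sample=$(mktemp)
cat > "$sample" <<'EOF'
clusters:
- cluster:
    server: https://127.0.0.1:35871
  name: kind-dev
- cluster:
    server: https://127.0.0.1:42415
  name: kind-mgmt
contexts:
- context:
    cluster: kind-dev
    user: kind-dev
  name: kind-dev
EOF

set_cluster_server "$sample" kind-dev https://172.18.0.3:6443
```

The function writes to stdout rather than editing in place, so the result can be reviewed before it replaces `~/.kube/config`.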

14. Re-verify the dev/prd API server connections after editing the kubeconfig

| kubectl get node -v=6 --context kind-dev

kubectl get node -v=6 --context kind-prd

I1117 23:24:11.853566 160346 cmd.go:527] kubectl command headers turned on

I1117 23:24:11.859417 160346 loader.go:402] Config loaded from file: /home/devshin/.kube/config

I1117 23:24:11.860050 160346 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I1117 23:24:11.860066 160346 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I1117 23:24:11.860073 160346 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true

I1117 23:24:11.860080 160346 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I1117 23:24:11.860086 160346 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I1117 23:24:11.866956 160346 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.3:6443/api?timeout=32s" status="200 OK" milliseconds=6

I1117 23:24:11.868615 160346 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.3:6443/apis?timeout=32s" status="200 OK" milliseconds=0

I1117 23:24:11.876303 160346 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.3:6443/api/v1/nodes?limit=500" status="200 OK" milliseconds=1

NAME STATUS ROLES AGE VERSION

dev-control-plane Ready control-plane 3h13m v1.32.8

I1117 23:24:11.918305 160370 cmd.go:527] kubectl command headers turned on

I1117 23:24:11.923330 160370 loader.go:402] Config loaded from file: /home/devshin/.kube/config

I1117 23:24:11.924213 160370 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I1117 23:24:11.924278 160370 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I1117 23:24:11.924302 160370 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I1117 23:24:11.924337 160370 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I1117 23:24:11.924358 160370 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true

I1117 23:24:11.929299 160370 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.4:6443/api?timeout=32s" status="200 OK" milliseconds=4

I1117 23:24:11.930766 160370 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.4:6443/apis?timeout=32s" status="200 OK" milliseconds=0

I1117 23:24:11.938976 160370 round_trippers.go:632] "Response" verb="GET" url="https://172.18.0.4:6443/api/v1/nodes?limit=500" status="200 OK" milliseconds=1

NAME STATUS ROLES AGE VERSION

prd-control-plane Ready control-plane 3h12m v1.32.8

|

🌐 Registering other K8S clusters in Argo CD

1. Check the default in-cluster cluster in Argo CD

| argocd cluster list

SERVER NAME VERSION STATUS MESSAGE PROJECT

https://kubernetes.default.svc in-cluster Unknown Cluster has no applications and is not being monitored.

|

| argocd cluster list -o json | jq

[

{

"server": "https://kubernetes.default.svc",

"name": "in-cluster",

"config": {

"tlsClientConfig": {

"insecure": false

}

},

"connectionState": {

"status": "Unknown",

"message": "Cluster has no applications and is not being monitored.",

"attemptedAt": "2025-11-17T14:25:59Z"

},

"info": {

"connectionState": {

"status": "Unknown",

"message": "Cluster has no applications and is not being monitored.",

"attemptedAt": "2025-11-17T14:25:59Z"

},

"cacheInfo": {},

"applicationsCount": 0

}

}

]

|

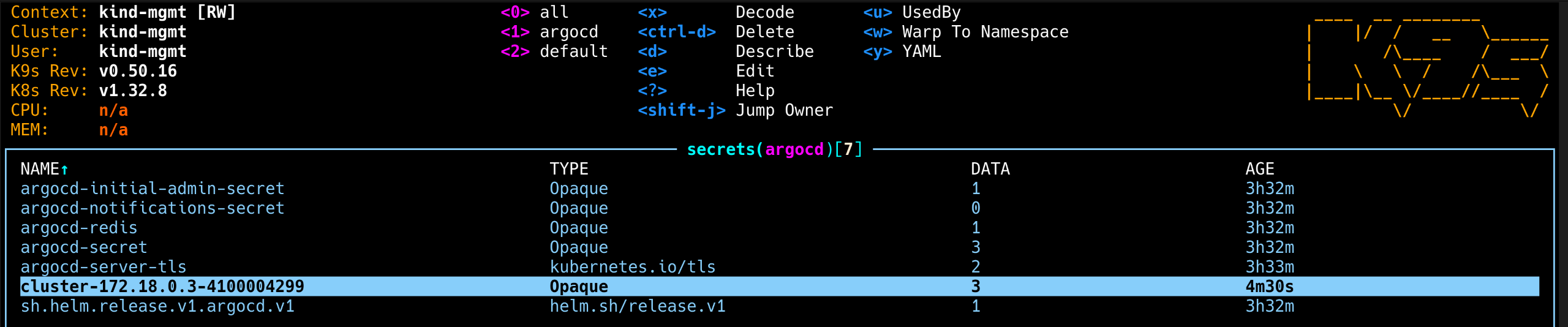

2. List the Secrets in the argocd namespace

| kubectl get secret -n argocd

NAME TYPE DATA AGE

argocd-initial-admin-secret Opaque 1 3h25m

argocd-notifications-secret Opaque 0 3h25m

argocd-redis Opaque 1 3h25m

argocd-secret Opaque 3 3h25m

argocd-server-tls kubernetes.io/tls 2 3h27m

sh.helm.release.v1.argocd.v1 helm.sh/release.v1 1 3h26m

|

3. List the kube-system ServiceAccounts on the dev/prd clusters

| k8s2 get sa -n kube-system

NAME SECRETS AGE

attachdetach-controller 0 3h16m

bootstrap-signer 0 3h16m

certificate-controller 0 3h16m

clusterrole-aggregation-controller 0 3h16m

coredns 0 3h16m

cronjob-controller 0 3h16m

daemon-set-controller 0 3h16m

default 0 3h16m

deployment-controller 0 3h16m

disruption-controller 0 3h16m

endpoint-controller 0 3h16m

endpointslice-controller 0 3h16m

endpointslicemirroring-controller 0 3h16m

ephemeral-volume-controller 0 3h16m

expand-controller 0 3h16m

generic-garbage-collector 0 3h16m

horizontal-pod-autoscaler 0 3h16m

job-controller 0 3h16m

kindnet 0 3h16m

kube-proxy 0 3h16m

legacy-service-account-token-cleaner 0 3h16m

namespace-controller 0 3h16m

node-controller 0 3h16m

persistent-volume-binder 0 3h16m

pod-garbage-collector 0 3h16m

pv-protection-controller 0 3h16m

pvc-protection-controller 0 3h16m

replicaset-controller 0 3h16m

replication-controller 0 3h16m

resourcequota-controller 0 3h16m

root-ca-cert-publisher 0 3h16m

service-account-controller 0 3h16m

statefulset-controller 0 3h16m

token-cleaner 0 3h16m

ttl-after-finished-controller 0 3h16m

ttl-controller 0 3h16m

validatingadmissionpolicy-status-controller 0 3h16m

|

| k8s3 get sa -n kube-system

NAME SECRETS AGE

attachdetach-controller 0 3h15m

bootstrap-signer 0 3h16m

certificate-controller 0 3h16m

clusterrole-aggregation-controller 0 3h16m

coredns 0 3h16m

cronjob-controller 0 3h16m

daemon-set-controller 0 3h15m

default 0 3h15m

deployment-controller 0 3h16m

disruption-controller 0 3h15m

endpoint-controller 0 3h15m

endpointslice-controller 0 3h16m

endpointslicemirroring-controller 0 3h16m

ephemeral-volume-controller 0 3h16m

expand-controller 0 3h16m

generic-garbage-collector 0 3h16m

horizontal-pod-autoscaler 0 3h16m

job-controller 0 3h16m

kindnet 0 3h16m

kube-proxy 0 3h16m

legacy-service-account-token-cleaner 0 3h16m

namespace-controller 0 3h16m

node-controller 0 3h16m

persistent-volume-binder 0 3h16m

pod-garbage-collector 0 3h16m

pv-protection-controller 0 3h16m

pvc-protection-controller 0 3h16m

replicaset-controller 0 3h16m

replication-controller 0 3h16m

resourcequota-controller 0 3h15m

root-ca-cert-publisher 0 3h16m

service-account-controller 0 3h16m

statefulset-controller 0 3h15m

token-cleaner 0 3h16m

ttl-after-finished-controller 0 3h16m

ttl-controller 0 3h16m

validatingadmissionpolicy-status-controller 0 3h16m

|

4. Register the dev cluster in Argo CD

| argocd cluster add kind-dev --name dev-k8s

WARNING: This will create a service account `argocd-manager` on the cluster referenced by context `kind-dev` with full cluster level privileges. Do you want to continue [y/N]? y

{"level":"info","msg":"ServiceAccount \"argocd-manager\" created in namespace \"kube-system\"","time":"2025-11-17T23:28:26+09:00"}

{"level":"info","msg":"ClusterRole \"argocd-manager-role\" created","time":"2025-11-17T23:28:26+09:00"}

{"level":"info","msg":"ClusterRoleBinding \"argocd-manager-role-binding\" created","time":"2025-11-17T23:28:26+09:00"}

{"level":"info","msg":"Created bearer token secret \"argocd-manager-long-lived-token\" for ServiceAccount \"argocd-manager\"","time":"2025-11-17T23:28:26+09:00"}

Cluster 'https://172.18.0.3:6443' added

|

5. Check the argocd-manager ServiceAccount created on the dev cluster

| k8s2 get sa -n kube-system argocd-manager

NAME SECRETS AGE

argocd-manager 0 29s

|

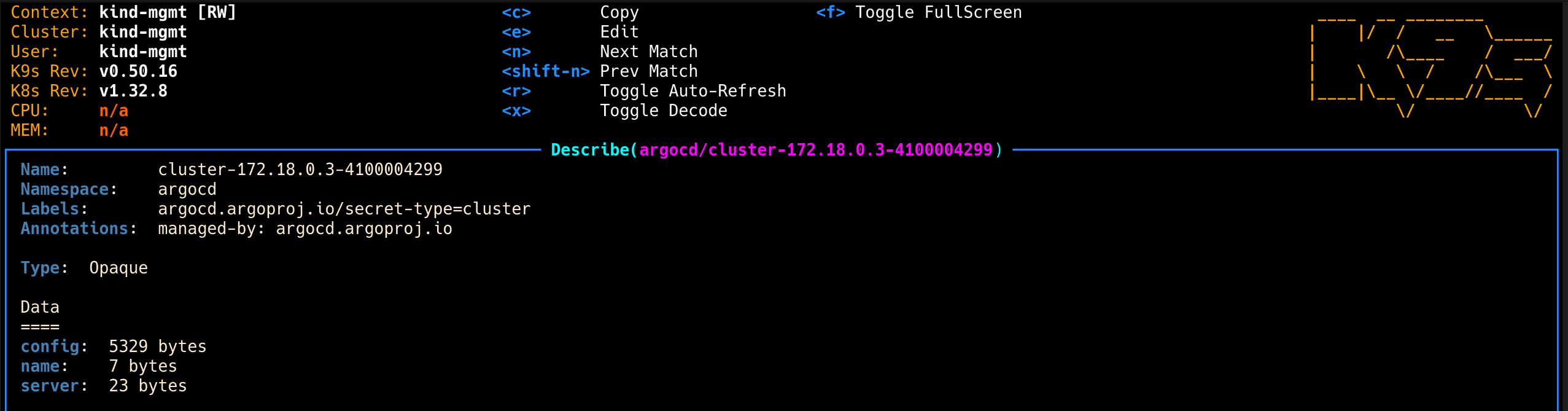

6. Confirm that the cluster Secret for the dev cluster was created in Argo CD

| kubectl get secret -n argocd -l argocd.argoproj.io/secret-type=cluster

NAME TYPE DATA AGE

cluster-172.18.0.3-4100004299 Opaque 3 2m36s

|

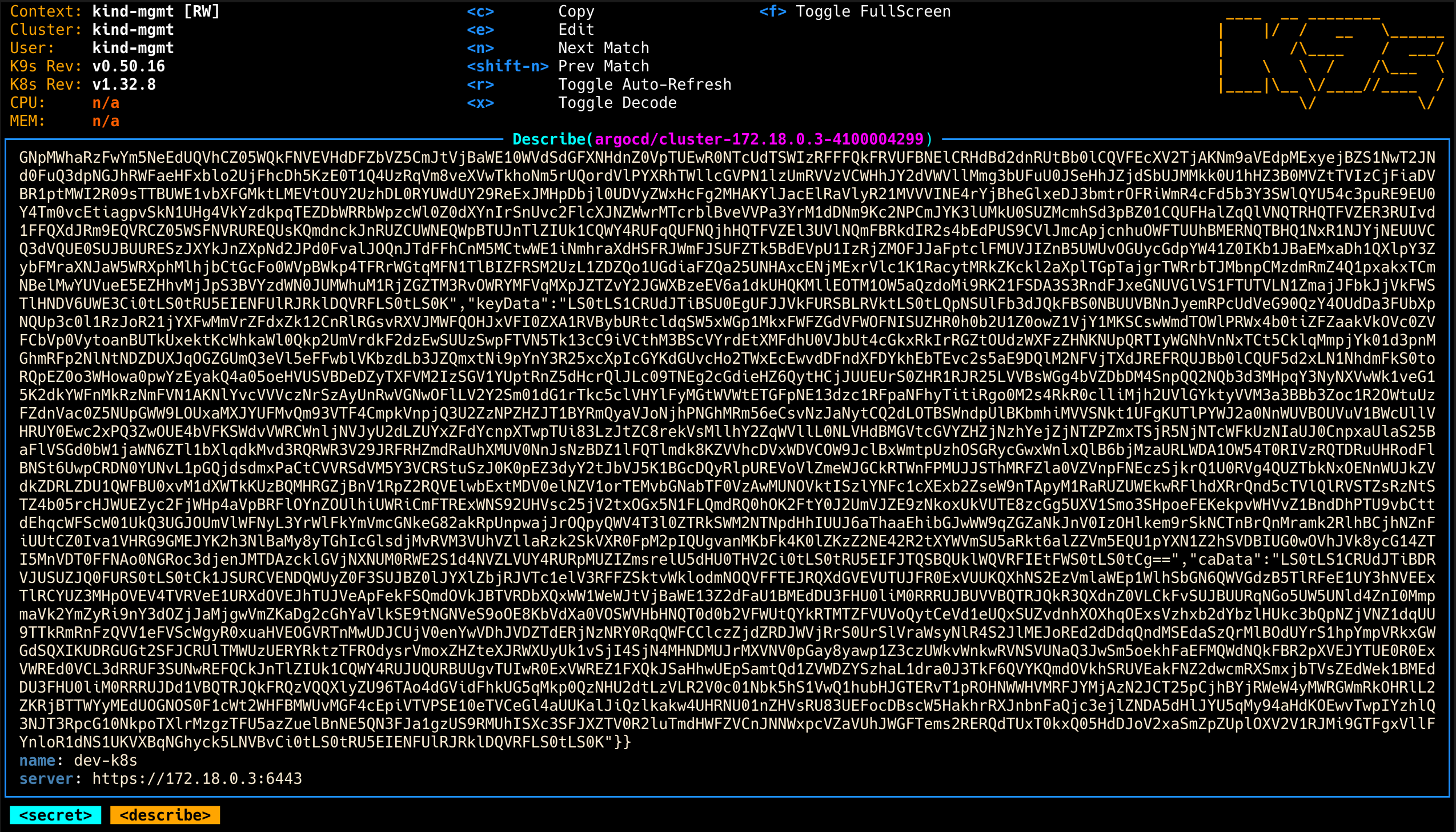

7. Decode and inspect the dev cluster Secret with k9s

| k9s -> : secret argocd -> on the cluster secret shown below, press d (Describe) -> x (Toggle Decode) to inspect it

|
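The cluster Secret inspected here has three data keys (the DATA column shows 3 in the earlier listing): `name`, `server`, and `config`. As a rough sketch of its shape, the helper below renders an equivalent declarative Secret in the format described in Argo CD's declarative setup documentation. `render_cluster_secret`, the Secret name `cluster-<name>`, and the token and CA arguments are placeholders chosen for illustration, not values from this environment.

```shell
# Render a declarative Argo CD cluster Secret: the label marks it as a cluster
# definition, and config carries the credentials as a JSON document.
render_cluster_secret() {
  name=$1 server=$2 token=$3 ca_b64=$4
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: cluster-${name}
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: ${name}
  server: ${server}
  config: |
    {
      "bearerToken": "${token}",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "${ca_b64}"
      }
    }
EOF
}

# Placeholder token and CA strings; real values come from the target cluster.
render_cluster_secret dev-k8s https://172.18.0.3:6443 '<service-account-token>' '<base64-ca-cert>'
```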

8. Check the dev-k8s registration result

| argocd cluster list

SERVER NAME VERSION STATUS MESSAGE PROJECT

https://172.18.0.3:6443 dev-k8s Unknown Cluster has no applications and is not being monitored.

https://kubernetes.default.svc in-cluster Unknown Cluster has no applications and is not being monitored.

|

- This confirms that the dev cluster is registered in Argo CD.

9. Register the prd cluster in Argo CD

| argocd cluster add kind-prd --name prd-k8s --yes

{"level":"info","msg":"ServiceAccount \"argocd-manager\" created in namespace \"kube-system\"","time":"2025-11-17T23:36:08+09:00"}

{"level":"info","msg":"ClusterRole \"argocd-manager-role\" created","time":"2025-11-17T23:36:08+09:00"}

{"level":"info","msg":"ClusterRoleBinding \"argocd-manager-role-binding\" created","time":"2025-11-17T23:36:08+09:00"}

{"level":"info","msg":"Created bearer token secret \"argocd-manager-long-lived-token\" for ServiceAccount \"argocd-manager\"","time":"2025-11-17T23:36:08+09:00"}

Cluster 'https://172.18.0.4:6443' added

|

10. Confirm the argocd-manager ServiceAccount on the prd cluster

| k8s3 get sa -n kube-system argocd-manager

NAME SECRETS AGE

argocd-manager 0 15s

|

11. List the Argo CD cluster Secrets for the dev/prd clusters

| kubectl get secret -n argocd -l argocd.argoproj.io/secret-type=cluster

NAME TYPE DATA AGE

cluster-172.18.0.3-4100004299 Opaque 3 8m18s

cluster-172.18.0.4-568336172 Opaque 3 36s

|

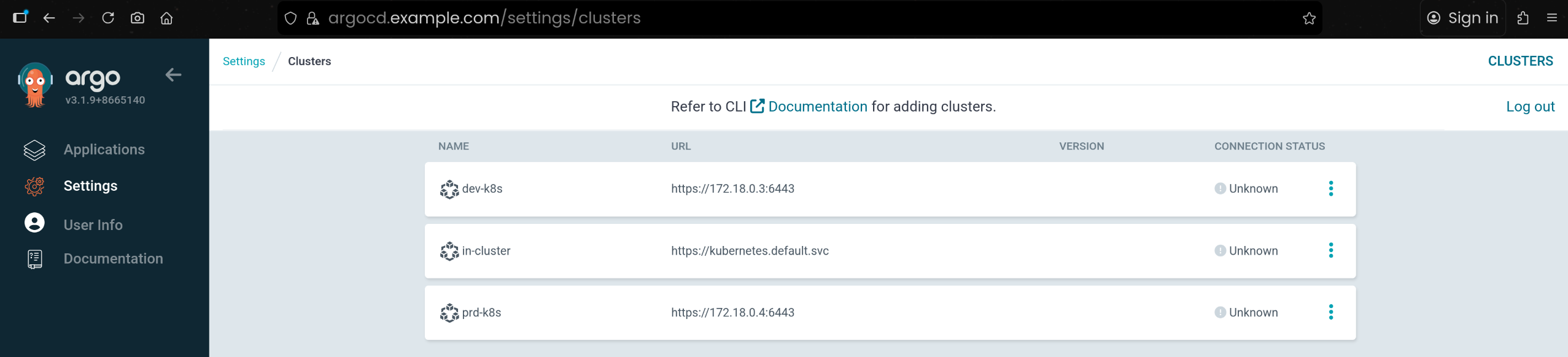

12. Check the final Argo CD cluster list

| argocd cluster list

SERVER NAME VERSION STATUS MESSAGE PROJECT

https://172.18.0.3:6443 dev-k8s Unknown Cluster has no applications and is not being monitored.

https://172.18.0.4:6443 prd-k8s Unknown Cluster has no applications and is not being monitored.

https://kubernetes.default.svc in-cluster Unknown Cluster has no applications and is not being monitored.

|

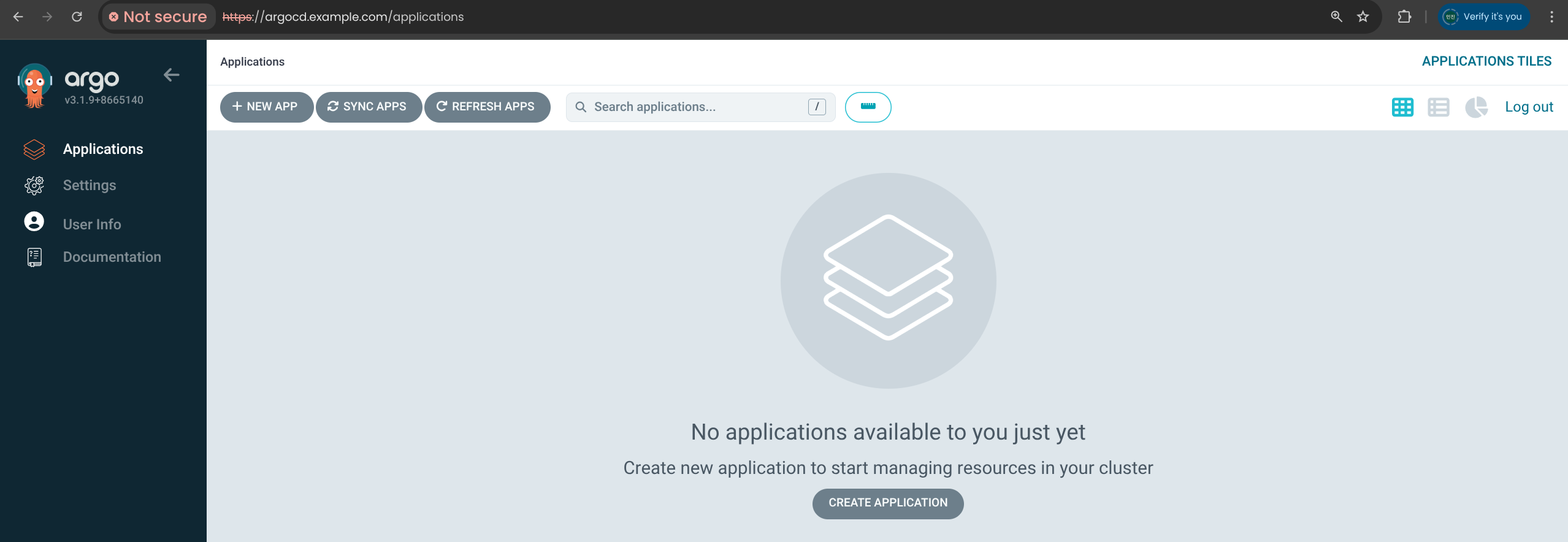

🚀 Deploying Nginx to each of the three K8S clusters with Argo CD

1. Check the dev/prd cluster IPs on the kind network and set environment variables

| docker network inspect kind | grep -E 'Name|IPv4Address'

"Name": "kind",

"Name": "prd-control-plane",

"IPv4Address": "172.18.0.4/16",

"Name": "mgmt-control-plane",

"IPv4Address": "172.18.0.2/16",

"Name": "dev-control-plane",

"IPv4Address": "172.18.0.3/16",

|

| DEVK8SIP=172.18.0.3

PRDK8SIP=172.18.0.4

echo $DEVK8SIP $PRDK8SIP

172.18.0.3 172.18.0.4

|
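The two variables can also be filled from the inspect output instead of being typed in. A minimal sketch follows. The helper names `parse_kind_ips` and `ip_of` are assumptions, and the sample text is the grep output shown in this step, so the example runs without Docker.

```shell
# Turn `"Name": ...` / `"IPv4Address": ...` line pairs into "<name> <ipv4>" rows.
parse_kind_ips() {
  awk -F'"' '
    $2 == "Name"        { name = $4 }
    $2 == "IPv4Address" { split($4, addr, "/"); print name, addr[1] }
  '
}

# Print the IPv4 address of one container name from the same input.
ip_of() {
  parse_kind_ips | awk -v n="$1" '$1 == n { print $2 }'
}

# Sample input copied from the output in this step; with Docker available it would be
#   docker network inspect kind | grep -E 'Name|IPv4Address'
sample='"Name": "kind",
"Name": "prd-control-plane",
"IPv4Address": "172.18.0.4/16",
"Name": "mgmt-control-plane",
"IPv4Address": "172.18.0.2/16",
"Name": "dev-control-plane",
"IPv4Address": "172.18.0.3/16",'

DEVK8SIP=$(printf '%s\n' "$sample" | ip_of dev-control-plane)
PRDK8SIP=$(printf '%s\n' "$sample" | ip_of prd-control-plane)
echo "$DEVK8SIP $PRDK8SIP"
```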

2. Create the Argo CD Application that deploys Nginx to the mgmt cluster

| cat <<EOF | kubectl apply -f -

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: mgmt-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - values.yaml
    path: nginx-chart
    repoURL: https://github.com/Shinminjin/cicd-study
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: mgmt-nginx
    server: https://kubernetes.default.svc

EOF

Warning: metadata.finalizers: "resources-finalizer.argocd.argoproj.io": prefer a domain-qualified finalizer name including a path (/) to avoid accidental conflicts with other finalizer writers

application.argoproj.io/mgmt-nginx created

|

3. Create the Argo CD Application that deploys Nginx to the dev cluster

| cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: dev-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - values-dev.yaml
    path: nginx-chart
    repoURL: https://github.com/Shinminjin/cicd-study
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: dev-nginx
    server: https://$DEVK8SIP:6443
EOF
Warning: metadata.finalizers: "resources-finalizer.argocd.argoproj.io": prefer a domain-qualified finalizer name including a path (/) to avoid accidental conflicts with other finalizer writers
application.argoproj.io/dev-nginx created
|

4. Create the Argo CD Application that deploys Nginx to the prd cluster

| cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: prd-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - values-prd.yaml
    path: nginx-chart
    repoURL: https://github.com/Shinminjin/cicd-study
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: prd-nginx
    server: https://$PRDK8SIP:6443
EOF
Warning: metadata.finalizers: "resources-finalizer.argocd.argoproj.io": prefer a domain-qualified finalizer name including a path (/) to avoid accidental conflicts with other finalizer writers
application.argoproj.io/prd-nginx created
|
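The three Application manifests differ only in the name prefix, the Helm values file, and the destination server. A minimal sketch of generating them from one template follows; `render_nginx_app` is a hypothetical helper introduced here for illustration, and it only prints the manifest so the output can be reviewed before it is piped to `kubectl apply -f -`.

```shell
# Hypothetical helper: print an Application manifest for one environment.
# Arguments: <env name> <Helm values file> <destination API server URL>
render_nginx_app() {
  env_name=$1 values_file=$2 server=$3
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${env_name}-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - ${values_file}
    path: nginx-chart
    repoURL: https://github.com/Shinminjin/cicd-study
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: ${env_name}-nginx
    server: ${server}
EOF
}

# Usage (assumes DEVK8SIP and PRDK8SIP are set as in step 1):
#   render_nginx_app mgmt values.yaml     https://kubernetes.default.svc | kubectl apply -f -
#   render_nginx_app dev  values-dev.yaml "https://$DEVK8SIP:6443"       | kubectl apply -f -
#   render_nginx_app prd  values-prd.yaml "https://$PRDK8SIP:6443"       | kubectl apply -f -
```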

5. Check the status of the three Nginx Applications in Argo CD

| argocd app list

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/dev-nginx https://172.18.0.3:6443 dev-nginx default Synced Healthy Auto-Prune <none> https://github.com/Shinminjin/cicd-study nginx-chart HEAD

argocd/mgmt-nginx https://kubernetes.default.svc mgmt-nginx default Synced Healthy Auto-Prune <none> https://github.com/Shinminjin/cicd-study nginx-chart HEAD

argocd/prd-nginx https://172.18.0.4:6443 prd-nginx default Synced Healthy Auto-Prune <none> https://github.com/Shinminjin/cicd-study nginx-chart HEAD

|

- Confirm that each Application is mapped to the correct cluster and namespace

| kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

dev-nginx Synced Healthy

mgmt-nginx Synced Healthy

prd-nginx Synced Healthy

|

- Re-check the Application status from the Kubernetes resource side as well

6. Verify the Nginx resources and the NodePort response on the mgmt cluster

| kubectl get pod,svc,ep,cm -n mgmt-nginx

NAME READY STATUS RESTARTS AGE

pod/mgmt-nginx-6fc86948bc-vh7k8 1/1 Running 0 96s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mgmt-nginx NodePort 10.96.10.62 <none> 80:30000/TCP 96s

NAME ENDPOINTS AGE

endpoints/mgmt-nginx 10.244.0.17:80 96s

NAME DATA AGE

configmap/kube-root-ca.crt 1 96s

configmap/mgmt-nginx 1 96s

|

| curl -s http://127.0.0.1:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>Nginx version 1.26.1</p>

</body>

</html>

|

- Check the Pod, Service, Endpoint, and ConfigMap in the mgmt-nginx namespace
- Connect from the host to NodePort 30000 and confirm that the custom HTML is served instead of the default Nginx page

7. Verify the Nginx deployment and the NodePort response on the dev cluster

| kubectl get pod,svc,ep,cm -n dev-nginx --context kind-dev

NAME READY STATUS RESTARTS AGE

pod/dev-nginx-59f4c8899-9vpvf 1/1 Running 0 2m4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dev-nginx NodePort 10.96.108.194 <none> 80:31000/TCP 2m4s

NAME ENDPOINTS AGE

endpoints/dev-nginx 10.244.0.5:80 2m4s

NAME DATA AGE

configmap/dev-nginx 1 2m4s

configmap/kube-root-ca.crt 1 2m4s

|

| curl -s http://127.0.0.1:31000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Dev - Kubernetes!</h1>

<p>Nginx version 1.26.1</p>

</body>

</html>

|

- Query the dev-nginx namespace resources using the kind-dev context
- Connect from the host to port 31000 and confirm that the dev-specific message is printed

8. Verify the multiple Nginx Pods/Endpoints and the NodePort response on the prd cluster

| kubectl get pod,svc,ep,cm -n prd-nginx --context kind-prd

NAME READY STATUS RESTARTS AGE

pod/prd-nginx-86d9bc9f7f-bfgg7 1/1 Running 0 3m47s

pod/prd-nginx-86d9bc9f7f-g24p8 1/1 Running 0 3m47s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prd-nginx NodePort 10.96.193.167 <none> 80:32000/TCP 3m47s

NAME ENDPOINTS AGE

endpoints/prd-nginx 10.244.0.5:80,10.244.0.6:80 3m47s

NAME DATA AGE

configmap/kube-root-ca.crt 1 3m47s

configmap/prd-nginx 1 3m47s

|

| curl -s http://127.0.0.1:32000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Prd - Kubernetes!</h1>

<p>Nginx version 1.26.1</p>

</body>

</html>

|

- Check the prd-nginx namespace resources using the kind-prd context
- Pod: two prd-nginx-... Pods (2 replicas, the scale setting for the production environment)
- Service: NodePort 80:32000/TCP
- Endpoint: both Pod IPs are registered
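The three NodePort checks above can be condensed into one loop that prints only the environment-specific heading. The sketch below assumes the page layout shown in the curl output (a single `<h1>` on its own line); `first_h1` is a hypothetical helper name.

```shell
# Hypothetical helper: print the text of the first <h1> element read from stdin.
# Assumes the opening and closing tags are on the same line, as in the output above.
first_h1() {
  sed -n 's:.*<h1>\(.*\)</h1>.*:\1:p' | head -n 1
}

# Usage (assumes the host port mappings 30000/31000/32000 from this walkthrough):
#   for port in 30000 31000 32000; do
#     printf '%s: ' "$port"
#     curl -s "http://127.0.0.1:$port" | first_h1
#   done
```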

9. Delete the Argo CD Applications

| kubectl delete applications -n argocd mgmt-nginx dev-nginx prd-nginx

application.argoproj.io "mgmt-nginx" deleted from argocd namespace

application.argoproj.io "dev-nginx" deleted from argocd namespace

application.argoproj.io "prd-nginx" deleted from argocd namespace

|

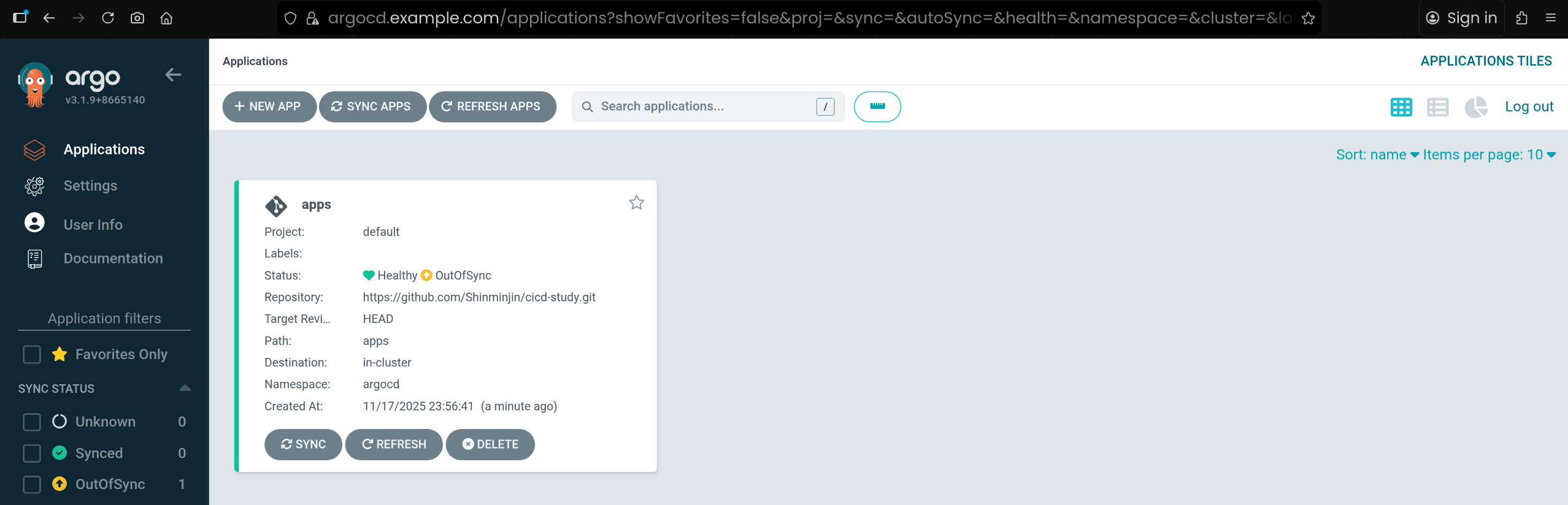

🌳 Argo CD App of Apps pattern

1. Create the root Application (apps)

| argocd app create apps \

--dest-namespace argocd \

--dest-server https://kubernetes.default.svc \

--repo https://github.com/Shinminjin/cicd-study.git \

--path apps

application 'apps' created

|

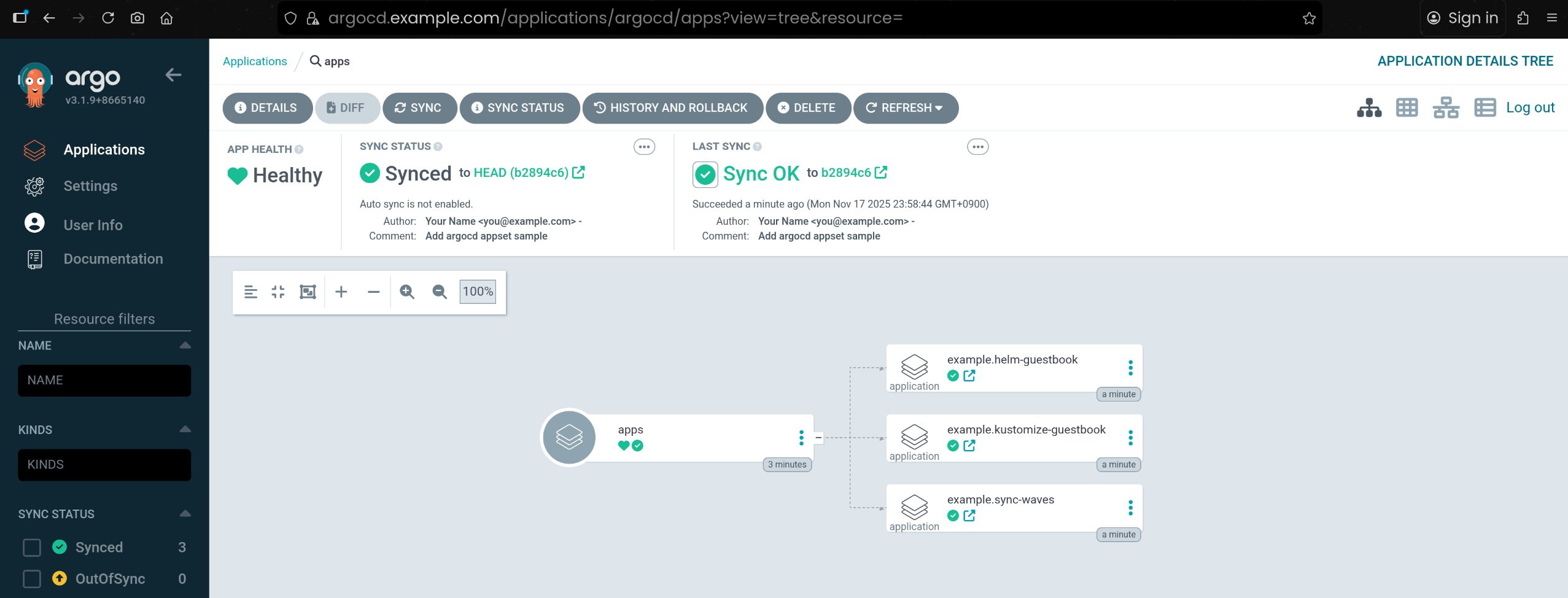

- Create a root Application named apps in the argocd namespace
- Use the apps directory of the https://github.com/Shinminjin/cicd-study.git repository as the source
- Purpose: manage the multiple Application manifests defined under apps in one place
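In this pattern, the apps directory contains ordinary Application manifests, and the root Application simply applies them. The sketch below prints what one such child manifest might look like. The field values are inferred from the `argocd app list` output in this walkthrough (name, repo, path, target revision, auto-prune); the actual files in the repository may differ, and `child_app_manifest` is a hypothetical helper name.

```shell
# Hypothetical sketch of one child Application managed by the root app.
# Argument: <app directory name>, for example helm-guestbook
child_app_manifest() {
  app=$1
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: example.${app}
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/gasida/cicd-study
    targetRevision: main
    path: ${app}
  destination:
    server: https://kubernetes.default.svc
    namespace: ${app}
  syncPolicy:
    automated:
      prune: true
EOF
}

# Usage: child_app_manifest helm-guestbook
```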

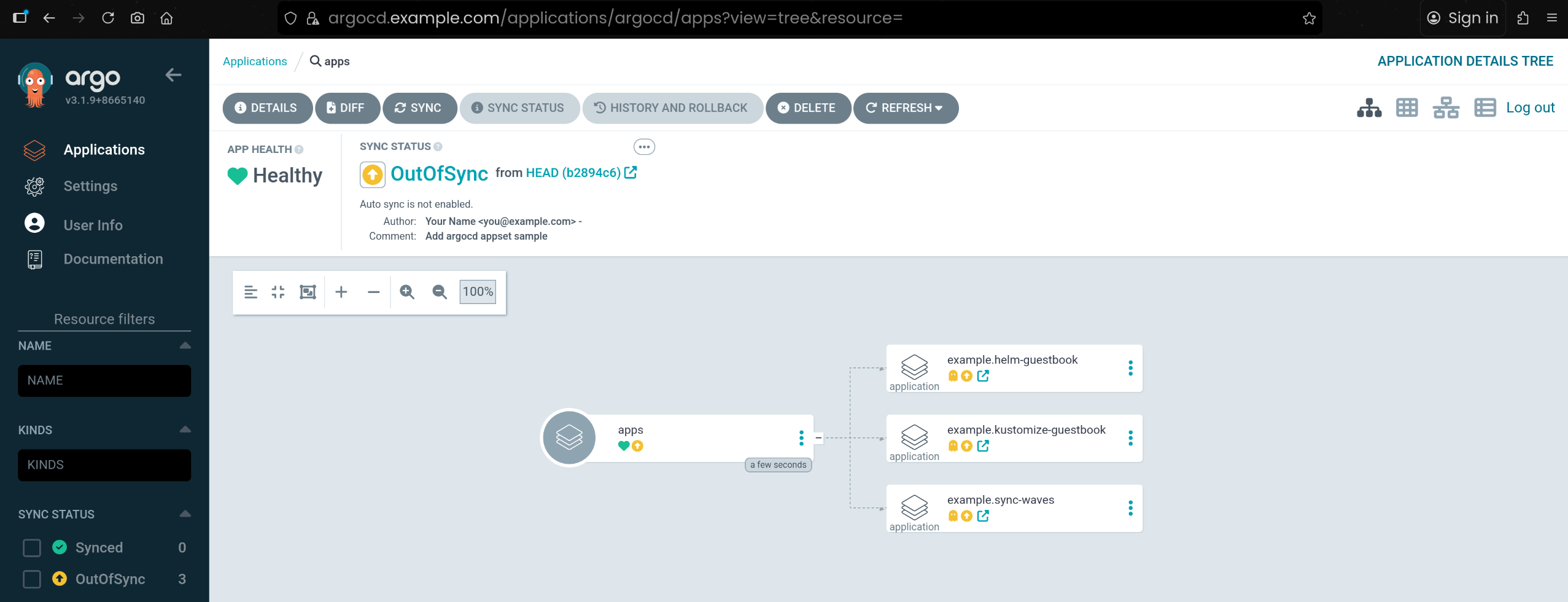

2. Sync the root Application → child Applications are created automatically

| argocd app sync apps

TIMESTAMP GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.helm-guestbook OutOfSync Missing

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.kustomize-guestbook OutOfSync Missing

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.sync-waves OutOfSync Missing

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.helm-guestbook Synced Missing

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.sync-waves OutOfSync Missing application.argoproj.io/example.sync-waves created

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.kustomize-guestbook OutOfSync Missing application.argoproj.io/example.kustomize-guestbook created

2025-11-17T23:58:44+09:00 argoproj.io Application argocd example.helm-guestbook Synced Missing application.argoproj.io/example.helm-guestbook created

Name: argocd/apps

Project: default

Server: https://kubernetes.default.svc

Namespace: argocd

URL: https://argocd.example.com/applications/apps

Source:

- Repo: https://github.com/Shinminjin/cicd-study.git

Target:

Path: apps

SyncWindow: Sync Allowed

Sync Policy: Manual

Sync Status: Synced to (b2894c6)

Health Status: Healthy

Operation: Sync

Sync Revision: b2894c67f7a64e42b408da5825cb0b87ee306b04

Phase: Succeeded

Start: 2025-11-17 23:58:44 +0900 KST

Finished: 2025-11-17 23:58:44 +0900 KST

Duration: 0s

Message: successfully synced (all tasks run)

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

argoproj.io Application argocd example.helm-guestbook Synced application.argoproj.io/example.helm-guestbook created

argoproj.io Application argocd example.sync-waves Synced application.argoproj.io/example.sync-waves created

argoproj.io Application argocd example.kustomize-guestbook Synced application.argoproj.io/example.kustomize-guestbook created

|

3. Check the detailed status of the root Application (apps)

| argocd app list

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/apps https://kubernetes.default.svc argocd default Synced Healthy Manual <none> https://github.com/Shinminjin/cicd-study.git apps

argocd/example.helm-guestbook https://kubernetes.default.svc helm-guestbook default Synced Healthy Auto-Prune <none> https://github.com/gasida/cicd-study helm-guestbook main

argocd/example.kustomize-guestbook https://kubernetes.default.svc kustomize-guestbook default Synced Healthy Auto-Prune <none> https://github.com/gasida/cicd-study kustomize-guestbook main

argocd/example.sync-waves https://kubernetes.default.svc sync-waves default Synced Healthy Auto-Prune <none> https://github.com/gasida/cicd-study sync-waves main

|

4. Verify that the actual Kubernetes resources were created

| kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd argocd-application-controller-0 1/1 Running 0 4h

argocd argocd-applicationset-controller-bbff79c6f-9qcf8 1/1 Running 0 4h

argocd argocd-dex-server-6877ddf4f8-fvfll 1/1 Running 0 4h

argocd argocd-notifications-controller-7b5658fc47-26p24 1/1 Running 0 4h

argocd argocd-redis-7d948674-xnl9k 1/1 Running 0 4h

argocd argocd-repo-server-7679dc55f5-swj2g 1/1 Running 0 4h

argocd argocd-server-7d769b6f48-2ts94 1/1 Running 0 4h

helm-guestbook helm-guestbook-667dffd5cf-f24hj 1/1 Running 0 2m40s

ingress-nginx ingress-nginx-controller-5b89cb54f9-5gvfh 1/1 Running 0 4h3m

kube-system coredns-668d6bf9bc-d5bn7 1/1 Running 0 4h5m

kube-system coredns-668d6bf9bc-vb4p7 1/1 Running 0 4h5m

kube-system etcd-mgmt-control-plane 1/1 Running 0 4h5m

kube-system kindnet-jtm8t 1/1 Running 0 4h5m

kube-system kube-apiserver-mgmt-control-plane 1/1 Running 0 4h5m

kube-system kube-controller-manager-mgmt-control-plane 1/1 Running 0 4h5m

kube-system kube-proxy-b9pmh 1/1 Running 0 4h5m

kube-system kube-scheduler-mgmt-control-plane 1/1 Running 0 4h5m

kustomize-guestbook kustomize-guestbook-ui-85db984648-mzc87 1/1 Running 0 2m40s

local-path-storage local-path-provisioner-7dc846544d-wltkn 1/1 Running 0 4h5m

sync-waves backend-z4kpq 1/1 Running 0 2m28s

sync-waves frontend-x8xjc 1/1 Running 0 108s

sync-waves maint-page-down-scbbs 0/1 Completed 0 105s

sync-waves maint-page-up-tr6d2 0/1 Completed 0 115s

sync-waves upgrade-sql-schemab2894c6-presync-1763391525-5qk25 0/1 Completed 0 2m41s

|

5. Clean up App of Apps by deleting the root Application

| argocd app delete argocd/apps --yes

application 'argocd/apps' deleted

|

📦 ApplicationSet List generator hands-on

1. Create an ApplicationSet based on the List generator

| echo $DEVK8SIP $PRDK8SIP

172.18.0.3 172.18.0.4

|

| cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  goTemplate: true
  goTemplateOptions: ["missingkey=error"]
  generators:
  - list:
      elements:
      - cluster: dev-k8s
        url: https://$DEVK8SIP:6443
      - cluster: prd-k8s
        url: https://$PRDK8SIP:6443
  template:
    metadata:
      name: '{{.cluster}}-guestbook'
      labels:
        environment: '{{.cluster}}'
        managed-by: applicationset
    spec:
      project: default
      source:
        repoURL: https://github.com/Shinminjin/cicd-study.git
        targetRevision: HEAD
        path: appset/list/{{.cluster}}
      destination:
        server: '{{.url}}'
        namespace: guestbook
      syncPolicy:
        syncOptions:
        - CreateNamespace=true
EOF
applicationset.argoproj.io/guestbook created
|

2. Check the guestbook ApplicationSet definition

| kubectl get applicationsets -n argocd guestbook -o yaml | kubectl neat | yq

{

"apiVersion": "argoproj.io/v1alpha1",

"kind": "ApplicationSet",

"metadata": {

"name": "guestbook",

"namespace": "argocd"

},

"spec": {

"generators": [

{

"list": {

"elements": [

{

"cluster": "dev-k8s",

"url": "https://172.18.0.3:6443"

},

{

"cluster": "prd-k8s",

"url": "https://172.18.0.4:6443"

}

]

}

}

],

"goTemplate": true,

"goTemplateOptions": [

"missingkey=error"

],

"template": {

"metadata": {

"labels": {

"environment": "{{.cluster}}",

"managed-by": "applicationset"

},

"name": "{{.cluster}}-guestbook"

},

"spec": {

"destination": {

"namespace": "guestbook",

"server": "{{.url}}"

},

"project": "default",

"source": {

"path": "appset/list/{{.cluster}}",

"repoURL": "https://github.com/Shinminjin/cicd-study.git",

"targetRevision": "HEAD"

},

"syncPolicy": {

"syncOptions": [

"CreateNamespace=true"

]

}

}

}

}

}

|

3. Check the ApplicationSet resource and status

| kubectl get applicationsets -n argocd

NAME AGE

guestbook 108s

|

| argocd appset list

NAME PROJECT SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/guestbook default nil [{ParametersGenerated Successfully generated parameters for all Applications 2025-11-18 00:09:45 +0900 KST True ParametersGenerated} {ResourcesUpToDate ApplicationSet up to date 2025-11-18 00:09:45 +0900 KST True ApplicationSetUpToDate}] https://github.com/Shinminjin/cicd-study.git appset/list/ HEAD

|

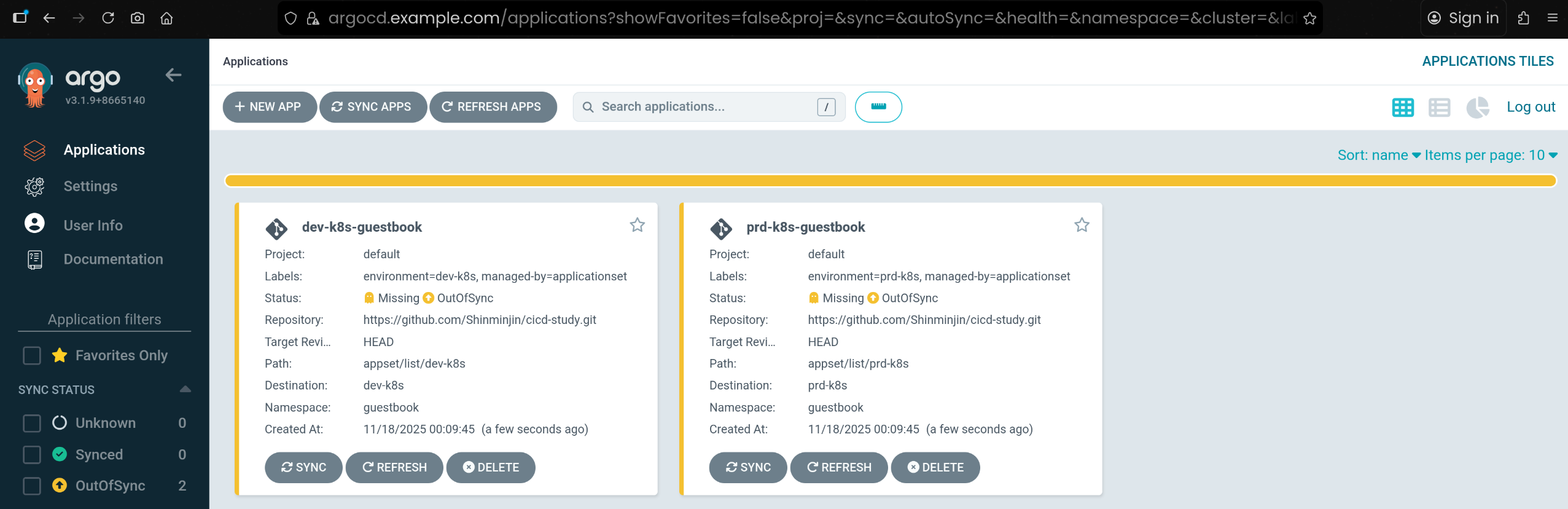

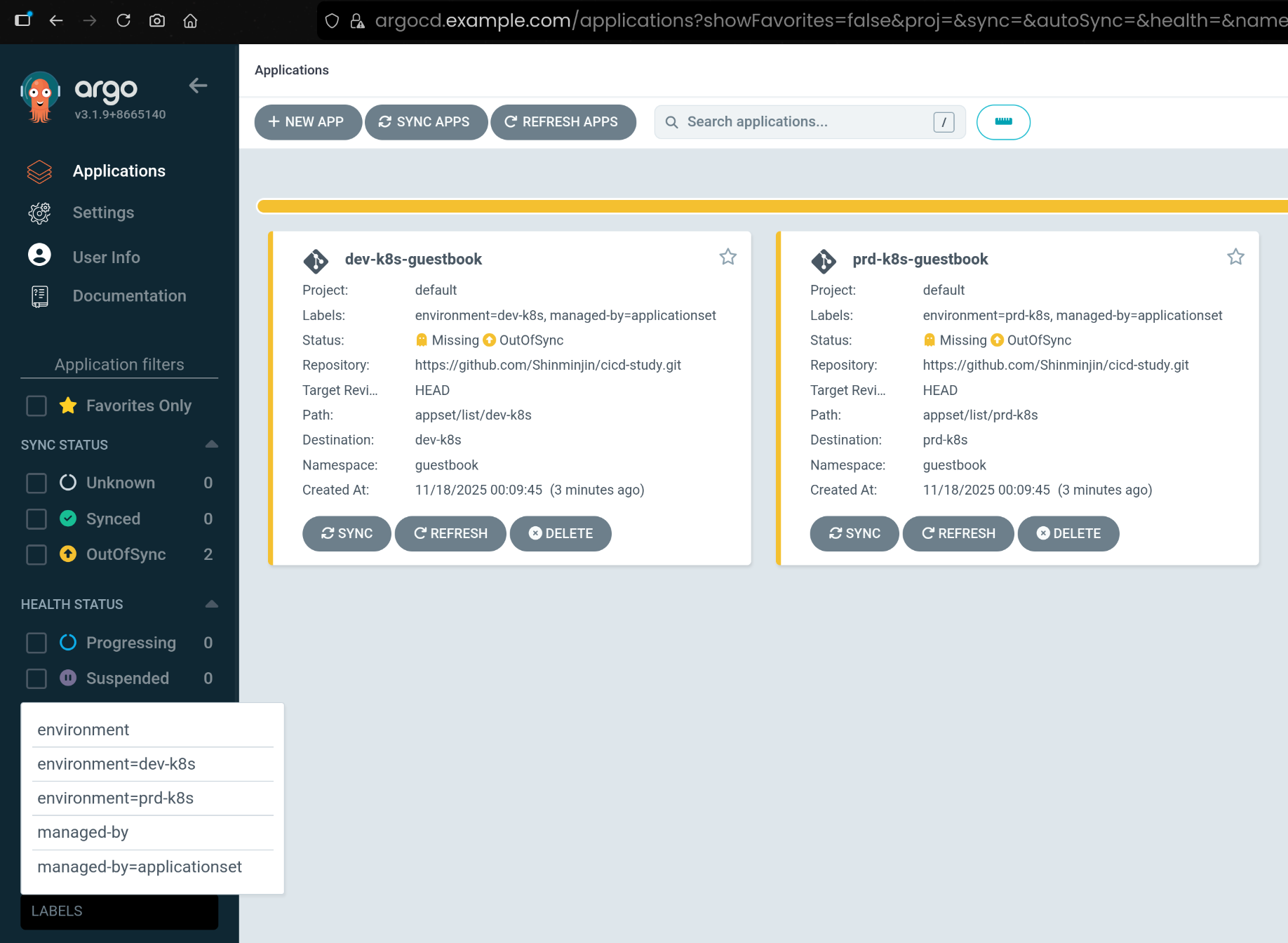

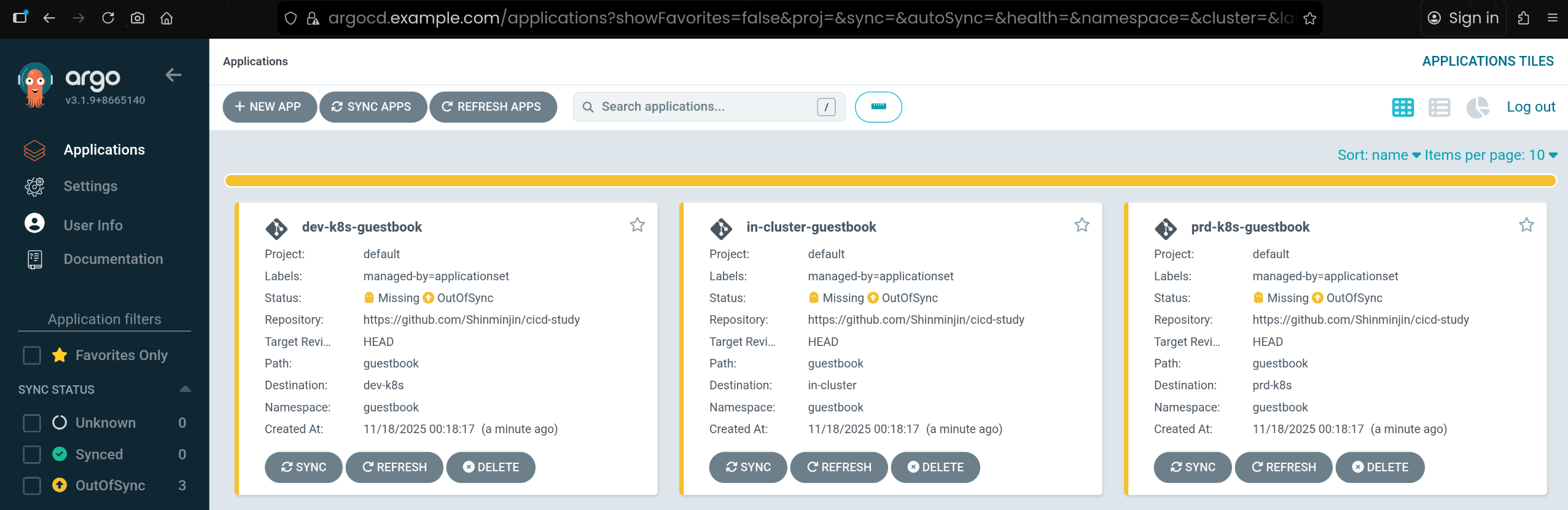

4. List the Applications created by the ApplicationSet

| argocd app list

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/dev-k8s-guestbook https://172.18.0.3:6443 guestbook default OutOfSync Missing Manual <none> https://github.com/Shinminjin/cicd-study.git appset/list/dev-k8s HEAD

argocd/prd-k8s-guestbook https://172.18.0.4:6443 guestbook default OutOfSync Missing Manual <none> https://github.com/Shinminjin/cicd-study.git appset/list/prd-k8s HEAD

|

- Confirm the two Applications created by the ApplicationSet (dev-k8s-guestbook, prd-k8s-guestbook)
- They start out as OutOfSync / Missing because no sync has run yet
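Conceptually, the List generator emits one parameter set per element, and the template is rendered once per set. The sketch below imitates that substitution for the three fields that vary per cluster in the resulting Applications; it is an illustration of the idea rather than how the ApplicationSet controller is implemented, and `render_list_element` is a hypothetical name.

```shell
# Hypothetical emulation of one List generator element being rendered.
# Arguments: <cluster> <url>, matching the keys of each list element.
render_list_element() {
  cluster=$1 url=$2
  printf 'name: %s-guestbook\n' "$cluster"      # metadata.name
  printf 'path: appset/list/%s\n' "$cluster"    # spec.source.path
  printf 'server: %s\n' "$url"                  # spec.destination.server
}

# Usage:
#   render_list_element dev-k8s https://172.18.0.3:6443
#   render_list_element prd-k8s https://172.18.0.4:6443
```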

5. Filter by the label assigned by the ApplicationSet

| argocd app list -l managed-by=applicationset

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/dev-k8s-guestbook https://172.18.0.3:6443 guestbook default OutOfSync Missing Manual <none> https://github.com/Shinminjin/cicd-study.git appset/list/dev-k8s HEAD

argocd/prd-k8s-guestbook https://172.18.0.4:6443 guestbook default OutOfSync Missing Manual <none> https://github.com/Shinminjin/cicd-study.git appset/list/prd-k8s HEAD

|

- Filter the Argo CD app list by the managed-by=applicationset label assigned in the Application template

| kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

dev-k8s-guestbook OutOfSync Missing

prd-k8s-guestbook OutOfSync Missing

|

| kubectl get applications -n argocd --show-labels

NAME SYNC STATUS HEALTH STATUS LABELS

dev-k8s-guestbook OutOfSync Missing environment=dev-k8s,managed-by=applicationset

prd-k8s-guestbook OutOfSync Missing environment=prd-k8s,managed-by=applicationset

|

- Confirm from the Kubernetes resource side that the labels were applied to the Application CRs

6. Bulk sync using a label selector

| argocd app sync -l managed-by=applicationset

TIMESTAMP GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

2025-11-18T00:14:46+09:00 Service guestbook guestbook-ui OutOfSync Missing

2025-11-18T00:14:46+09:00 apps Deployment guestbook guestbook-ui OutOfSync Missing

2025-11-18T00:14:46+09:00 Namespace guestbook Running Synced namespace/guestbook created

Name: argocd/dev-k8s-guestbook

Project: default

Server: https://172.18.0.3:6443

Namespace: guestbook

URL: https://argocd.example.com/applications/argocd/dev-k8s-guestbook

Source:

- Repo: https://github.com/Shinminjin/cicd-study.git

Target: HEAD

Path: appset/list/dev-k8s

SyncWindow: Sync Allowed

Sync Policy: Manual

Sync Status: Synced to HEAD (b2894c6)

Health Status: Progressing

Operation: Sync

Sync Revision: b2894c67f7a64e42b408da5825cb0b87ee306b04

Phase: Succeeded

Start: 2025-11-18 00:14:46 +0900 KST

Finished: 2025-11-18 00:14:46 +0900 KST

Duration: 0s

Message: successfully synced (all tasks run)

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

Namespace guestbook Running Synced namespace/guestbook created

Service guestbook guestbook-ui Synced Healthy service/guestbook-ui created

apps Deployment guestbook guestbook-ui Synced Progressing deployment.apps/guestbook-ui created

TIMESTAMP GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

2025-11-18T00:14:47+09:00 apps Deployment guestbook guestbook-ui OutOfSync Missing

2025-11-18T00:14:47+09:00 Service guestbook guestbook-ui OutOfSync Missing

2025-11-18T00:14:47+09:00 Namespace guestbook Running Synced namespace/guestbook created

Name: argocd/prd-k8s-guestbook

Project: default

Server: https://172.18.0.4:6443

Namespace: guestbook

URL: https://argocd.example.com/applications/argocd/prd-k8s-guestbook

Source:

- Repo: https://github.com/Shinminjin/cicd-study.git

Target: HEAD

Path: appset/list/prd-k8s

SyncWindow: Sync Allowed

Sync Policy: Manual

Sync Status: Synced to HEAD (b2894c6)

Health Status: Progressing

Operation: Sync

Sync Revision: b2894c67f7a64e42b408da5825cb0b87ee306b04

Phase: Succeeded

Start: 2025-11-18 00:14:47 +0900 KST

Finished: 2025-11-18 00:14:47 +0900 KST

Duration: 0s

Message: successfully synced (all tasks run)

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

Namespace guestbook Running Synced namespace/guestbook created

Service guestbook guestbook-ui Synced Healthy service/guestbook-ui created

apps Deployment guestbook guestbook-ui Synced Progressing deployment.apps/guestbook-ui created

|

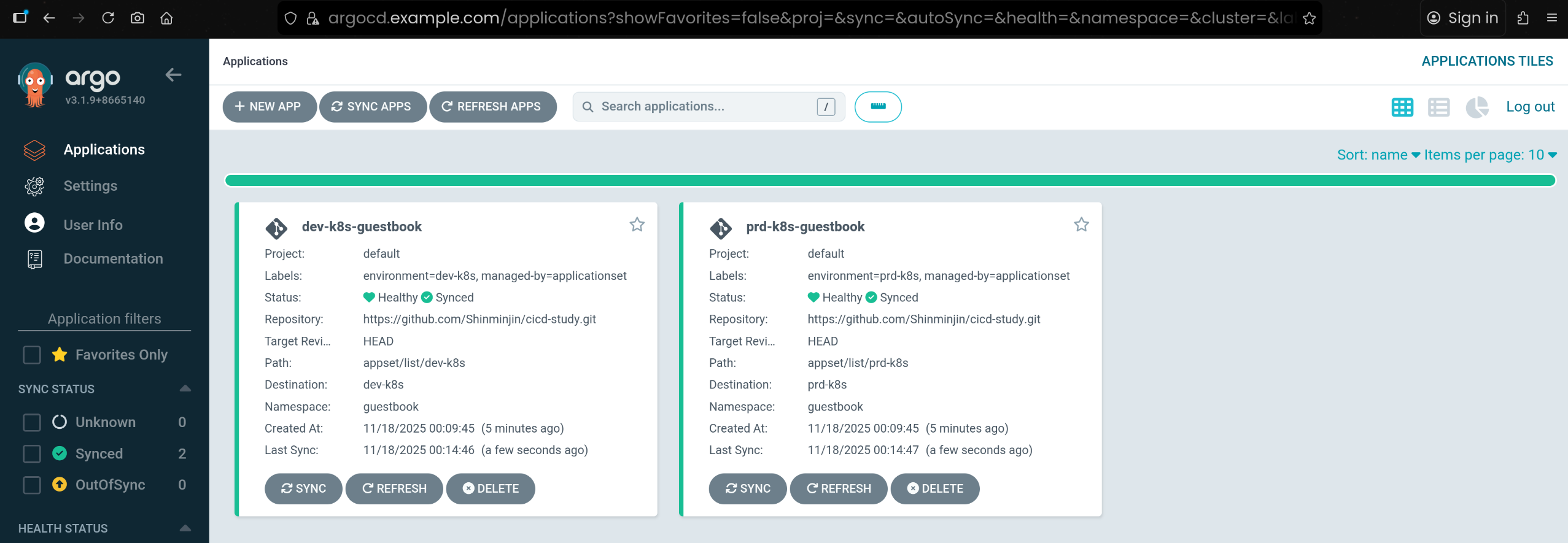

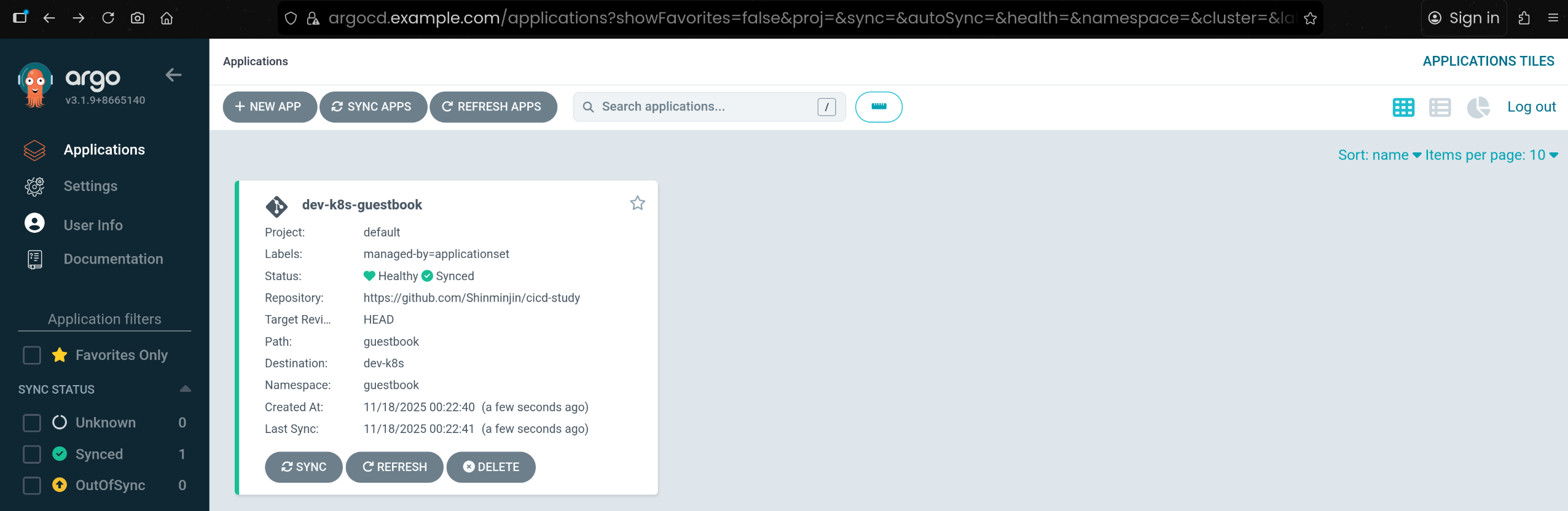

- Sync both guestbook apps at once, selected by the managed-by=applicationset label
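Right after the sync, both apps still report Health Status: Progressing. A minimal sketch of syncing by label and then blocking until the apps are healthy is shown below. It assumes `argocd app wait` accepts the same `-l` selector as `argocd app sync`, along with `--health` and `--timeout`; `sync_and_wait` and the `ARGOCD` override variable are hypothetical names introduced here.

```shell
# Hypothetical helper: sync every app matching a label, then wait for health.
# Arguments: <label selector> [timeout in seconds, default 120]
# Set ARGOCD=echo to preview the commands without contacting Argo CD.
sync_and_wait() {
  selector=$1
  timeout=${2:-120}
  "${ARGOCD:-argocd}" app sync -l "$selector" &&
    "${ARGOCD:-argocd}" app wait -l "$selector" --health --timeout "$timeout"
}

# Usage:
#   sync_and_wait managed-by=applicationset
```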

7. Check Application and resource status after the sync

| k8s2 get pod -n guestbook

NAME READY STATUS RESTARTS AGE

guestbook-ui-7cf4fd7cb9-m7l46 1/1 Running 0 118s

|

| k8s3 get pod -n guestbook

NAME READY STATUS RESTARTS AGE

guestbook-ui-7cf4fd7cb9-9gkql 1/1 Running 0 2m6s

guestbook-ui-7cf4fd7cb9-hbf9s 1/1 Running 0 2m6s

|

- Check the status of the guestbook Pods actually deployed to the dev/prd clusters

8. Inspect the generated Application manifests

| kubectl get applications -n argocd dev-k8s-guestbook -o yaml | kubectl neat | yq

{

"apiVersion": "argoproj.io/v1alpha1",

"kind": "Application",

"metadata": {

"labels": {

"environment": "dev-k8s",

"managed-by": "applicationset"

},

"name": "dev-k8s-guestbook",

"namespace": "argocd"

},

"spec": {

"destination": {

"namespace": "guestbook",

"server": "https://172.18.0.3:6443"

},

"project": "default",

"source": {

"path": "appset/list/dev-k8s",

"repoURL": "https://github.com/Shinminjin/cicd-study.git",

"targetRevision": "HEAD"

},

"syncPolicy": {

"syncOptions": [

"CreateNamespace=true"

]

}

}

}

|

| kubectl get applications -n argocd prd-k8s-guestbook -o yaml | kubectl neat | yq

{

"apiVersion": "argoproj.io/v1alpha1",

"kind": "Application",

"metadata": {

"labels": {

"environment": "prd-k8s",

"managed-by": "applicationset"

},

"name": "prd-k8s-guestbook",

"namespace": "argocd"

},

"spec": {

"destination": {

"namespace": "guestbook",

"server": "https://172.18.0.4:6443"

},

"project": "default",

"source": {

"path": "appset/list/prd-k8s",

"repoURL": "https://github.com/Shinminjin/cicd-study.git",

"targetRevision": "HEAD"

},

"syncPolicy": {

"syncOptions": [

"CreateNamespace=true"

]

}

}

}

|

9. Clean up by deleting the ApplicationSet

| argocd appset delete guestbook --yes

applicationset 'guestbook' deleted

|

🌍 ApplicationSet Cluster generator hands-on

1. Deploy to all three clusters at once with the Cluster generator

| cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  goTemplate: true
  goTemplateOptions: ["missingkey=error"]
  generators:
  - clusters: {}
  template:
    metadata:
      name: '{{.name}}-guestbook'
      labels:
        managed-by: applicationset
    spec:
      project: "default"
      source:
        repoURL: https://github.com/Shinminjin/cicd-study
        targetRevision: HEAD
        path: guestbook
      destination:
        server: '{{.server}}'
        namespace: guestbook
      syncPolicy:
        syncOptions:
        - CreateNamespace=true
EOF
applicationset.argoproj.io/guestbook created
|

- Use the clusters: {} generator to deploy the guestbook application to every cluster registered in Argo CD
- The template uses each cluster's name (.name) and API server address (.server), so an Application is generated automatically per cluster
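For the registered remote clusters, the name and server values come from the cluster secrets in the argocd namespace, where they are stored base64-encoded under `.data`. A minimal sketch for inspecting them follows; `decode_field` is a hypothetical helper name, and the loop in the usage comment assumes the secrets expose `name` and `server` keys.

```shell
# Hypothetical helper: decode one base64-encoded value taken from a Secret's .data.
decode_field() {
  printf '%s' "$1" | base64 --decode
}

# Usage (assumes kubectl points at the mgmt cluster):
#   for s in $(kubectl get secret -n argocd -l argocd.argoproj.io/secret-type=cluster -o name); do
#     name=$(kubectl get -n argocd "$s" -o jsonpath='{.data.name}')
#     server=$(kubectl get -n argocd "$s" -o jsonpath='{.data.server}')
#     printf '%s -> %s\n' "$(decode_field "$name")" "$(decode_field "$server")"
#   done
```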

2. Remove the Cluster generator ApplicationSet

| argocd appset delete guestbook --yes

applicationset 'guestbook' deleted

|

3. Prepare to target only the dev cluster (add a label to the cluster secret)

| kubectl get secret -n argocd -l argocd.argoproj.io/secret-type=cluster

NAME TYPE DATA AGE

cluster-172.18.0.3-4100004299 Opaque 3 51m

cluster-172.18.0.4-568336172 Opaque 3 44m

|

- List the cluster secrets managed by Argo CD to identify the dev/prd clusters

| DEVK8S=cluster-172.18.0.3-4100004299

kubectl label secrets $DEVK8S -n argocd env=stg

secret/cluster-172.18.0.3-4100004299 labeled

|

- Store the name of the secret for the dev cluster in a variable and add the env=stg label to it

| kubectl get secret -n argocd -l env=stg

NAME TYPE DATA AGE

cluster-172.18.0.3-4100004299 Opaque 3 53m

|

4. Create a Cluster generator ApplicationSet based on a label selector

| cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  goTemplate: true
  goTemplateOptions: ["missingkey=error"]
  generators:
  - clusters:
      selector:
        matchLabels:
          env: "stg"
  template:
    metadata:
      name: '{{.name}}-guestbook'
      labels:
        managed-by: applicationset
    spec:
      project: "default"
      source:
        repoURL: https://github.com/Shinminjin/cicd-study
        targetRevision: HEAD
        path: guestbook
      destination:
        server: '{{.server}}'
        namespace: guestbook
      syncPolicy:
        syncOptions:
        - CreateNamespace=true
        automated:
          prune: true
          selfHeal: true
EOF
applicationset.argoproj.io/guestbook created
|

5. Clean up the label-based Cluster ApplicationSet exercise

| argocd appset delete guestbook --yes

applicationset 'guestbook' deleted

|

🔐 Deploying Keycloak as a Pod

1. Prepare the Keycloak deployment with a Deployment, Service, and Ingress

| cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: keycloak
  labels:
    app: keycloak
spec:
  replicas: 1
  selector:
    matchLabels:
      app: keycloak
  template:
    metadata:
      labels:
        app: keycloak
    spec:
      containers:
      - name: keycloak
        image: quay.io/keycloak/keycloak:26.4.0
        args: ["start-dev"] # run in dev mode
        env:
        - name: KEYCLOAK_ADMIN
          value: admin
        - name: KEYCLOAK_ADMIN_PASSWORD
          value: admin
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: keycloak
spec:
  selector:
    app: keycloak
  ports:
  - name: http
    port: 80
    targetPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: keycloak
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  ingressClassName: nginx
  rules:
  - host: keycloak.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: keycloak
            port:
              number: 8080
EOF
deployment.apps/keycloak created
service/keycloak created
ingress.networking.k8s.io/keycloak created
|

2. Check the Keycloak resource status

| kubectl get deploy,svc,ep keycloak

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/keycloak 1/1 1 1 40s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/keycloak ClusterIP 10.96.249.212 <none> 80/TCP 40s

NAME ENDPOINTS AGE

endpoints/keycloak 10.244.0.25:8080 40s

|

| kubectl get ingress keycloak

NAME CLASS HOSTS ADDRESS PORTS AGE

keycloak nginx keycloak.example.com localhost 80 58s

|

3. Verify access to the Keycloak domain via Ingress + /etc/hosts

| curl -s -H "Host: keycloak.example.com" http://127.0.0.1 -I

HTTP/1.1 302 Found

Date: Tue, 18 Nov 2025 12:02:15 GMT

Connection: keep-alive

Location: http://keycloak.example.com/admin/

Referrer-Policy: no-referrer

Strict-Transport-Security: max-age=31536000; includeSubDomains

X-Content-Type-Options: nosniff

|

| echo "127.0.0.1 keycloak.example.com" | sudo tee -a /etc/hosts

127.0.0.1 keycloak.example.com

|
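Running the `tee -a` command above more than once appends duplicate lines to /etc/hosts. A minimal sketch of an idempotent variant follows; `ensure_hosts_entry` is a hypothetical helper name, and it takes the file path as an argument so it can be tried on a copy first.

```shell
# Hypothetical helper: append "<ip> <host>" to a hosts file only if no
# non-comment line already lists that host name.
# Arguments: <hosts file> <ip> <host>
ensure_hosts_entry() {
  file=$1 ip=$2 host=$3
  if [ -f "$file" ] && awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }' "$file"; then
    return 0                                  # entry already present
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Usage: writing to /etc/hosts itself needs root, as with the sudo tee -a above.
#   ensure_hosts_entry ./hosts.copy 127.0.0.1 keycloak.example.com
```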

| curl -s http://keycloak.example.com -I

HTTP/1.1 302 Found

Date: Tue, 18 Nov 2025 12:04:40 GMT

Connection: keep-alive

Location: http://keycloak.example.com/admin/

Referrer-Policy: no-referrer

Strict-Transport-Security: max-age=31536000; includeSubDomains

X-Content-Type-Options: nosniff

|

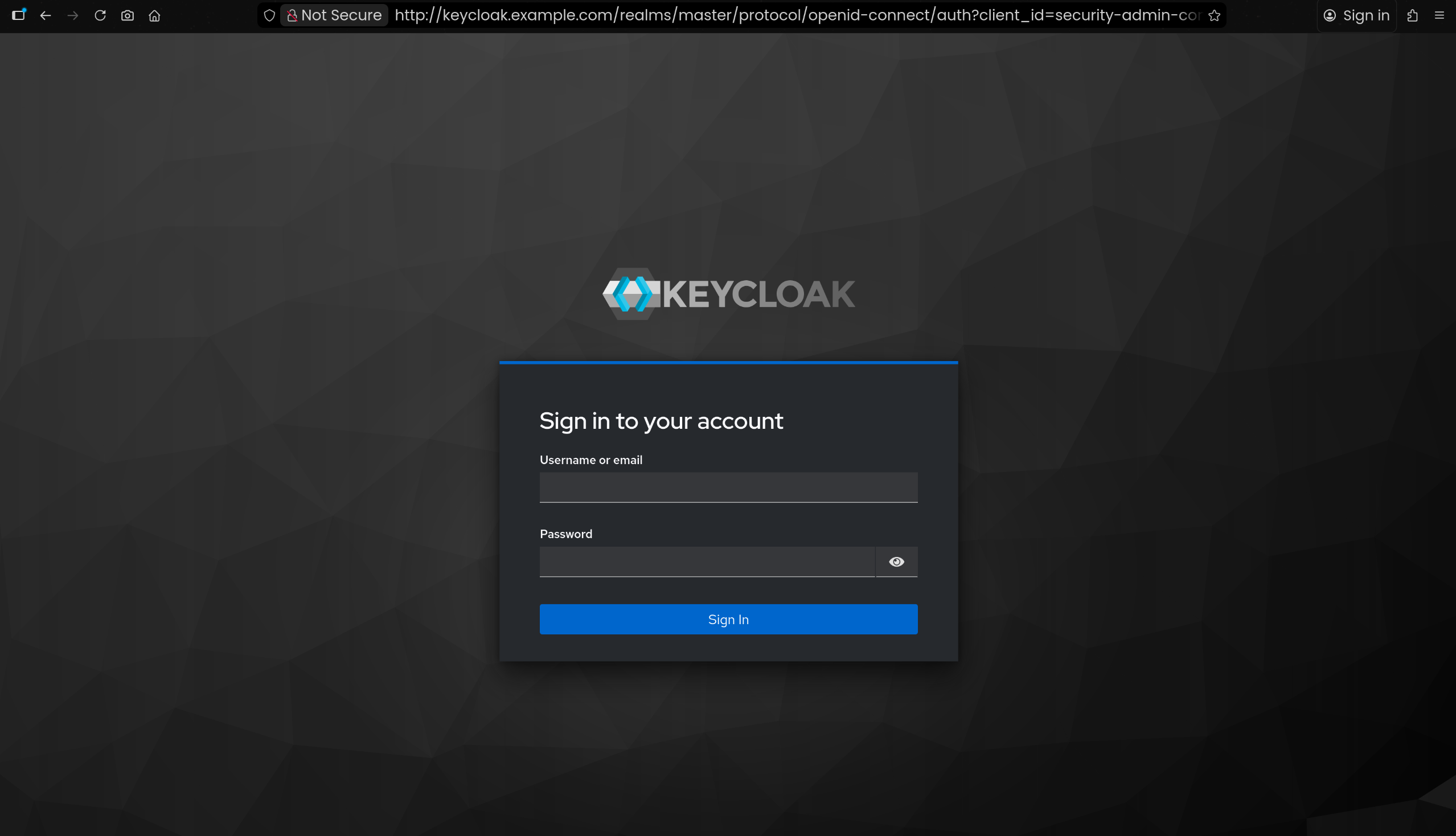

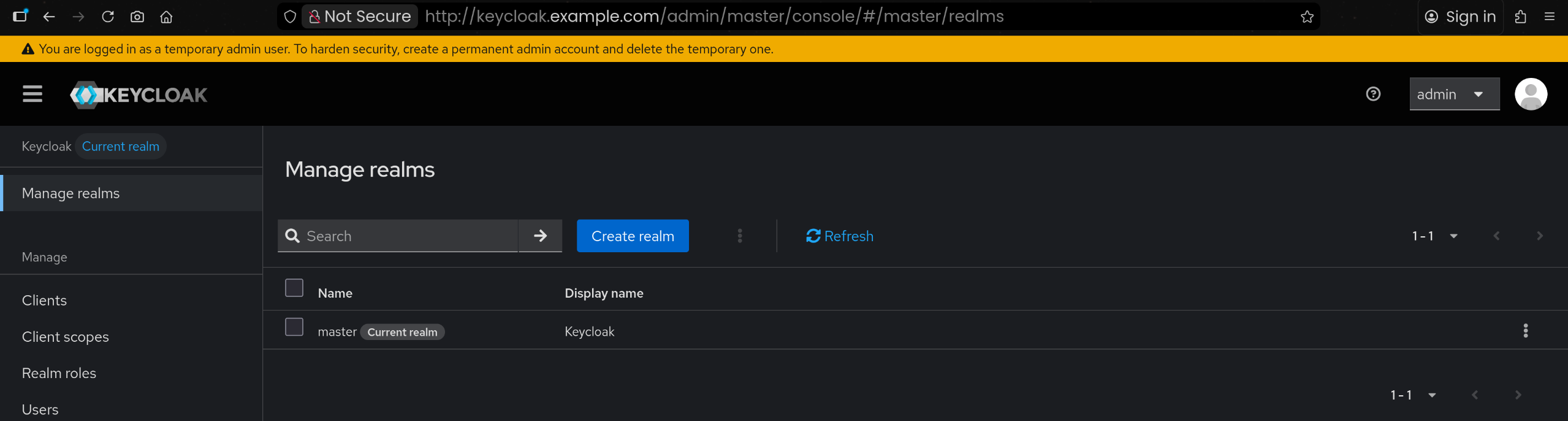

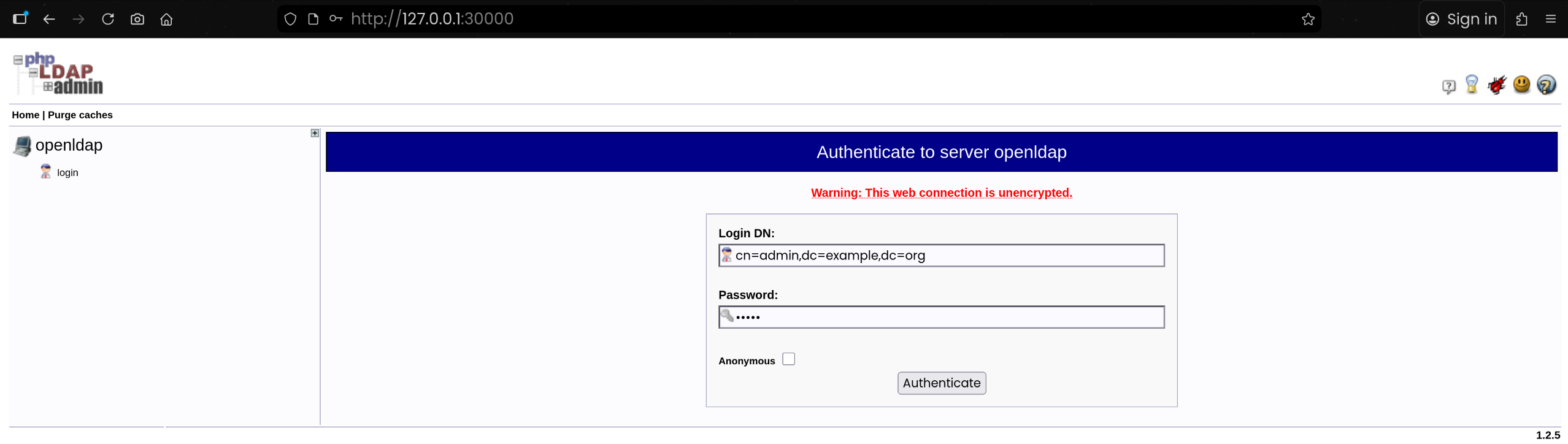

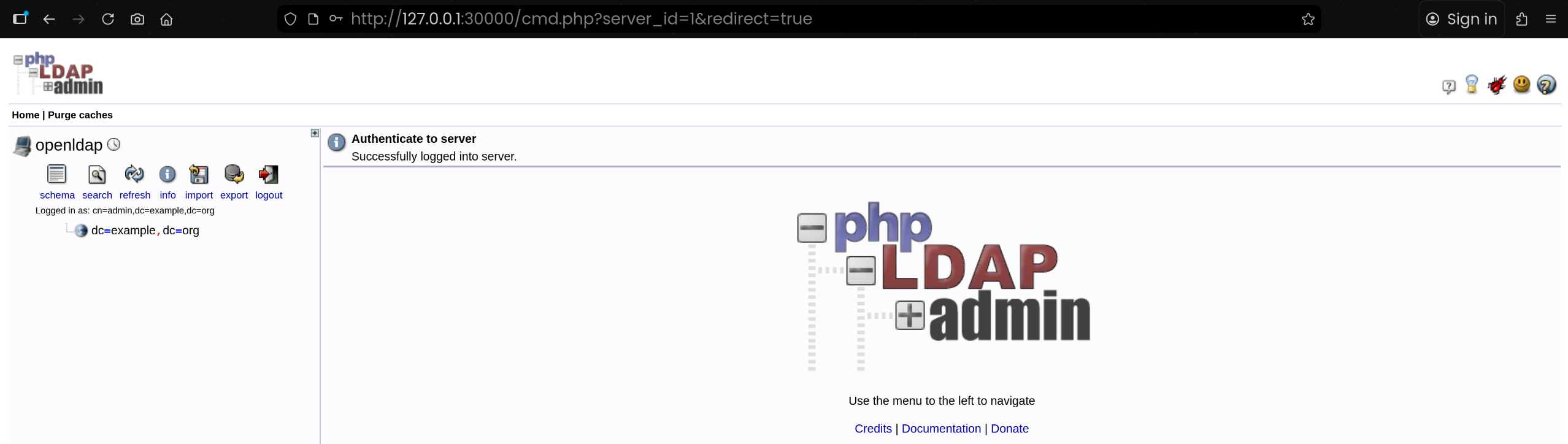

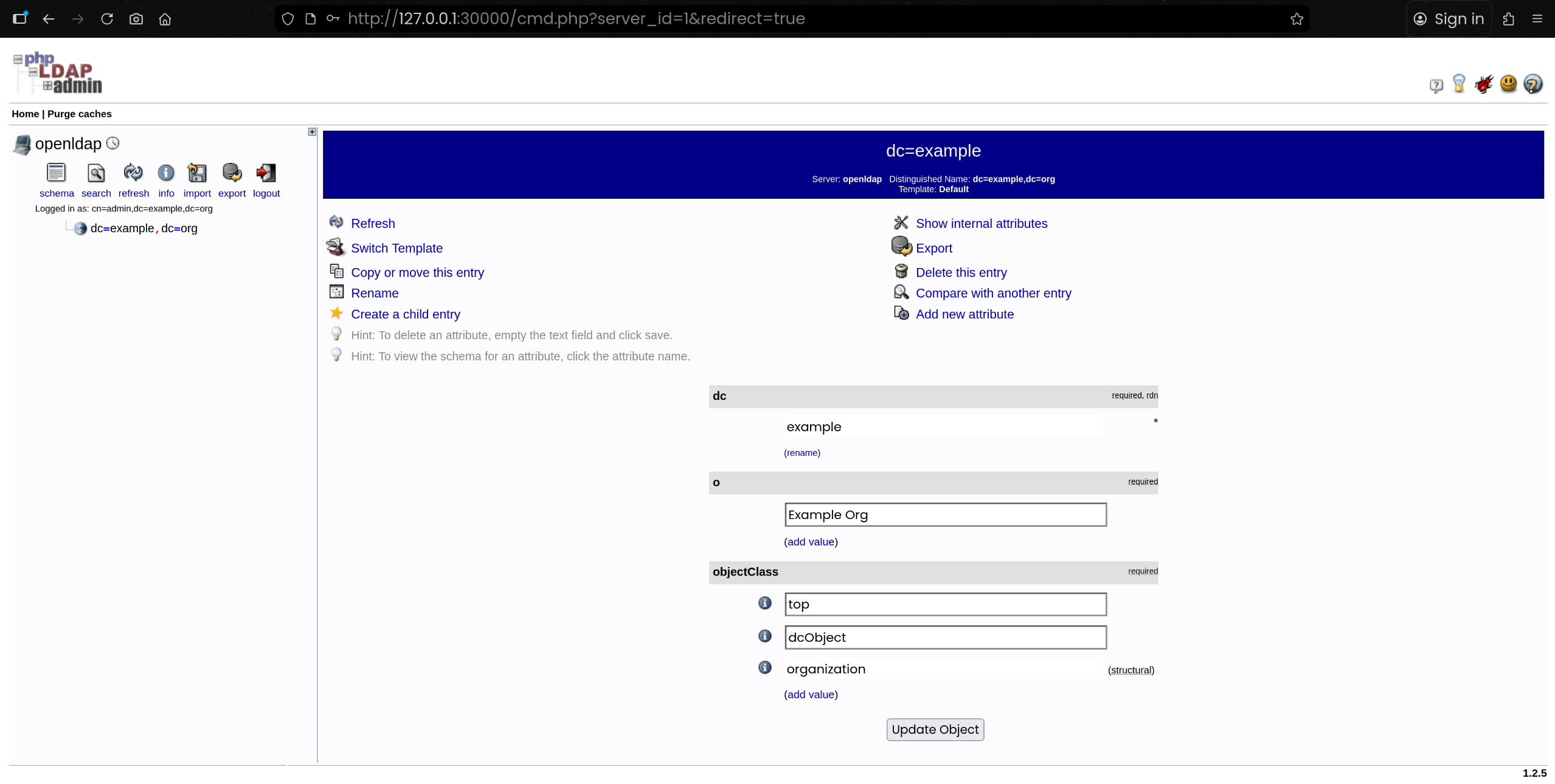

4. Open the Keycloak Admin console and log in

| http://keycloak.example.com/admin

username, password: admin / admin

|

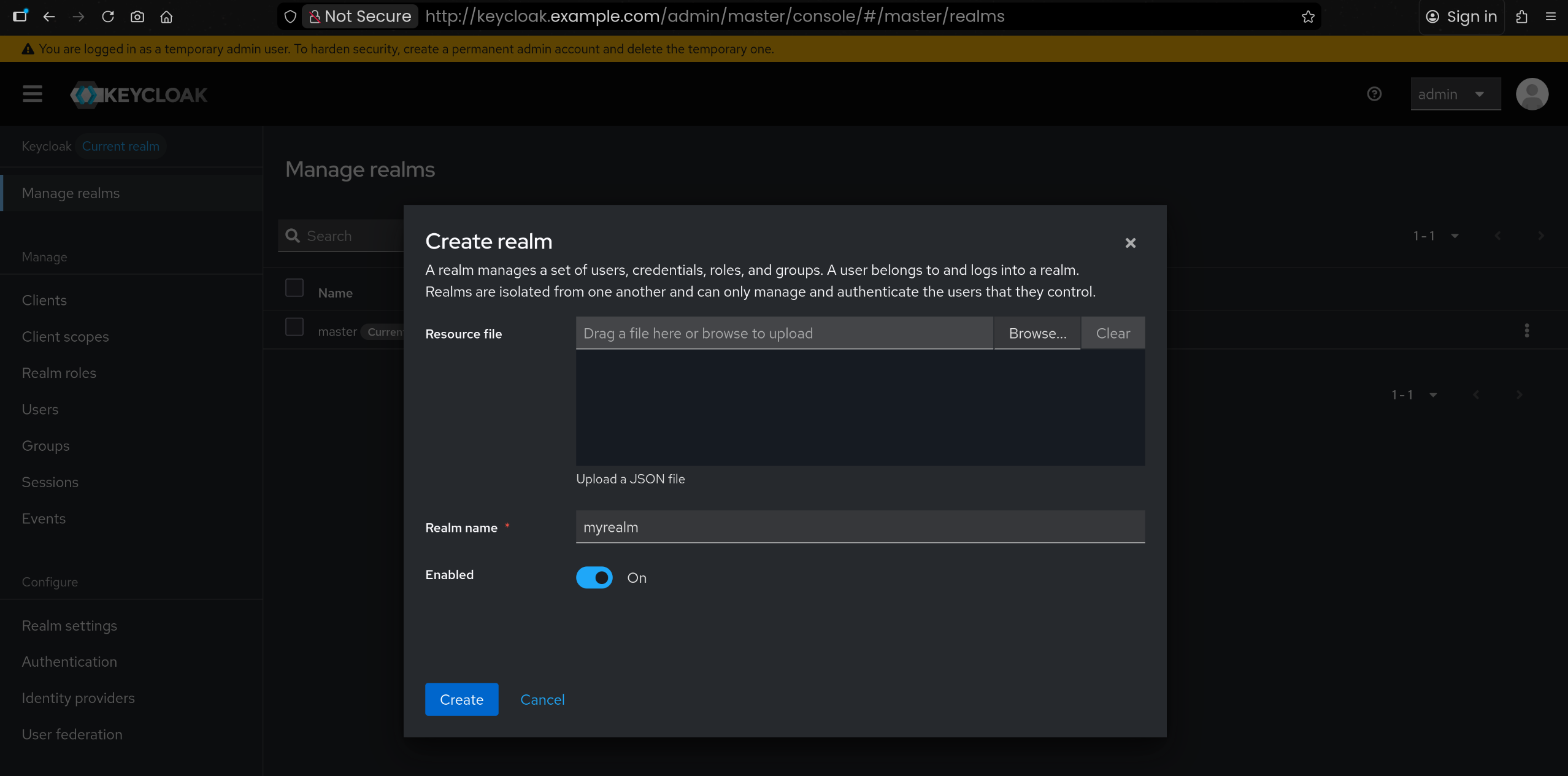

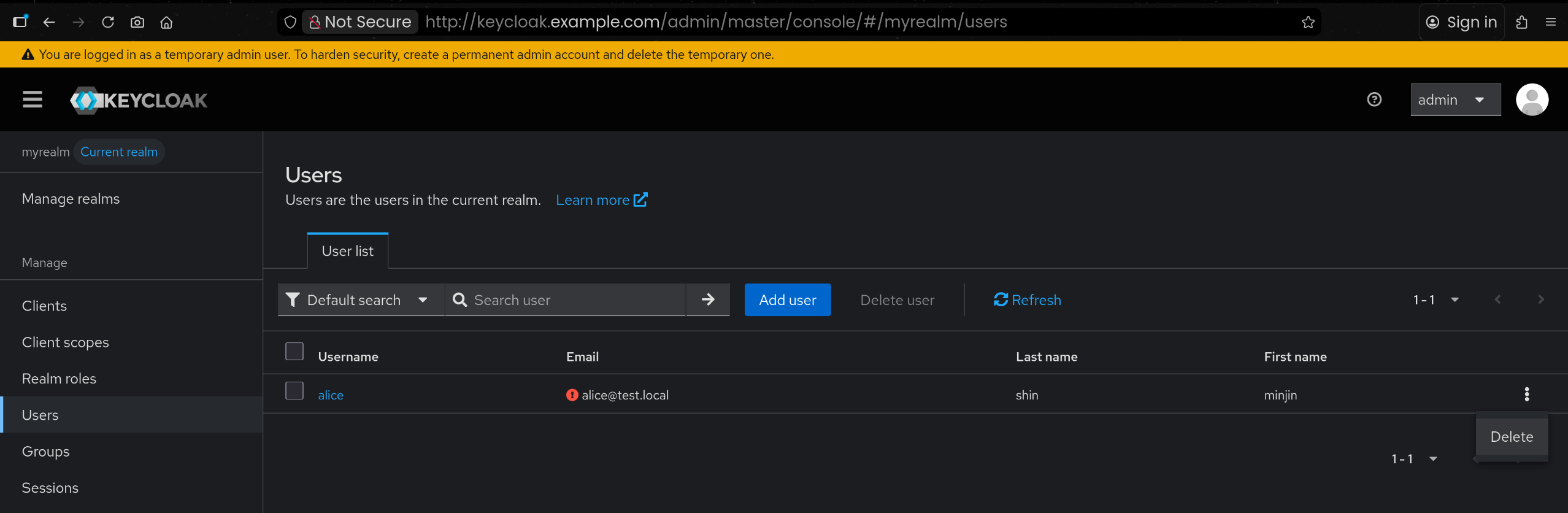

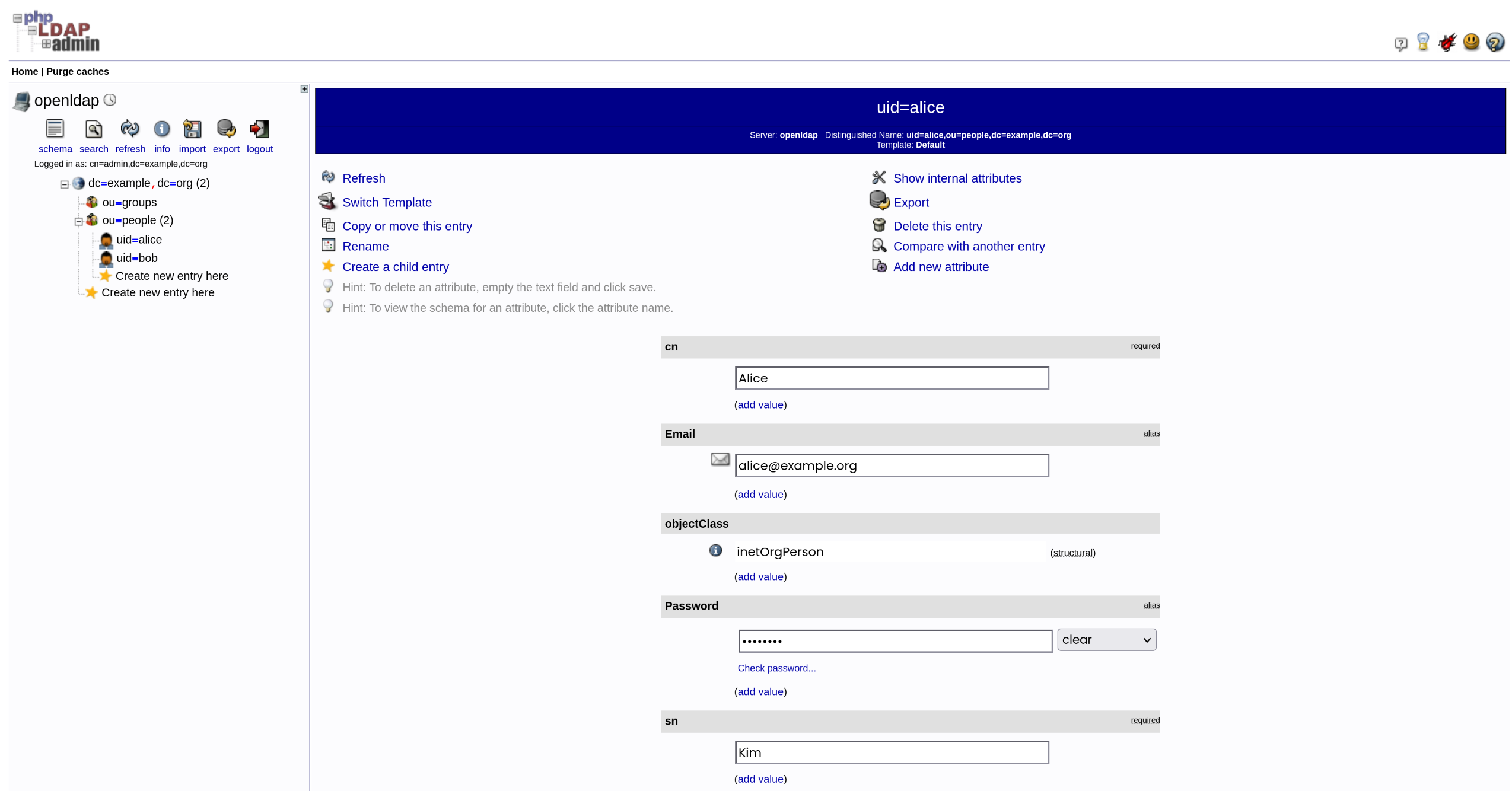

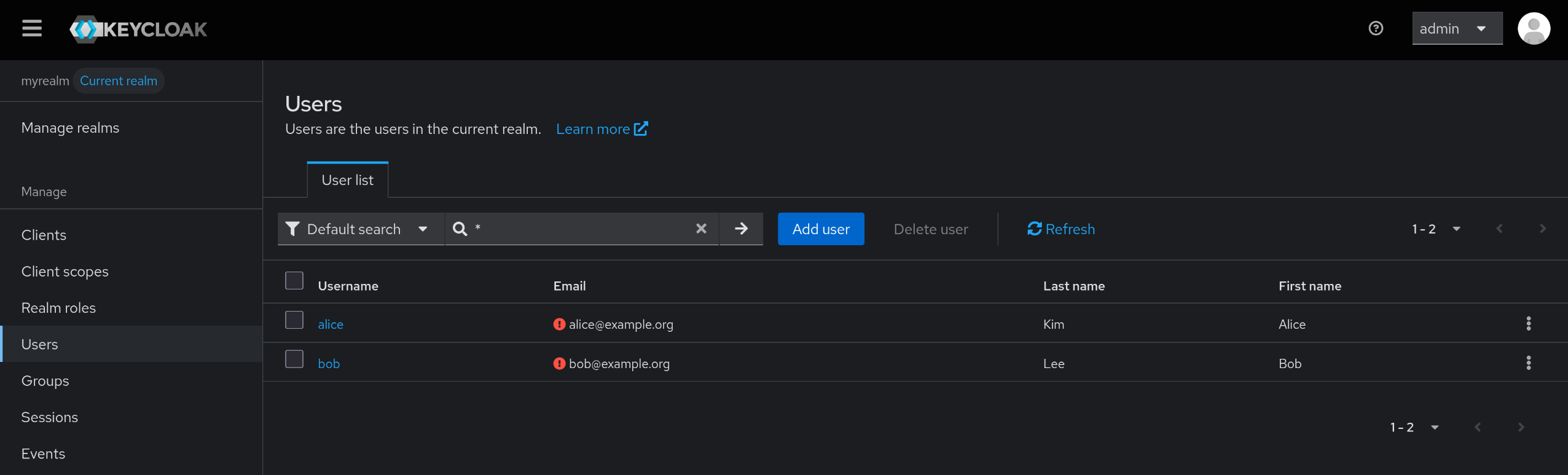

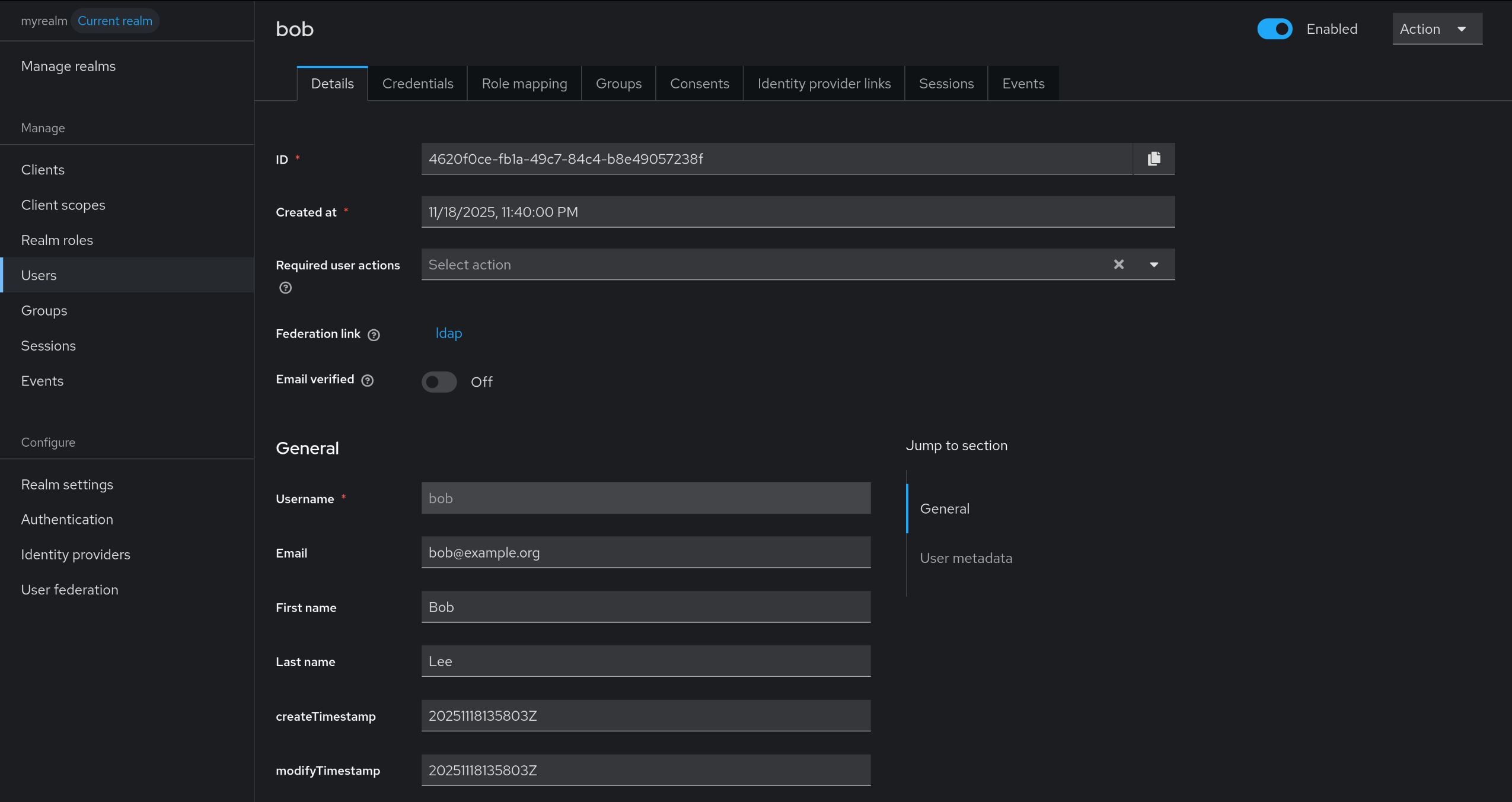

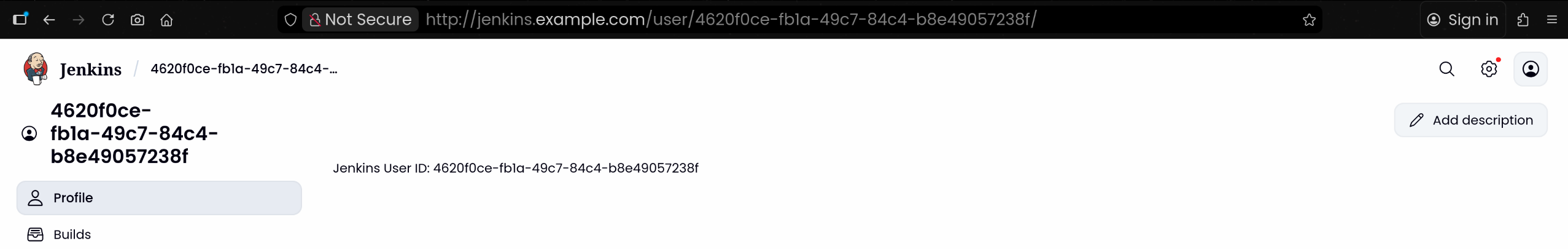

5. Create a new realm: myrealm

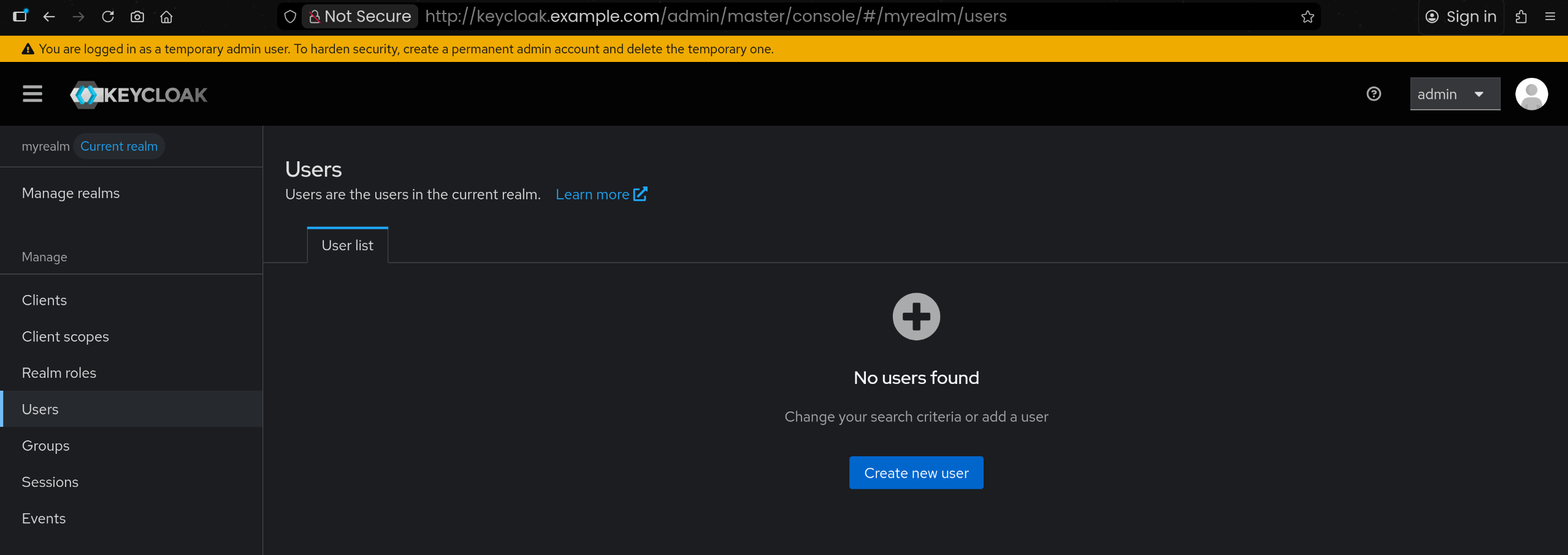

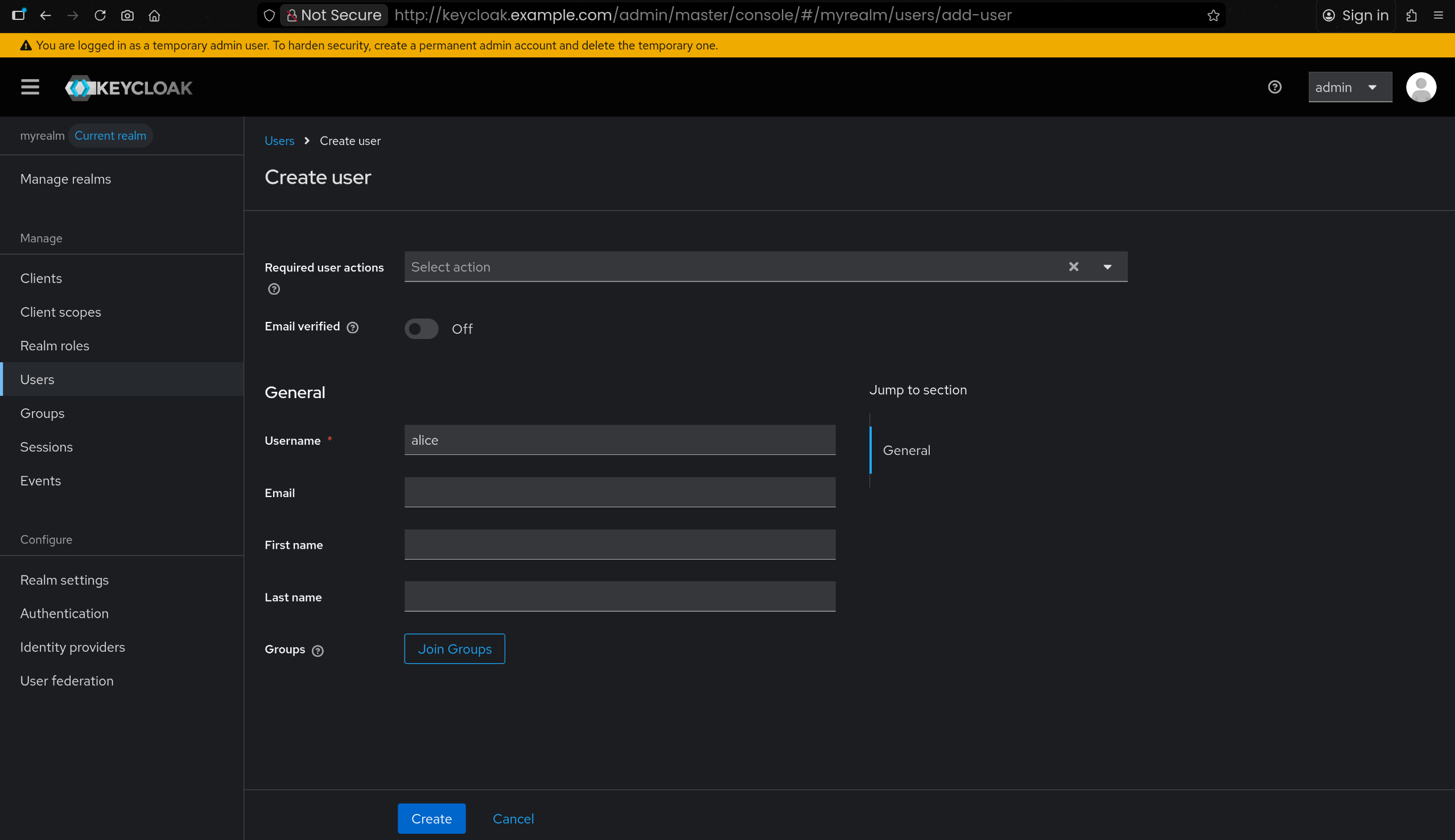

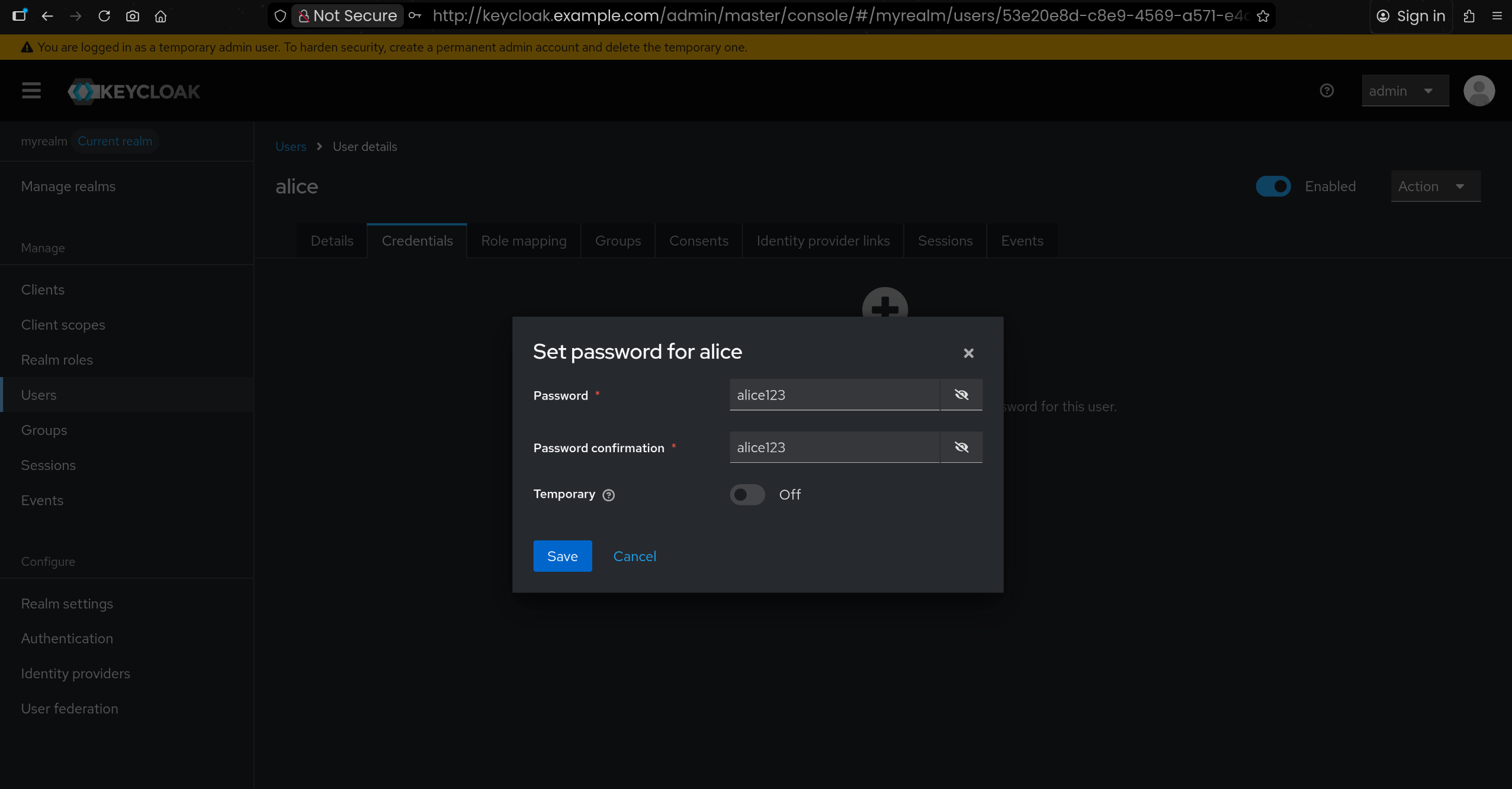

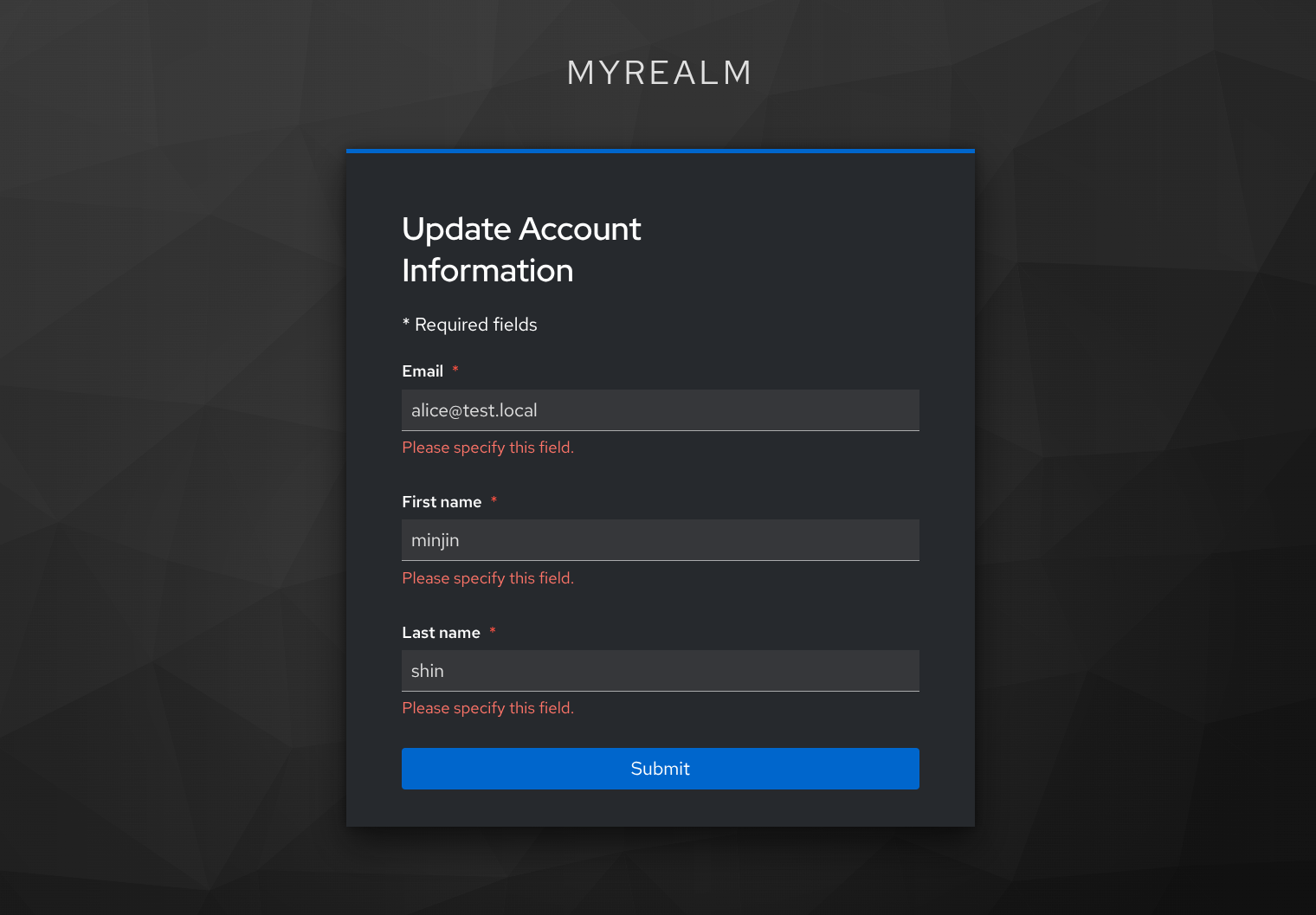

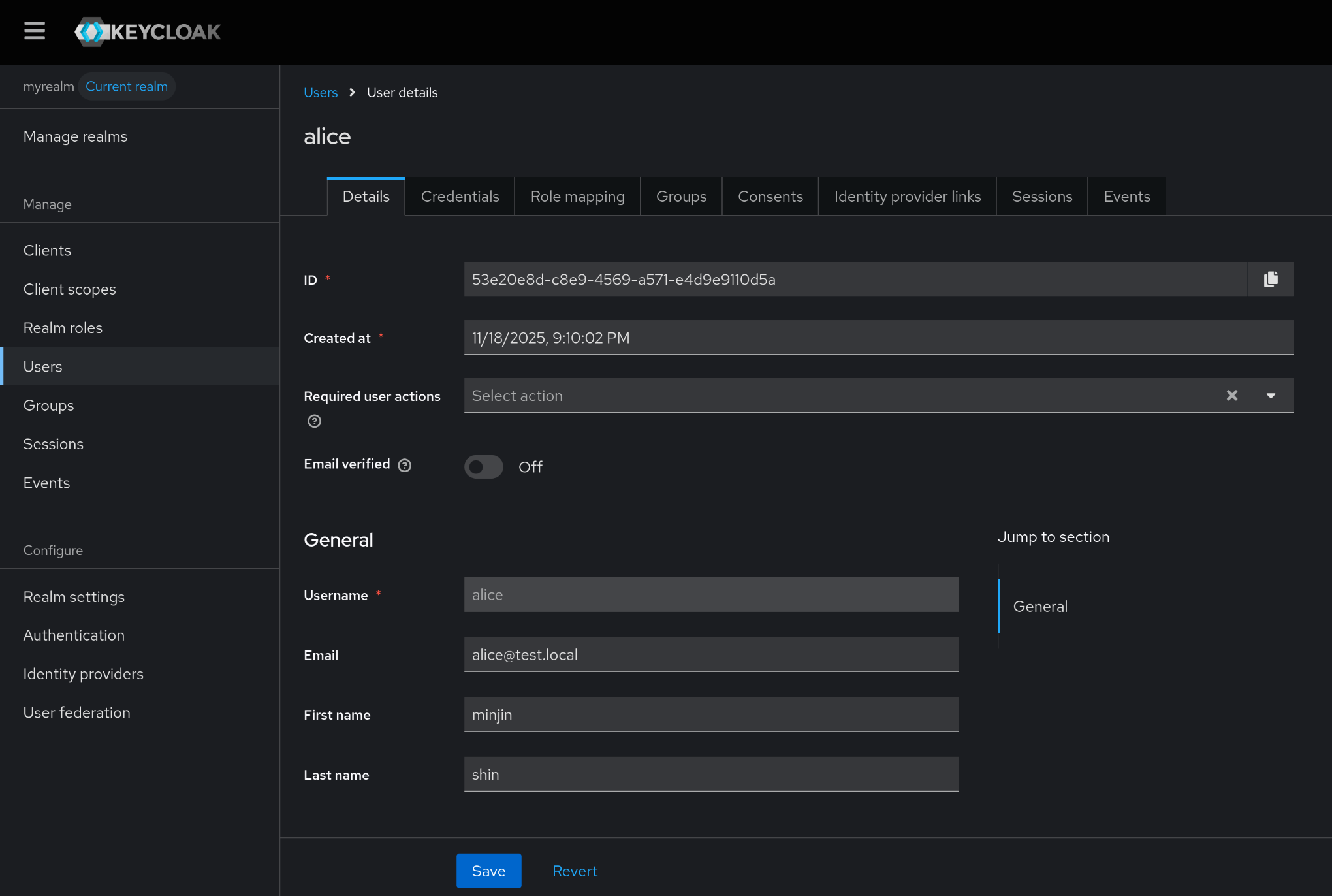

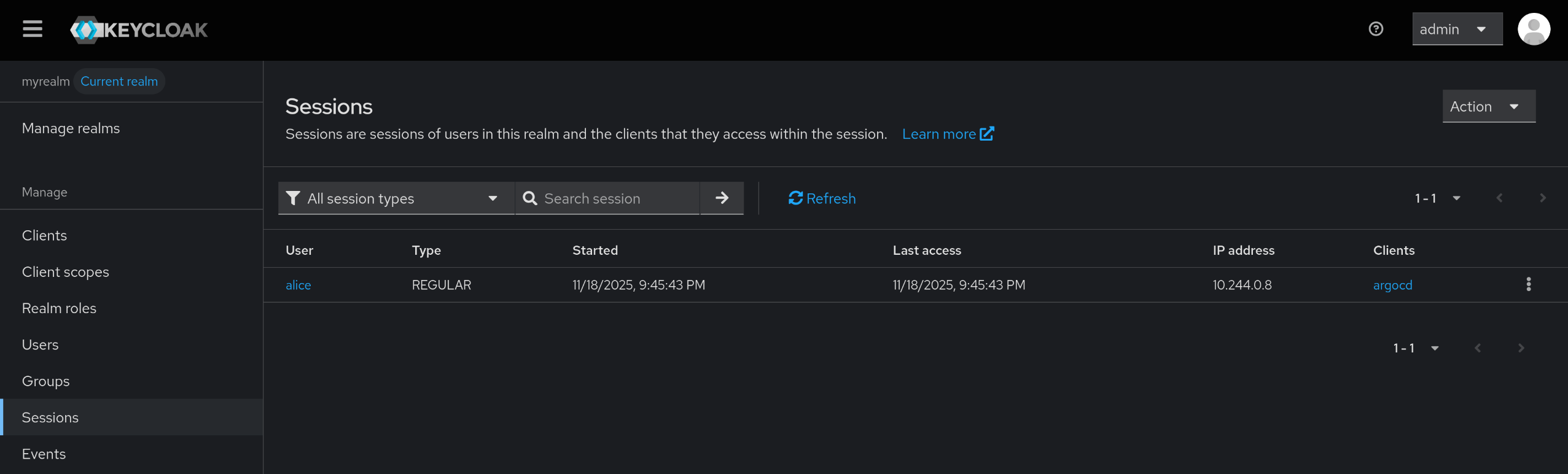

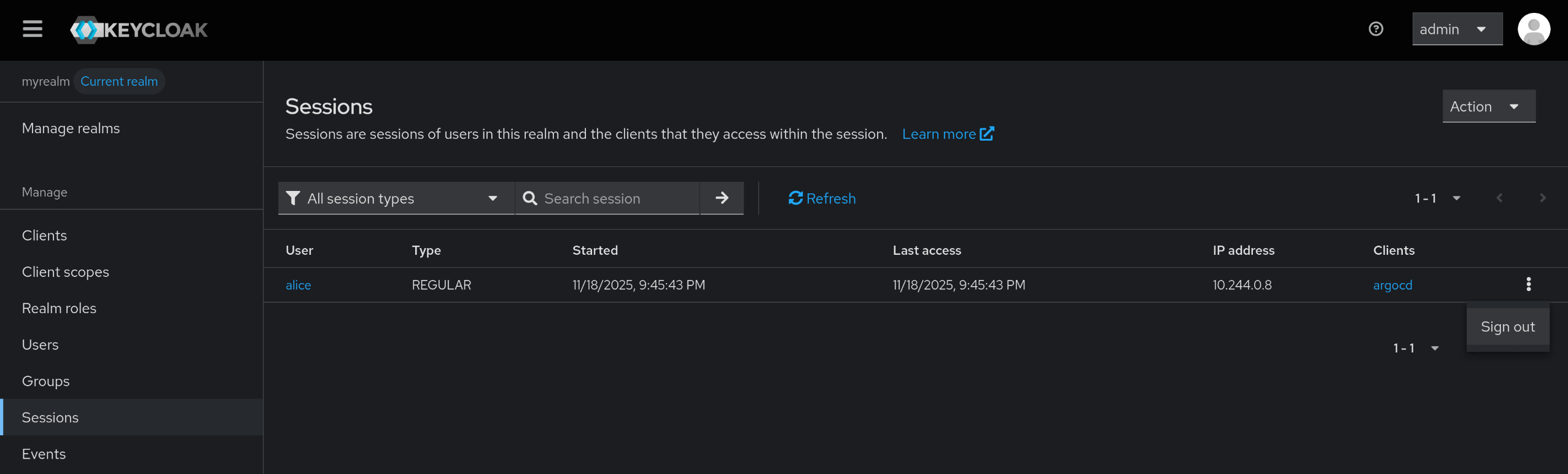

6. Create a test user alice in myrealm

🧪 Making the keycloak / argocd domains resolvable from inside the mgmt k8s cluster as well

1. Check the baseline network state with a curl test Pod

| cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl
  namespace: default
spec:
  containers:
  - name: curl
    image: curlimages/curl:latest
    command: ["sleep", "infinity"]
EOF
pod/curl created
|

- Create a lightweight Pod from the curl image to test HTTP/DNS from inside the cluster

| kubectl get pod -l app=keycloak -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

keycloak-846cb4c68-lphrw 1/1 Running 0 12m 10.244.0.25 mgmt-control-plane <none> <none>

|

| kubectl get pod -l app=keycloak -o jsonpath='{.items[0].status.podIP}'

10.244.0.25

|

| KEYCLOAKIP=$(kubectl get pod -l app=keycloak -o jsonpath='{.items[0].status.podIP}')

echo $KEYCLOAKIP

10.244.0.25

|

- Look up the keycloak Pod IP and store it in a variable

| kubectl exec -it curl -- ping -c 1 $KEYCLOAKIP

PING 10.244.0.25 (10.244.0.25): 56 data bytes

64 bytes from 10.244.0.25: seq=0 ttl=42 time=0.138 ms

--- 10.244.0.25 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.138/0.138/0.138 ms

|

- Confirm that ping from the curl Pod to that IP succeeds

2. Call the keycloak OIDC endpoint via the cluster DNS name

| kubectl exec -it curl -- curl -s http://keycloak.default.svc.cluster.local/realms/myrealm/.well-known/openid-configuration | jq

{

"issuer": "http://keycloak.default.svc.cluster.local/realms/myrealm",

"authorization_endpoint": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/auth",

"token_endpoint": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/token",

"introspection_endpoint": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/token/introspect",

"userinfo_endpoint": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/userinfo",

"end_session_endpoint": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/logout",

"frontchannel_logout_session_supported": true,

"frontchannel_logout_supported": true,

"jwks_uri": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/certs",

"check_session_iframe": "http://keycloak.default.svc.cluster.local/realms/myrealm/protocol/openid-connect/login-status-iframe.html",

...

}

|

| kubectl exec -it curl -- curl -s http://keycloak.example.com -I

command terminated with exit code 6

|

| kubectl exec -it curl -- nslookup -debug keycloak.example.com

Server: 10.96.0.10

Address: 10.96.0.10:53

Query #0 completed in 145ms:

** server can't find keycloak.example.com: NXDOMAIN

Query #1 completed in 299ms:

** server can't find keycloak.example.com: NXDOMAIN

command terminated with exit code 1

|

- DNS lookups inside a Pod do not read the node's /etc/hosts directly; they go through CoreDNS (service IP 10.96.0.10)

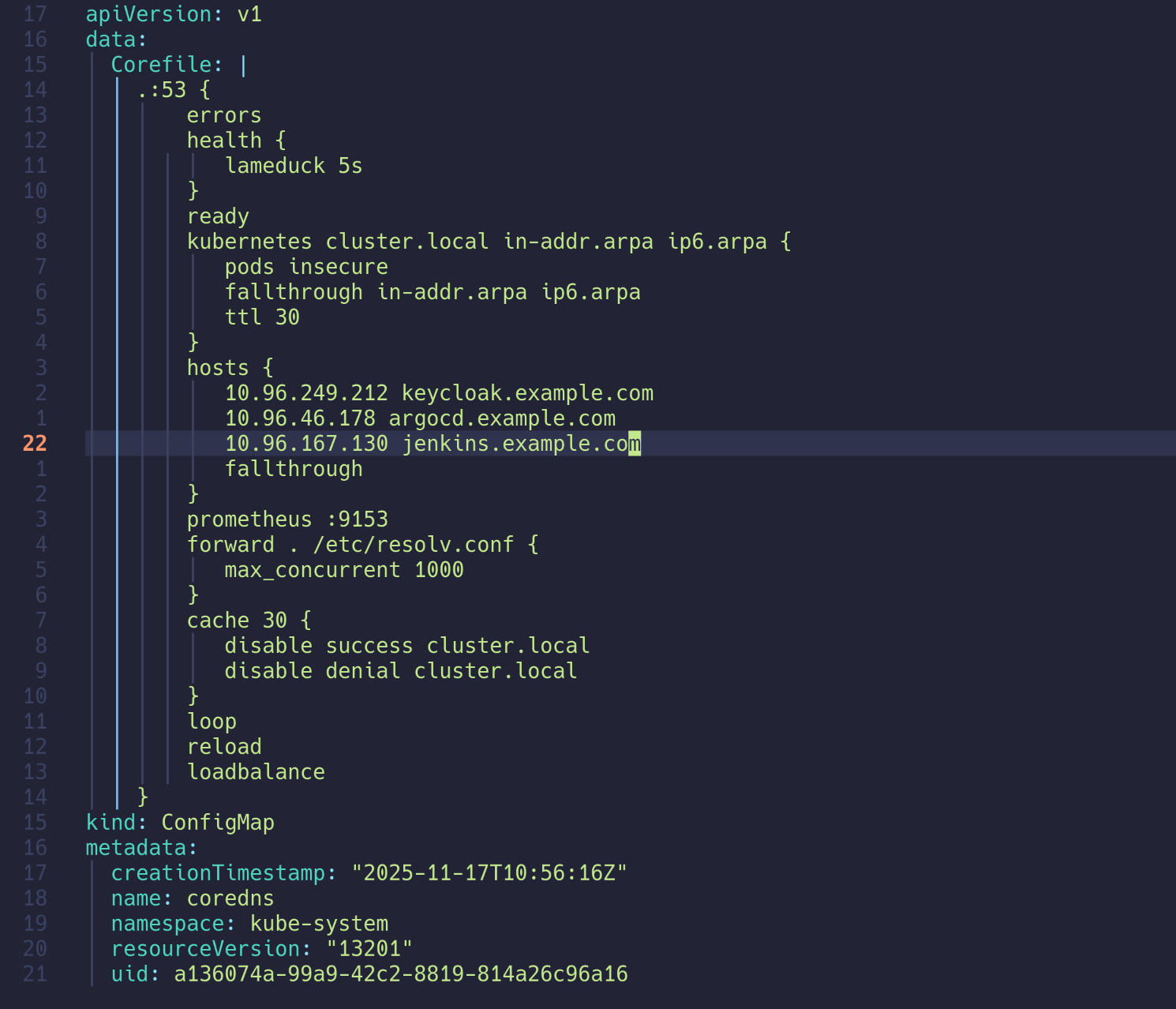

4. Register the keycloak / argocd domains by editing the CoreDNS ConfigMap

(1) First, check the Service IPs

| kubectl get svc keycloak

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

keycloak ClusterIP 10.96.249.212 <none> 80/TCP 19m

kubectl get svc -n argocd argocd-server

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-server ClusterIP 10.96.46.178 <none> 80/TCP,443/TCP 25h

|

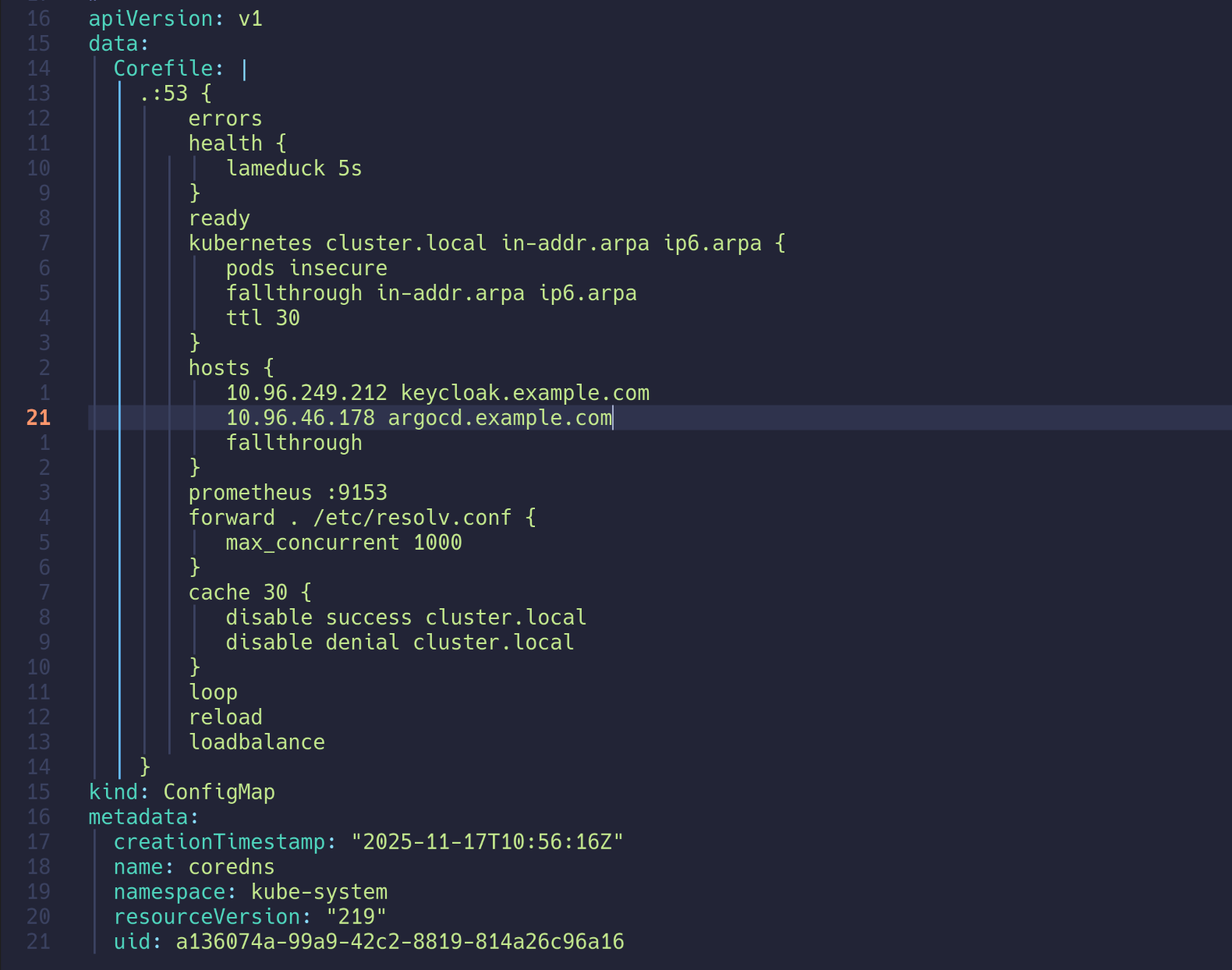

(2) Edit the CoreDNS configuration

| kubectl edit cm -n kube-system coredns
.:53 {
    ...
    kubernetes cluster.local in-addr.arpa ip6.arpa {
       pods insecure
       fallthrough in-addr.arpa ip6.arpa
       ttl 30
    }
    hosts {
       <CLUSTER IP> keycloak.example.com
       <CLUSTER IP> argocd.example.com
       fallthrough
    }
    ...
configmap/coredns edited
|
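The two `<CLUSTER IP>` placeholders correspond to the ClusterIPs looked up in step (1). A minimal sketch that prints the `hosts` stanza with real values, ready to paste into the Corefile, is shown below; `coredns_hosts_block` is a hypothetical helper name and the indentation is only cosmetic.

```shell
# Hypothetical helper: print the CoreDNS hosts stanza for the two domains.
# Arguments: <keycloak Service ClusterIP> <argocd-server Service ClusterIP>
coredns_hosts_block() {
  keycloak_ip=$1 argocd_ip=$2
  cat <<EOF
hosts {
   ${keycloak_ip} keycloak.example.com
   ${argocd_ip} argocd.example.com
   fallthrough
}
EOF
}

# Usage (assumes kubectl points at the mgmt cluster):
#   coredns_hosts_block \
#     "$(kubectl get svc keycloak -o jsonpath='{.spec.clusterIP}')" \
#     "$(kubectl get svc -n argocd argocd-server -o jsonpath='{.spec.clusterIP}')"
```

The `fallthrough` line matters: without it, names not listed in the stanza would not be passed on to the remaining plugins.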

5. Verify in-cluster DNS / domain calls after the change

| kubectl exec -it curl -- nslookup keycloak.example.com

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: keycloak.example.com

Address: 10.96.249.212

|

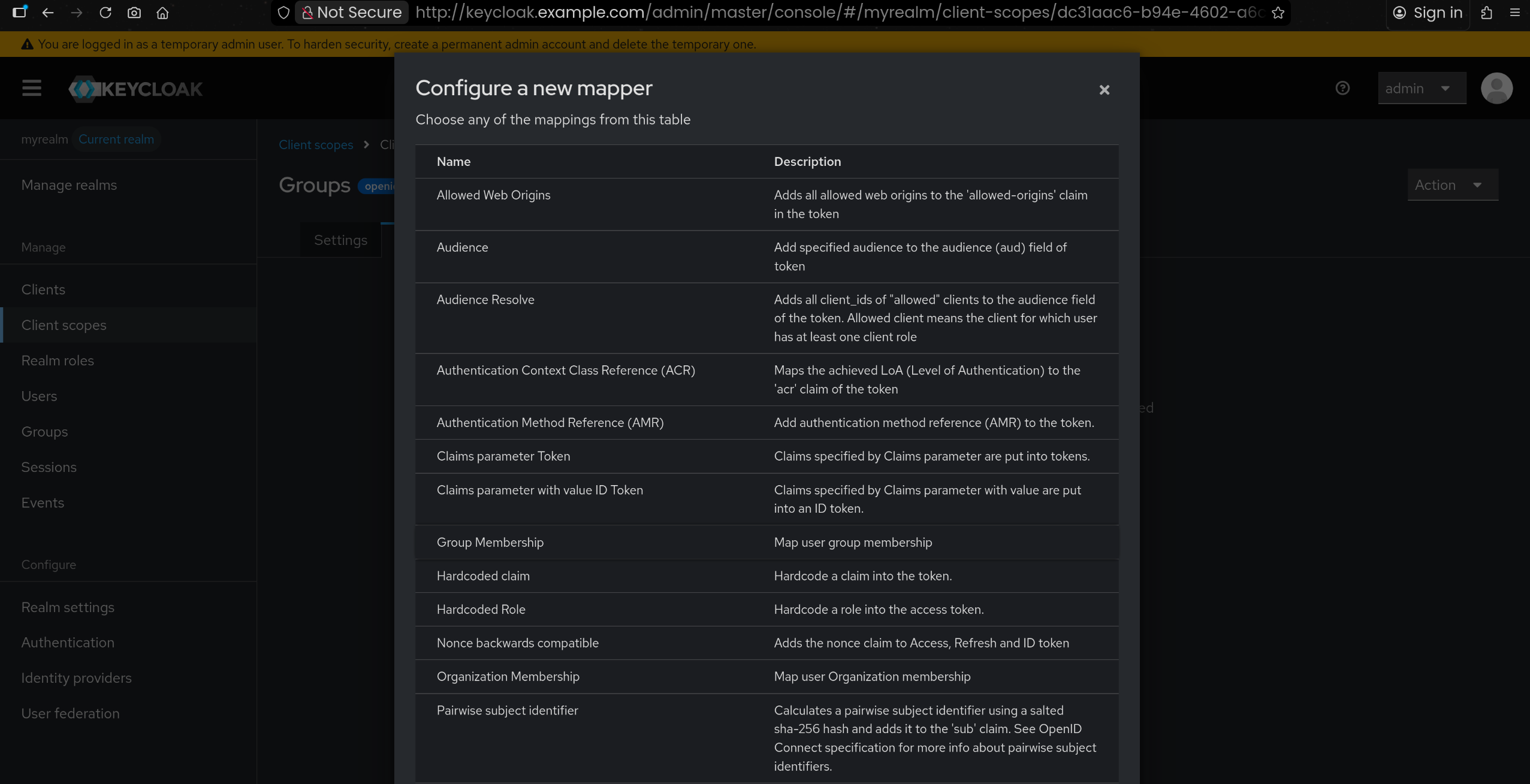

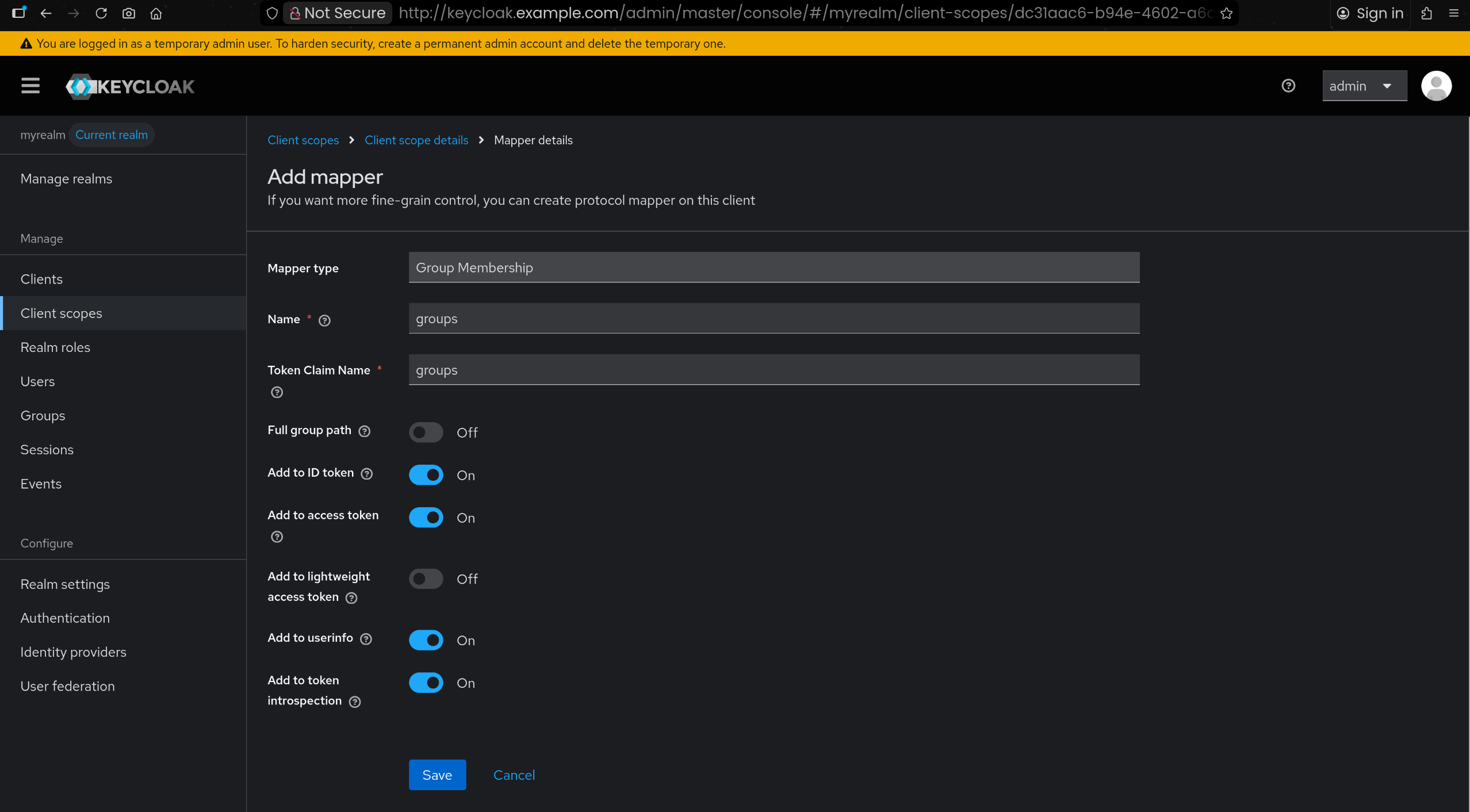

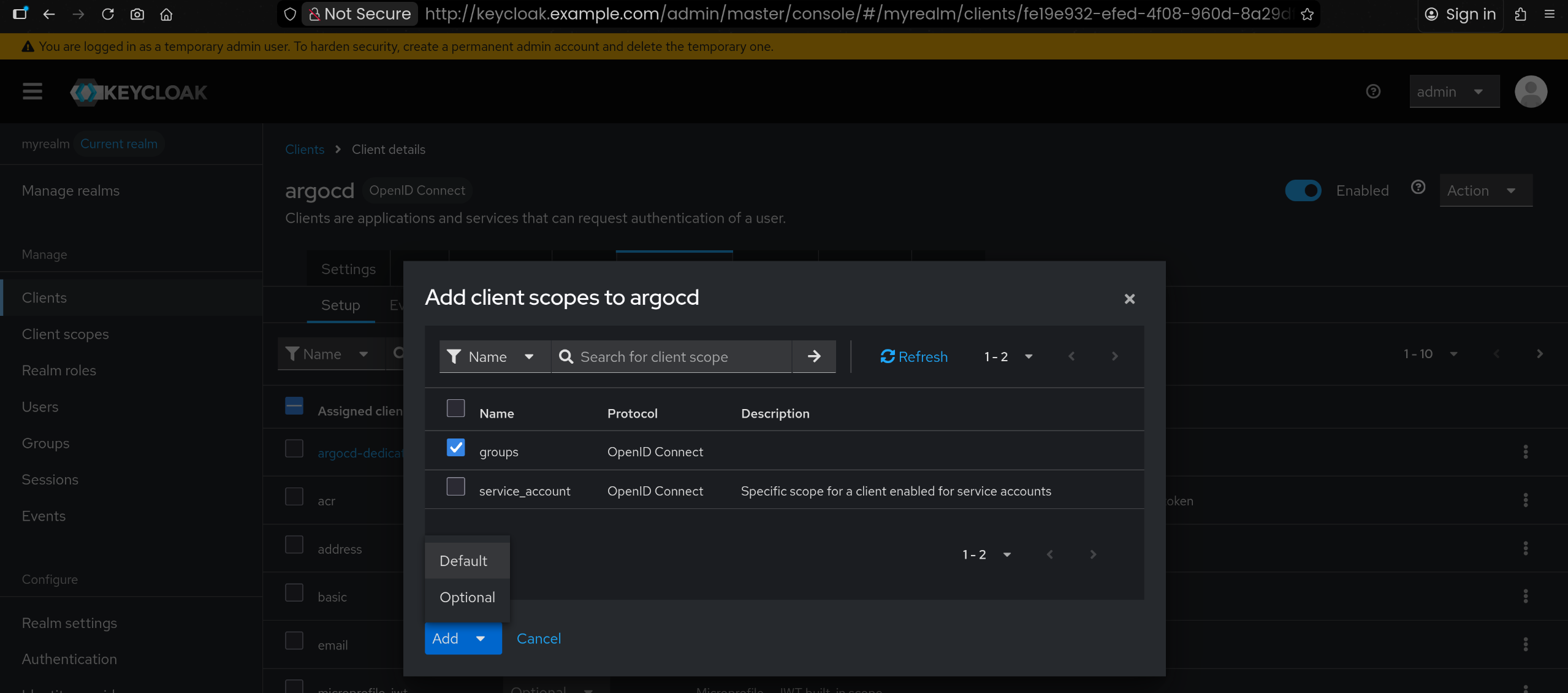

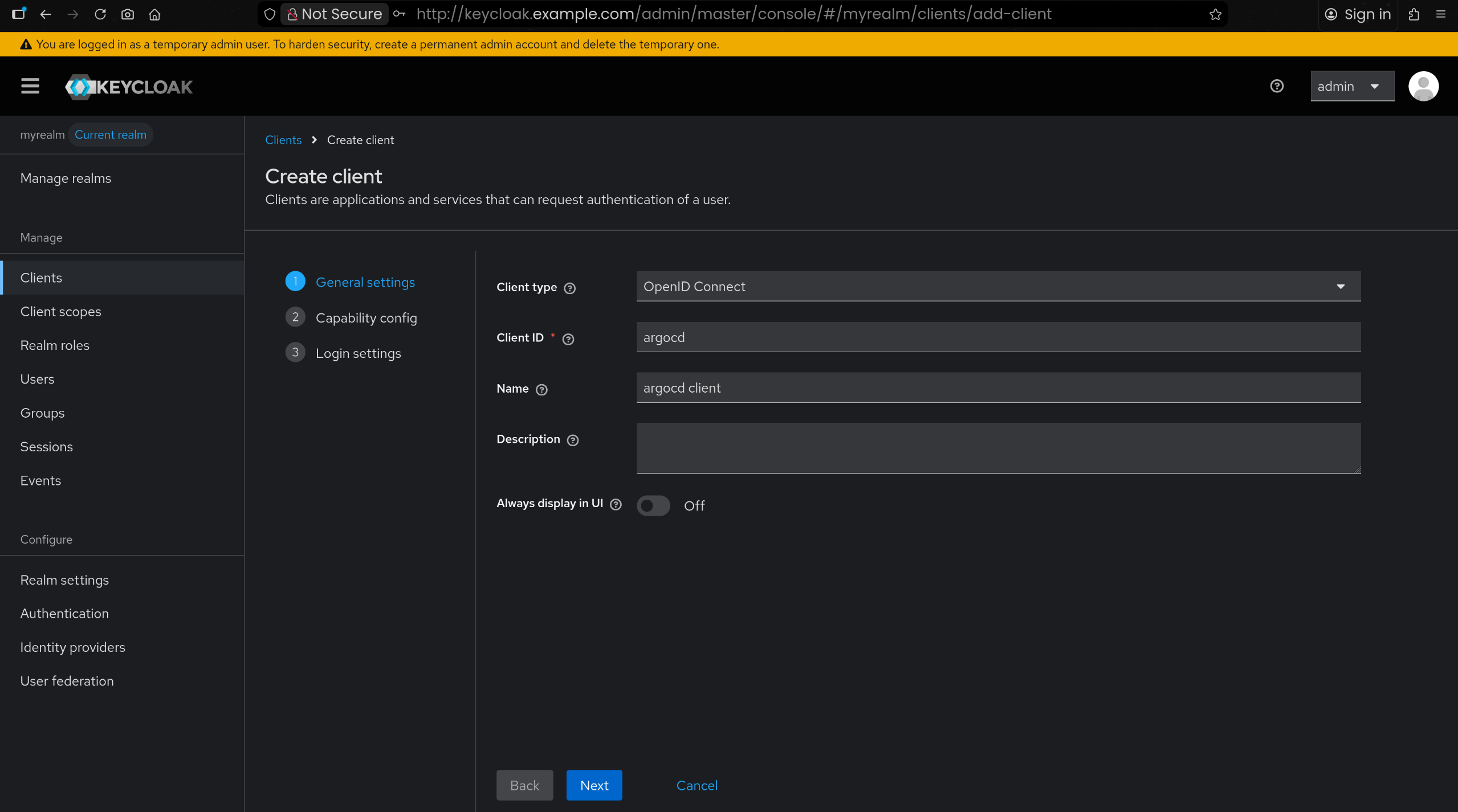

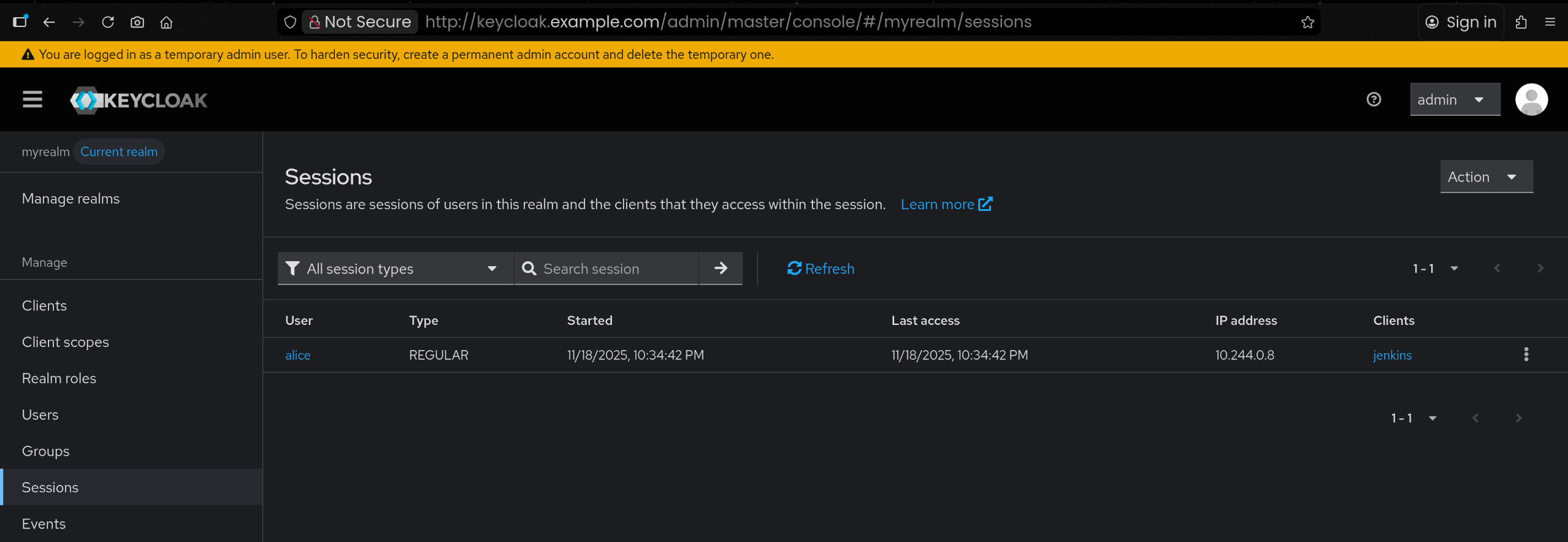

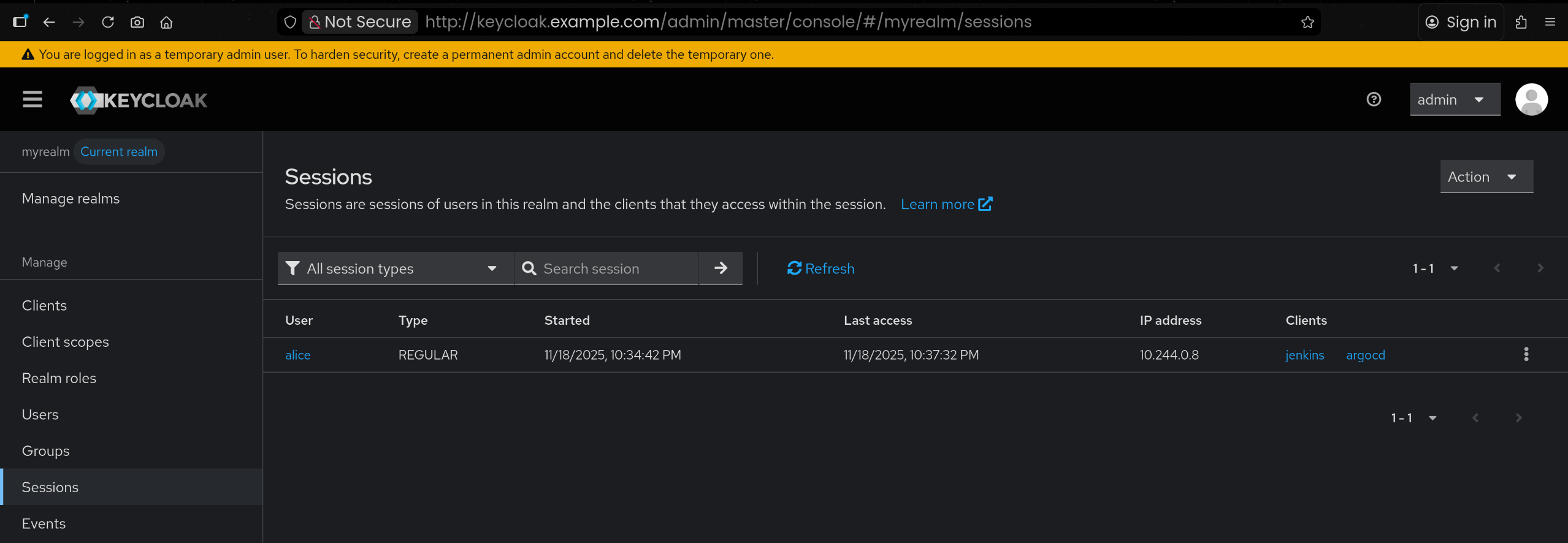

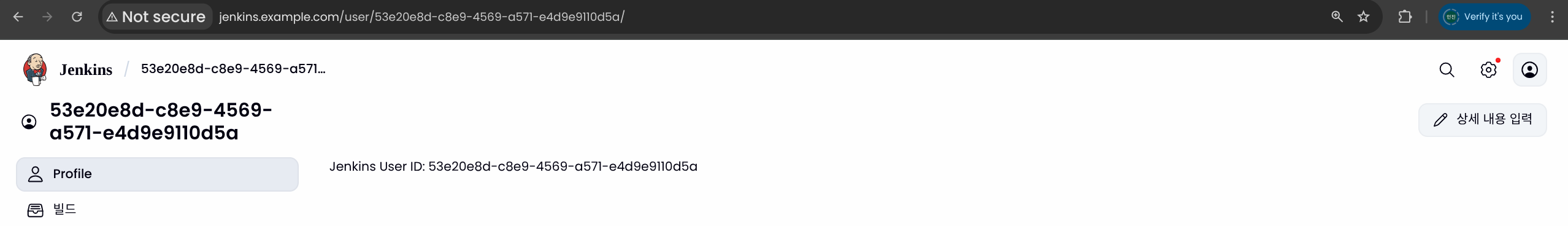

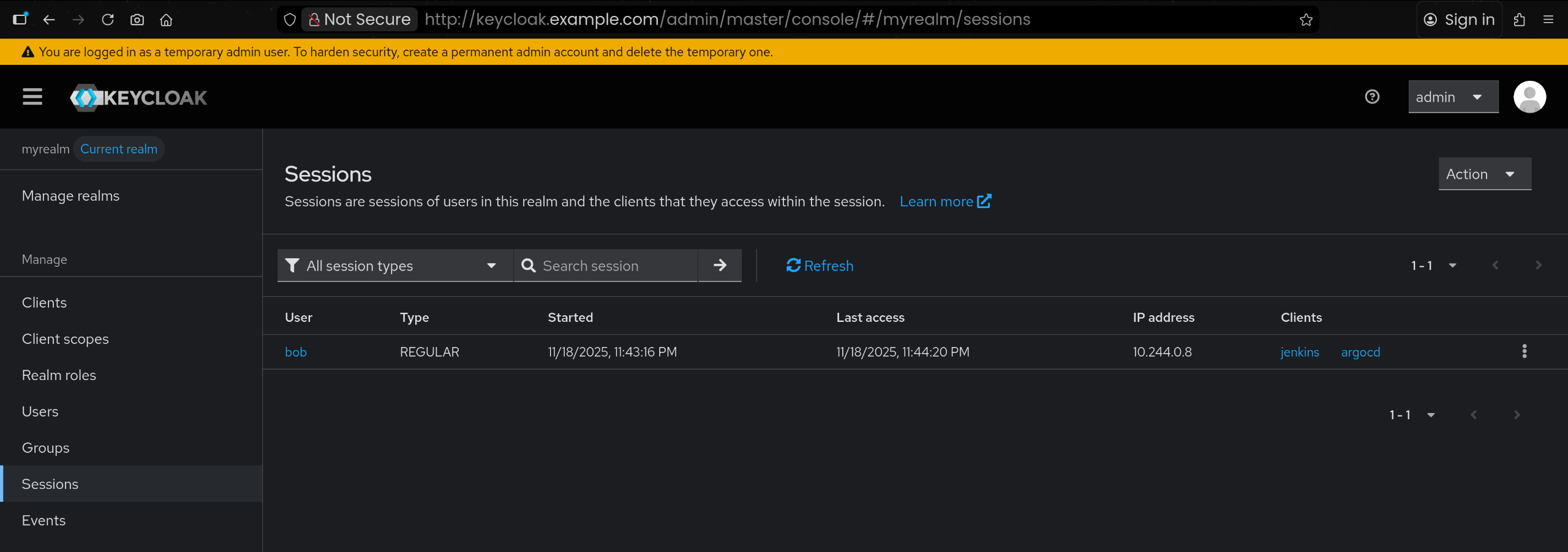

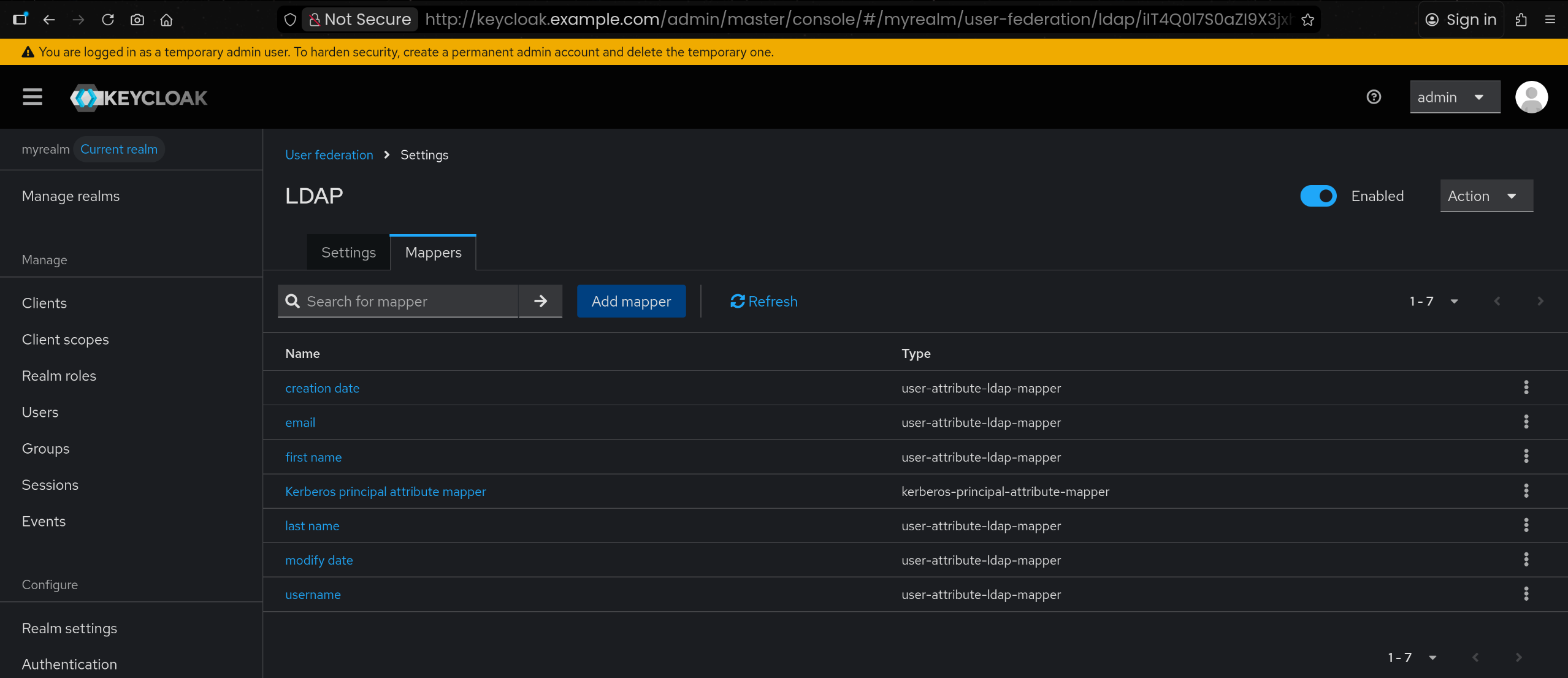

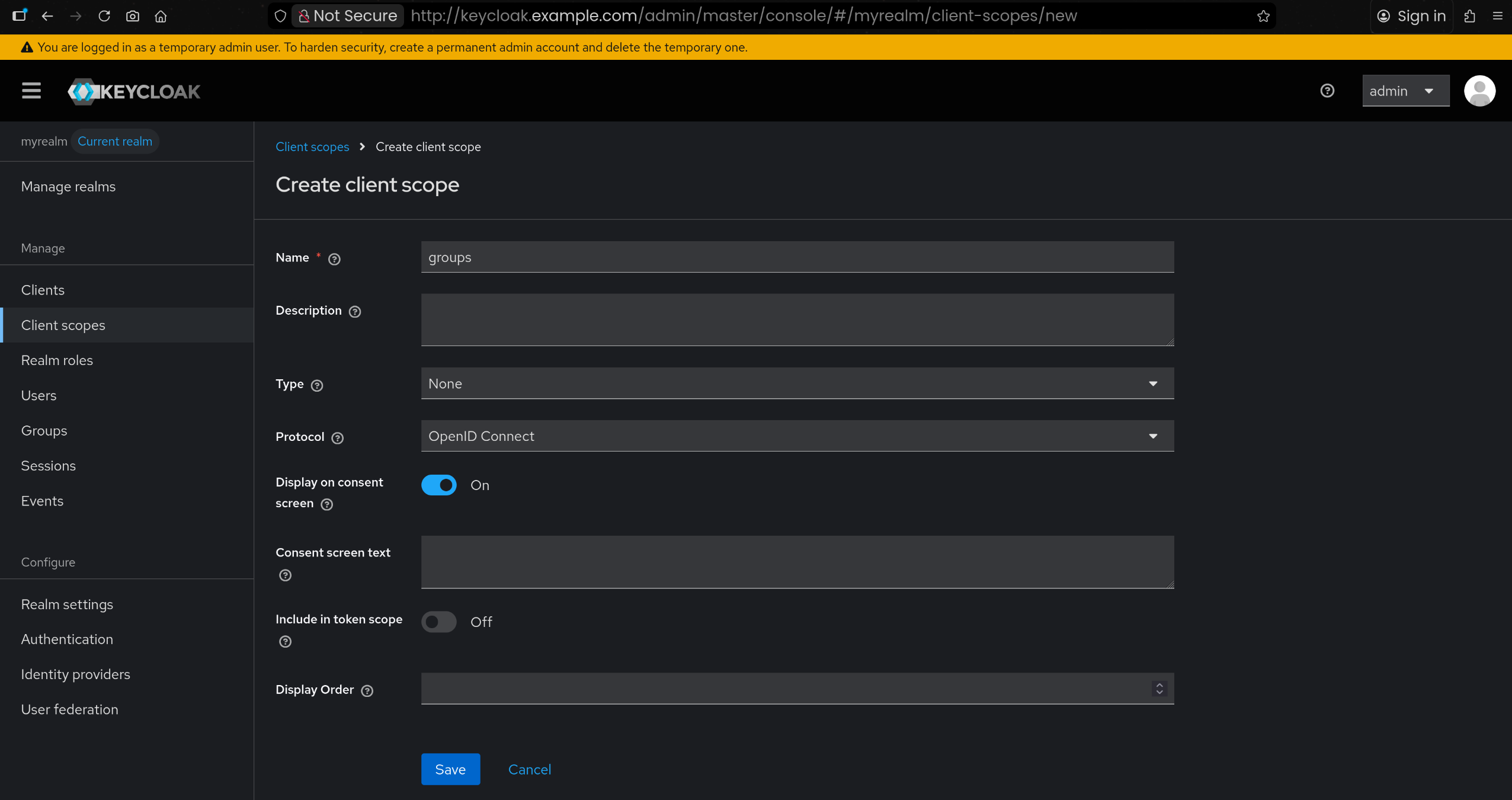

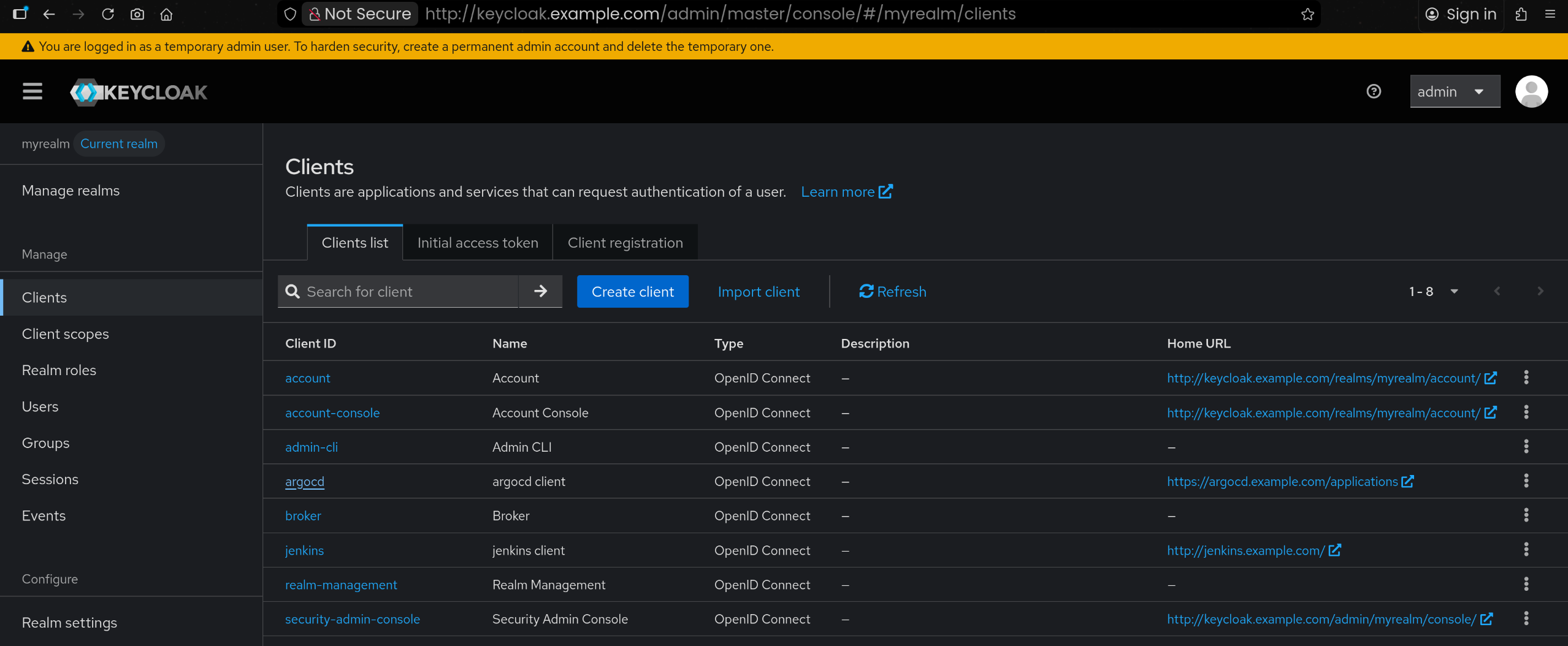

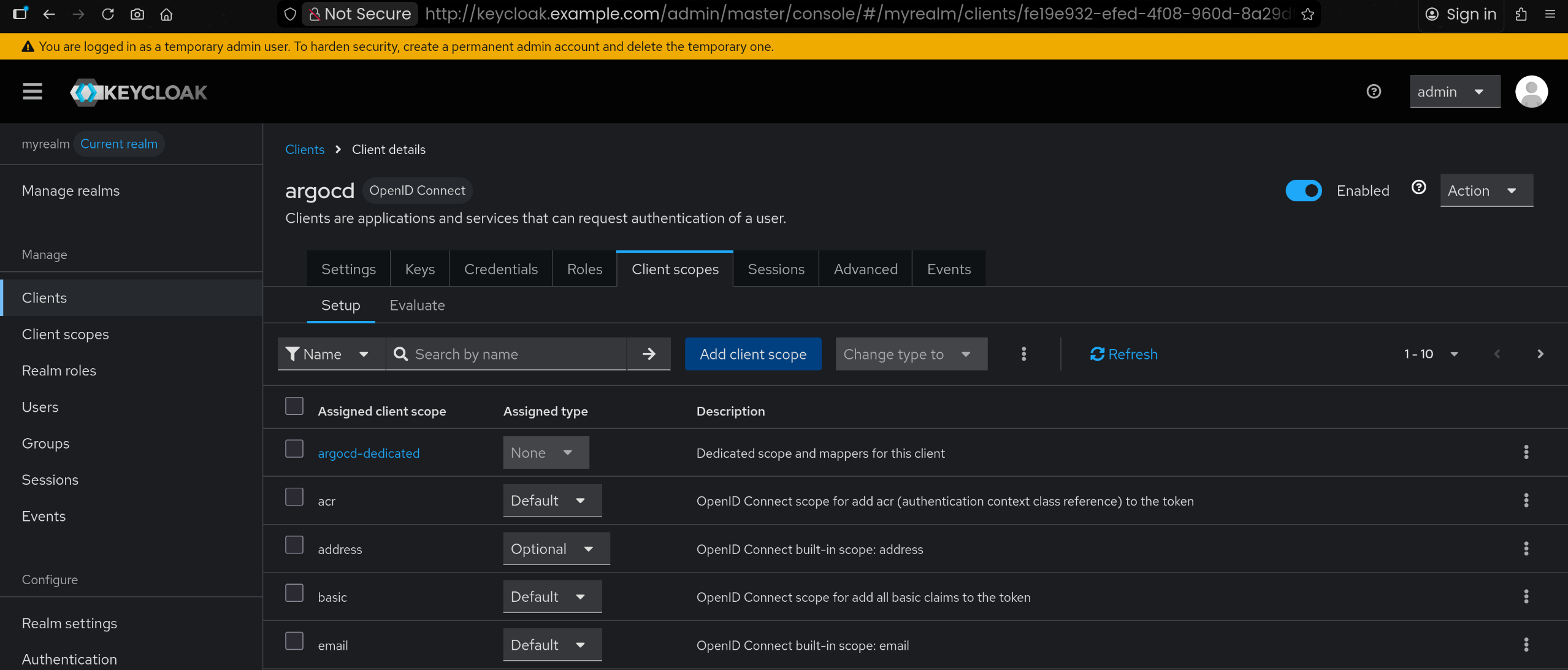

🔑 Creating a client for argocd in keycloak

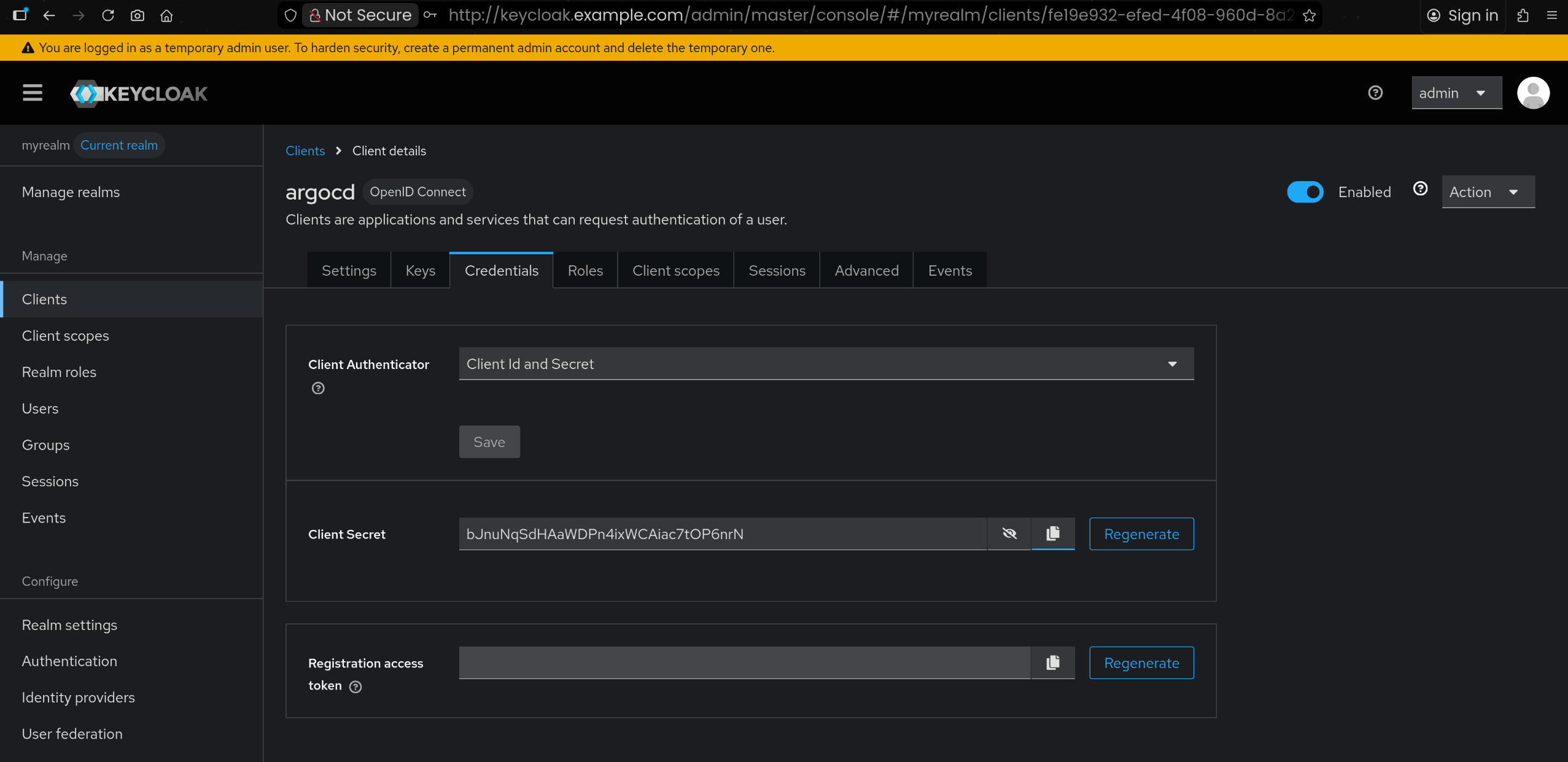

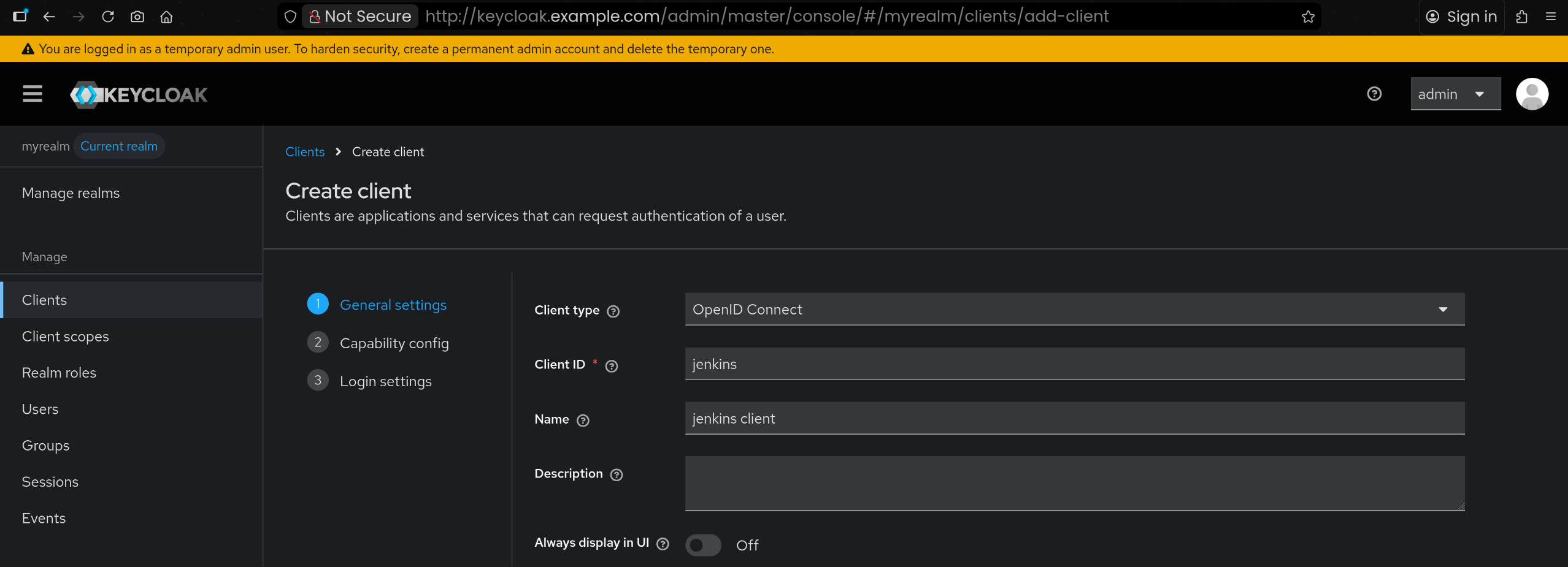

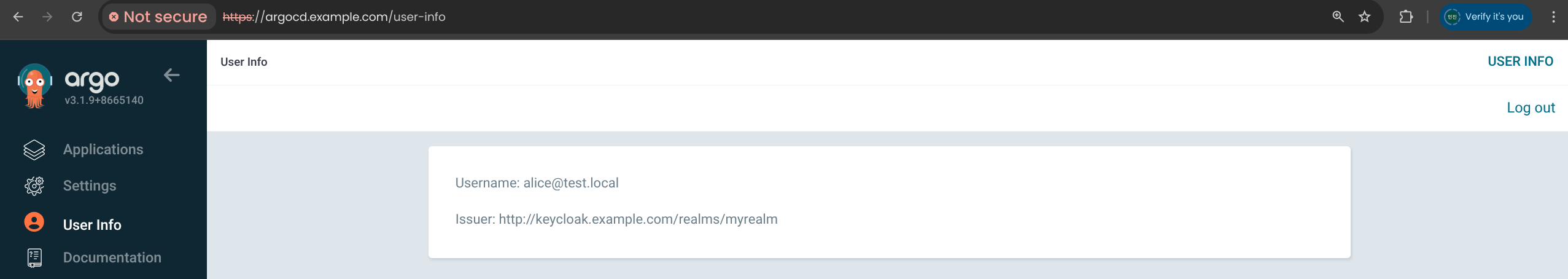

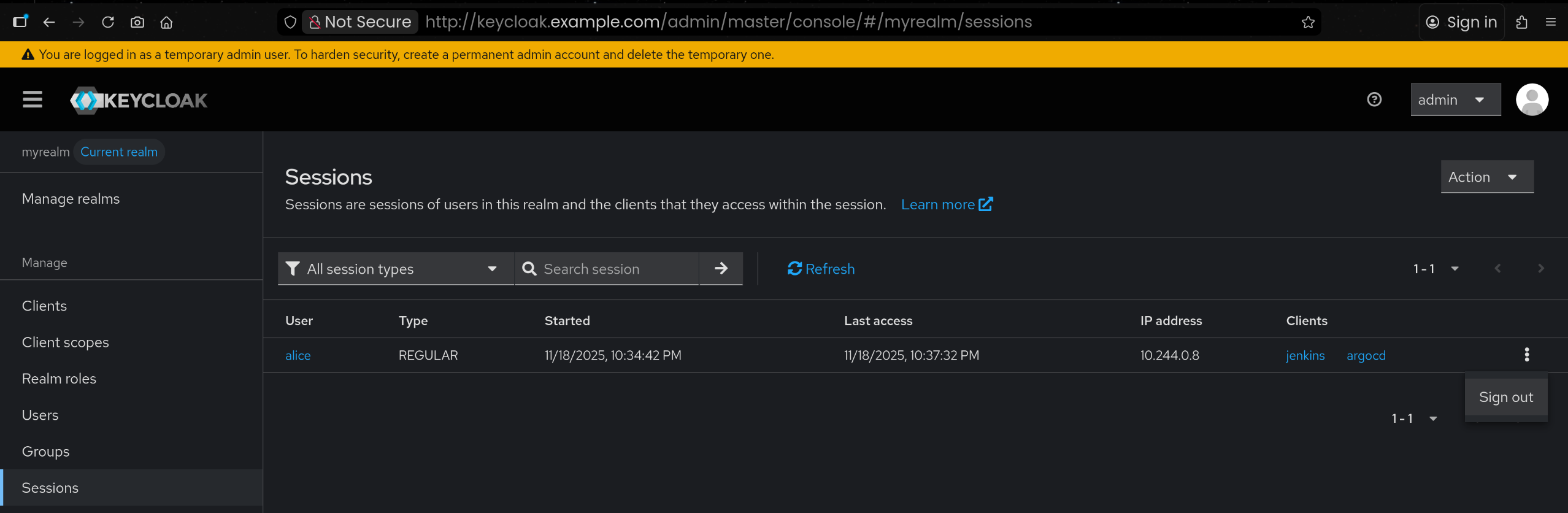

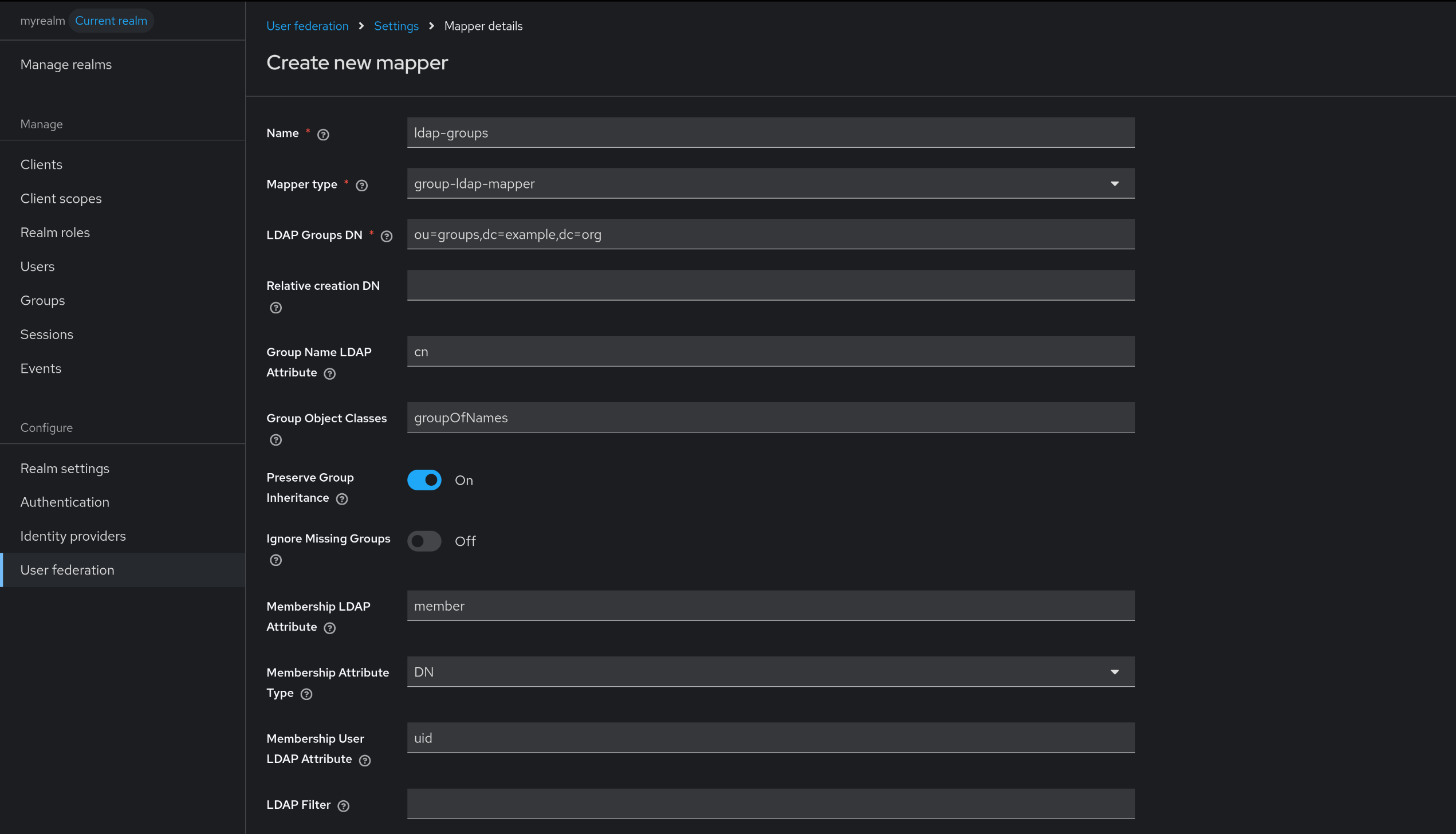

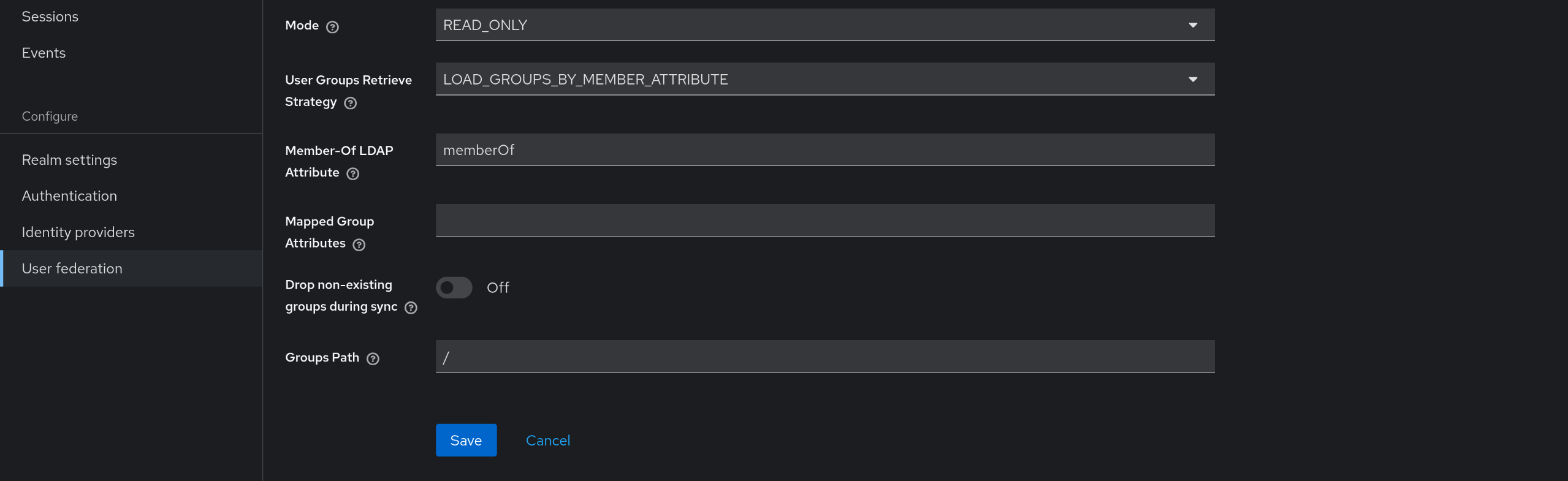

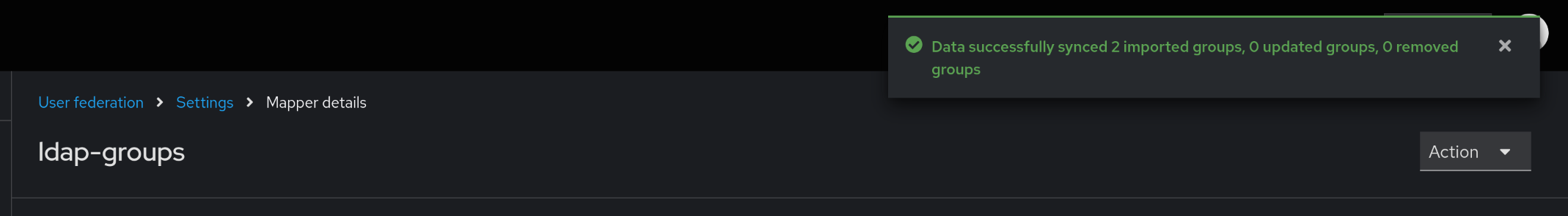

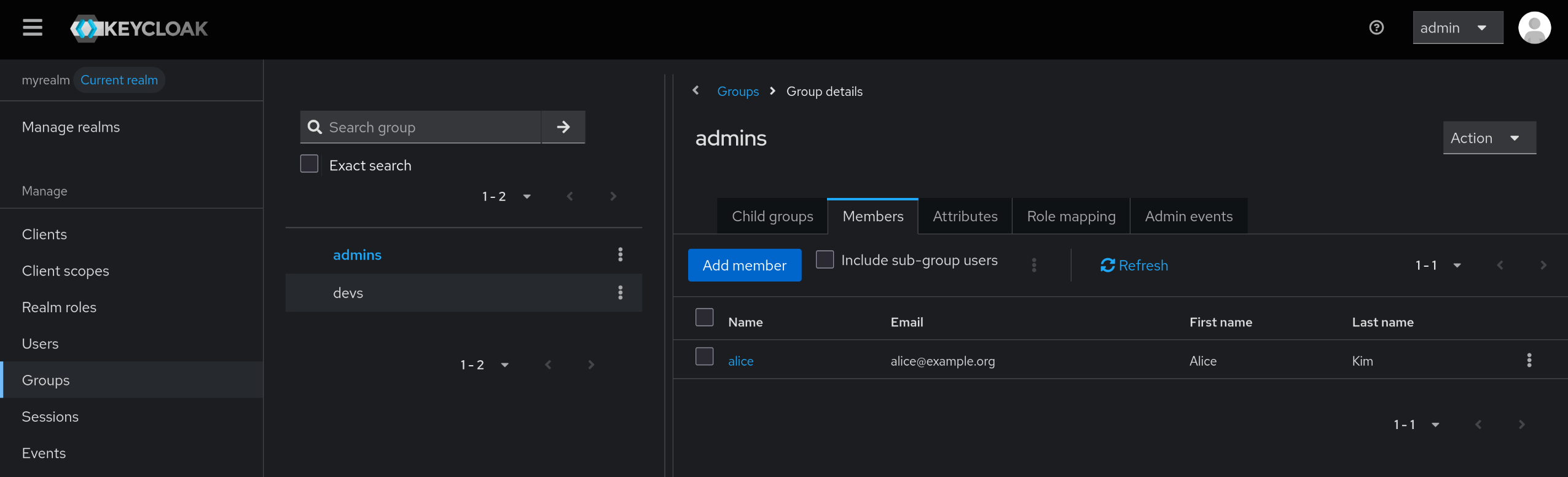

1. Create the realm / client for Argo CD integration

| Realm used : myrealm

Client id : argocd

name : argocd client

|

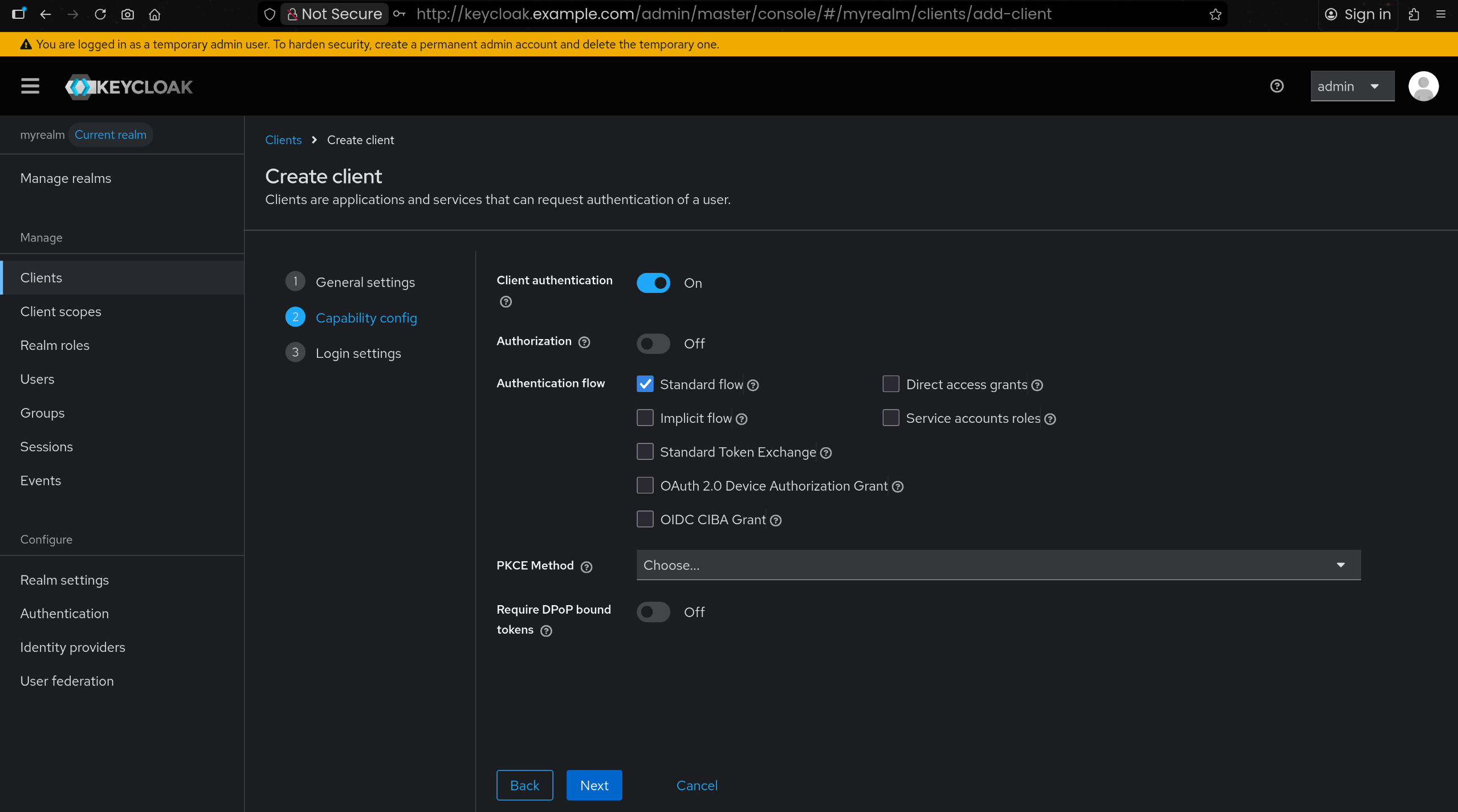

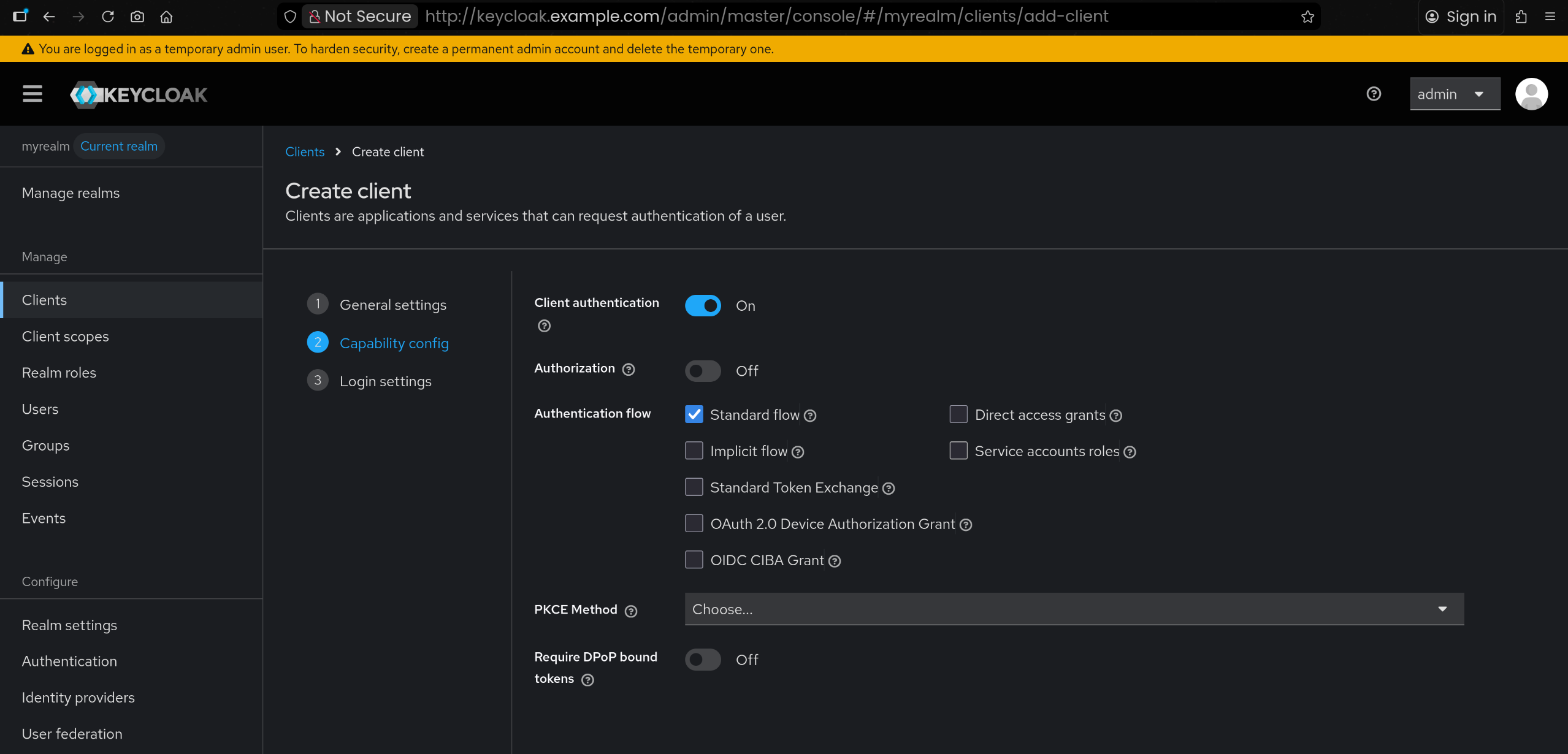

| Client authentication : ON

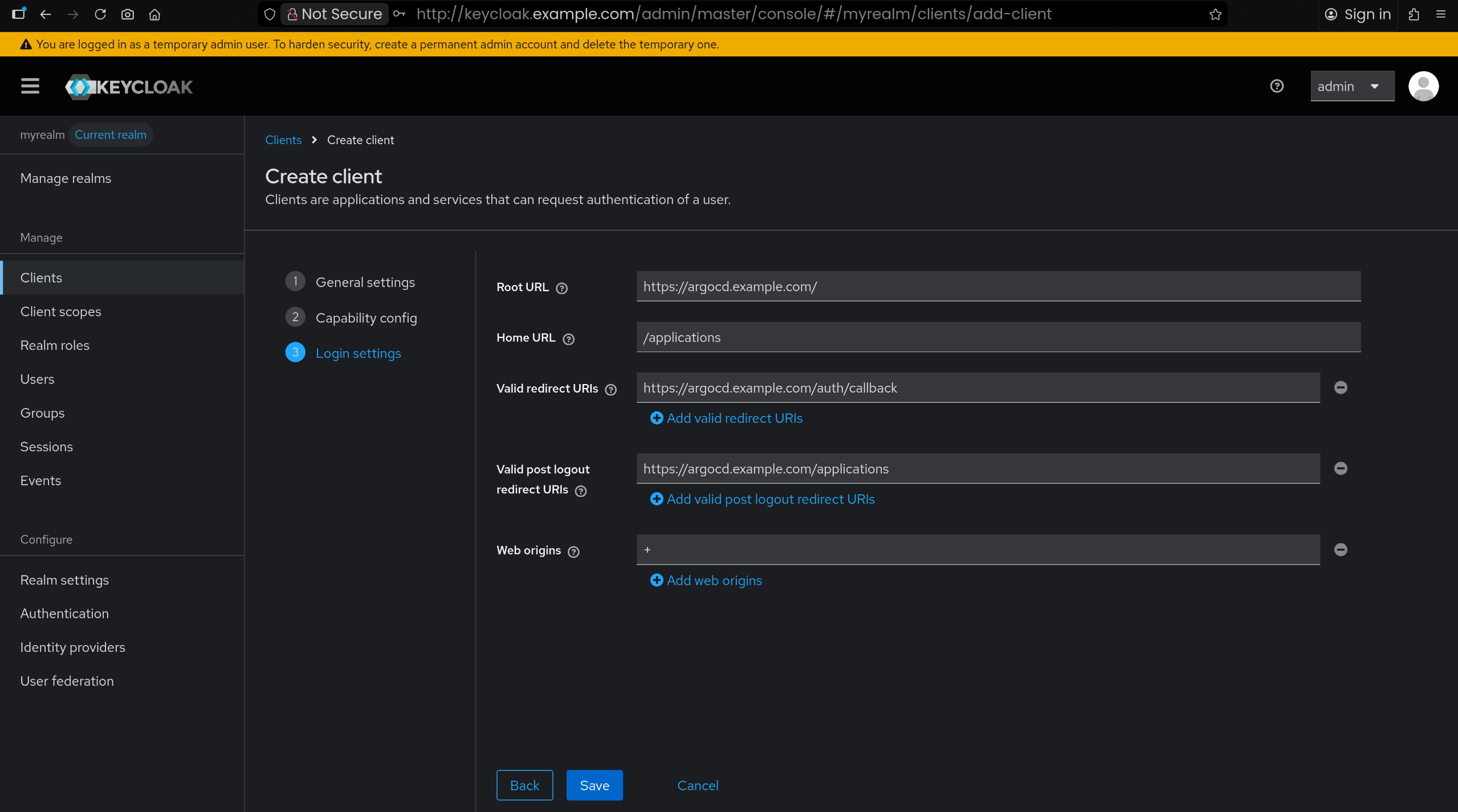

|

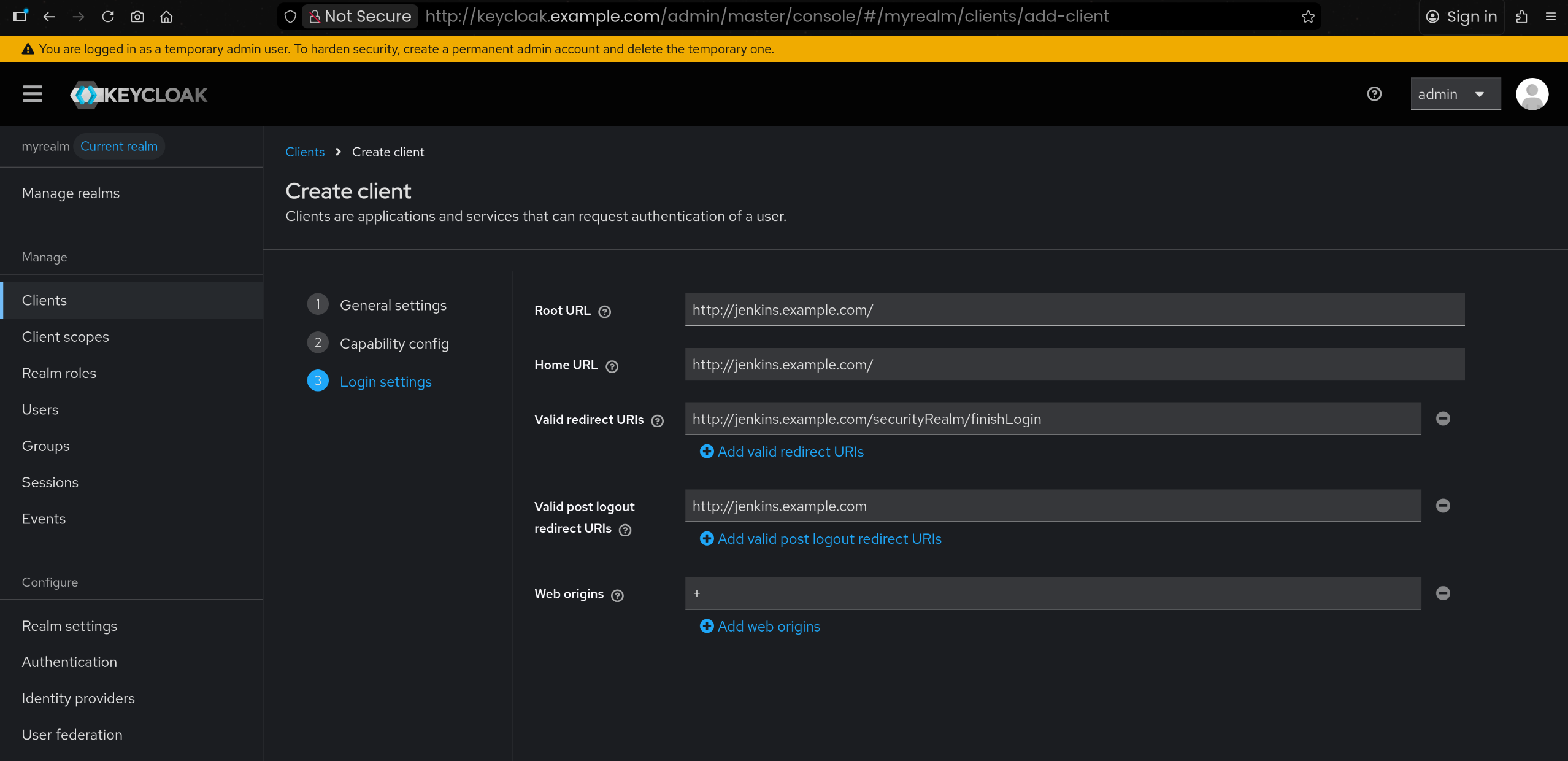

| Root URL : https://argocd.example.com/

Home URL : /applications

Valid redirect URIs : https://argocd.example.com/auth/callback

Valid post logout redirect URIs : https://argocd.example.com/applications

Web origins : +

|

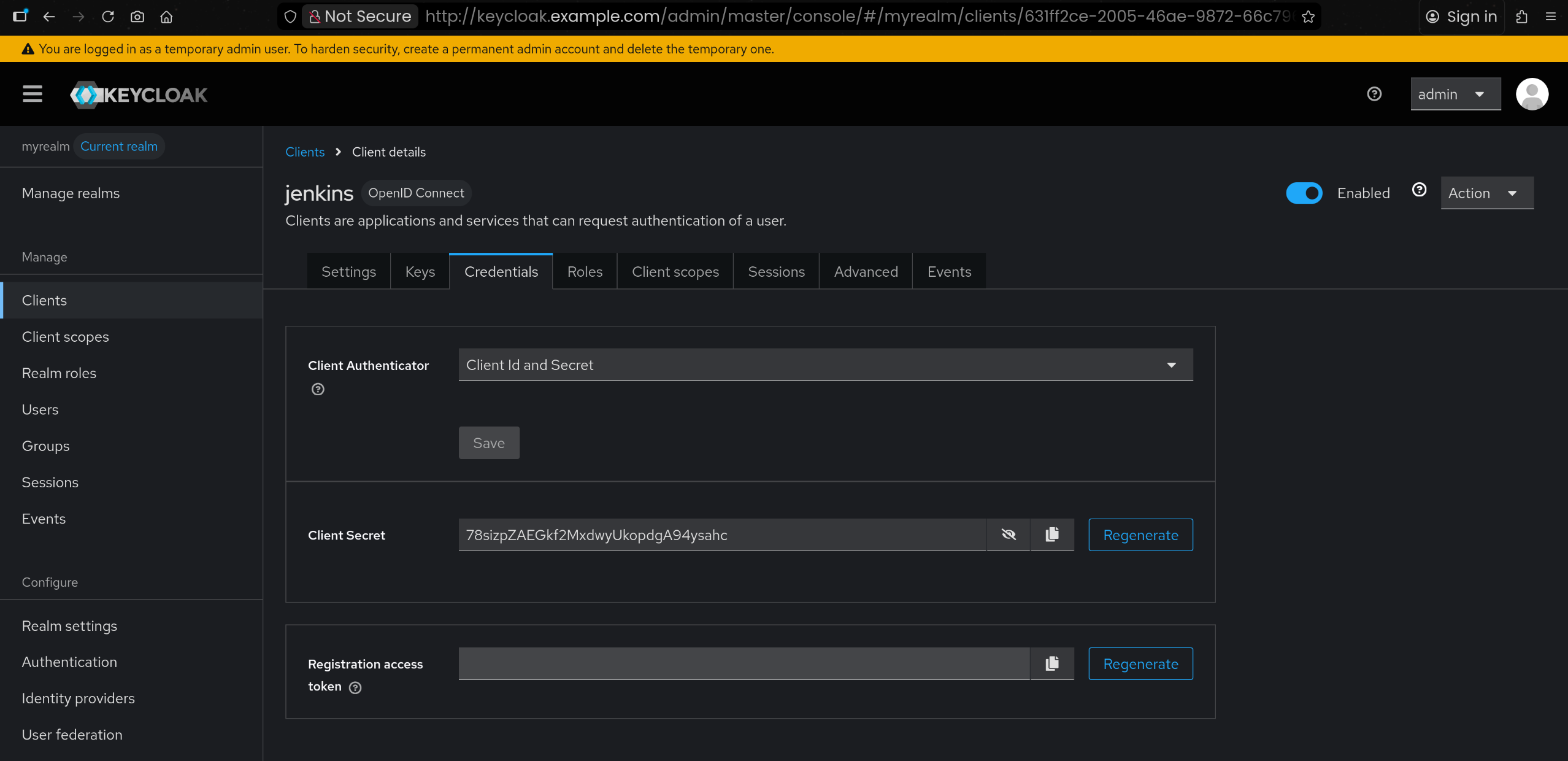

| # In the created client → Credentials: note down the client secret

bJnuNqSdHAaWDPn4ixWCAiac7tOP6nrN

|

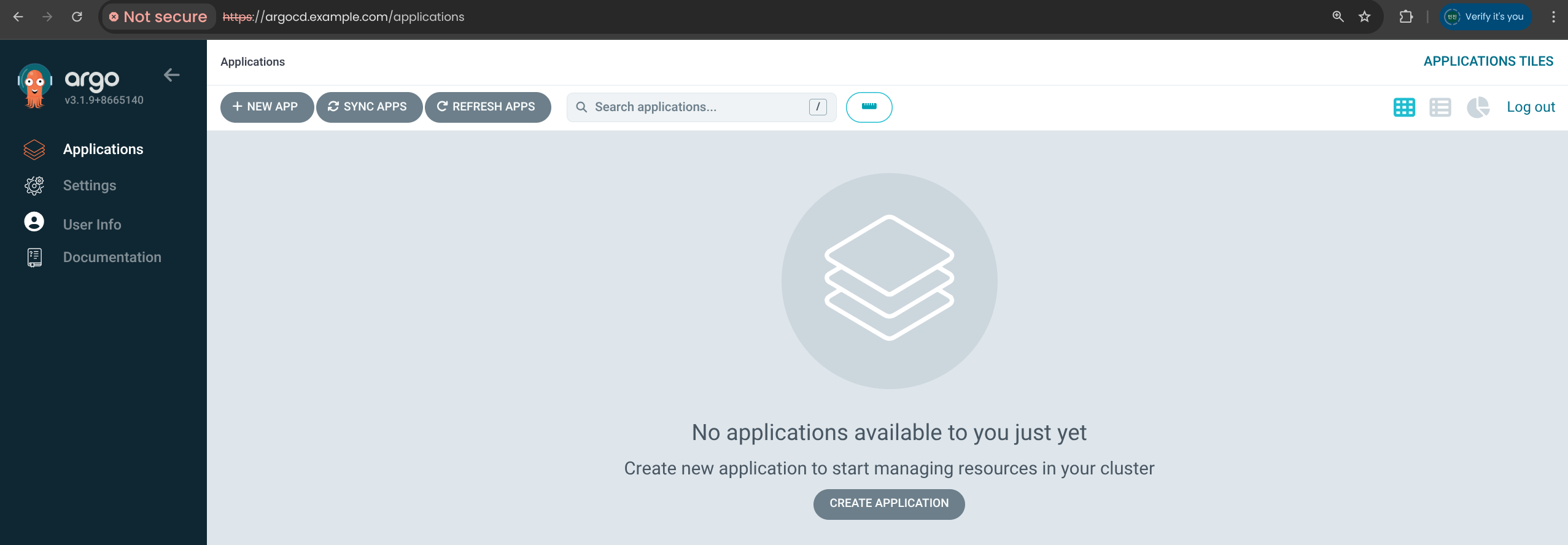

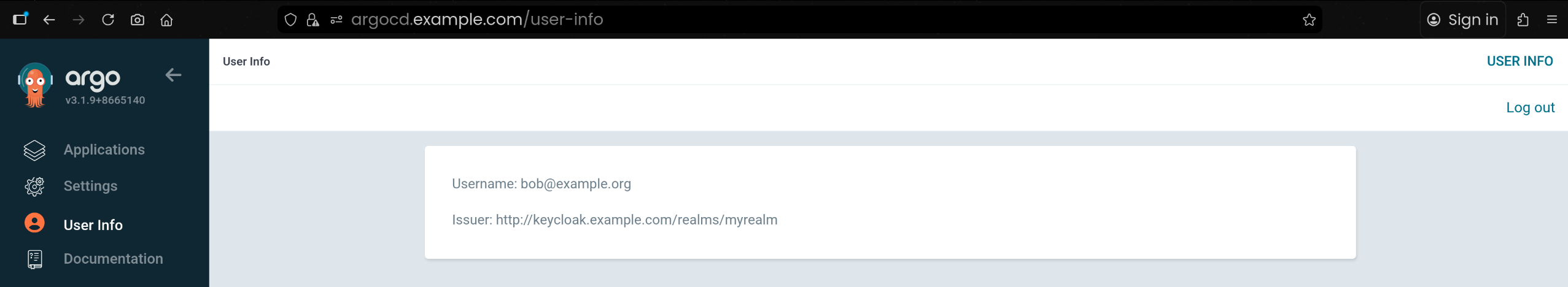

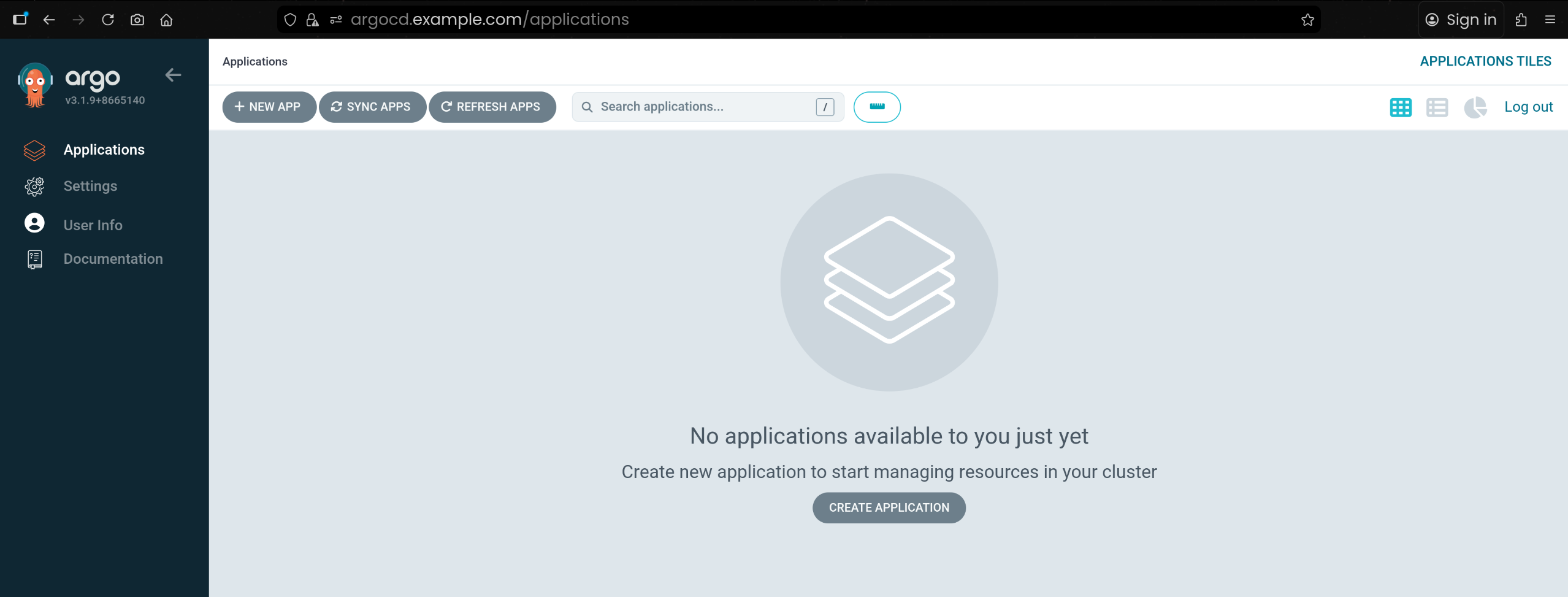

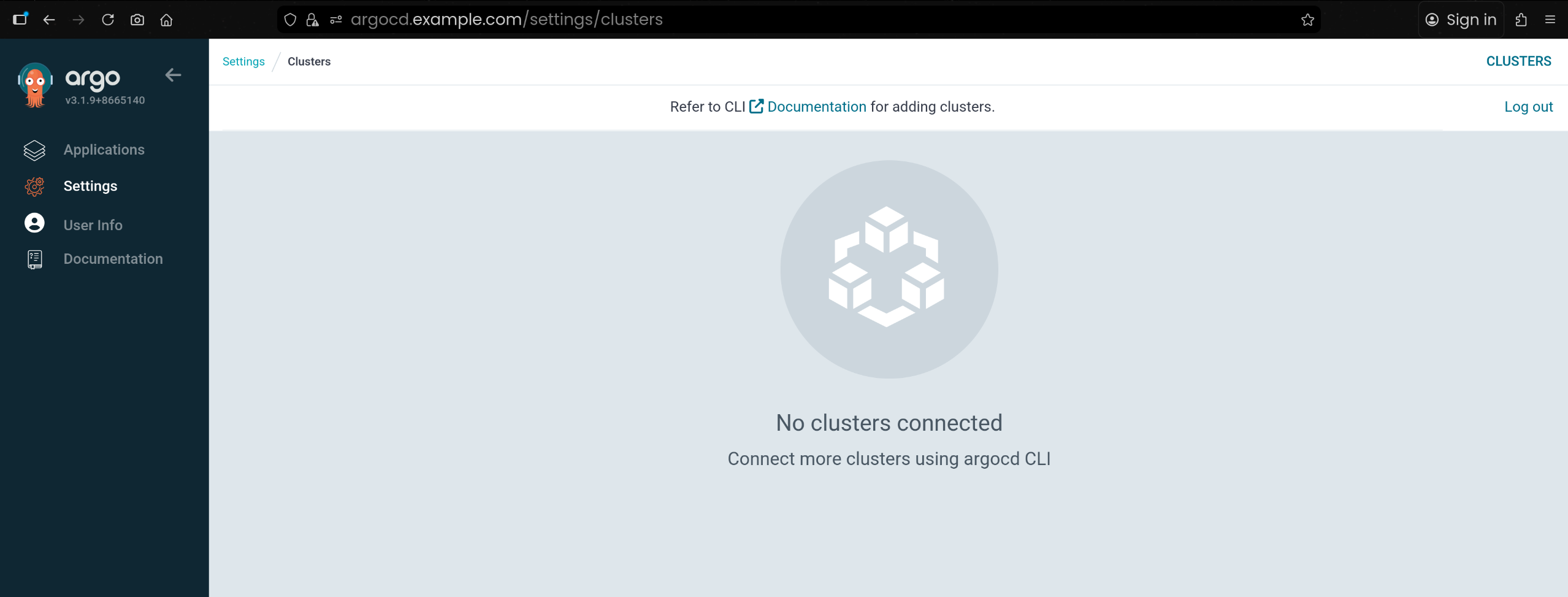

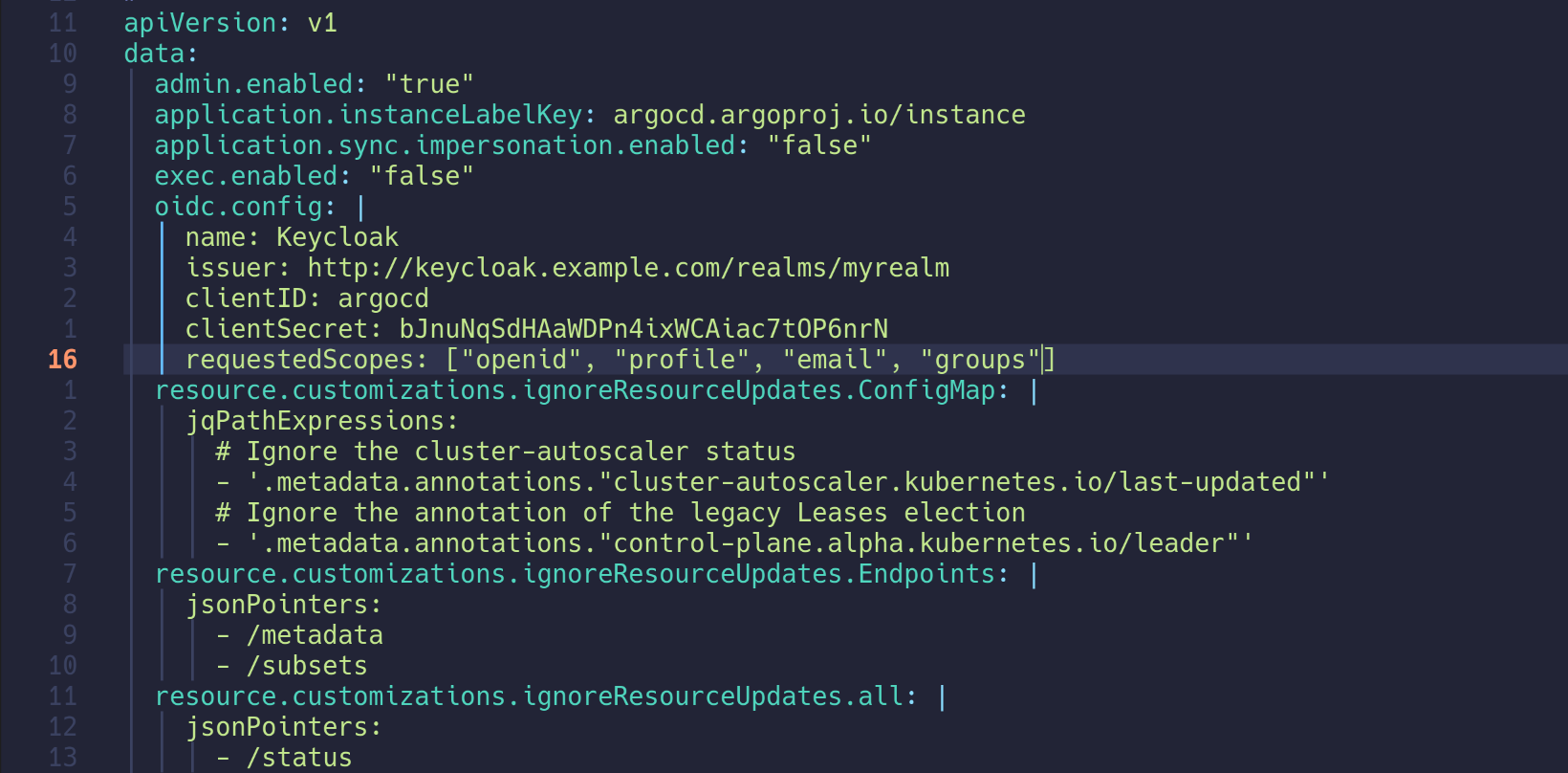

🛡️ Configuring ArgoCD OIDC

1. Store the Keycloak client secret in the Argo CD Secret

| kubectl -n argocd patch secret argocd-secret --patch='{"stringData": { "oidc.keycloak.clientSecret": "<REPLACE_WITH_CLIENT_SECRET>" }}'

kubectl -n argocd patch secret argocd-secret --patch='{"stringData": { "oidc.keycloak.clientSecret": "bJnuNqSdHAaWDPn4ixWCAiac7tOP6nrN" }}'

secret/argocd-secret patched

|

- Keycloak 에서 발급받은