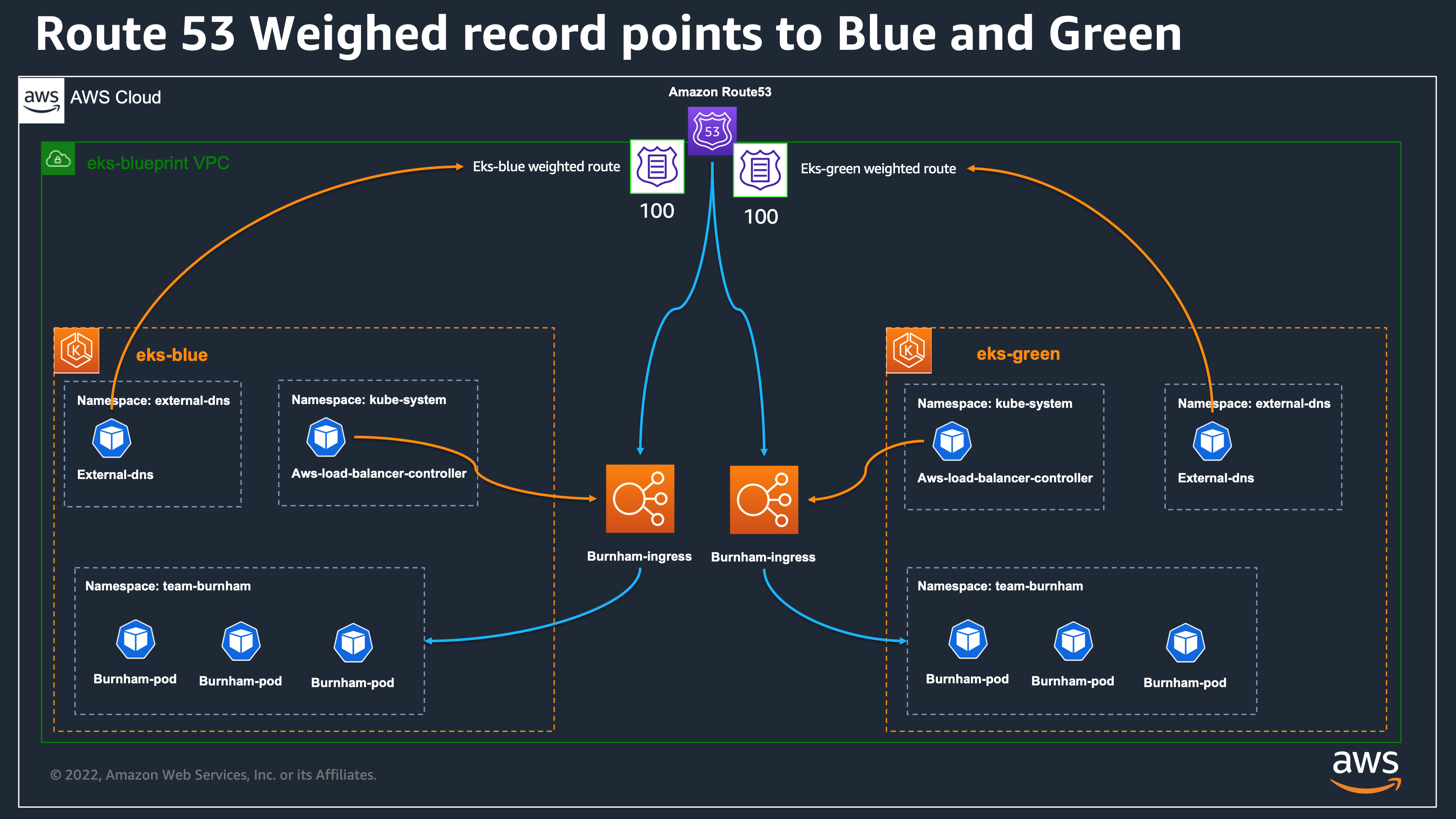

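🌐 Blue/Green Upgrade Overview

- Uses two nearly identical production environments (Blue and Green) to deploy without downtime

- Lets you roll out a new version safely while keeping the option to roll back

- EKS Blueprints manages the two clusters, or two deployment versions, efficiently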

💻 Blue/Green Upgrade Hands-on

- https://aws-ia.github.io/terraform-aws-eks-blueprints/patterns/blue-green-upgrade/

🔑 AWS Secrets Manager Secret Setup

1. Generate an SSH key for accessing the GitOps repository

- Generating the key creates ~/.ssh/id_rsa (private key) and ~/.ssh/id_rsa.pub (public key)

| ssh-keygen -t rsa -b 4096 -C "your_email@example.com"

|

2. Add the public key on the SSH and GPG keys settings page of your GitHub account

- https://github.com/settings/keys

3. Create the secret in AWS Secrets Manager

- Store the SSH private key used for GitHub repository access in AWS Secrets Manager

| aws secretsmanager create-secret \

--name github-blueprint-ssh-key \

--secret-string "$(cat ~/.ssh/id_rsa)" \

--region ap-northeast-2

|
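Because the secret has to hold the private key rather than the public one, a small guard before calling create-secret can catch a mix-up early. The following is a minimal sketch; the function name is_private_key is hypothetical and the check only inspects the first line of the file.

```shell
# Hypothetical helper: succeeds only if the file looks like a PEM/OpenSSH private key.
is_private_key() {
  local key_file="$1"
  [ -f "$key_file" ] || return 1
  # Private keys start with a "-----BEGIN ... PRIVATE KEY-----" header,
  # while public keys start with the key type, e.g. "ssh-rsa AAAA...".
  head -n 1 "$key_file" | grep -q -- '-----BEGIN .*PRIVATE KEY-----'
}

# Usage (not executed here):
#   is_private_key ~/.ssh/id_rsa && echo "looks like a private key"
```

Running the guard against ~/.ssh/id_rsa.pub should fail, which is the signal that the wrong file is about to be uploaded.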

📦 Configuring the Stacks

1. Clone the EKS Blueprints repository

| git clone https://github.com/aws-ia/terraform-aws-eks-blueprints.git

cd terraform-aws-eks-blueprints/patterns/blue-green-upgrade/

|

✅ Output

| Cloning into 'terraform-aws-eks-blueprints'...

remote: Enumerating objects: 36056, done.

remote: Counting objects: 100% (43/43), done.

remote: Compressing objects: 100% (36/36), done.

remote: Total 36056 (delta 20), reused 17 (delta 7), pack-reused 36013 (from 2)

Receiving objects: 100% (36056/36056), 38.38 MiB | 13.11 MiB/s, done.

Resolving deltas: 100% (21367/21367), done.

|

- Copy the example file (terraform.tfvars.example) to create terraform.tfvars

| cp terraform.tfvars.example terraform.tfvars

|

- Create a symbolic link to it in each environment directory (environment, eks-blue, eks-green)

| ln -s ../terraform.tfvars environment/terraform.tfvars

ln -s ../terraform.tfvars eks-blue/terraform.tfvars

ln -s ../terraform.tfvars eks-green/terraform.tfvars

|
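The three ln commands can also be written as a loop. This is a sketch only; the function name link_tfvars and its root-directory argument are assumptions introduced for illustration.

```shell
# Hypothetical helper: link the shared terraform.tfvars into each stack directory.
# $1 is the blue-green-upgrade pattern directory that holds terraform.tfvars.
link_tfvars() {
  local root="$1" dir
  for dir in environment eks-blue eks-green; do
    # -f replaces an existing link so the function can be re-run safely.
    ln -sf ../terraform.tfvars "$root/$dir/terraform.tfvars"
  done
}

# Usage (not executed here):
#   link_tfvars .
```

The link target stays relative (../terraform.tfvars), so the links keep working if the repository is moved.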

3. Edit the variables

In terraform.tfvars, adjust the following values to match your environment

| aws_region = "ap-northeast-2"

environment_name = "eks-blueprint"

hosted_zone_name = "gagajin.com"

eks_admin_role_name = "Admin" # Additional role admin in the cluster (usually the role I use in the AWS console)

# EKS Blueprint Workloads ArgoCD App of App repository

gitops_workloads_org = "git@github.com:aws-samples"

gitops_workloads_repo = "eks-blueprints-workloads"

gitops_workloads_revision = "main"

gitops_workloads_path = "envs/dev"

# Secrets Manager secret for the GitHub SSH key

aws_secret_manager_git_private_ssh_key_name = "github-blueprint-ssh-key"

|
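Before running Terraform, it can help to confirm that the variables listed above are actually set. The sketch below assumes a helper named missing_tfvars (hypothetical) and only checks that each key appears on the left side of an assignment; it does not validate the values.

```shell
# Hypothetical helper: print every expected key that is not assigned in the file.
missing_tfvars() {
  local file="$1" key
  for key in aws_region environment_name hosted_zone_name eks_admin_role_name \
             aws_secret_manager_git_private_ssh_key_name; do
    # Match "key = ..." with optional leading whitespace; commented-out lines do not match.
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file" || echo "$key"
  done
}

# Usage (not executed here):
#   missing_tfvars terraform.tfvars
```

An empty result means all five keys are present.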

🏗️ Creating the Environment Stack

1. Initialize the environment directory

| cd environment

terraform init

|

✅ Output

| Initializing the backend...

Initializing modules...

Downloading registry.terraform.io/terraform-aws-modules/acm/aws 4.5.0 for acm...

- acm in .terraform/modules/acm

Downloading registry.terraform.io/terraform-aws-modules/vpc/aws 5.19.0 for vpc...

- vpc in .terraform/modules/vpc

Initializing provider plugins...

- Finding hashicorp/aws versions matching ">= 4.40.0, >= 4.67.0, >= 5.79.0"...

- Finding hashicorp/random versions matching ">= 3.0.0"...

- Installing hashicorp/random v3.7.1...

- Installed hashicorp/random v3.7.1 (signed by HashiCorp)

- Installing hashicorp/aws v5.94.1...

- Installed hashicorp/aws v5.94.1 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

|

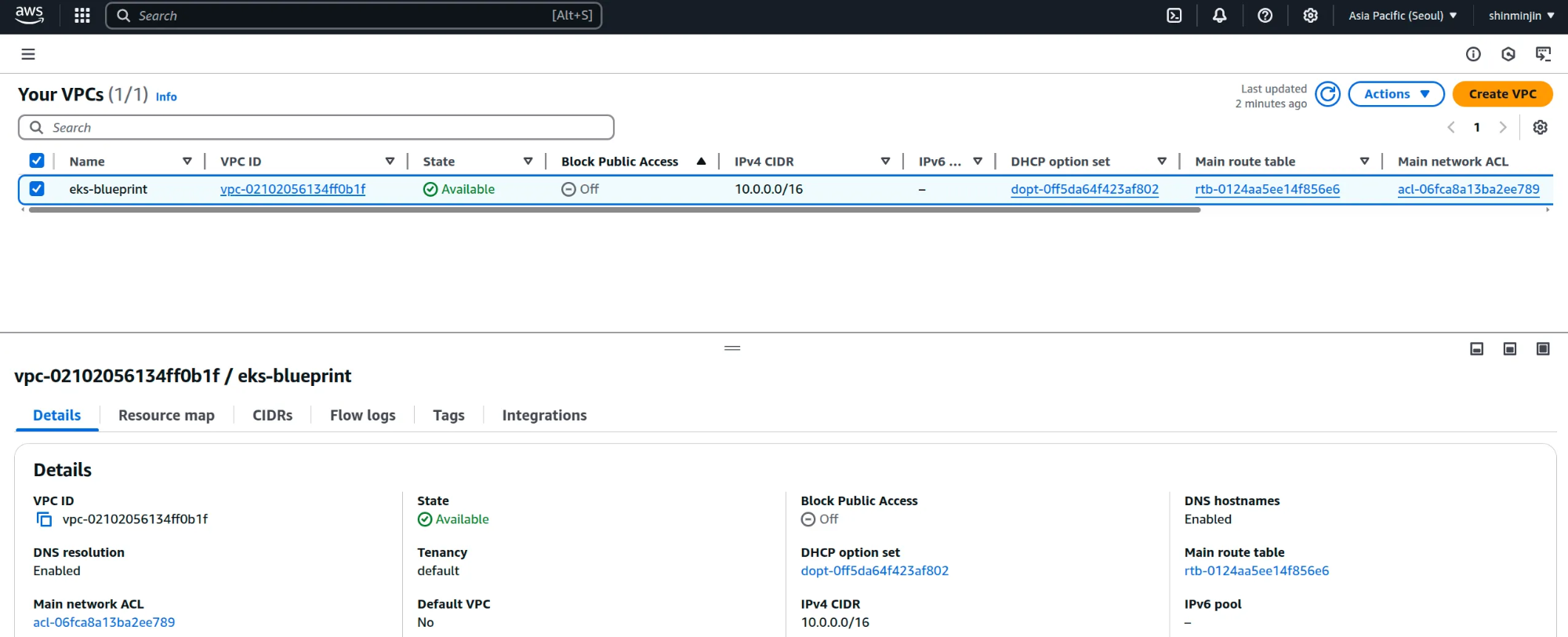

- Sets up the shared infrastructure (VPC, Route53 subdomain, certificate, etc.)

2. Create the Environment resources (terraform apply)

✅ Output

| ...

random_password.argocd: Creating...

random_password.argocd: Creation complete after 0s [id=none]

aws_secretsmanager_secret.argocd: Creating...

aws_route53_zone.sub: Creating...

module.vpc.aws_vpc.this[0]: Creating...

module.acm.aws_acm_certificate.this[0]: Creating...

aws_secretsmanager_secret.argocd: Creation complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:378102432899:secret:argocd-admin-secret.eks-blueprint-Ms4QgE]

aws_secretsmanager_secret_version.argocd: Creating...

aws_secretsmanager_secret_version.argocd: Creation complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:378102432899:secret:argocd-admin-secret.eks-blueprint-Ms4QgE|terraform-20250405073951021700000004]

module.acm.aws_acm_certificate.this[0]: Creation complete after 8s [id=arn:aws:acm:ap-northeast-2:378102432899:certificate/d5d1e891-cc95-4556-9cd9-80685627871b]

aws_route53_zone.sub: Still creating... [10s elapsed]

module.vpc.aws_vpc.this[0]: Still creating... [10s elapsed]

module.vpc.aws_vpc.this[0]: Creation complete after 11s [id=vpc-02102056134ff0b1f]

module.vpc.aws_internet_gateway.this[0]: Creating...

module.vpc.aws_default_route_table.default[0]: Creating...

module.vpc.aws_default_security_group.this[0]: Creating...

module.vpc.aws_subnet.private[2]: Creating...

module.vpc.aws_subnet.public[0]: Creating...

module.vpc.aws_subnet.private[1]: Creating...

module.vpc.aws_subnet.private[0]: Creating...

module.vpc.aws_subnet.public[2]: Creating...

module.vpc.aws_default_network_acl.this[0]: Creating...

module.vpc.aws_default_route_table.default[0]: Creation complete after 1s [id=rtb-0124aa5ee14f856e6]

module.vpc.aws_route_table.private[0]: Creating...

module.vpc.aws_internet_gateway.this[0]: Creation complete after 1s [id=igw-07f90ed8846154dc7]

module.vpc.aws_subnet.public[1]: Creating...

module.vpc.aws_subnet.private[1]: Creation complete after 1s [id=subnet-03991ff519a9ebc0f]

module.vpc.aws_route_table.public[0]: Creating...

module.vpc.aws_route_table.private[0]: Creation complete after 0s [id=rtb-0ef279f7062ca6aa2]

module.vpc.aws_eip.nat[0]: Creating...

module.vpc.aws_default_network_acl.this[0]: Creation complete after 1s [id=acl-06fca8a13ba2ee789]

module.vpc.aws_subnet.public[1]: Creation complete after 0s [id=subnet-03d52a875f66ddd8a]

module.vpc.aws_default_security_group.this[0]: Creation complete after 1s [id=sg-03a71ab8031e11e16]

module.vpc.aws_route_table.public[0]: Creation complete after 0s [id=rtb-03bd18748f49b08f3]

module.vpc.aws_route.public_internet_gateway[0]: Creating...

module.vpc.aws_eip.nat[0]: Creation complete after 1s [id=eipalloc-0c88e7961a72251f4]

module.vpc.aws_route.public_internet_gateway[0]: Creation complete after 1s [id=r-rtb-03bd18748f49b08f31080289494]

module.vpc.aws_subnet.private[0]: Creation complete after 2s [id=subnet-06420b1a16c575db4]

module.vpc.aws_subnet.public[2]: Creation complete after 3s [id=subnet-019591fc997c1a12d]

module.vpc.aws_subnet.private[2]: Creation complete after 3s [id=subnet-03c9e0bbb58ab87fc]

module.vpc.aws_route_table_association.private[2]: Creating...

module.vpc.aws_route_table_association.private[1]: Creating...

module.vpc.aws_route_table_association.private[0]: Creating...

module.vpc.aws_route_table_association.private[0]: Creation complete after 0s [id=rtbassoc-0d18e71a6075d9eb3]

module.vpc.aws_route_table_association.private[2]: Creation complete after 0s [id=rtbassoc-0700271c4d899c71a]

module.vpc.aws_route_table_association.private[1]: Creation complete after 0s [id=rtbassoc-04c1f45670e9cda31]

module.vpc.aws_subnet.public[0]: Creation complete after 3s [id=subnet-0260e5eff8a018c93]

module.vpc.aws_route_table_association.public[1]: Creating...

module.vpc.aws_route_table_association.public[0]: Creating...

module.vpc.aws_route_table_association.public[2]: Creating...

module.vpc.aws_nat_gateway.this[0]: Creating...

module.vpc.aws_route_table_association.public[0]: Creation complete after 0s [id=rtbassoc-06d2031598d7be981]

module.vpc.aws_route_table_association.public[2]: Creation complete after 0s [id=rtbassoc-018124f7b1b734e38]

module.vpc.aws_route_table_association.public[1]: Creation complete after 0s [id=rtbassoc-0065f49ae8faa8f0f]

aws_route53_zone.sub: Still creating... [20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [10s elapsed]

aws_route53_zone.sub: Still creating... [30s elapsed]

aws_route53_zone.sub: Creation complete after 33s [id=Z057908416N7Y1CDR3TL9]

aws_route53_record.ns: Creating...

module.acm.aws_route53_record.validation[0]: Creating...

module.vpc.aws_nat_gateway.this[0]: Still creating... [20s elapsed]

aws_route53_record.ns: Still creating... [10s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [10s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [30s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [20s elapsed]

aws_route53_record.ns: Still creating... [20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [40s elapsed]

aws_route53_record.ns: Still creating... [30s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [30s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [50s elapsed]

aws_route53_record.ns: Creation complete after 33s [id=Z099663315X74TRCYB7J5_eks-blueprint.gagajin.com_NS]

module.acm.aws_route53_record.validation[0]: Creation complete after 33s [id=Z057908416N7Y1CDR3TL9__0d4f0159131bcdf9bf331ac6102cb4ec.eks-blueprint.gagajin.com._CNAME]

module.acm.aws_acm_certificate_validation.this[0]: Creating...

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m0s elapsed]

module.acm.aws_acm_certificate_validation.this[0]: Still creating... [10s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m10s elapsed]

module.acm.aws_acm_certificate_validation.this[0]: Still creating... [20s elapsed]

module.acm.aws_acm_certificate_validation.this[0]: Creation complete after 24s [id=0001-01-01 00:00:00 +0000 UTC]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m30s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m40s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m50s elapsed]

module.vpc.aws_nat_gateway.this[0]: Creation complete after 1m54s [id=nat-047f4564e210bbdfc]

module.vpc.aws_route.private_nat_gateway[0]: Creating...

module.vpc.aws_route.private_nat_gateway[0]: Creation complete after 0s [id=r-rtb-0ef279f7062ca6aa21080289494]

Apply complete! Resources: 31 added, 0 changed, 0 destroyed.

Outputs:

aws_acm_certificate_status = "PENDING_VALIDATION"

aws_route53_zone = "eks-blueprint.gagajin.com"

vpc_id = "vpc-02102056134ff0b1f"

|

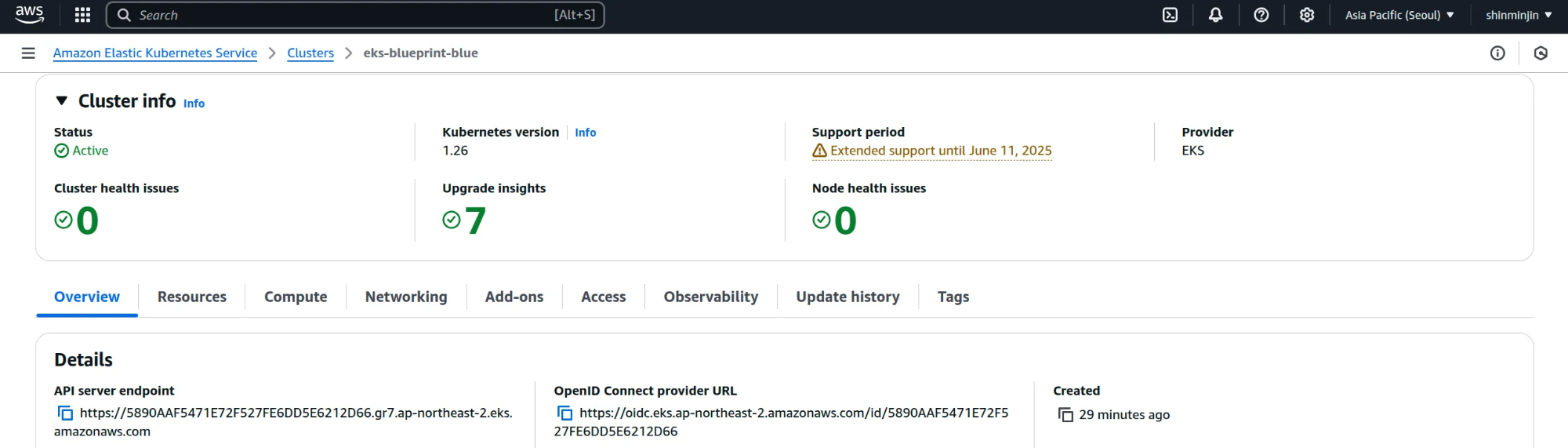

🔵 Creating the Blue Cluster

1. Initialize the Blue cluster directory

- Move to the Blue cluster directory and run terraform init

| cd ../eks-blue

terraform init

|

✅ Output

| Initializing the backend...

Initializing modules...

....

Initializing provider plugins...

- Finding hashicorp/aws versions matching ">= 3.72.0, >= 4.0.0, >= 4.36.0, >= 4.47.0, >= 4.57.0, >= 5.0.0"...

- Finding hashicorp/kubernetes versions matching ">= 2.10.0, >= 2.17.0, >= 2.20.0, >= 2.22.0, 2.22.0"...

- Finding hashicorp/helm versions matching ">= 2.9.0, >= 2.10.1"...

- Finding hashicorp/time versions matching ">= 0.9.0"...

- Finding hashicorp/tls versions matching ">= 3.0.0"...

- Finding hashicorp/cloudinit versions matching ">= 2.0.0"...

- Finding hashicorp/random versions matching ">= 3.6.0"...

- Installing hashicorp/random v3.7.1...

- Installed hashicorp/random v3.7.1 (signed by HashiCorp)

- Installing hashicorp/aws v5.94.1...

- Installed hashicorp/aws v5.94.1 (signed by HashiCorp)

- Installing hashicorp/kubernetes v2.22.0...

- Installed hashicorp/kubernetes v2.22.0 (signed by HashiCorp)

- Installing hashicorp/helm v2.17.0...

- Installed hashicorp/helm v2.17.0 (signed by HashiCorp)

- Installing hashicorp/time v0.13.0...

- Installed hashicorp/time v0.13.0 (signed by HashiCorp)

- Installing hashicorp/tls v4.0.6...

- Installed hashicorp/tls v4.0.6 (signed by HashiCorp)

- Installing hashicorp/cloudinit v2.3.6...

- Installed hashicorp/cloudinit v2.3.6 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

|

2. Error while creating the Blue cluster

✅ Output

| ...

reading IAM Role (Admin): couldn't find resource

|

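3. Fix: create the admin role

- The apply fails because the IAM role named in eks_admin_role_name (Admin) does not exist in the account, so create it

(1) Create trust-policy.json (replace the account ID 123456789012 and the user name myusername with your own; JSON does not allow comments)

| {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::123456789012:user/myusername"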

},

"Action": "sts:AssumeRole"

}

]

}

|
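To avoid hand-editing the account ID and user name, the trust policy can be generated from two arguments. This is a sketch under assumptions: the function name make_trust_policy is hypothetical, and the caller is expected to pass their own account ID and IAM user name.

```shell
# Hypothetical helper: print a trust policy that lets one IAM user assume the role.
# $1 = AWS account ID, $2 = IAM user name
make_trust_policy() {
  local account_id="$1" user_name="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::${account_id}:user/${user_name}"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Usage (not executed here):
#   make_trust_policy 123456789012 myusername > trust-policy.json
```

The output is plain JSON without comments, so it can be passed to the role creation step as file://trust-policy.json.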

(2) Create the Admin role

| aws iam create-role \

--role-name Admin \

--assume-role-policy-document file://trust-policy.json \

--region ap-northeast-2

|

(3) Attach a policy for managing the EKS cluster

| aws iam attach-role-policy \

--role-name Admin \

--policy-arn arn:aws:iam::aws:policy/AdministratorAccess \

--region ap-northeast-2

|

4. Create the Blue cluster (takes about 20 minutes)

✅ Output

| ...

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]: Still creating... [15m0s elapsed]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]: Creation complete after 15m7s [id=eks-blueprint-blue:coredns]

module.eks_cluster.kubernetes_namespace.argocd: Creating...

module.eks_cluster.kubernetes_namespace.argocd: Creation complete after 0s [id=argocd]

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]: Creating...

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]: Creating...

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]: Creation complete after 0s [id=argocd/git-addons]

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]: Creation complete after 0s [id=argocd/git-workloads]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [10s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [20s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [30s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [40s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Creation complete after 43s [id=argo-cd]

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]: Creation complete after 0s [id=argocd/eks-blueprint-blue]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]: Creation complete after 1s [id=workloads]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]: Creation complete after 1s [id=addons]

╷

│ Warning: Argument is deprecated

│

│ with module.eks_cluster.module.eks.aws_iam_role.this[0],

│ on .terraform/modules/eks_cluster.eks/main.tf line 285, in resource "aws_iam_role" "this":

│ 285: resource "aws_iam_role" "this" {

│

│ inline_policy is deprecated. Use the aws_iam_role_policy resource instead. If

│ Terraform should exclusively manage all inline policy associations (the current

│ behavior of this argument), use the aws_iam_role_policies_exclusive resource as

│ well.

╵

Apply complete! Resources: 130 added, 0 changed, 0 destroyed.

Outputs:

access_argocd = <<EOT

export KUBECONFIG="/tmp/eks-blueprint-blue"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-burnham-20250405082641683400000021",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-riker-2025040508264060520000001d",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-crystal-2025040508264060600000001e",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-frontend-2025040508264060490000001c",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-nodejs-2025040508264085510000001f",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::123456789012:role/team-platform-20250405081813449700000002"

eks_cluster_id = "eks-blueprint-blue"

|

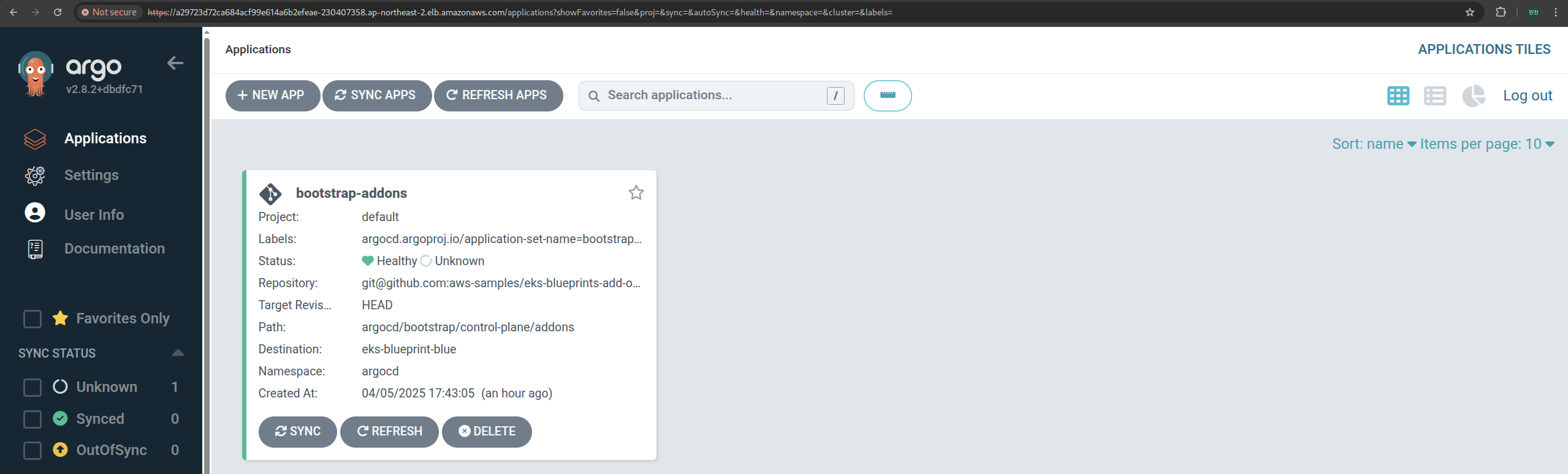

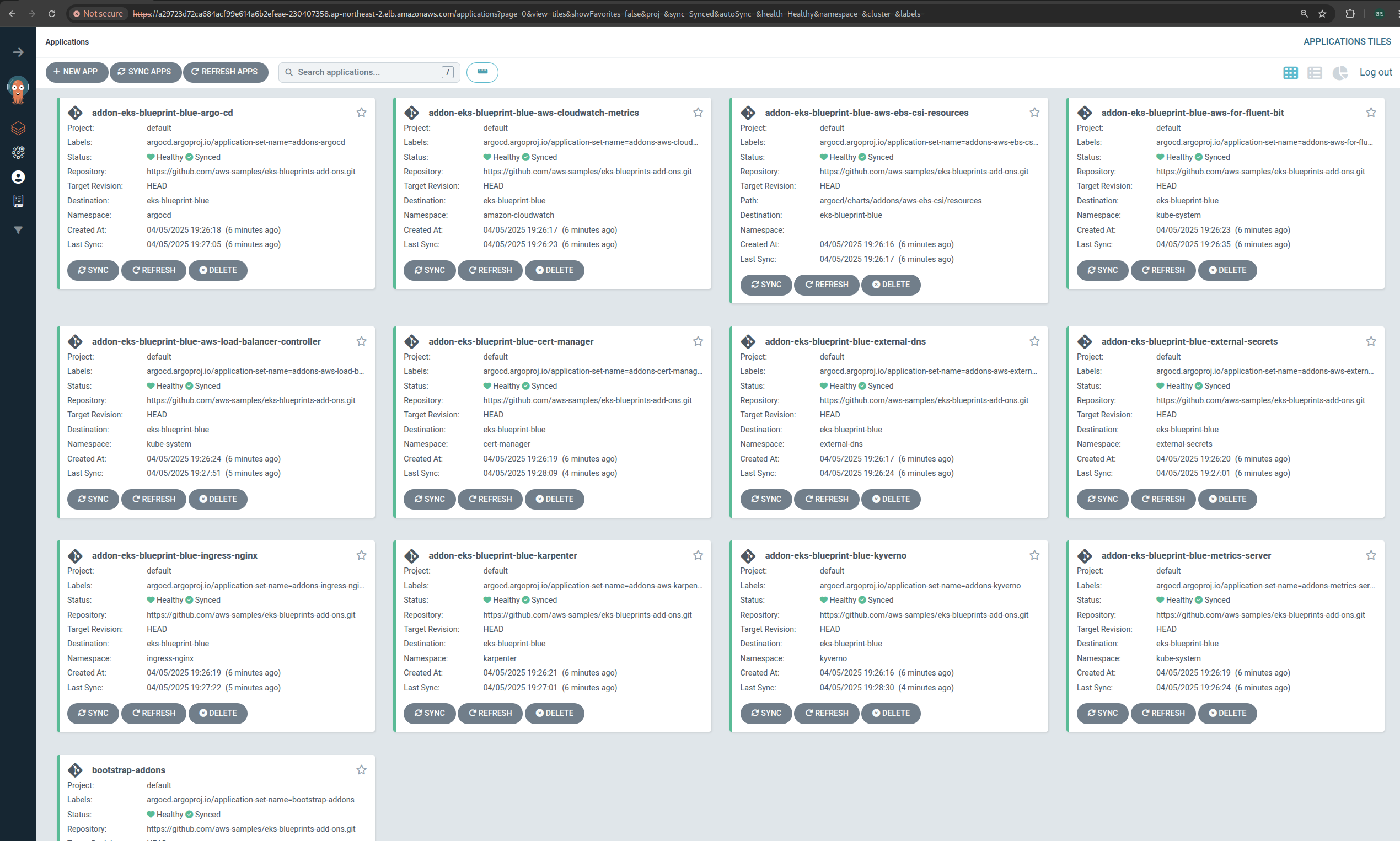

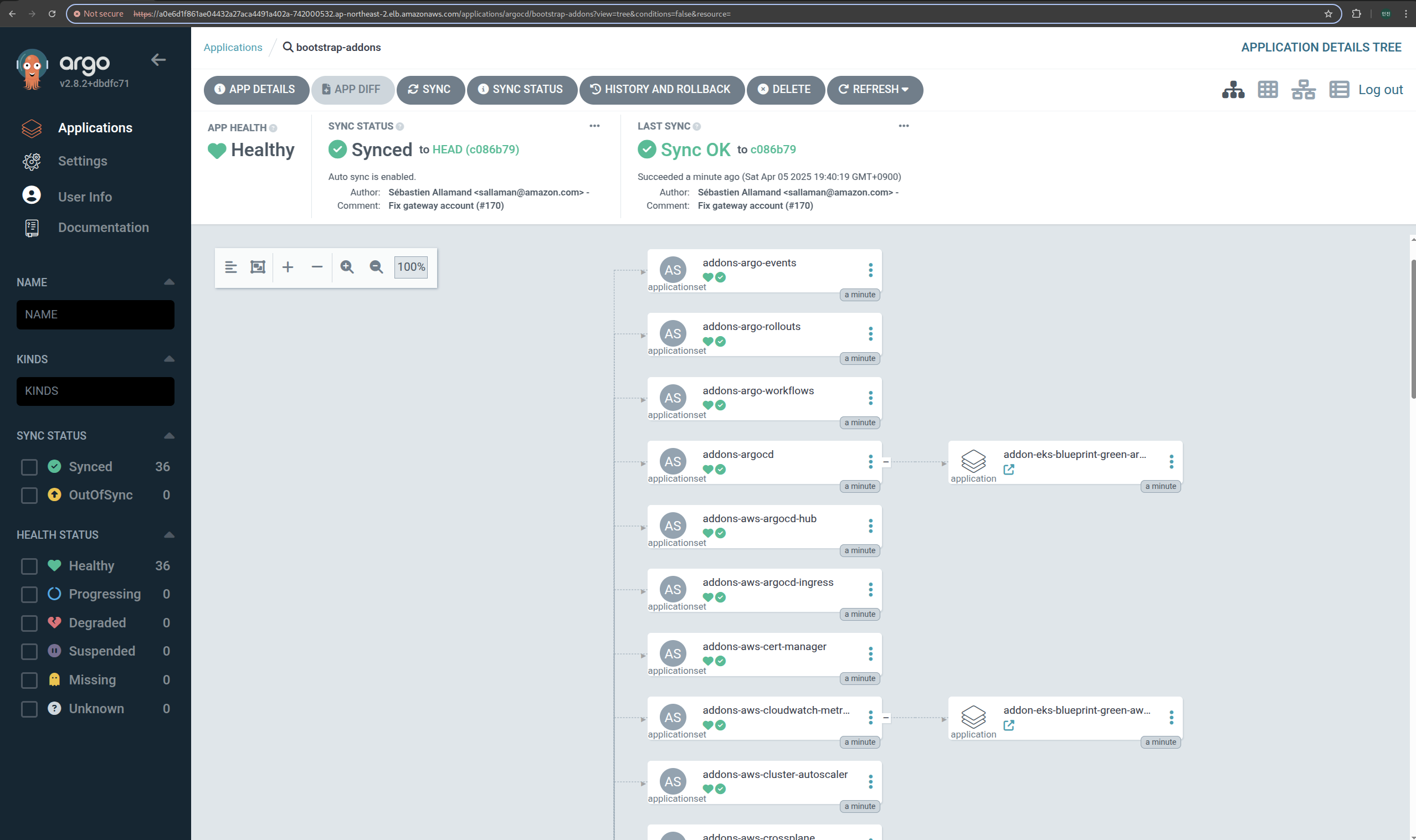

5. Access and verify ArgoCD

(1) Set KUBECONFIG and update the kubeconfig for the cluster

| export KUBECONFIG="/tmp/eks-blueprint-blue"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue

|

✅ Output

| Added new context arn:aws:eks:ap-northeast-2:123456789012:cluster/eks-blueprint-blue to /tmp/eks-blueprint-blue

|

(2) Check the ArgoCD URL, username, and password

| echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

|

✅ Output

| ArgoCD URL: https://a29723d72ca684acf99e614a6b2efeae-230407358.ap-northeast-2.elb.amazonaws.com

ArgoCD Username: admin

ArgoCD Password: q<T2ETwcZa1PCVx4

|

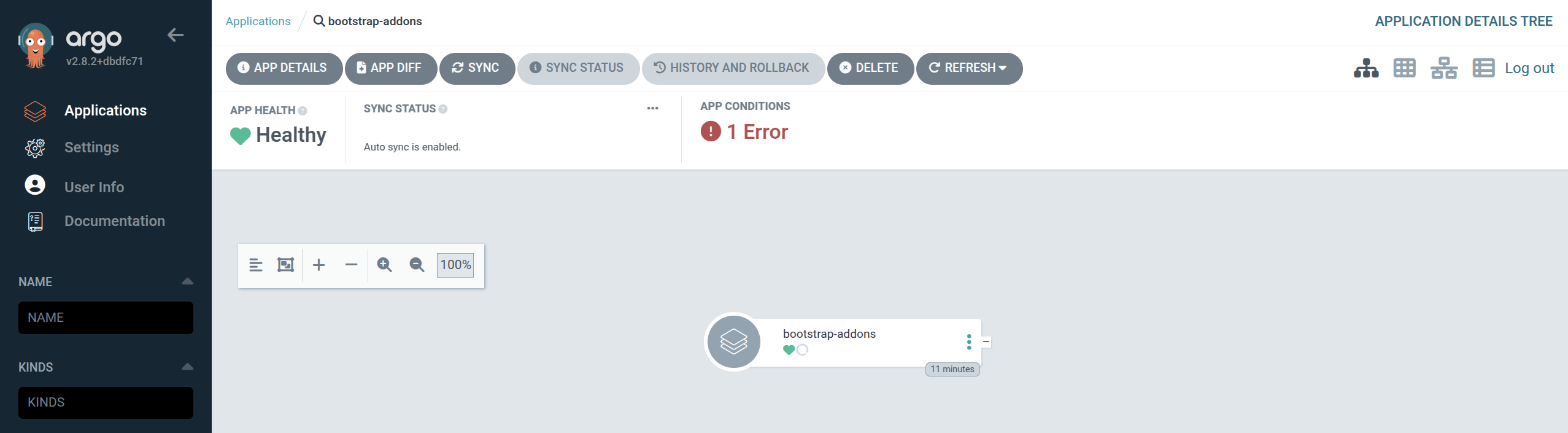

6. Fixing the repository URL error

(1) Check the error

- It appears to be caused by the SSH key configuration

- The repository credentials under Settings → Repositories are empty

| Failed to load target state: failed to generate manifest for source 1 of 1: rpc error: code = Unknown desc = ssh: no key found

|

(2) Patch the repository URL to use https

| kubectl patch secret eks-blueprint-blue -n argocd --type merge \

-p '{"metadata": {"annotations": {"addons_repo_url": "https://github.com/aws-samples/eks-blueprints-add-ons.git"}}}'

|

✅ Output

| secret/eks-blueprint-blue patched

|
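The patch above replaces an SSH-style repository URL with its HTTPS form. The conversion itself can be sketched as a small function; the name ssh_to_https is hypothetical, and the sketch only handles the git@host:path form.

```shell
# Hypothetical helper: rewrite git@host:org/repo.git as https://host/org/repo.git.
# URLs that do not start with "git@" are printed unchanged.
ssh_to_https() {
  printf '%s\n' "$1" | sed -E 's#^git@([^:]+):#https://\1/#'
}

# Usage (not executed here):
#   ssh_to_https "git@github.com:aws-samples/eks-blueprints-add-ons.git"
```

HTTPS access to a public repository needs no credentials, which is why the sync can succeed even when the SSH key secret is unusable.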

(3) Once the repository URL is corrected, the applications sync successfully

(4) Check the pods

✅ Output

| k get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

amazon-cloudwatch aws-cloudwatch-metrics-9mf62 1/1 Running 0 9m26s

amazon-cloudwatch aws-cloudwatch-metrics-f9fr4 1/1 Running 0 9m26s

amazon-cloudwatch aws-cloudwatch-metrics-zc2jq 1/1 Running 0 9m26s

argocd argo-cd-argocd-application-controller-0 1/1 Running 0 8m56s

argocd argo-cd-argocd-applicationset-controller-55c4c79d49-jb9bz 1/1 Running 0 113m

argocd argo-cd-argocd-dex-server-6c5d66ddf7-4jdtc 1/1 Running 0 113m

argocd argo-cd-argocd-notifications-controller-69fd44d464-2rxmd 1/1 Running 0 113m

argocd argo-cd-argocd-redis-5f474f9d77-6frts 1/1 Running 0 113m

argocd argo-cd-argocd-repo-server-544b5b8d66-c9w5v 1/1 Running 0 7m48s

argocd argo-cd-argocd-repo-server-544b5b8d66-77jjn 1/1 Running 0 7m58s

argocd argo-cd-argocd-repo-server-544b5b8d66-x1lpa 1/1 Running 0 7m59s

argocd argo-cd-argocd-repo-server-544b5b8d66-llmma 1/1 Running 0 8m1s

argocd argo-cd-argocd-repo-server-544b5b8d66-xckhc 1/1 Running 0 8m3s

argocd argo-cd-argocd-server-5dbd8864d4-kh24q 1/1 Running 0 9m4s

cert-manager cert-manager-5c84984c7b-5xjhk 1/1 Running 0 9m21s

cert-manager cert-manager-cainjector-67b46dfbc4-7qdxd 1/1 Running 0 9m21s

cert-manager cert-manager-webhook-557cf8b498-z9r2h 1/1 Running 0 9m21s

external-dns external-dns-7776997dbc-5vghq 1/1 Running 0 9m26s

external-secrets external-secrets-5f7fc744fb-5vfsv 1/1 Running 0 9m9s

external-secrets external-secrets-cert-controller-6ddf99cb9d-94jnx 1/1 Running 0 9m9s

external-secrets external-secrets-webhook-64449c7c97-fnf2h 1/1 Running 0 9m9s

geolocationapi geolocationapi-8a6bfb531a-a12mm 2/2 Running 0 7m50s

geolocationapi geolocationapi-8a6bfb531a-ldtka 2/2 Running 0 7m51s

geordie downstream0-4779f7bc48-klscr 1/1 Running 0 8m1s

geordie downstream1-3ee6b6fd8c-u7pf0 1/1 Running 0 8m1s

geordie frontend-34531fdbbb-dcrpa 1/1 Running 0 8m2s

geordie redis-server-76d7b647dd-28hlq 1/1 Running 0 8m2s

geordie yelb-appserver-56d6d6685b-mwzbc 1/1 Running 0 8m2s

geordie yelb-db-3dfdd5d11e-dvvka 1/1 Running 0 8m2s

geordie yelb-ui-47545895f-3ch8r 1/1 Running 0 8m2s

ingress-nginx ingress-nginx-controller-85b4dd96b4-dqvzg 1/1 Running 0 9m21s

ingress-nginx ingress-nginx-controller-85b4dd96b4-z49b7 1/1 Running 0 9m21s

ingress-nginx ingress-nginx-controller-85b4dd96b4-zgtjc 1/1 Running 0 9m22s

karpenter karpenter-5cc4fd495-hlz4g 1/1 Running 0 9m10s

karpenter karpenter-5cc4fd495-rzzlk 1/1 Running 0 9m10s

kube-system aws-for-fluent-bit-qcbx7 1/1 Running 0 9m12s

kube-system aws-for-fluent-bit-v9429 1/1 Running 0 9m12s

kube-system aws-for-fluent-bit-wq87n 1/1 Running 0 9m12s

kube-system aws-load-balancer-controller-7c68859454-sjkwq 1/1 Running 0 8m46s

kube-system aws-load-balancer-controller-7c68859454-vmhgg 1/1 Running 0 8m46s

kube-system aws-node-7fsml 2/2 Running 0 127m

kube-system aws-node-b8dtx 2/2 Running 0 127m

kube-system aws-node-lvnkf 2/2 Running 0 127m

kube-system coredns-767f9b58bd-7tll7 1/1 Running 0 128m

kube-system coredns-767f9b58bd-89bc8 1/1 Running 0 128m

kube-system kube-proxy-cf85x 1/1 Running 0 127m

kube-system kube-proxy-hnmhn 1/1 Running 0 127m

kube-system kube-proxy-msjzg 1/1 Running 0 127m

kube-system metrics-server-8794b9cdf-dhqf9 1/1 Running 0 9m23s

kyverno kyverno-admission-controller-757766c5f5-gg598 1/1 Running 0 8m37s

kyverno kyverno-background-controller-bc589cf8c-m27wl 1/1 Running 0 8m37s

kyverno kyverno-cleanup-admission-reports-29064150-jl2nb 0/1 Completed 0 5m46s

kyverno kyverno-cleanup-cluster-admission-reports-29064150-ch97g 0/1 Completed 0 5m46s

kyverno kyverno-cleanup-controller-5c9fdbbc5c-rc56n 1/1 Running 0 8m37s

kyverno kyverno-reports-controller-6cc9fd6979-qjt5g 1/1 Running 0 8m37s

team-burnham burnham-97a8b5dd0d-6ijkm 1/1 Running 0 9m2s

team-burnham burnham-97a8b5dd0d-rstuv 1/1 Running 0 9m2s

team-burnham burnham-97a8b5dd0d-uvwxy 1/1 Running 0 9m2s

team-burnham nginx-5769f5bf7c-d5s7s 1/1 Running 0 9m2s

team-riker deployment-2048-48c41356a2-k3l4m 1/1 Running 0 9m1s

team-riker deployment-2048-48c41356a2-l4m5n 1/1 Running 0 9m1s

team-riker deployment-2048-48c41356a2-m5n6o 1/1 Running 0 9m1s

team-riker guestbook-ui-6bb6d8f45d-lvf25 1/1 Running 0 9m1s

|
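With this many pods, scanning the STATUS column by eye is error-prone. The sketch below filters the listing for pods that are neither Running nor Completed; the function name not_ready_pods is hypothetical, and it assumes the default column layout of kubectl get pod -A with a single header row.

```shell
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print
# namespace/name for every pod whose STATUS is not Running or Completed.
not_ready_pods() {
  # NR > 1 skips the header row; column 4 is STATUS in the -A layout.
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 }'
}

# Usage (not executed here):
#   kubectl get pod -A | not_ready_pods
```

No output means every listed pod is in one of the two healthy states.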

(5) Check team-burnham

| kubectl get deployment -n team-burnham -l app=burnham

|

✅ Output

| NAME READY UP-TO-DATE AVAILABLE AGE

burnham 3/3 3 3 9m35s

nginx 1/1 1 1 9m35s

|

| kubectl get pods -n team-burnham -l app=burnham

|

✅ Output

| NAME READY STATUS RESTARTS AGE

burnham-97a8b5dd0d-6ijkm 1/1 Running 0 10m15s

burnham-97a8b5dd0d-rstuv 1/1 Running 0 10m15s

burnham-97a8b5dd0d-uvwxy 1/1 Running 0 10m15s

|

| kubectl logs -n team-burnham -l app=burnham

|

✅ Output

| 2025/04/05 13:21:21 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:21:31 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:21:41 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:21:51 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:01 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:11 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:21 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:31 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:41 {url: / }, cluster: eks-blueprint-blue }

2025/04/05 13:22:51 {url: / }, cluster: eks-blueprint-blue }

|

- Check CLUSTER_NAME through the Ingress URL

| URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

|

✅ Output

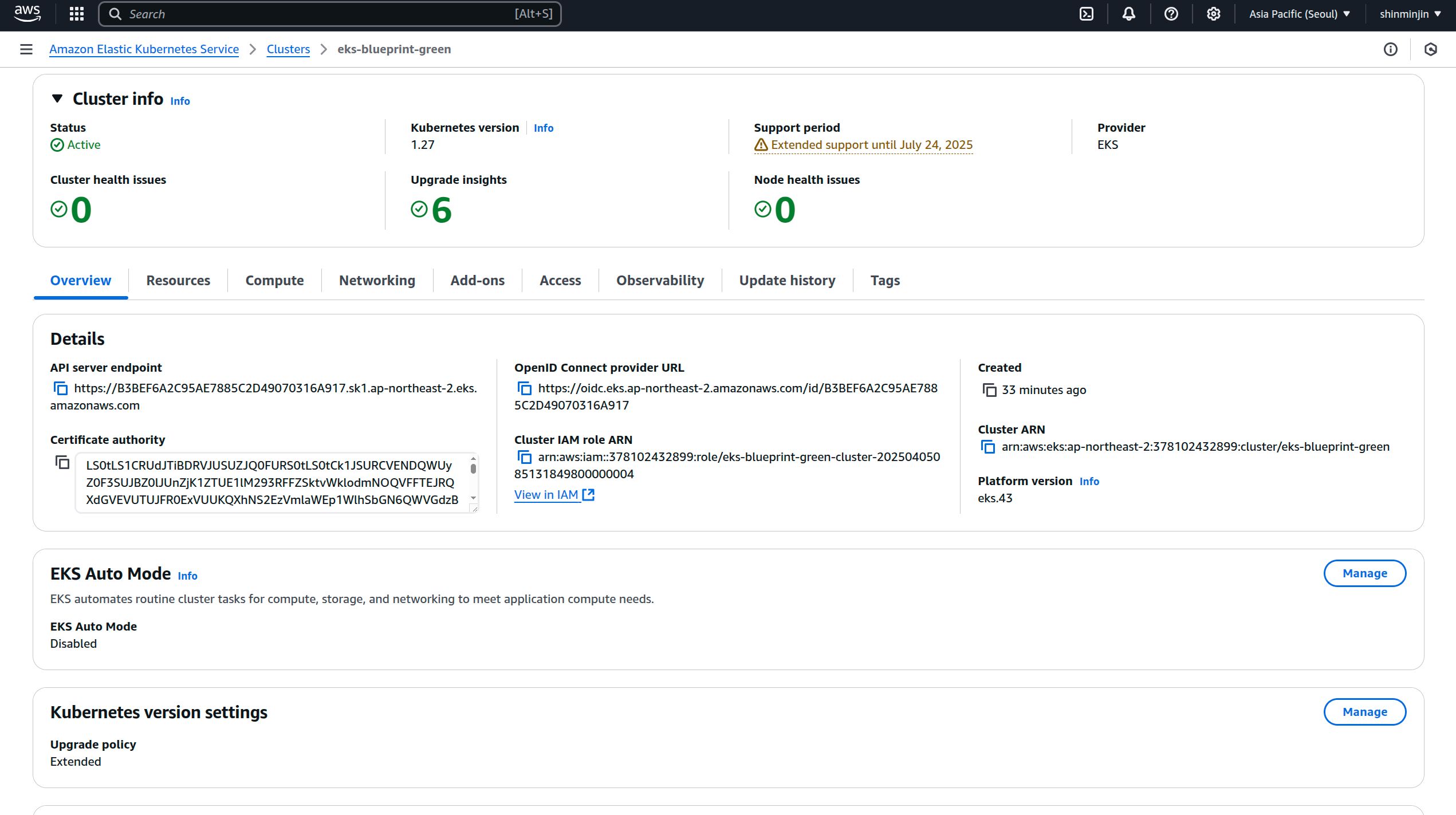
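The awk step in the command above splits each line on span tags and prints the fourth field. The sketch below runs the same filter on a sample line; the HTML in sample_html is a made-up stand-in for illustration, not the page the burnham application actually serves.

```shell
# Same filter as the curl pipeline, reading HTML from stdin instead of the network.
extract_cluster_name() {
  # The field separator is a regex: either "<span>" or "</span>".
  grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
}

# Hypothetical sample line (assumed markup, for illustration only).
sample_html='<div><span>CLUSTER_NAME</span> : <span>eks-blueprint-blue</span></div>'

# Usage:
#   printf '%s\n' "$sample_html" | extract_cluster_name
```

For the sample line the fields are "<div>", "CLUSTER_NAME", " : ", and the cluster name, so the fourth field is the value of interest. If the real markup differs, the field index would need adjusting.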

🟢 Creating the Green Cluster

1. Initialize the Green cluster directory

- Move to the Green cluster directory and run terraform init

| cd ../eks-green

terraform init

|

✅ Output

| Initializing the backend...

Initializing modules...

- eks_cluster in ../modules/eks_cluster

...

Initializing provider plugins...

- Finding hashicorp/kubernetes versions matching ">= 2.10.0, >= 2.17.0, >= 2.20.0, >= 2.22.0, 2.22.0"...

- Finding hashicorp/tls versions matching ">= 3.0.0"...

- Finding hashicorp/time versions matching ">= 0.9.0"...

- Finding hashicorp/cloudinit versions matching ">= 2.0.0"...

- Finding hashicorp/random versions matching ">= 3.6.0"...

- Finding hashicorp/helm versions matching ">= 2.9.0, >= 2.10.1"...

- Finding hashicorp/aws versions matching ">= 3.72.0, >= 4.0.0, >= 4.36.0, >= 4.47.0, >= 4.57.0, >= 5.0.0"...

- Installing hashicorp/helm v2.17.0...

- Installed hashicorp/helm v2.17.0 (signed by HashiCorp)

- Installing hashicorp/aws v5.94.1...

- Installed hashicorp/aws v5.94.1 (signed by HashiCorp)

- Installing hashicorp/kubernetes v2.22.0...

- Installed hashicorp/kubernetes v2.22.0 (signed by HashiCorp)

- Installing hashicorp/tls v4.0.6...

- Installed hashicorp/tls v4.0.6 (signed by HashiCorp)

- Installing hashicorp/time v0.13.0...

- Installed hashicorp/time v0.13.0 (signed by HashiCorp)

- Installing hashicorp/cloudinit v2.3.6...

- Installed hashicorp/cloudinit v2.3.6 (signed by HashiCorp)

- Installing hashicorp/random v3.7.1...

- Installed hashicorp/random v3.7.1 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

|

2. Create the Green cluster (takes about 20 minutes)

✅ Output

| ...

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]: Still creating... [15m0s elapsed]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]: Creation complete after 15m7s [id=eks-blueprint-green:coredns]

module.eks_cluster.kubernetes_namespace.argocd: Creating...

module.eks_cluster.kubernetes_namespace.argocd: Creation complete after 0s [id=argocd]

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]: Creating...

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]: Creating...

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]: Creation complete after 0s [id=argocd/git-workloads]

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]: Creation complete after 0s [id=argocd/git-addons]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [10s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [20s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [30s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Still creating... [40s elapsed]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]: Creation complete after 44s [id=argo-cd]

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]: Creation complete after 0s [id=argocd/eks-blueprint-green]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]: Creating...

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]: Creation complete after 1s [id=addons]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]: Creation complete after 1s [id=workloads]

╷

│ Warning: Argument is deprecated

│

│ with module.eks_cluster.module.eks.aws_iam_role.this[0],

│ on .terraform/modules/eks_cluster.eks/main.tf line 285, in resource "aws_iam_role" "this":

│ 285: resource "aws_iam_role" "this" {

│

│ inline_policy is deprecated. Use the aws_iam_role_policy

│ resource instead. If Terraform should exclusively manage

│ all inline policy associations (the current behavior of

│ this argument), use the aws_iam_role_policies_exclusive

│ resource as well.

╵

Apply complete! Resources: 130 added, 0 changed, 0 destroyed.

Outputs:

access_argocd = <<EOT

export KUBECONFIG="/tmp/eks-blueprint-green"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-burnham-2025040508595947340000001e",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-riker-2025040508595943610000001d",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-crystal-20250405090000253500000020",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-frontend-20250405090000763200000021",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-ecsdemo-nodejs-2025040508595963760000001f",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::123456789012:role/team-platform-20250405085131848600000002"

eks_cluster_id = "eks-blueprint-green"

|

3. Access and verify ArgoCD

(1) Set KUBECONFIG and update the kubeconfig entry for the cluster

| export KUBECONFIG="/tmp/eks-blueprint-green"
aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green
|

✅ Output

| Added new context arn:aws:eks:ap-northeast-2:378102432899:cluster/eks-blueprint-green to /tmp/eks-blueprint-green
|

(2) Retrieve the ArgoCD URL, username, and password

| echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"
echo "ArgoCD Username: admin"
echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"
|

✅ Output

| ArgoCD URL: https://a0e6d1f861ae04432a27aca4491a402a-742000532.ap-northeast-2.elb.amazonaws.com
ArgoCD Username: admin
ArgoCD Password: q<T2ETwcZa1PCVx4
|

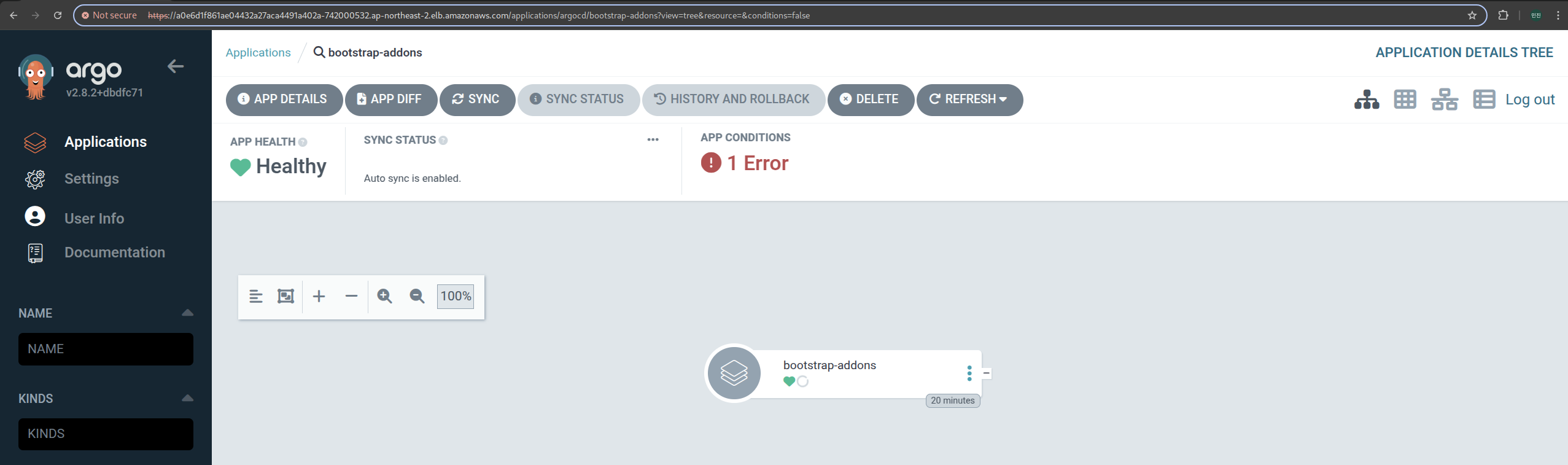

4. Resolve the repository URL error

(1) The same error seen on the Blue cluster occurs

| Failed to load target state: failed to generate manifest for source 1 of 1: rpc error: code = Unknown desc = ssh: no key found
|

(2) Patch the cluster secret so the add-ons repository URL uses HTTPS

| kubectl patch secret eks-blueprint-green -n argocd --type merge \
  -p '{"metadata": {"annotations": {"addons_repo_url": "https://github.com/aws-samples/eks-blueprints-add-ons.git"}}}'
|

✅ Output

| secret/eks-blueprint-green patched
|

(3) Once the repository URL is corrected, the applications sync successfully
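The patch above swaps the repository URL for its HTTPS form, which does not require an SSH key for a public repository. As a minimal sketch of that conversion, assuming a GitHub SSH remote of the form `git@github.com:ORG/REPO.git` (the helper name `ssh_to_https` is hypothetical and not part of EKS Blueprints):

```shell
# Hypothetical helper: convert a GitHub SSH remote (git@github.com:ORG/REPO.git)
# into its HTTPS equivalent. Any other input is passed through unchanged.
ssh_to_https() {
  case "$1" in
    git@github.com:*) printf 'https://github.com/%s\n' "${1#git@github.com:}" ;;
    *)                printf '%s\n' "$1" ;;
  esac
}

# Example: prints the HTTPS URL used in the patch above.
ssh_to_https "git@github.com:aws-samples/eks-blueprints-add-ons.git"
```

The resulting string could then be used as the value of the `addons_repo_url` annotation in the `kubectl patch` command.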

🚀 Blue -> Green Migration

- The DNS record weights are currently set to 100 for eks-blue and 0 for eks-green, so all traffic is routed to eks-blue

1. Check the eks-blue DNS weights

| cat eks-blue/main.tf | grep route
|

✅ Output

| argocd_route53_weight = "100"
route53_weight = "100"
ecsfrontend_route53_weight = "100"
|

2. Check the eks-green DNS weights

| cat eks-green/main.tf | grep route
|

✅ Output

| argocd_route53_weight = "0" # We control with theses parameters how we send traffic to the workloads in the new cluster
route53_weight = "0"
ecsfrontend_route53_weight = "0"
|
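With Route 53 weighted routing, each record receives a share of DNS responses equal to its weight divided by the sum of the weights in the record set. The following is a minimal sketch of that arithmetic for two records; the helper name `traffic_share` is hypothetical, and the result is the expected share only, since DNS caching can skew what a single client observes.

```shell
# Hypothetical helper: expected percentage of DNS responses for a record,
# given its own weight ($1) and the peer record's weight ($2).
# Uses integer arithmetic, so results are rounded down.
traffic_share() {
  total=$(( $1 + $2 ))
  if [ "$total" -eq 0 ]; then
    echo "undefined: both weights are 0" >&2
    return 1
  fi
  echo $(( 100 * $1 / total ))
}

# Weights shown above: eks-blue = 100, eks-green = 0.
echo "blue:  $(traffic_share 100 0)%"
echo "green: $(traffic_share 0 100)%"
```

With equal weights on both sides, for example `traffic_share 50 50`, the same calculation yields 50 for each cluster.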

3. Terraform configuration files

(1) The eks_cluster module configuration in main.tf

| module "eks_cluster" {
  source = "../modules/eks_cluster"

  aws_region            = var.aws_region
  service_name          = "green"
  cluster_version       = "1.27"
  argocd_route53_weight = "0"
  route53_weight        = var.route53_weight
}
|

(2) The route53_weight variable in variables.tf

| variable "route53_weight" {
  type        = string
  description = "Route53 weight for DNS records"
  default     = "50"
}
|

4. Check the DNS routing result

| curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
|

✅ Output

| eks-blueprint-blue
eks-blueprint-blue
eks-blueprint-blue
eks-blueprint-green
eks-blueprint-green
eks-blueprint-green
...
|

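Both the eks-blue and eks-green cluster names appear in the responses, which indicates that traffic is being distributed across the two clusters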
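To see the split at a glance rather than reading the names one by one, the responses can be tallied per cluster. This is a minimal sketch; the helper name `tally_clusters` is hypothetical, and the commented loop assumes `$URL` is already set to the workload endpoint used above.

```shell
# Hypothetical helper: count how many times each cluster name appears on stdin
# and print "name count" pairs, sorted by name.
tally_clusters() {
  sort | uniq -c | awk '{print $2, $1}'
}

# Offline example with sample names in place of live responses.
printf '%s\n' eks-blueprint-blue eks-blueprint-blue eks-blueprint-green | tally_clusters

# Against the live endpoint (assumes $URL is set), something like:
#   for i in $(seq 1 20); do
#     curl -s "$URL" | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
#   done | tally_clusters
```

A roughly even count for the two clusters would be consistent with equal weights, though DNS caching on the client can make short samples uneven.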